Streaming HTML: Client-Side Rendering Made Easy with Any Framework

You’ve built a beautiful frontend. It works. It’s snappy on localhost. But when you ship it? Users wait. They click. Nothing happens. And by the time your app wakes up, they’re already gone.

Today, we’re breaking down what actually causes slow frontend load times, and how you can fix them with one powerful technique: streaming HTML from the server. We’ll walk through a real example using Node.js and plain JavaScript, explain the problem with Client-Side Rendering, and show you how to get serious performance gains with just a few lines of code.

Why Frontend Load Times Still Suck

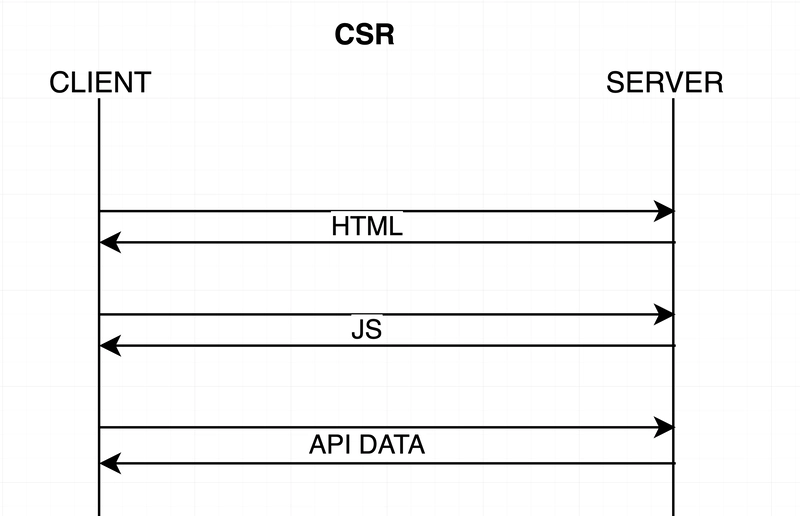

Here’s the deal. Most modern apps use JavaScript-heavy frameworks like React, Vue, or Angular. These are awesome for building complex UIs—but there’s a catch: by default, they use Client-Side Rendering (CSR).

That means:

- When someone visits your site, they don’t get a full HTML page.

- They get an empty HTML shell and a big fat JavaScript bundle.

- Only after the JS is downloaded and run does your app make API calls, build the UI, and finally show the user something.

Now imagine your user’s on a slow connection. Or using a low-end device. Or your API server is just having a bad day.

That whole process? Takes forever. Feels clunky. They bounce.

And here’s the kicker: it’s not their fault. It’s not their phone. It’s not even your React code. It’s your architecture.

Let me show you exactly what’s going wrong.

The Problem: Death by Waterfall

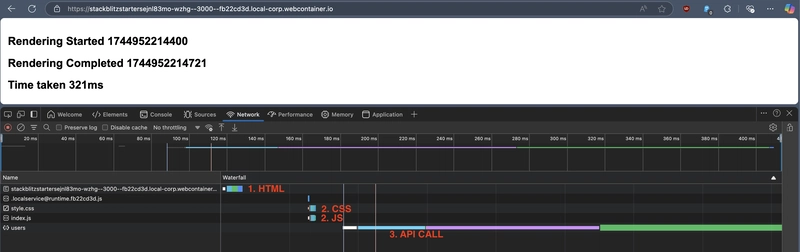

Let’s take a common example—a simple admin dashboard.

When someone visits the page, this is what happens behind the scenes:

- The browser requests the HTML from the server.

- The server sends a minimal HTML file—just a shell, really.

- The browser sees script tags and starts downloading the JS bundle.

- After the JS is downloaded, it starts running.

- Then, the JS makes an API call to get data—maybe feature flags or user info.

- Only after that data is fetched does JS kick in and render the page.

Every single one of these steps depends on the one before it. It’s a waterfall of delays.

One slow step = a slow experience.
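To make the waterfall concrete, here is a rough sketch of a typical CSR entry point. The `/api/feature-flags` endpoint and the `fetchJson`/`render` callbacks are hypothetical stand-ins for whatever your app uses. The point is the ordering: none of this code can start until the bundle has downloaded and run, and the API call can't start until this code does.

```javascript
// Sketch of a typical CSR entry point (hypothetical endpoint and callbacks).
// This function only runs after the bundle has downloaded and executed,
// and the API request only starts once it runs: each step waits on the last.
async function bootstrapDashboard({ fetchJson, render }) {
  // The bundle is finally running: the best we can show is a spinner.
  render({ status: 'loading' });

  // Only now does the data request begin.
  const flags = await fetchJson('/api/feature-flags');

  // First useful content, at the very end of the chain.
  render({ status: 'ready', flags });
}
```

Call it with your real data-fetching and rendering functions; the order of events is always spinner, then request, then content.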

Now let’s say your JavaScript bundle is big. Maybe it’s 2 MB. On a typical mobile connection, downloading, parsing, and running that can take a few seconds. Now add an API call that takes another second or two. That’s three to five seconds before your user sees anything useful.

And in web performance terms? That’s death.
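A quick back-of-the-envelope version of that math, assuming an 8 Mbps connection, decimal megabytes, and a 1.5-second API call (illustrative numbers, not measurements). It counts download time only; latency, parsing, and execution would all add to it.

```javascript
// Back-of-the-envelope model of the CSR waterfall (illustrative numbers only).
// Download time = size in megabits / bandwidth; ignores latency, parsing, execution.
function downloadSeconds(megabytes, megabitsPerSecond) {
  return (megabytes * 8) / megabitsPerSecond;
}

// In CSR the API call can't start until the bundle is in, so the times add up.
function waterfallSeconds({ bundleMB, bandwidthMbps, apiSeconds }) {
  return downloadSeconds(bundleMB, bandwidthMbps) + apiSeconds;
}

console.log(downloadSeconds(2, 8)); // 2
console.log(waterfallSeconds({ bundleMB: 2, bandwidthMbps: 8, apiSeconds: 1.5 })); // 3.5
```

That’s 3.5 seconds before anything useful shows up, and that’s before parsing and execution are even counted.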

Enter: Server-Side Rendering (SSR)

One obvious solution is Server-Side Rendering.

SSR flips the script. Instead of sending an empty HTML shell, your server renders the full HTML content and sends it down. That means the user sees something as soon as the HTML arrives, even before the JS bundle loads.

That’s a huge win for perceived performance.

Note: Turning a big CSR app into an SSR setup isn’t a quick flip of a switch. It’s a serious refactor. You’ll have to rethink how you handle state, manage server-side logic, and deal with tricky hydration bugs. If you’re starting fresh, go for it. But if you’re working with an existing app? Be ready: it’s a heavy lift.
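For comparison, here is a minimal SSR sketch: the server builds the finished markup as a string before responding. `renderDashboardPage`, the `/bundle.js` path, and the list markup are hypothetical; the `name` and `email` fields match what the JSONPlaceholder `/users` endpoint returns.

```javascript
// Minimal SSR sketch (hypothetical names): the server returns finished markup,
// so the browser can paint the list before any JavaScript has loaded.

// Escape text before putting it into HTML, so data can't inject markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;')
    .replace(/'/g, '&#39;');
}

function renderDashboardPage(employees) {
  const items = employees
    .map((e) => `<li>${escapeHtml(e.name)} (${escapeHtml(e.email)})</li>`)
    .join('');
  return `<!DOCTYPE html>
<html>
  <head><title>Dashboard</title></head>
  <body>
    <ul id="employees">${items}</ul>
    <!-- The bundle still loads afterwards to make the page interactive -->
    <script src="/bundle.js" defer></script>
  </body>
</html>`;
}
```

With Express, this could be wired up as `app.get('/ssr', async (req, res) => res.send(renderDashboardPage(await getEmployee())))`. Note that in this sketch the server still waits for the API call before it can send a single byte.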

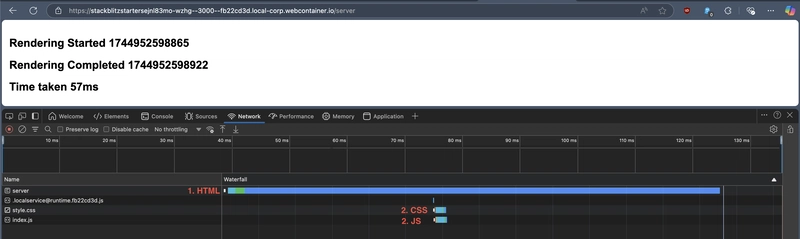

The Solution: Streaming HTML (CSR + STREAM)

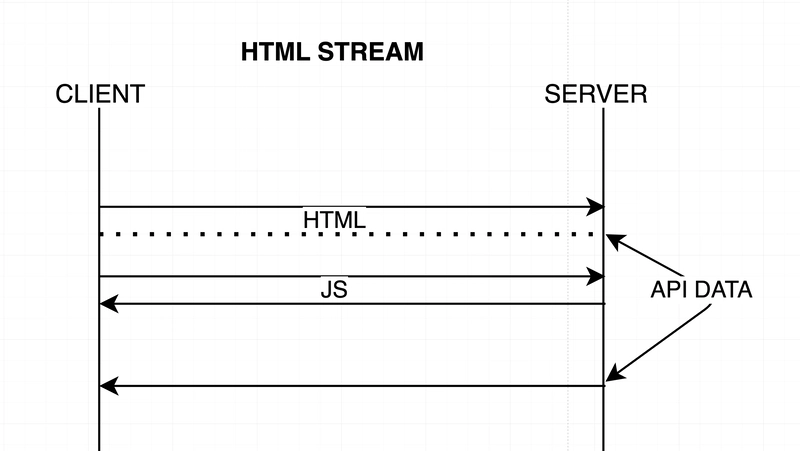

With HTML streaming, we don’t wait for everything to be ready before sending a response. Instead, we start streaming chunks of HTML to the browser as soon as we have them.

Let’s break this down with an example:

- The user visits your site.

- Your server immediately sends the basic HTML skeleton, with headers, styles, maybe even a loading spinner.

- While the browser’s busy parsing that first chunk of HTML, the server makes the API call in the background.

- As soon as that data comes in, the server slips it into the next chunk of HTML and streams the rest of the page down.

- Meanwhile, the browser is already downloading the JavaScript bundle in parallel.

- By the time your JS is ready, it can read the data already in the HTML and render the page.
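On the client, the bundle only needs to check whether the data already arrived with the HTML. A sketch, assuming the server inlined the data as a global named `serverEmployees`; `loadInitialEmployees` is a hypothetical helper, and the fallback fetch uses the same JSONPlaceholder URL the server calls.

```javascript
// Client-side sketch (hypothetical names). Assumes the streamed HTML may contain
// an inline script that sets `serverEmployees` on the global object.
const EMPLOYEES_URL = 'https://jsonplaceholder.typicode.com/users';

async function loadInitialEmployees(globalObj = globalThis, fetchFn = globalThis.fetch) {
  // Fast path: the server already inlined the data, so no extra round trip.
  if (Array.isArray(globalObj.serverEmployees)) {
    return globalObj.serverEmployees;
  }

  // Fallback: the data wasn't inlined, so fetch it the classic CSR way.
  const response = await fetchFn(EMPLOYEES_URL);
  if (!response.ok) {
    throw new Error(`Request failed with status ${response.status}`);
  }
  return response.json();
}
```

Keeping the fallback means the page still works if the server’s API call fails and the data never gets inlined; you just lose the head start.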

You’re parallelising what used to be sequential. It’s like cooking dinner while doing laundry instead of one after the other. Time saved. Experience better.
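The same idea as a rough timing model. It assumes fixed, independent durations for sending the shell, loading the bundle, and the API call, and it ignores the time needed to transfer the inlined data; the phase numbers below are made up for illustration.

```javascript
// Rough model: milliseconds until the JS bundle has both its code and its data.
// Assumes fixed, independent phase durations (illustrative only).
function timeToDataMs({ shellMs, bundleMs, apiMs }, strategy) {
  if (strategy === 'csr') {
    // Shell, then bundle, then the API call the bundle triggers: strictly sequential.
    return shellMs + bundleMs + apiMs;
  }
  if (strategy === 'stream') {
    // The server starts the API call when the request arrives, while the browser
    // parses the shell and fetches the bundle. Whichever finishes last wins.
    return Math.max(shellMs + bundleMs, apiMs);
  }
  throw new Error(`Unknown strategy: ${strategy}`);
}

const phases = { shellMs: 200, bundleMs: 2000, apiMs: 1500 };
console.log(timeToDataMs(phases, 'csr'));    // 3700
console.log(timeToDataMs(phases, 'stream')); // 2200
```

In this model the API call disappears from the critical path entirely whenever it finishes before the bundle does.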

Let’s look at some actual code.

A Real-World Example Using Express

Here’s a real Node.js Express server that streams HTML.

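```javascript
const express = require('express');
const fs = require('fs');

const app = express();
const PORT = process.env.PORT || 3000;

// Serve static files from the public directory
app.use(express.static('public'));

// Fetch the employee list from the demo API
const getEmployee = async () => {
  const response = await fetch('https://jsonplaceholder.typicode.com/users');
  const data = await response.json();
  return data;
};

const [START_HTML, END_HTML] = fs.readFileSync('./public/index.html', 'utf8').split('');

// Route for the home page
app.get('/server', async (req, res) => {
  // Start the API call before sending anything, so it runs while the shell streams
  let api = getEmployee();
  // First chunk: the browser can start parsing right away
  res.write(START_HTML);
  try {
    const employees = await api;
    // Inline the data, then send the rest of the page
    res.write(
      `serverEmployees = ${JSON.stringify(employees)};console.log('SERVER =>', serverEmployees);
${END_HTML}`
    );
  } catch (error) {
    console…
```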
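Here is a self-contained variation on the same pattern that you can test without a running server. It is a sketch, not the author’s exact code. It assumes `index.html` contains a placeholder comment `<!-- SERVER_DATA -->` (a hypothetical name) where the data should go, with the bundle’s `<script defer>` tag placed before that point so the browser starts downloading it with the first chunk. The data is wrapped in a `<script>` tag so the browser executes it, and `defer` means the bundle runs only after the whole document, including that inline script, has been parsed. `splitTemplate`, `serializeForScript`, and `createStreamingHandler` are hypothetical helpers.

```javascript
// Streaming sketch (hypothetical names throughout).
// Assumption: the HTML template contains this placeholder where the data goes.
const DATA_MARKER = '<!-- SERVER_DATA -->';

// Split the template into the part sent immediately and the part sent last.
function splitTemplate(html, marker = DATA_MARKER) {
  const index = html.indexOf(marker);
  if (index === -1) {
    throw new Error(`Placeholder ${marker} not found in template`);
  }
  return [html.slice(0, index), html.slice(index + marker.length)];
}

// Serialize data for an inline <script>; escaping "<" stops a "</script>"
// inside the data from closing the tag early.
function serializeForScript(value) {
  return JSON.stringify(value).replace(/</g, '\\u003c');
}

// Returns a (req, res) handler usable with Express or Node's http module.
function createStreamingHandler(templateHtml, loadData) {
  const [startHtml, endHtml] = splitTemplate(templateHtml);

  return async function streamPage(req, res) {
    // Start the API call first so it overlaps with sending the shell.
    const dataPromise = loadData();

    res.setHeader('Content-Type', 'text/html; charset=utf-8');
    res.write(startHtml); // First chunk: the browser starts parsing and fetching JS.

    try {
      const data = await dataPromise;
      res.write(`<script>window.serverEmployees = ${serializeForScript(data)};</script>`);
    } catch (error) {
      // Send the page anyway; the client can fall back to fetching the data itself.
      console.error('Could not load data for streaming:', error);
    }

    res.end(endHtml); // Last chunk: close the document.
  };
}
```

To wire it into the Express app, something like `app.get('/server', createStreamingHandler(fs.readFileSync('./public/index.html', 'utf8'), getEmployee));` should work, since Express responses are Node `http.ServerResponse` objects with `setHeader`, `write`, and `end`. Because no `Content-Length` is set, Node sends the chunks with chunked transfer encoding as they are written.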
