SPO600: Project Stage III - Enhancing the Clone-Pruning Analysis Pass

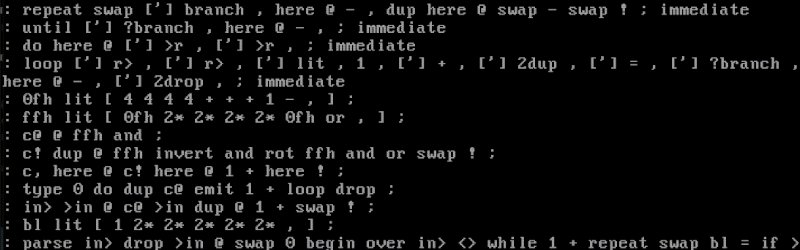

Table of Contents Introduction Stage III Requirements Implementation Approach Implementation Details Data Structure Changes Function Tracking Logic Analysis Algorithm Complete Implementation Testing and Results Test Case Design x86_64 Results aarch64 Results Comparison Between Architectures Capabilities and Limitations How to Reproduce My Results Final Reflections Conclusion Introduction Welcome to the third and final stage of my GCC Clone-Pruning Analysis Pass project! In this post, I'll describe how I extended my Stage II implementation to handle multiple cloned functions in a single program and tested it across both x86_64 and aarch64 architectures. If you didn't read my previous post about Stage II, I'd recommend checking it out first, since this builds directly on that work. In Stage II, I built a basic GCC pass that could analyze a single cloned function and determine whether its variants were substantially similar enough to be pruned. Stage III Requirements For Stage III, we needed to: Extend our code to handle multiple cloned functions in a single program Create test cases with at least two cloned functions per program Verify functionality on both x86_64 and aarch64 architectures Test scenarios with mixed PRUNE and NOPRUNE recommendations Clean up any remaining issues from Stage II The biggest challenge was removing the assumption from Stage II that "there is only one cloned function in a program" and ensuring our implementation works correctly across architectures. Implementation Approach After reviewing my Stage II code, I identified several limitations that needed to be addressed: The static variables used to track function information limited us to a single function with two variants The simple comparison based on block and statement counts could be improved The state management needed enhancement to handle multiple functions My approach for Stage III focused on: Replacing the static variables with a more sophisticated data structure to track multiple functions Enhancing the comparison algorithm Making sure the implementation works consistently across architectures Creating comprehensive test cases for both architectures Implementation Details Data Structure Changes The core of my improvement was replacing the simple static variables from Stage II: static std::string previous_function_name = ""; static size_t previous_block_total = 0; static size_t previous_statement_total = 0; With a map to store information about all encountered functions: struct FunctionVariant { std::string full_name; // Complete function name with variant size_t block_count; // Number of basic blocks size_t statement_count; // Number of GIMPLE statements }; static std::map function_variants; This data structure allows us to track multiple variants of multiple functions simultaneously, and keep all their relevant information organized by base function name. Function Tracking Logic First of all, I needed to improve my base function name extraction to handle different variant suffixes: std::string get_base_function_name(function *fun) { struct cgraph_node *node = cgraph_node::get(fun->decl); std::string fname = (node != nullptr) ? std::string(node->name()) : std::string(function_name(fun)); // Handle resolver functions size_t pos = fname.find(".resolver"); if (pos != std::string::npos) { return fname.substr(0, pos); } // Handle regular variants pos = fname.find('.'); if (pos != std::string::npos) { return fname.substr(0, pos); } return fname; } I also added a function to find the default variant among all variants of a function: FunctionVariant find_default_variant(const std::vector &variants) { for (size_t i = 0; i

Table of Contents

- Introduction

- Stage III Requirements

- Implementation Approach

-

Implementation Details

- Data Structure Changes

- Function Tracking Logic

- Analysis Algorithm

- Complete Implementation

-

Testing and Results

- Test Case Design

- x86_64 Results

- aarch64 Results

- Comparison Between Architectures

- Capabilities and Limitations

- How to Reproduce My Results

- Final Reflections

- Conclusion

Introduction

Welcome to the third and final stage of my GCC Clone-Pruning Analysis Pass project! In this post, I'll describe how I extended my Stage II implementation to handle multiple cloned functions in a single program and tested it across both x86_64 and aarch64 architectures.

If you didn't read my previous post about Stage II, I'd recommend checking it out first, since this builds directly on that work. In Stage II, I built a basic GCC pass that could analyze a single cloned function and determine whether its variants were substantially similar enough to be pruned.

Stage III Requirements

For Stage III, we needed to:

- Extend our code to handle multiple cloned functions in a single program

- Create test cases with at least two cloned functions per program

- Verify functionality on both x86_64 and aarch64 architectures

- Test scenarios with mixed PRUNE and NOPRUNE recommendations

- Clean up any remaining issues from Stage II

The biggest challenge was removing the assumption from Stage II that "there is only one cloned function in a program" and ensuring our implementation works correctly across architectures.

Implementation Approach

After reviewing my Stage II code, I identified several limitations that needed to be addressed:

- The static variables used to track function information limited us to a single function with two variants

- The simple comparison based on block and statement counts could be improved

- The state management needed enhancement to handle multiple functions

My approach for Stage III focused on:

- Replacing the static variables with a more sophisticated data structure to track multiple functions

- Enhancing the comparison algorithm

- Making sure the implementation works consistently across architectures

- Creating comprehensive test cases for both architectures

Implementation Details

Data Structure Changes

The core of my improvement was replacing the simple static variables from Stage II:

static std::string previous_function_name = "";

static size_t previous_block_total = 0;

static size_t previous_statement_total = 0;

With a map to store information about all encountered functions:

struct FunctionVariant {

std::string full_name; // Complete function name with variant

size_t block_count; // Number of basic blocks

size_t statement_count; // Number of GIMPLE statements

};

static std::map<std::string, std::vector<FunctionVariant> > function_variants;

This data structure allows us to track multiple variants of multiple functions simultaneously, and keep all their relevant information organized by base function name.

Function Tracking Logic

First of all, I needed to improve my base function name extraction to handle different variant suffixes:

std::string get_base_function_name(function *fun) {

struct cgraph_node *node = cgraph_node::get(fun->decl);

std::string fname = (node != nullptr) ? std::string(node->name())

: std::string(function_name(fun));

// Handle resolver functions

size_t pos = fname.find(".resolver");

if (pos != std::string::npos) {

return fname.substr(0, pos);

}

// Handle regular variants

pos = fname.find('.');

if (pos != std::string::npos) {

return fname.substr(0, pos);

}

return fname;

}

I also added a function to find the default variant among all variants of a function:

FunctionVariant find_default_variant(const std::vector<FunctionVariant> &variants) {

for (size_t i = 0; i < variants.size(); i++) {

if (variants[i].full_name.find(".default") != std::string::npos) {

return variants[i];

}

}

for (size_t i = 0; i < variants.size(); i++) {

if (variants[i].full_name.find('.') == std::string::npos) {

return variants[i];

}

}

return variants[0];

}

Analysis Algorithm

Here's the core logic for processing a function in the execute method:

unsigned int execute(function *fun) {

FILE *out = (dump_file != nullptr) ? dump_file : stderr;

struct cgraph_node *node = cgraph_node::get(fun->decl);

std::string full_fname = (node != nullptr)

? std::string(node->name())

: std::string(function_name(fun));

print_frame_header(out, full_fname);

if (is_resolver_function(full_fname)) {

print_frame_footer(out, "ANALYSIS FINISHED (resolver function)");

return 0;

}

size_t bb_count = 0;

size_t gimple_count = 0;

basic_block bb;

FOR_EACH_BB_FN(bb, fun) {

bb_count++;

for (gimple_stmt_iterator gsi = gsi_start_bb(bb);

!gsi_end_p(gsi);

gsi_next(&gsi))

{

gimple_count++;

}

}

std::string base_name = get_base_function_name(fun);

FunctionVariant current_variant = {full_fname, bb_count, gimple_count};

function_variants[base_name].push_back(current_variant);

if (function_variants[base_name].size() > 1) {

analyze_function_variants(out, base_name, function_variants[base_name]);

} else {

print_frame_footer(out, "First variant of this function - storing for comparison");

}

return 0;

}

And here's the function that analyzes variants to determine if they should be pruned:

void analyze_function_variants(FILE *out, const std::string &base_name,

const std::vector<FunctionVariant> &variants) {

if (variants.size() <= 1) {

return;

}

FunctionVariant default_variant = find_default_variant(variants);

for (size_t i = 0; i < variants.size(); i++) {

const FunctionVariant &variant = variants[i];

if (variant.full_name == default_variant.full_name) {

continue;

}

bool should_prune = (variant.block_count == default_variant.block_count &&

variant.statement_count == default_variant.statement_count);

if (should_prune) {

fprintf(out, "PRUNE: %s\n", base_name.c_str());

} else {

fprintf(out, "NOPRUNE: %s\n", base_name.c_str());

}

fprintf(out, "CLONE FOUND: %s\n", base_name.c_str());

fprintf(out, "CURRENT: %s\n", variant.full_name.c_str());

std::string border(60, '*');

fprintf(out, "%s\n", border.c_str());

fprintf(out, "* End of Diagnostic for Clone Pair\n");

fprintf(out, "%s\n", border.c_str());

}

}

The key improvements are:

- We track all variants of all functions

- We process functions as they're encountered

- When we have multiple variants of a function, we analyze them immediately

- We identify the default variant to use as a baseline for comparison

Complete Implementation

For the complete implementation, check my GitHub repository:

tree-amullagaliev.cc

Testing and Results

Test Case Design

To thoroughly test the implementation, I created a complex test file with six different functions, each with different optimization characteristics:

-

simple_calculation: Basic scalar operations -

vector_multiply: Vector operations -

matrix_transpose: Matrix operations -

count_bits: Bit counting -

count_char: String processing -

classify_number: A branch-heavy function

For x86_64, I used these target attributes:

__attribute__((target_clones("default", "arch=x86-64-v3")))

int simple_calculation(int a, int b, int c) {

// function body

}

__attribute__((target_clones("default", "avx2")))

void vector_multiply(float *result, const float *a, const float *b, int size) {

// function body

}

__attribute__((target_clones("default", "avx2")))

void matrix_transpose(float *dst, const float *src, int rows, int cols) {

// function body

}

__attribute__((target_clones("default", "popcnt")))

int count_bits(uint64_t value) {

// function body

}

__attribute__((target_clones("default", "sse4.1")))

int count_char(const char *str, char c) {

// function body

}

__attribute__((target_clones("default", "arch=x86-64-v3")))

int classify_number(int x) {

// function body

}

For aarch64, I used different attributes appropriate for that architecture:

__attribute__((target_clones("default", "simd")))

int simple_calculation(int a, int b, int c) {

// same function body

}

__attribute__((target_clones("default", "sve")))

void vector_multiply(float *result, const float *a, const float *b, int size) {

// same function body

}

// etc.

The full test files can be found here:

x86_64 Results

On x86_64, the results were:

====== Summary of PRUNE/NOPRUNE decisions ======

NOPRUNE: vector_multiply

PRUNE: classify_number

PRUNE: count_bits

PRUNE: count_char

PRUNE: matrix_transpose

PRUNE: simple_calculation

Most functions were identified for pruning, with only vector_multiply showing structural differences with AVX2 optimization. I was surprised to see that matrix_transpose was marked for pruning despite also using AVX2! This suggests that not all vector operations benefit equally from AVX2 instructions.

aarch64 Results

On aarch64, the results were different:

====== Summary of PRUNE/NOPRUNE decisions ======

NOPRUNE: matrix_transpose

NOPRUNE: vector_multiply

PRUNE: classify_number

PRUNE: count_bits

PRUNE: count_char

PRUNE: simple_calculation

Here, both vector_multiply AND matrix_transpose were marked as NOPRUNE. This was a fascinating finding!

Comparison Between Architectures

The most interesting observation was how the same function (matrix_transpose) was treated differently on each architecture:

- On x86_64 with AVX2: PRUNE recommendation

- On aarch64 with SVE: NOPRUNE recommendation

This shows that the SVE instructions on aarch64 changed the function structure more significantly than AVX2 did on x86_64, resulting in different optimization outcomes. I found this really intriguing since both are vector instruction sets, but they impact code structure differently. This highlights the importance of architecture-specific optimization.

Capabilities and Limitations

Capabilities

- Multiple Function Support: Successfully handles any number of cloned functions in a program

- Cross-Architecture Compatibility: Works on both x86_64 and aarch64

- Mixed Decision Support: Can recommend PRUNE for some functions and NOPRUNE for others

- Detailed Output: Provides clear diagnostic information for each clone pair

Limitations

- Simple Comparison Metric: Still relies on basic block and statement counts, which may not capture all structural differences

- Architecture-Specific Test Cases: Requires different test files for each architecture due to different valid target attributes

How to Reproduce My Results

To replicate my work:

- First, create or modify the pass file:

cd ~/git/gcc/gcc

vi tree-amullagaliev.cc # Copy the implementation code from GitHub

- Rebuild GCC:

cd ~/gcc-build-001/

make -j$(nproc)

- Create test files:

mkdir ~/stage3

cd ~/stage3

# Copy complex-clones-test.c and Makefile from GitHub

- Test the implementation:

make

make run-test

make show-results

Here's the Makefile:

CC = ~/gcc-build-001/gcc/xgcc

CFLAGS = -B ~/gcc-build-001/gcc/ -g -O3 -ftree-vectorize -fdump-tree-amullagaliev

all: complex-test

complex-test: complex-clones-test.c

$(CC) $(CFLAGS) complex-clones-test.c -o complex-test

run-test: complex-test

./complex-test

@echo "Test completed. Check the dump files for analysis results."

show-results:

@echo "====== Analysis Results ======"

@grep -A 3 -B 1 "PRUNE\\|NOPRUNE" complex-test-complex-clones-test.c.*.amullagaliev || echo "No results found"

@echo ""

@echo "====== Summary of PRUNE/NOPRUNE decisions ======"

@grep "PRUNE:" complex-test-complex-clones-test.c.*.amullagaliev | sort | uniq

@grep "NOPRUNE:" complex-test-complex-clones-test.c.*.amullagaliev | sort | uniq

clean:

rm -f complex-test *.o *.amullagaliev*

.PHONY: all run-test show-results clean

The full source code and test files are available in my GitHub repository for easy access and replication:

SPO600 Project Stage III

Final Reflections

Wow, working on this project has been an incredible learning experience! I've gained a much deeper understanding of:

- GCC internals and how passes are implemented

- Function Multi-Versioning and how it works across architectures

- Cross-architecture development challenges

- GIMPLE representation and analysis techniques

The most challenging part of Stage III was understanding how to properly track and analyze multiple function variants. I initially tried to use a finalize method to process all variants at the end, but this approach had issues. The solution of processing variants as they're encountered worked much better.

I was particularly surprised by the difference in optimization behavior between x86_64 and aarch64, especially for matrix operations. This highlighted how architecture-specific optimizations can lead to very different code structures, even for the same source code.

The most frustrating part was dealing with architecture-specific target attributes. I spent a lot of time figuring out which attributes were valid on aarch64 vs. x86_64. It was a bit of trial and error until I found combinations that worked on both platforms.

Conclusion

I've successfully extended my GCC clone-pruning analysis pass to handle multiple functions and test it across architectures. The implementation now meets all the requirements for Stage III.

This whole project, from Stage I through III, has been a fascinating journey into compiler development. I've gained skills that I never expected to acquire and deepened my understanding of how compilers work at a fundamental level.

I want to express my sincere thanks to Professor Chris Tyler for his incredible guidance throughout this project. His clear explanations of GCC internals and compiler theory made the complex world of compilers much more approachable. Without his lectures and support, navigating the intricate structures of GCC would have been much more challenging. The skills I've gained in this course will be valuable throughout my career in software development.

This project marks the end of my SPO600 journey, but the knowledge and experience I've gained will stay with me for years to come.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)