Retrieval-Augmented Generation in 2025: Solving LLMs' Biggest Challenges

Large Language Models (LLMs) have transformed how we interact with AI, but they've always had a critical Achilles' heel: the tendency to sound confident while being fundamentally unreliable. Enter Retrieval-Augmented Generation (RAG), an approach that grounds an LLM's fluent output in information retrieved from external sources.

The LLM Reliability Problem

Imagine an AI that sounds incredibly persuasive but occasionally invents facts out of thin air. That's been the core issue with traditional LLMs. They're brilliant conversationalists with one major flaw: hallucinations. These aren't just minor inaccuracies—they're completely fabricated "facts" that can have serious consequences in professional settings.

How RAG Changes the Game

Retrieval-Augmented Generation gives an LLM access to information beyond what it memorized during training. Instead of relying solely on their training data, RAG-powered systems can:

- Dynamically retrieve relevant information from external knowledge bases

- Ground responses in verifiable, up-to-date sources

- Provide transparency by citing where information comes from

- Adapt to specific domains without extensive retraining
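
Citing sources only helps if the citations are checkable. One simple safeguard, sketched below under assumptions, is to number each retrieved passage in the prompt and then verify that every citation marker in the model's answer points at a passage that was actually supplied. The `[n]` marker convention and the helper names `format_context` and `check_citations` are hypothetical choices for this example, not part of any particular RAG framework.

```python
import re


def format_context(passages: list[str]) -> str:
    """Number each retrieved passage so the model can cite it as [1], [2], ..."""
    return "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))


def check_citations(answer: str, num_passages: int) -> dict:
    """Report which [n] markers in an answer are valid and which point nowhere.

    Assumes the hypothetical [n] convention produced by format_context.
    """
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    valid = {n for n in cited if 1 <= n <= num_passages}
    return {
        "cited": sorted(cited),
        "invalid": sorted(cited - valid),  # citations with no matching passage
        "has_citation": bool(valid),
    }


if __name__ == "__main__":
    passages = [
        "RAG retrieves documents at query time.",
        "Retrieved text is added to the prompt.",
    ]
    print(format_context(passages))
    answer = "It looks up documents [1] and adds them to the prompt [2][5]."
    print(check_citations(answer, len(passages)))
    # {'cited': [1, 2, 5], 'invalid': [5], 'has_citation': True}
```

Note that a valid citation number only shows the answer refers to a real passage; it does not prove the passage supports the claim, so this check complements rather than replaces human or automated review.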

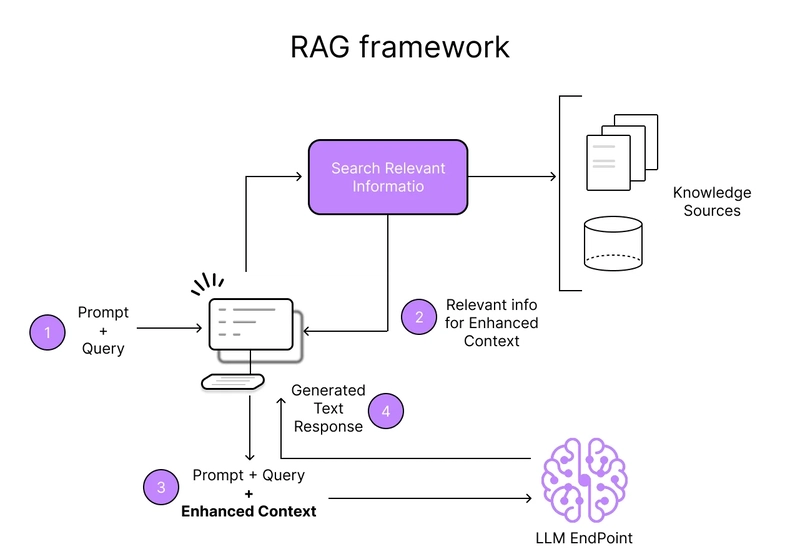

The RAG Workflow in Action

- Query Processing: Understand the core information need

- Retrieval: Search through external knowledge sources

- Contextualization: Combine retrieved information with the original query

- Generation: Produce a response grounded in the retrieved context, with references to its sources
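
The four steps above can be sketched end to end in a few lines of Python. This is a minimal illustration rather than a production design: the toy `DOCUMENTS` list, the bag-of-words cosine retriever (a stand-in for the embedding model and vector store a real system would more likely use), and the `generate` stub are all hypothetical placeholders assumed for this example.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base of (source_id, text) pairs.
DOCUMENTS = [
    ("doc-1", "RAG retrieves passages from an external knowledge base at query time."),
    ("doc-2", "Hallucinations are fluent but fabricated statements produced by a language model."),
    ("doc-3", "Serverless platforms run code on demand without managing servers."),
]


def tokenize(text: str) -> list[str]:
    """Query processing: lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs, k: int = 2):
    """Retrieval: rank documents by similarity to the query and keep the top k with any overlap."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), sid, text) for sid, text in docs]
    scored.sort(key=lambda x: x[0], reverse=True)
    return [(sid, text) for score, sid, text in scored[:k] if score > 0]


def build_prompt(query: str, passages) -> str:
    """Contextualization: combine the retrieved passages with the original query."""
    context = "\n".join(f"[{sid}] {text}" for sid, text in passages)
    return (
        "Answer using only the context below and cite sources by id.\n"
        f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"
    )


def generate(prompt: str) -> str:
    """Generation: hypothetical placeholder for a real LLM call."""
    return f"<LLM output for prompt of length {len(prompt)}>"


def rag_answer(query: str) -> str:
    """Run all four steps for a single query."""
    passages = retrieve(query, DOCUMENTS)
    return generate(build_prompt(query, passages))
```

Because `generate` is only a stub, `rag_answer` returns a placeholder string; swapping in a call to an actual model client is the one step this sketch deliberately leaves open. The `score > 0` filter drops documents with no word overlap, so a question about hallucinations in a language model retrieves only `doc-2`.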

Real-World Impact

Organizations implementing RAG report notable improvements:

- Accuracy Boost: a reported 20-30% reduction in hallucinations

- Up-to-Date Knowledge: Answer from documents newer than the model's training data cutoff

- Domain Expertise: Rapid adaptation to specialized fields by indexing domain documents

- Cost Efficiency: More economical than continuous model retraining

Beyond Current Limitations

While RAG isn't a perfect solution, it represents a significant leap forward. The most exciting developments include:

- Multi-modal RAG (integrating text, images, audio)

- Adaptive retrieval strategies

- Multi-agent RAG architectures

- Self-verifying retrieval mechanisms
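
The list above names research directions rather than specific algorithms, so any code here is only one possible interpretation. As a hedged illustration of adaptive retrieval, the sketch below decides per query how many passages to keep instead of always taking a fixed top-k: it discards passages below an absolute relevance threshold, stops once scores fall well below the best match, and returns nothing when no passage clears the bar. The `Scored` type, the assumption that scores lie in [0, 1], and the default values of `threshold`, `max_k`, and `drop_ratio` are illustrative choices, not taken from any particular system.

```python
from dataclasses import dataclass


@dataclass
class Scored:
    source_id: str
    score: float  # relevance score from any retriever; assumed to lie in [0, 1]


def adaptive_select(candidates: list[Scored], threshold: float = 0.3,
                    max_k: int = 5, drop_ratio: float = 0.5) -> list[Scored]:
    """Pick a variable number of passages instead of a fixed top-k.

    - keep only candidates scoring at least `threshold`
    - stop once a score falls below `drop_ratio` times the best score
    - return an empty list if nothing qualifies (answer without context or abstain)
    """
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    if not ranked or ranked[0].score < threshold:
        return []
    best = ranked[0].score
    selected = []
    for c in ranked[:max_k]:
        if c.score < threshold or c.score < drop_ratio * best:
            break  # remaining candidates are sorted lower, so none can qualify
        selected.append(c)
    return selected
```

An empty result gives the application a signal to answer without retrieved context or to say it does not know, rather than padding the prompt with weakly related text. The thresholds would need tuning against whatever retriever is actually in use, since score scales differ between retrieval methods.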

The Deployment Challenge

Implementing a sophisticated RAG system isn't just about algorithms—it's about infrastructure. This is where platforms like Genezio become crucial, offering serverless environments specifically designed for AI applications that can handle the complex computational needs of retrieval-augmented systems.

Looking Ahead: The Future of Intelligent Systems

RAG is more than a technical improvement—it's a fundamental reimagining of how AI systems can interact with information. We're moving towards collaborative intelligence that combines machine efficiency with human-like reliability.

Dive Deeper into the RAG Revolution

This overview barely scratches the surface of retrieval-augmented generation's potential. For a comprehensive deep dive into RAG, its implementation challenges, and future directions, check out our full guide at and discover how RAG is reshaping the AI landscape today!
