Remote State Storage in AWS Using Terraform


This document serves as a personal guide to remote state storage in Terraform and how to use S3 and DynamoDB to accomplish it.

Why Remote State? The Problem with Local State

Terraform tracks the resources it manages in a state file (terraform.tfstate). By default, it stores this file locally, which creates problems such as:

Collaboration Issues – If multiple engineers work on the same infrastructure, each with their own local copy of the state, their changes can conflict or overwrite one another.

State Loss – Accidentally deleting terraform.tfstate means Terraform loses track of the infrastructure it created.

Security Risks – State files can contain secrets such as passwords and access keys in plain text, so keeping them on a local machine is unsafe.

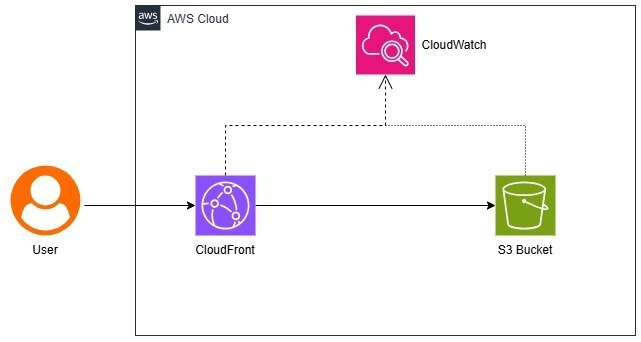

The solution? Storing Terraform state remotely in AWS using S3 (for storage) and DynamoDB (for locking).

Setting Up Remote State Storage in AWS

1. Prerequisites

Before starting, ensure you have:

- An AWS account (the Free Tier is enough).

- AWS CLI installed and configured with your credentials (aws configure).

- Terraform installed (verify with terraform -v).
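The prerequisites above can be checked from the shell before going further. This is a minimal sketch; `check_tool` is a hypothetical helper name, not something the AWS CLI or Terraform provides.

```shell
# Report whether a required tool is on PATH.
# check_tool is a hypothetical helper used only for illustration.
check_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

# Usage:
#   check_tool aws && check_tool terraform
```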

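2. Create an S3 Bucket for Storing State

We’ll create an S3 bucket to store the terraform.tfstate file. S3 bucket names are globally unique, so replace my-terraform-state-bucket with a name of your own in every command that follows.

Run the following command: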

aws s3 mb s3://my-terraform-state-bucket --region us-east-1

Enable versioning to keep history:

aws s3api put-bucket-versioning --bucket my-terraform-state-bucket --versioning-configuration Status=Enabled

Why versioning? If a state file gets corrupted or deleted, you can restore a previous version.
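To make the restore idea concrete, here is a rough sketch of how earlier versions of the state object could be listed and downloaded with the AWS CLI. The function names are hypothetical, and the bucket, key, and output file are whatever you pass in; the downloaded copy is only written to a local file for inspection, nothing is pushed back to S3.

```shell
# List the stored versions of the state object (newest first in the CLI output).
# list_state_versions and fetch_state_version are hypothetical helper names.
list_state_versions() {
  aws s3api list-object-versions --bucket "$1" --prefix "$2" \
    --query 'Versions[].[VersionId,LastModified,IsLatest]' --output text
}

# Download one specific version of the state object to a local file.
fetch_state_version() {
  aws s3api get-object --bucket "$1" --key "$2" --version-id "$3" "$4"
}

# Usage:
#   list_state_versions my-terraform-state-bucket terraform.tfstate
#   fetch_state_version my-terraform-state-bucket terraform.tfstate <version-id> restored.tfstate
```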

3. Create a DynamoDB Table for State Locking

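Terraform uses a DynamoDB table to hold a lock while it modifies the state, which prevents multiple people from changing it at the same time (called state locking). The table needs a string partition key named LockID and should live in the same region as the bucket. Run: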

    aws dynamodb create-table \
      --table-name terraform-state-lock \
      --attribute-definitions AttributeName=LockID,AttributeType=S \
      --key-schema AttributeName=LockID,KeyType=HASH \
      --billing-mode PAY_PER_REQUEST \
      --region us-east-1

Why state locking? It prevents race conditions when multiple users apply Terraform changes at the same time.
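The create-table call returns while the table is still being created, so it can help to confirm the table is ready before pointing Terraform at it. A small sketch, with `lock_table_status` as a hypothetical helper name:

```shell
# Print the table status; "ACTIVE" means the table is ready to use.
# lock_table_status is a hypothetical helper name.
lock_table_status() {
  aws dynamodb describe-table --table-name "$1" \
    --query 'Table.TableStatus' --output text
}

# Usage:
#   aws dynamodb wait table-exists --table-name terraform-state-lock
#   lock_table_status terraform-state-lock
```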

4. Configure Terraform Backend

Add the following configuration so that Terraform uses the remote backend:

    terraform {
      backend "s3" {
        # Bucket and object key where the state file is stored
        bucket         = "my-terraform-state-bucket"
        key            = "terraform.tfstate"
        region         = "us-east-1"
        # DynamoDB table used for state locking
        dynamodb_table = "terraform-state-lock"
      }
    }

    provider "aws" {
      region = "us-east-1"
    }

Replace "my-terraform-state-bucket" with your actual bucket name.
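If you would rather not edit the file for each bucket, the bucket name can also be supplied when initializing, using Terraform's `-backend-config` option. The sketch below assumes the rest of the backend block stays as written; `init_with_bucket` is a hypothetical wrapper.

```shell
# Initialize the backend, supplying the bucket name on the command line.
# init_with_bucket is a hypothetical helper name.
init_with_bucket() {
  terraform init -backend-config="bucket=$1"
}

# Usage:
#   init_with_bucket my-unique-state-bucket
```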

5. Initialize and Apply Terraform

Run:

terraform init

This will connect Terraform to the S3 backend. If a local state file already exists, Terraform offers to copy it to the new backend.

Then:

terraform plan

terraform apply

Terraform will store the state file in S3 instead of locally.
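One way to confirm that the state really landed in S3 is to look for the object after the apply finishes. A minimal sketch, assuming the bucket and key from the backend configuration; `remote_state_exists` is a hypothetical helper.

```shell
# Succeed if the state object exists in the bucket, fail otherwise.
# remote_state_exists is a hypothetical helper name.
remote_state_exists() {
  aws s3api head-object --bucket "$1" --key "$2" >/dev/null 2>&1
}

# Usage:
#   remote_state_exists my-terraform-state-bucket terraform.tfstate \
#     && echo "state is in S3" || echo "state not found"
```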

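Things to remember if terraform init or terraform apply fails:

- Reconfigure the AWS CLI with aws configure if your credentials are missing or have changed.

- Ensure the AWS access key is still active; if it is not, delete the old key and create a new one.

- Make sure the DynamoDB table exists and isn’t holding a stale lock (terraform force-unlock LOCK_ID releases one).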
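The credential checks in this list can be done in one step by asking AWS who the CLI is currently authenticated as. This is a sketch only; `check_credentials` is a hypothetical helper and the messages are illustrative.

```shell
# Ask AWS which identity the configured credentials belong to.
# check_credentials is a hypothetical helper name.
check_credentials() {
  if arn=$(aws sts get-caller-identity --query Arn --output text 2>/dev/null); then
    echo "credentials ok: $arn"
  else
    echo "credentials rejected: run 'aws configure' with an active access key"
    return 1
  fi
}

# Usage:
#   check_credentials
```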

Cleaning Up (Avoid Charges!)

When you're done, destroy the infrastructure:

terraform destroy

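Then delete the S3 bucket and DynamoDB table. Because versioning is enabled, the first command can fail with a BucketNotEmpty error if old object versions remain in the bucket; delete those versions first and run it again.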

aws s3 rb s3://my-terraform-state-bucket --force

aws dynamodb delete-table --table-name terraform-state-lock
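If the bucket removal is refused because old versions remain, something like the following could clear them first. It is a sketch under assumptions: `purge_bucket_versions` is a hypothetical helper, it expects object keys without tab characters, and it permanently deletes every version and delete marker in the bucket, so only run it on a bucket you intend to throw away.

```shell
# Delete every object version and delete marker in a versioned bucket.
# purge_bucket_versions is a hypothetical helper name.
purge_bucket_versions() {
  bucket="$1"
  for kind in Versions DeleteMarkers; do
    aws s3api list-object-versions --bucket "$bucket" \
      --query "${kind}[].[Key,VersionId]" --output text |
    while IFS="$(printf '\t')" read -r key version_id; do
      # The CLI prints "None" when there is nothing to list; skip that line.
      if [ -z "$key" ] || [ "$key" = "None" ]; then
        continue
      fi
      aws s3api delete-object --bucket "$bucket" \
        --key "$key" --version-id "$version_id"
    done
  done
}

# Usage:
#   purge_bucket_versions my-terraform-state-bucket
#   aws s3 rb s3://my-terraform-state-bucket
```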

Conclusion

Switching to remote state storage in AWS completely changed how I work with Terraform. No more local state file worries, no more accidental state loss, and seamless collaboration!
