Bias-Variance Tradeoff

Understanding the bias-variance tradeoff is essential for building machine learning models that generalize. The concept describes how model complexity affects prediction error, and it guides the choice of a model that performs well on unseen data, not only on the data it was trained on.

Understanding Bias and Variance

What is Bias?

Bias is the error introduced when a model makes overly simple or incorrect assumptions about the data, so that its predictions are systematically off. High bias leads to underfitting, where the model is too simple to capture the underlying patterns in the data. For example, using a linear model to fit data that has a non-linear relationship can result in significant prediction errors.

- High Bias: Results in large errors in both training and testing datasets.

- Example: A straight line trying to fit a complex curve.
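The straight-line example can be reproduced with a small NumPy sketch. The sine-shaped data, the noise level, and the helper names `make_data` and `mse` are assumptions made for illustration only; they are not part of the original examples.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of one sine period (an assumed, synthetic ground truth)."""
    x = rng.uniform(0.0, 2.0 * np.pi, n)
    y = np.sin(x) + rng.normal(0.0, 0.1, n)
    return x, y

def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

x_train, y_train = make_data(200)
x_test, y_test = make_data(200)

line = np.polyfit(x_train, y_train, deg=1)   # too simple for a sine curve
cubic = np.polyfit(x_train, y_train, deg=3)  # flexible enough for one period

line_train_mse = mse(line, x_train, y_train)
line_test_mse = mse(line, x_test, y_test)
cubic_test_mse = mse(cubic, x_test, y_test)

print(f"line:  train MSE = {line_train_mse:.3f}, test MSE = {line_test_mse:.3f}")
print(f"cubic: test MSE  = {cubic_test_mse:.3f}")
```

The line's error stays far above the noise level on both the training and the test set, which is the signature of high bias: more data does not help, only a more flexible model does.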

What is Variance?

Variance measures how much a model's predictions change when it is trained on different training sets drawn from the same data. High variance leads to overfitting, where the model learns the noise in the training data instead of the actual patterns. As a result, the model performs well on the training data but fails to generalize to new, unseen data.

- High Variance: Performs well on training data but poorly on test data.

- Example: A complex curve that fits every point in the training set perfectly but does not represent the underlying data distribution.
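One way to make variance concrete is to refit the same kind of model on many fresh training sets and measure how much its predictions move. The sketch below does this for a straight line and a degree-8 polynomial; the data-generating function, sample size, and noise level are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
x_grid = np.linspace(-1.0, 1.0, 41)   # inputs at which predictions are compared
n_repeats = 300

def predictions_from_fresh_sample(degree, n_train=12, noise_sd=0.3):
    """Draw a new small training set, fit a polynomial, predict on x_grid."""
    x = rng.uniform(-1.0, 1.0, n_train)
    y = np.sin(np.pi * x) + rng.normal(0.0, noise_sd, n_train)
    return np.polyval(np.polyfit(x, y, deg=degree), x_grid)

spread = {}
for degree in (1, 8):
    preds = np.array([predictions_from_fresh_sample(degree)
                      for _ in range(n_repeats)])
    # Variance across training sets at each grid point, averaged over the grid.
    spread[degree] = float(preds.var(axis=0).mean())
    print(f"degree {degree}: average prediction variance = {spread[degree]:.3f}")
```

The flexible polynomial chases the noise in each small sample, so its predictions differ much more from one training set to the next than those of the straight line.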

The Bias-Variance Tradeoff

The bias-variance tradeoff illustrates the relationship between model complexity and prediction error. If an algorithm is too simple, it may exhibit high bias and low variance. Conversely, if the algorithm is too complex, it may show low bias but high variance. The goal is to find a balance between these two extremes.
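A quick way to see this relationship is to sweep model complexity and compare training and test error. The following sketch uses polynomial degree as the complexity knob on synthetic data; the dataset and the range of degrees are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(2)

def sample(n):
    """Noisy samples of an assumed true function sin(pi * x)."""
    x = rng.uniform(-1.0, 1.0, n)
    return x, np.sin(np.pi * x) + rng.normal(0.0, 0.3, n)

x_train, y_train = sample(20)
x_test, y_test = sample(1000)

degrees = list(range(1, 15))
train_mse, test_mse = [], []
for d in degrees:
    coeffs = np.polyfit(x_train, y_train, deg=d)
    train_mse.append(float(np.mean((np.polyval(coeffs, x_train) - y_train) ** 2)))
    test_mse.append(float(np.mean((np.polyval(coeffs, x_test) - y_test) ** 2)))

best_degree = degrees[int(np.argmin(test_mse))]
for d, tr, te in zip(degrees, train_mse, test_mse):
    print(f"degree {d:2d}: train MSE = {tr:.3f}, test MSE = {te:.3f}")
print("lowest test error at degree", best_degree)
```

Training error keeps falling as the degree grows, while test error falls at first and then rises again. The degree with the lowest test error sits between the two extremes.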

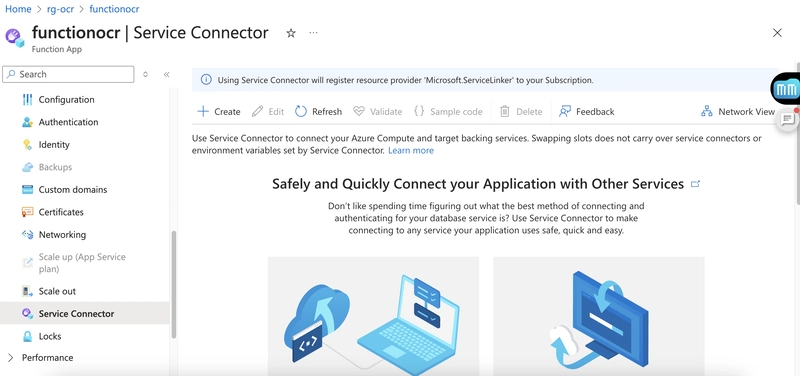

Visual Representation

In a typical graph of the bias-variance tradeoff, the total error is represented as the sum of bias squared, variance, and irreducible error:

Total Error = Bias² + Variance + Irreducible Error

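The ideal model sits at the complexity where total error is lowest. Below that point, error is dominated by bias; above it, the rise in variance outweighs any further reduction in bias. The irreducible error comes from noise in the data and cannot be removed by any model.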
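The formula can be checked numerically when the true function is known. The sketch below is a simulation under stated assumptions (a sine-shaped true function, Gaussian noise, a cubic polynomial as the model): it estimates each term separately and compares their sum with the error measured against fresh noisy targets.

```python
import numpy as np

rng = np.random.default_rng(3)
noise_sd = 0.3
x_grid = np.linspace(-1.0, 1.0, 50)
f_grid = np.sin(np.pi * x_grid)            # assumed true function
n_repeats, n_train, degree = 500, 30, 3

# Predictions of the same model class trained on many independent samples.
preds = np.empty((n_repeats, x_grid.size))
for r in range(n_repeats):
    x = rng.uniform(-1.0, 1.0, n_train)
    y = np.sin(np.pi * x) + rng.normal(0.0, noise_sd, n_train)
    preds[r] = np.polyval(np.polyfit(x, y, deg=degree), x_grid)

mean_pred = preds.mean(axis=0)
bias_sq = float(np.mean((mean_pred - f_grid) ** 2))   # Bias^2
variance = float(np.mean(preds.var(axis=0)))          # Variance
irreducible = noise_sd ** 2                           # Irreducible error

# Total error measured against fresh noisy targets.
y_new = f_grid + rng.normal(0.0, noise_sd, preds.shape)
total_error = float(np.mean((preds - y_new) ** 2))

print(f"bias^2 + variance + noise = {bias_sq + variance + irreducible:.4f}")
print(f"measured total error      = {total_error:.4f}")
```

The two printed numbers should agree up to simulation noise. Against the noise-free function the split into squared bias and variance is an exact algebraic identity; the irreducible term only appears once the targets themselves are noisy.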

Bias-Variance Decomposition for Classification and Regression

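To measure bias and variance in practice, we can use the bias_variance_decomp function from the mlxtend library, which estimates both by retraining the model on repeated bootstrap samples of the training set. Here’s how to apply it in Python to a classification task and a regression task.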

Bias-Variance Decomposition for Classification

```python
# Import the necessary libraries
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier
from sklearn.ensemble import BaggingClassifier
from mlxtend.evaluate import bias_variance_decomp
import warnings
warnings.filterwarnings('ignore')

# Load the dataset
X, y = load_iris(return_X_y=True)

# Split train and test dataset
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=23, shuffle=True, stratify=y)

# Build the classification model
# Note: scikit-learn 1.2 renamed base_estimator to estimator;
# on older versions, pass base_estimator=tree instead.
tree = DecisionTreeClassifier(random_state=123)
clf = BaggingClassifier(estimator=tree, n_estimators=50, random_state=23)

# Bias-variance decomposition
avg_expected_loss, avg_bias, avg_var = bias_variance_decomp(
    clf, X_train, y_train, X_test, y_test,
    loss='0-1_loss', random_seed=23)

# Print the values
print('Average expected loss: %.2f' % avg_expected_loss)
print('Average bias: %.2f' % avg_bias)
print('Average variance: %.2f' % avg_var)
```
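To see what the three numbers mean for 0-1 loss, it helps to compute them by hand from a matrix of predictions, one row per retrained model. The function below is a minimal sketch of the usual definitions (main prediction = most frequent label), not mlxtend's actual code; the name `zero_one_decomposition` and the toy predictions are made up for illustration.

```python
import numpy as np

def zero_one_decomposition(all_pred, y_true):
    """all_pred: (n_rounds, n_samples) array of non-negative integer labels."""
    n_classes = int(max(all_pred.max(), y_true.max())) + 1
    # Main prediction: the most frequent label per test sample across rounds.
    counts = np.apply_along_axis(np.bincount, 0, all_pred, minlength=n_classes)
    main_pred = counts.argmax(axis=0)
    expected_loss = float(np.mean(all_pred != y_true))   # average error rate
    bias = float(np.mean(main_pred != y_true))           # main prediction is wrong
    variance = float(np.mean(all_pred != main_pred))     # disagreement with main
    return expected_loss, bias, variance

# Three hypothetical models, four test samples.
all_pred = np.array([[0, 1, 2, 1],
                     [0, 1, 1, 1],
                     [0, 2, 2, 0]])
y_true = np.array([0, 1, 2, 0])

loss, bias, var = zero_one_decomposition(all_pred, y_true)
print(f"expected loss = {loss:.3f}, bias = {bias:.3f}, variance = {var:.3f}")
```

Note that for 0-1 loss the bias and variance terms need not add up to the expected loss: in this toy case they sum to 0.5 while the loss is about 0.33, because on a sample where the main prediction is wrong, disagreeing with it can make an individual prediction correct.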

Bias-Variance Decomposition for Regression

```python
# Load the necessary libraries
from sklearn.datasets import fetch_california_housing
from sklearn.model_selection import train_test_split
import tensorflow as tf
from mlxtend.evaluate import bias_variance_decomp
import warnings
warnings.filterwarnings('ignore')

# Load the dataset
X, y = fetch_california_housing(return_X_y=True)

# Split train and test dataset
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=23, shuffle=True)

# Build the regression model
model = tf.keras.Sequential([
    tf.keras.layers.Dense(64, activation=tf.nn.relu),
    tf.keras.layers.Dense(1)
])

# Set optimizer and loss
optimizer = tf.keras.optimizers.Adam()
model.compile(loss='mean_squared_error', optimizer=optimizer)

# Train the model
model.fit(X_train, y_train, epochs=25, verbose=0)

# Evaluate on the test set (the compiled loss is MSE, so this is an error, not an accuracy)
test_mse = model.evaluate(X_test, y_test)
print('Test MSE: %.2f' % test_mse)

# Bias-variance decomposition
# epochs and verbose are forwarded to model.fit on every bootstrap round
avg_expected_loss, avg_bias, avg_var = bias_variance_decomp(
    model, X_train, y_train, X_test, y_test,
    loss='mse', random_seed=23, epochs=5, verbose=0)

# Print the result
print('Average expected loss: %.2f' % avg_expected_loss)
print('Average bias: %.2f' % avg_bias)
print('Average variance: %.2f' % avg_var)
```
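The bootstrap procedure behind an MSE decomposition like this can be sketched in plain NumPy. This is an illustrative re-implementation under assumptions, not the library's code: the function names, the synthetic dataset, and the least-squares model standing in for the neural network are all hypothetical.

```python
import numpy as np

def bootstrap_mse_decomposition(fit_predict, X_train, y_train, X_test, y_test,
                                num_rounds=200, seed=0):
    """Retrain on bootstrap samples and split test MSE into bias and variance."""
    rng = np.random.default_rng(seed)
    n = len(X_train)
    all_pred = np.empty((num_rounds, len(X_test)))
    for r in range(num_rounds):
        idx = rng.integers(0, n, size=n)   # sample rows with replacement
        all_pred[r] = fit_predict(X_train[idx], y_train[idx], X_test)
    main_pred = all_pred.mean(axis=0)      # average prediction per test point
    expected_loss = float(np.mean((all_pred - y_test) ** 2))
    bias = float(np.mean((main_pred - y_test) ** 2))
    variance = float(np.mean((all_pred - main_pred) ** 2))
    return expected_loss, bias, variance

def linear_fit_predict(X_tr, y_tr, X_te):
    """Ordinary least squares with an intercept (stand-in for any regressor)."""
    A = np.column_stack([np.ones(len(X_tr)), X_tr])
    coef, *_ = np.linalg.lstsq(A, y_tr, rcond=None)
    return np.column_stack([np.ones(len(X_te)), X_te]) @ coef

# Synthetic data: a linear signal plus Gaussian noise.
rng = np.random.default_rng(4)
X = rng.normal(size=(300, 2))
y = 1.5 * X[:, 0] - 2.0 * X[:, 1] + rng.normal(0.0, 0.5, 300)
X_train, y_train, X_test, y_test = X[:200], y[:200], X[200:], y[200:]

loss, bias, var = bootstrap_mse_decomposition(
    linear_fit_predict, X_train, y_train, X_test, y_test)
print(f"expected loss = {loss:.3f}, bias = {bias:.3f}, variance = {var:.3f}")
```

With squared error the expected loss equals bias plus variance exactly. Because the bias term here is measured against the noisy test targets rather than a known true function, it also absorbs the irreducible error.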

Conclusion

Understanding the bias-variance tradeoff is crucial for optimizing machine learning models. By managing bias and variance effectively, you can avoid both underfitting and overfitting, so that your models generalize well to new data. Bias-variance decomposition shows whether a model's error comes mainly from bias or from variance, which tells you whether to make the model more flexible or to constrain it.

For more content, follow me at — https://linktr.ee/shlokkumar2303
