Refact.ai Agent scores highest on Aider's Polyglot Benchmark: 93.3% with Thinking Mode, 92.9% without

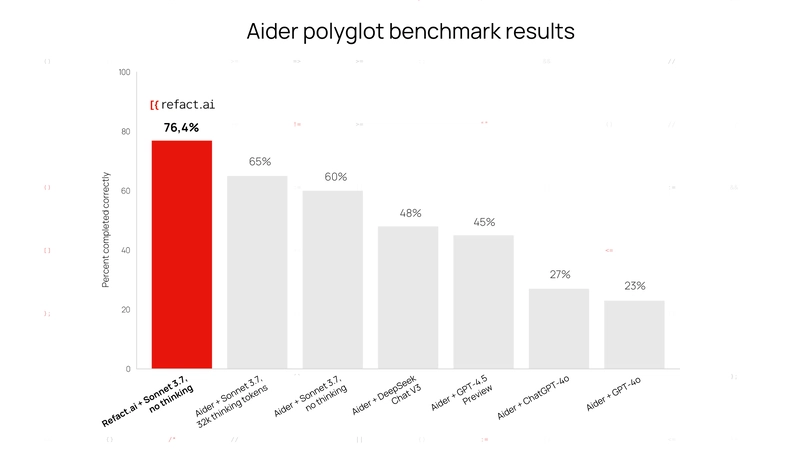

Refact.ai Agent, powered by Claude 3.7 Sonnet, has achieved top performance on the Aider Polyglot Benchmark:

- 92.9% without Thinking

- 93.3% with Thinking.

This is a lead of roughly 20 points over the highest listed score (72.9% by Aider with Gemini 2.5 Pro) and showcases Refact.ai’s autonomous coding capabilities. The Agent handles programming tasks end-to-end in the IDE (planning, execution, testing, refinement) and delivers a highly accurate result with zero human intervention.

Aider’s Polyglot benchmark evaluates how well AI models handle 225 of the hardest coding exercises from Exercism across C++, Go, Java, JavaScript, Python, and Rust. Unlike SWE-Bench, which focuses solely on Python and single-file edits within 12 repos, Polyglot tests an AI’s ability to write and integrate new code across diverse, multi-language projects, making it much closer to real-world developer workflows.
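To put the percentages in terms of exercises, here is a quick arithmetic sketch. It assumes the score is simply the share of the 225 exercises solved. The solved counts 209 and 210 are inferred for illustration and are not figures reported in this post.

```python
TOTAL_EXERCISES = 225  # benchmark size stated in the text


def pass_rate(solved: int, total: int = TOTAL_EXERCISES) -> float:
    """Percentage of exercises solved, rounded to one decimal place."""
    return round(100 * solved / total, 1)


def lead_in_points(score: float, baseline: float) -> float:
    """Difference between two percentage scores, in percentage points."""
    return round(score - baseline, 1)


# Assumed (not reported) solved counts that would reproduce the headline scores.
print(pass_rate(209))              # 92.9
print(pass_rate(210))              # 93.3
print(lead_in_points(92.9, 72.9))  # 20.0
print(lead_in_points(93.3, 72.9))  # 20.4
```

Under that assumption, the gap between the two modes corresponds to a single additional exercise solved.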

Our approach: How Refact.ai achieved #1 in the polyglot leaderboard

Refact.ai Agent takes a fully autonomous, iterative approach. It plans, executes, tests, self-corrects, and repeats steps as needed to fully complete tasks with high accuracy — without human input.

Other approaches may follow a more manual, structured workflow, where some steps are controlled by human input and pre-defined scripts. Aider’s benchmark setup looks similar to this, following the trajectory:

Prompt → User provides task description → User manually collects and adds files to the context → Model attempts to solve the task → Model retries, up to the limit set by the `--tries` parameter → User runs tests manually and, if they fail, provides feedback to the model → Model makes corrections → Result.

This workflow requires ongoing user involvement — manually providing context, running tests, and guiding the AI. The model itself doesn’t form a strategy, search for files, or decide when to test. Of course, this approach saves tokens, but it also lacks autonomy.
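The scripted trajectory described above can be sketched as a fixed retry loop. This is a minimal illustration, not Aider's actual code: `scripted_workflow`, `ask_model`, `run_tests`, and the demo stubs are hypothetical names, and the `tries` argument only mirrors the idea of a retry limit.

```python
from typing import Callable, List, Optional, Tuple


def scripted_workflow(
    task: str,
    files: List[str],                       # context chosen by the user, not the model
    ask_model: Callable[[str, List[str], Optional[str]], str],
    run_tests: Callable[[str], Tuple[bool, str]],
    tries: int = 2,
) -> Tuple[Optional[str], int]:
    """Fixed loop: attempt, test, feed failures back, stop after `tries`."""
    feedback: Optional[str] = None
    for attempt in range(1, tries + 1):
        solution = ask_model(task, files, feedback)
        passed, output = run_tests(solution)  # the harness decides when to test
        if passed:
            return solution, attempt
        feedback = output                     # test output becomes the next prompt
    return None, tries


# Hypothetical stand-ins so the sketch runs without a real model or test suite.
def demo_model(task: str, files: List[str], feedback: Optional[str]) -> str:
    return "fixed" if feedback else "buggy"


def demo_tests(solution: str) -> Tuple[bool, str]:
    ok = solution == "fixed"
    return ok, "" if ok else "test_add failed"


print(scripted_workflow("add two numbers", ["calc.py"], demo_model, demo_tests))
```

The control flow lives entirely outside the model here: the caller picks the files, the loop decides when tests run, and the retry count is fixed up front.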

Refact.ai has a different, autonomy-first AI workflow:

Prompt + tool-specific prompts → User provides task description → AI Agent autonomously solves it within 30 steps (searching for the relevant data, calling tools, deciding when corrections are needed, running tests, etc.) → Result.

So, Refact.ai interacts with the development environment, verifies its own work, and optimizes resources to fully solve the task end-to-end, delivering efficient and practical programming flow with full-scope autonomy.
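An autonomy-first loop of this kind can be sketched as follows. This is a simplified illustration under stated assumptions, not Refact.ai's implementation: `run_agent`, `AgentState`, `choose_action`, the `finish` action, and the demo tools are all hypothetical. Only the 30-step budget comes from the text.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

MAX_STEPS = 30  # step budget mentioned in the text


@dataclass
class AgentState:
    task: str
    history: List[Tuple[str, str]] = field(default_factory=list)  # (tool, result)
    done: bool = False


def run_agent(
    task: str,
    choose_action: Callable[[AgentState], Tuple[str, str]],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = MAX_STEPS,
) -> AgentState:
    """The policy (standing in for the model) picks the next tool at every step."""
    state = AgentState(task)
    for _ in range(max_steps):
        tool_name, arg = choose_action(state)
        if tool_name == "finish":          # the agent itself decides it is done
            state.done = True
            break
        result = tools[tool_name](arg)
        state.history.append((tool_name, result))
    return state


# Hypothetical toy policy and tools so the sketch runs on its own.
def demo_policy(state: AgentState) -> Tuple[str, str]:
    plan = [("search", "failing function"), ("edit", "apply fix"), ("run_tests", "")]
    step = len(state.history)
    return plan[step] if step < len(plan) else ("finish", "")


demo_tools: Dict[str, Callable[[str], str]] = {
    "search": lambda query: f"found files for {query!r}",
    "edit": lambda patch: "patch applied",
    "run_tests": lambda _: "all tests passed",
}

final = run_agent("fix the bug", demo_policy, demo_tools)
print(final.done, [name for name, _ in final.history])
```

In this sketch the only fixed constraint is the step budget. Which tool to call, when to run tests, and when to stop are all decided by the policy.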

This is much closer to real-world software development and vibe coding: developers can delegate entire tasks to the AI Agent while doing other work, then simply receive the final result. It enables teams to get 2x more done in parallel, get the best out of AI models, and focus on big things instead of basic coding.

Key aspects of the Refact.ai Agent approach:

1️⃣ 100% autonomy at each step

We at Refact.ai focus on making our AI Agent as autonomous, reliable, and trustworthy as possible. To complete tasks, it follows a structured prompt. Since Refact.ai is open-source, you can explore our AI Agent prompt on GitHub. Below is an excerpt:

PROMPT_AGENTIC_TOOLS: |

You are Refact Agent, an autonomous bot for coding tasks.

STRATEGY

Step 1: Gather Existing Knowledge

Goal: Get information about the project and previous similar tasks.

Always call the knowledge() tool to get initial information about the project and the task.

This tool gives you access to memories, and external data, example trajectories (
