RAG Isn't Dead: Why GPT-4.1's 1M-Token Context Window Won't Kill Retrieval-Augmented Generation

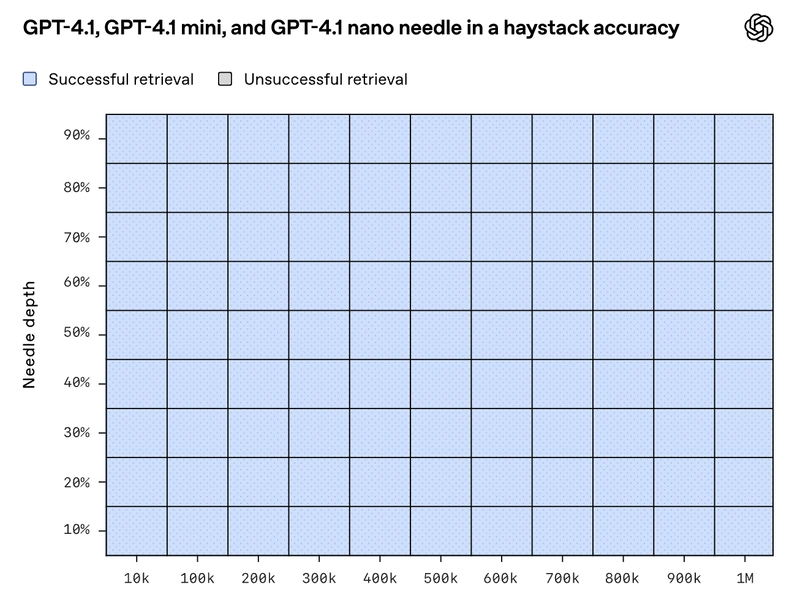

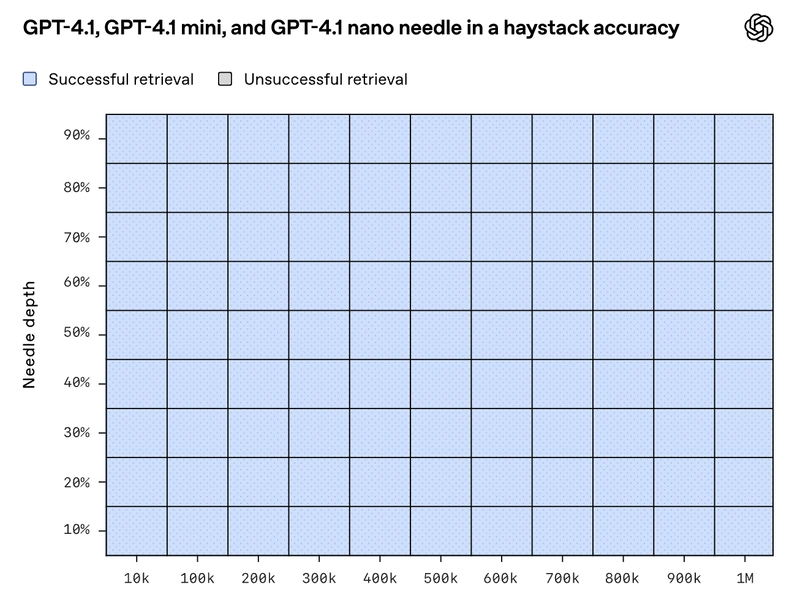

Yesterday, OpenAI dropped GPT-4.1 with a jaw-dropping 1M-token context window and “needle-in-a-haystack” accuracy. Google’s Gemini 2.5 is touting 1M tokens in production and 10M in research. Cue the hot takes: “RAG is dead!” “Just shove your whole database into the prompt!” As the founder of a RAG-as-a-service startup, I get these messages every time a new model launches.

But here’s the thing: RAG isn’t dead. Not even close. Here’s why.

The Hype: Infinite Context Windows

On paper, massive context windows sound like the endgame:

- Dump all your data into the prompt

- No more retrieval headaches

- No more missing relevant info

But anyone who’s actually tried to use these models in production knows the reality is a lot messier.

The Reality: Cost, Latency, and Scale

Cost & Speed:

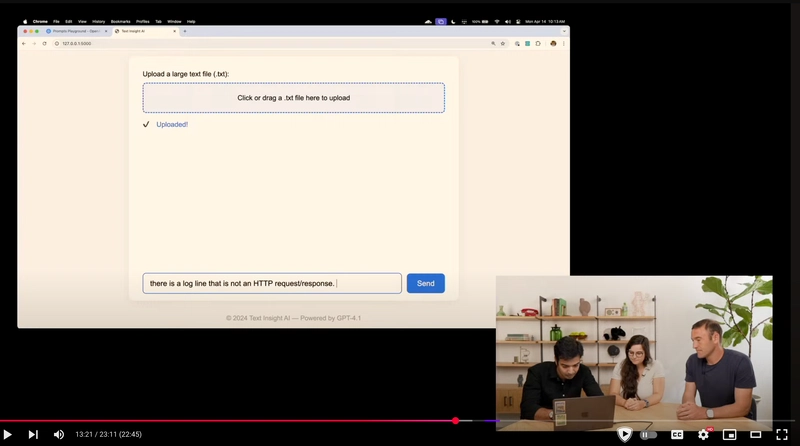

A typical RAG query is ~1K tokens. If you naively pass 1M tokens instead, your input cost explodes by 1000x: at GPT-4.1’s list price of $2 per 1M input tokens, that’s $0.002 → $2 per query. In OpenAI’s own demo, a 456K-token prompt took 76 seconds to process. The team literally thought the demo had crashed.
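The 1000x figure is simple arithmetic on per-token pricing. Here is a minimal sketch, assuming GPT-4.1’s launch list price of $2.00 per 1M input tokens and ignoring output tokens; the function name is illustrative, not part of any SDK.

```python
# Assumption: GPT-4.1 launch list price for input tokens.
# Output tokens are ignored to keep the comparison simple.
PRICE_PER_MILLION_INPUT_TOKENS = 2.00


def input_cost_usd(prompt_tokens: int) -> float:
    """Return the input-token cost of a single request in US dollars."""
    return prompt_tokens / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS


rag_cost = input_cost_usd(1_000)        # ~1K-token RAG prompt
full_cost = input_cost_usd(1_000_000)   # stuffing the whole 1M window

print(f"RAG prompt:      ${rag_cost:.3f}")
print(f"1M-token prompt: ${full_cost:.2f}")
print(f"Ratio:           {full_cost / rag_cost:.0f}x")
```

Because the cost is linear in prompt length, the ratio is just the ratio of token counts, whatever the exact price turns out to be.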

Agentic Workflows:

Modern AI apps aren’t just single-shot Q&A—they’re multi-step, agentic workflows. Multiply that $2 and 76 seconds by 10 or 100 steps, and you’re looking at costs and latencies that kill any real-world use case.
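To see how fast this compounds, here is a back-of-the-envelope sketch that multiplies the two figures quoted above by the number of steps. It assumes every step re-sends a full-size context and runs sequentially, with no prompt caching or parallelism, so treat it as a rough upper bound rather than a benchmark. Note that the 76-second figure was measured on a 456K-token prompt.

```python
# Figures quoted in the post: ~$2 per 1M-token call and ~76 s per long-context call.
# Assumption: each step re-sends a full context, steps run one after another,
# and nothing is cached between steps.
COST_PER_CALL_USD = 2.00
LATENCY_PER_CALL_S = 76


def workflow_totals(steps: int) -> tuple[float, float]:
    """Return (total cost in USD, total wall-clock minutes) for a sequential run."""
    cost = steps * COST_PER_CALL_USD
    minutes = steps * LATENCY_PER_CALL_S / 60
    return cost, minutes


for steps in (1, 10, 100):
    cost, minutes = workflow_totals(steps)
    print(f"{steps:>3} steps: ${cost:,.0f}, ~{minutes:,.0f} min")
```

At 100 steps that is roughly $200 and over two hours of waiting for a single task.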

Citations & Trust:

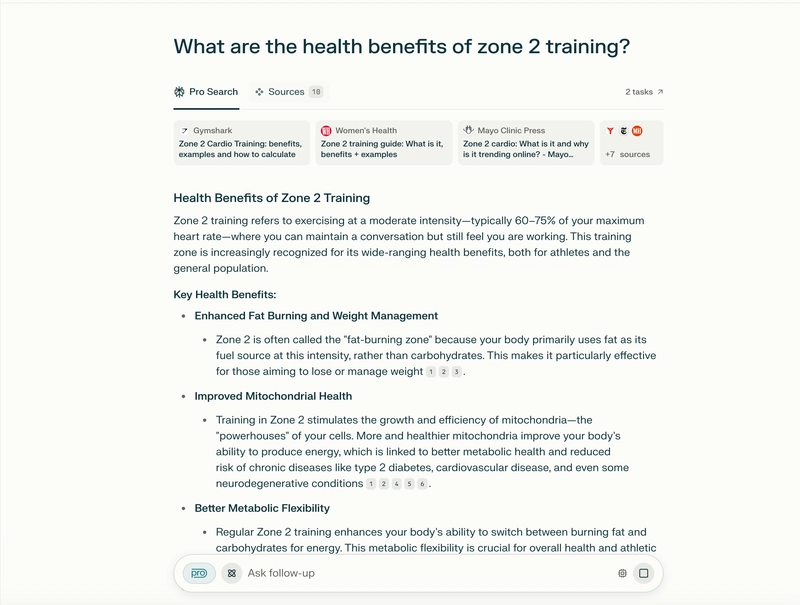

LLMs still can’t reliably cite their sources on their own. RAG gives you traceability: “This answer came from this document.” For enterprise, legal, and scientific use cases, that’s non-negotiable.
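Traceability comes from keeping a source identifier attached to every retrieved chunk. The sketch below is a toy illustration, not Agentset’s pipeline: the word-overlap scorer stands in for real embedding or BM25 search, and the document paths are hypothetical. Chunks are numbered in the prompt so that a citation like [1] in the model’s answer maps back to a specific document.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    source: str  # document identifier used for citations (hypothetical paths below)
    text: str


def score(query: str, chunk: Chunk) -> int:
    """Toy relevance score: count of shared lowercase words (stand-in for embeddings)."""
    return len(set(query.lower().split()) & set(chunk.text.lower().split()))


def retrieve(query: str, corpus: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return up to k matching chunks, best first, each still tagged with its source."""
    ranked = sorted(corpus, key=lambda c: score(query, c), reverse=True)
    return [c for c in ranked[:k] if score(query, c) > 0]


def build_prompt(query: str, chunks: list[Chunk]) -> str:
    """Number each chunk so citations like [1] can be traced back to a source."""
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(chunks, 1))
    return f"Answer using only the sources below and cite them by number.\n{context}\n\nQuestion: {query}"


corpus = [
    Chunk("contracts/msa-2023.pdf#p4", "the termination notice period is 90 days"),
    Chunk("hr/handbook.md#leave", "employees accrue 20 days of paid leave"),
    Chunk("contracts/msa-2023.pdf#p7", "either party may terminate the agreement for material breach"),
]
QUERY = "what is the termination notice period"
hits = retrieve(QUERY, corpus)
print(build_prompt(QUERY, hits))
```

Whatever retriever you use, the design point is the same: the prompt only contains chunks whose origin you already know, so every citation can be checked against a real document.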

Context Isn’t Infinite:

1M tokens is about 20 books. Some of our customers have billions of tokens of data. Even 10M tokens is a rounding error at that scale. And the economics just don’t work.
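The scale gap is easy to quantify. A minimal sketch, assuming a hypothetical 2B-token customer corpus (the post only says “billions”), the post’s own estimate of ~20 books per 1M tokens, and GPT-4.1’s launch input price of $2 per 1M tokens:

```python
import math

PRICE_PER_MILLION_INPUT_TOKENS = 2.00  # assumed GPT-4.1 launch input price
TOKENS_PER_BOOK = 1_000_000 / 20       # from "1M tokens is about 20 books"


def windows_needed(corpus_tokens: int, window_tokens: int) -> int:
    """Full context windows required to show the model every token once."""
    return math.ceil(corpus_tokens / window_tokens)


def full_read_cost_usd(corpus_tokens: int) -> float:
    """Input-token cost of sending the entire corpus through the model once."""
    return corpus_tokens / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS


corpus = 2_000_000_000  # hypothetical customer corpus: 2B tokens
print(f"~{corpus / TOKENS_PER_BOOK:,.0f} books")
for window in (1_000_000, 10_000_000):
    print(f"{window:,}-token window: {windows_needed(corpus, window):,} prompts")
print(f"Cost of one full pass: ${full_read_cost_usd(corpus):,.0f}")
```

Even a 10M-token window would need hundreds of prompts to see such a corpus once, and each user question would pay for that pass again.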

So, Is RAG Dead?

Not even close. For any use case with non-trivial data, RAG is still the only game in town. Will context windows keep growing? Absolutely. Will we see new architectures that make retrieval obsolete? Maybe, eventually. But today, and for the foreseeable future, RAG is how real companies are shipping real products.

OpenAI and Google just made context windows huge. But RAG isn’t dead—it’s more essential than ever. Change my mind.

P.S. If you’re interested in a fully managed RAG-as-a-service, check out Agentset.ai