Proximal Policy Optimization (PPO) and Generalized Reinforcement Learning with Proximal Optimizer (GRPO)

Introduction Both Proximal Policy Optimization (PPO) and Generalized Reinforcement Learning with Proximal Optimizer (GRPO) are the algorithm of Reinforcement Learning (RL). In this blog, I am going to explain both the algorithms in reference to a chess game. I came across these terms while reading DeepSeek R1's Research Papers. Reinforcement Learning (RL) For someone new to this term, RL is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent takes actions, receives rewards or penalties as feedback, and improves its behavior over time through trial and error to maximize cumulative rewards. Proximal Policy Optimization (PPO) PPO consists of two elements: the AI Agent (the one who is learning) and the Critic. The AI Agent has an initial policy, based on which it takes a decision. Steps: On a Chess board, out of all the possible moves, the AI Agent picks one move based on the policy and executes it. After execution, the Critic provides feedback on the move taken by the AI Agent. Based on the feedback, the AI Agent updates the policy. However, it does not make a drastic change to it; instead, it makes only minor adjustments. The above steps are repeated until the AI Agent has learned adequately. PPO explores one step at a time. Moreover, since PPO requires a critic, it needs more storage compared to other methods. Generalized Reinforcement Learning with Proximal Optimizer (GRPO) GRPO does not have a critic. It only consists of the AI Agent with an initial policy, based on which it takes decisions. Steps: On a Chess board, the AI Agent generates a group of all possible moves. For example: Group of actions: Action 1: Move the bishop to left or right. Action 2: Move the queen to left, right, up, down or diagonally across. The AI Agent evaluates all the actions in the group simultaneously and, based on the policy, chooses the best action to take. For instance, if the AI Agent selects Action 1, the next decision would be whether to move the bishop left or right. Again, based on the policy, the AI Agent makes this decision. Based on the decision, the environment (in this case, the changed state of the chessboard) gives feedback to the AI Agent. Finally, based on the feedback, the AI Agent updates the policy. Conclusion Thank you for reading the blog. More such topics are also listed on my notes website. This website contains a curated list of AI-related keywords and terms, along with their explanations based on my understanding. This resource is designed to help learners who want to deepen their understanding of AI concepts by exploring academic materials.

Introduction

Both Proximal Policy Optimization (PPO) and Generalized Reinforcement Learning with Proximal Optimizer (GRPO) are the algorithm of Reinforcement Learning (RL). In this blog, I am going to explain both the algorithms in reference to a chess game. I came across these terms while reading DeepSeek R1's Research Papers.

Reinforcement Learning (RL)

For someone new to this term, RL is a type of machine learning where an agent learns to make decisions by interacting with an environment. The agent takes actions, receives rewards or penalties as feedback, and improves its behavior over time through trial and error to maximize cumulative rewards.

Proximal Policy Optimization (PPO)

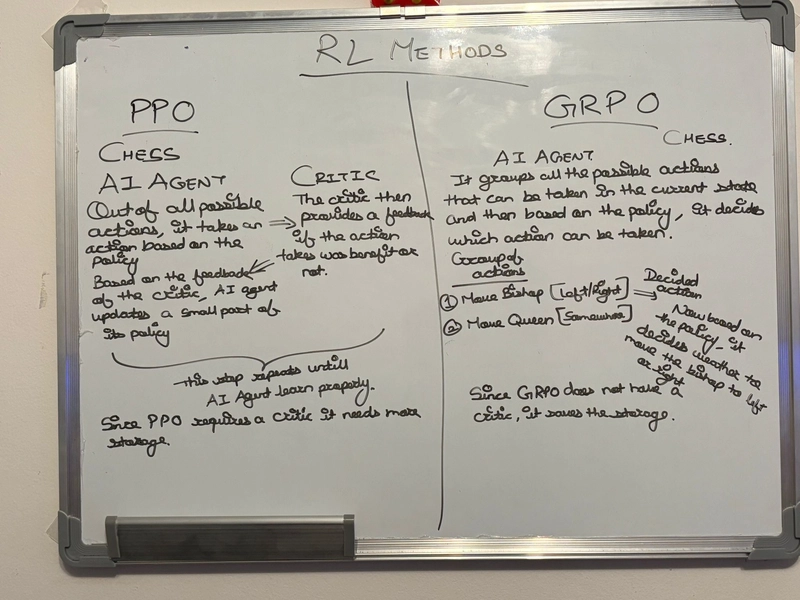

PPO consists of two elements: the AI Agent (the one who is learning) and the Critic. The AI Agent has an initial policy, based on which it takes a decision.

Steps:

- On a Chess board, out of all the possible moves, the AI Agent picks one move based on the policy and executes it.

- After execution, the Critic provides feedback on the move taken by the AI Agent.

- Based on the feedback, the AI Agent updates the policy. However, it does not make a drastic change to it; instead, it makes only minor adjustments.

- The above steps are repeated until the AI Agent has learned adequately.

PPO explores one step at a time. Moreover, since PPO requires a critic, it needs more storage compared to other methods.

Generalized Reinforcement Learning with Proximal Optimizer (GRPO)

GRPO does not have a critic. It only consists of the AI Agent with an initial policy, based on which it takes decisions.

Steps:

- On a Chess board, the AI Agent generates a group of all possible moves. For example: Group of actions:

- Action 1: Move the bishop to left or right.

Action 2: Move the queen to left, right, up, down or diagonally across.

The AI Agent evaluates all the actions in the group simultaneously and, based on the policy, chooses the best action to take. For instance, if the AI Agent selects Action 1, the next decision would be whether to move the bishop left or right. Again, based on the policy, the AI Agent makes this decision.

Based on the decision, the environment (in this case, the changed state of the chessboard) gives feedback to the AI Agent.

Finally, based on the feedback, the AI Agent updates the policy.

Conclusion

Thank you for reading the blog. More such topics are also listed on my notes website. This website contains a curated list of AI-related keywords and terms, along with their explanations based on my understanding. This resource is designed to help learners who want to deepen their understanding of AI concepts by exploring academic materials.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)