Prolog-MCP Server: Neuro-Symbolic AI for Modern Workflows

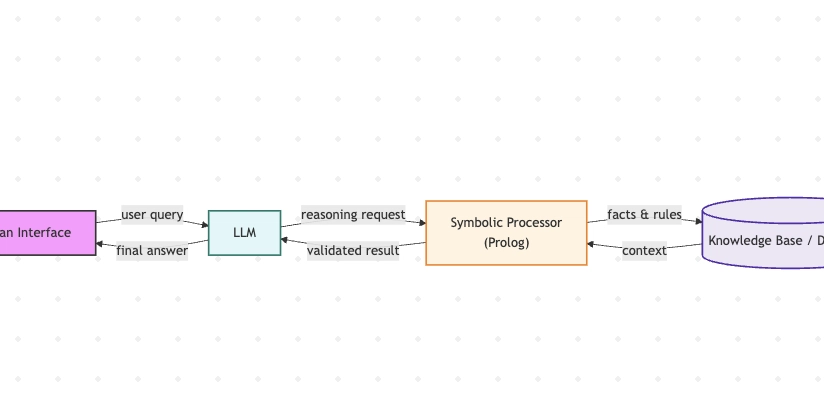

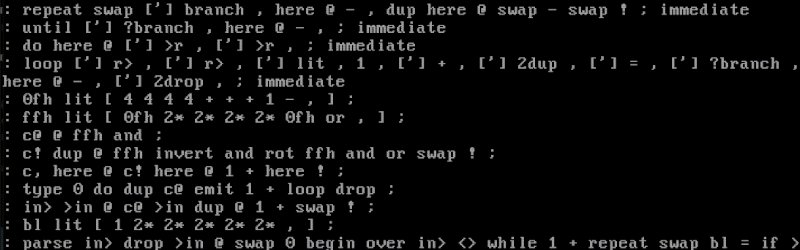

What is Neuro-Symbolic AI? Neuro-Symbolic AI represents a convergence of symbolic reasoning (exemplified by Prolog's logic programming) and neural networks' pattern recognition capabilities. This hybrid approach addresses the limitations of each paradigm while leveraging their complementary strengths. For a deeper dive in the concepts and history of symbolic AI, see excellent article here on dev.to by developers from Nucleoid, and reference to various readings on the bottom of this article. Historical Foundations in Prolog Symbolic AI roots: Prolog, developed in the 1970s, became the cornerstone of symbolic AI with its rule-based inference engine and declarative knowledge representation. It enabled: Logical deduction through Horn clauses Expert systems for medical diagnosis and natural language processing Precise reasoning with formal ontologies Limitations of early symbolic systems: Struggled with uncertainty and real-world data Required manual rule engineering Lacked learning capabilities Modern Neural Integration Current Neuro-Symbolic systems combine Prolog-style reasoning with deep learning: Architecture patterns: Neural frontends process raw data (images/text) Symbolic backends apply logical constraints Bidirectional knowledge flow through shared embeddings Prolog's evolving role: Logic-as-a-service: Embedded Prolog engines validate neural outputs Dynamic knowledge graphs: Prolog rules guide neural attention mechanisms Explainability layer: Translates neural activations to human-readable proofs Toolchain integration: % Example: Hybrid image recognition detect(X) :- neural_classifier(X, Class), symbolic_constraint(Class, Context), validate(Class, Context). Inductive Logic Programming (Progol) vs Neural Approaches Aspect Progol/ILP Neural Networks Neuro-symbolic Bridge Learning Paradigm Rule induction from examples + BK Statistical pattern recognition Systems like Propper combine ILP with neural-based BK processing Strengths Interpretable rules, small data efficiency Handles unstructured data, scalability Neural-symbolic layers translate features to predicates Weaknesses Sensitive to noise, limited scalability Black-box decisions, data hunger Symbolic regularizers shape neural training Integration - - Joint inference and alternate neural/logical passes This synthesis enables systems that learn from both data samples and formal knowledge, achieving better generalization than either approach alone. Modern frameworks like Logical Neural Networks (LNN) demonstrate how gradient-based learning can coexist with precise logical semantics. Introducing Prolog-MCP The Prolog-MCP Server is a neurosymbolic AI backend that brings together Prolog's symbolic reasoning and the Model Context Protocol (MCP). It leverages the high-performance Trealla Prolog engine via trealla-js JavaScript/TypeScript bindings (npm: trealla). What is Prolog-MCP? Prolog-MCP acts as a modular MCP server, exposing Prolog’s logic programming as a set of tools that can be orchestrated by LLMs, IDEs, or agentic workflows. It is ideal for: Validating LLM outputs with symbolic rules Stateful, explainable knowledge bases Hybrid AI pipelines combining neural and symbolic reasoning Key Features Persistent Prolog Sessions: Maintain state and context between tool calls, supporting complex multi-step reasoning. Session Management: Save and load knowledge bases to disk for long-running or collaborative projects. Four Core Tools: loadProgram: Dynamically load Prolog predicates/rules. runPrologQuery: Execute logical queries and retrieve all solutions. saveSession: Persist the entire Prolog state to disk. loadSession: Restore a previous session instantly. Type Safety: All I/O is validated using Zod schemas. WebAssembly Runtime: Runs Trealla Prolog in a WASI environment for speed and portability. Modern Integration: Easily connect with Cline, Roo, Copilot, and other MCP-compatible tools. How It Works The server uses trealla-js to embed Trealla Prolog as a high-performance WASM interpreter in Node.js and browser environments. Each MCP tool call (such as runPrologQuery) is mapped to a persistent Prolog interpreter instance, ensuring context is maintained across queries. Integration Example Plug into Cline, Roo, Copilot for Neuro-Symbolic LLM development or any MCP-compatible host for production LLM-Prolog integration with this config: { "mcpServers": { "prolog-mcp": { "command": "node", "args": [ "prolog-mcp/dist/index.js" ], "disabled": false, "alwaysAllow": [ "loadProgram", "runPrologQuery", "saveSession", "loadSession" ], "timeout": 15 } } } This enables symbolic reasoning in your AI workflows with a single configuration step

What is Neuro-Symbolic AI?

Neuro-Symbolic AI represents a convergence of symbolic reasoning (exemplified by Prolog's logic programming) and neural networks' pattern recognition capabilities. This hybrid approach addresses the limitations of each paradigm while leveraging their complementary strengths. For a deeper dive in the concepts and history of symbolic AI, see excellent article here on dev.to by developers from Nucleoid, and reference to various readings on the bottom of this article.

Historical Foundations in Prolog

-

Symbolic AI roots: Prolog, developed in the 1970s, became the cornerstone of symbolic AI with its rule-based inference engine and declarative knowledge representation. It enabled:

- Logical deduction through Horn clauses

- Expert systems for medical diagnosis and natural language processing

- Precise reasoning with formal ontologies

-

Limitations of early symbolic systems:

- Struggled with uncertainty and real-world data

- Required manual rule engineering

- Lacked learning capabilities

Modern Neural Integration

Current Neuro-Symbolic systems combine Prolog-style reasoning with deep learning:

-

Architecture patterns:

- Neural frontends process raw data (images/text)

- Symbolic backends apply logical constraints

- Bidirectional knowledge flow through shared embeddings

-

Prolog's evolving role:

- Logic-as-a-service: Embedded Prolog engines validate neural outputs

- Dynamic knowledge graphs: Prolog rules guide neural attention mechanisms

- Explainability layer: Translates neural activations to human-readable proofs

Toolchain integration:

% Example: Hybrid image recognition

detect(X) :-

neural_classifier(X, Class),

symbolic_constraint(Class, Context),

validate(Class, Context).

Inductive Logic Programming (Progol) vs Neural Approaches

| Aspect | Progol/ILP | Neural Networks | Neuro-symbolic Bridge |

|---|---|---|---|

| Learning Paradigm | Rule induction from examples + BK | Statistical pattern recognition | Systems like Propper combine ILP with neural-based BK processing |

| Strengths | Interpretable rules, small data efficiency | Handles unstructured data, scalability | Neural-symbolic layers translate features to predicates |

| Weaknesses | Sensitive to noise, limited scalability | Black-box decisions, data hunger | Symbolic regularizers shape neural training |

| Integration | - | - | Joint inference and alternate neural/logical passes |

This synthesis enables systems that learn from both data samples and formal knowledge, achieving better generalization than either approach alone. Modern frameworks like Logical Neural Networks (LNN) demonstrate how gradient-based learning can coexist with precise logical semantics.

Introducing Prolog-MCP

The Prolog-MCP Server is a neurosymbolic AI backend that brings together Prolog's symbolic reasoning and the Model Context Protocol (MCP). It leverages the high-performance Trealla Prolog engine via trealla-js JavaScript/TypeScript bindings (npm: trealla).

What is Prolog-MCP?

Prolog-MCP acts as a modular MCP server, exposing Prolog’s logic programming as a set of tools that can be orchestrated by LLMs, IDEs, or agentic workflows. It is ideal for:

- Validating LLM outputs with symbolic rules

- Stateful, explainable knowledge bases

- Hybrid AI pipelines combining neural and symbolic reasoning

Key Features

- Persistent Prolog Sessions: Maintain state and context between tool calls, supporting complex multi-step reasoning.

- Session Management: Save and load knowledge bases to disk for long-running or collaborative projects.

-

Four Core Tools:

-

loadProgram: Dynamically load Prolog predicates/rules. -

runPrologQuery: Execute logical queries and retrieve all solutions. -

saveSession: Persist the entire Prolog state to disk. -

loadSession: Restore a previous session instantly.

-

- Type Safety: All I/O is validated using Zod schemas.

- WebAssembly Runtime: Runs Trealla Prolog in a WASI environment for speed and portability.

- Modern Integration: Easily connect with Cline, Roo, Copilot, and other MCP-compatible tools.

How It Works

The server uses trealla-js to embed Trealla Prolog as a high-performance WASM interpreter in Node.js and browser environments. Each MCP tool call (such as runPrologQuery) is mapped to a persistent Prolog interpreter instance, ensuring context is maintained across queries.

Integration Example

Plug into Cline, Roo, Copilot for Neuro-Symbolic LLM development or any MCP-compatible host for production LLM-Prolog integration with this config:

{

"mcpServers": {

"prolog-mcp": {

"command": "node",

"args": [

"prolog-mcp/dist/index.js"

],

"disabled": false,

"alwaysAllow": [

"loadProgram",

"runPrologQuery",

"saveSession",

"loadSession"

],

"timeout": 15

}

}

}

This enables symbolic reasoning in your AI workflows with a single configuration step.

Performance

| Operation | Avg. Latency | Memory Usage |

|---|---|---|

| Query Execution | 12ms | 18MB |

| Session Save | 45ms | 22MB |

| Program Load | 8ms | 15MB |

Getting Started

- Clone and install:

git clone https://github.com/adamrybinski/prolog-mcp

cd prolog-mcp

npm install

npm run build

- Run the server:

node dist/index.js

- Connect from your favorite AI tool using the integration config above.

Example Use Case

Validate medical safety rules with Prolog:

?- loadProgram("

safe_dosage(Drug, Patient) :-

patient_weight(Patient, W),

max_daily(Drug, Max),

current_dosage(Drug, D),

D =< Max * W.

").

?- runPrologQuery("safe_dosage(warfarin, patient_123)").

Serverless and Kubernetes Integration

Prolog-MCP is designed for efficient deployment in modern serverless and cloud-native environments, thanks to its WASI-compatible WebAssembly runtime.

Fermyon Spin Integration:

You can deploy Prolog-MCP as a near-server function using Fermyon Spin. This enables ultra-fast, event-driven logic execution at the edge or in microservices architectures.

KubeSpin and Kubernetes WASI Controller:

For large-scale or enterprise deployments, Prolog-MCP can be managed using KubeSpin or any Kubernetes WASI controller. This allows you to run hundreds of lightweight, isolated Prolog-MCP instances per cluster node, with rapid cold starts and strong sandboxing.

Benefits:

- Near-instant cold start (<50ms)

- High density (many instances per node)

- Security via WASI sandboxing

- Seamless integration into event-driven and microservices platforms

Why Trealla and trealla-js?

- Trealla Prolog is a fast, lean ISO Prolog interpreter targeting WASI, ideal for both serverless and embedded scenarios.

- trealla-js provides robust JavaScript/TypeScript bindings, making it easy to embed Prolog logic in Node.js or browser-based MCP servers.

- This combination ensures high performance, portability, and easy integration with modern developer tools.

MCP Architecture: Modular and Extensible

MCP (Model Context Protocol) defines a clear separation between hosts (IDEs, LLMs), clients, servers, and data sources. Prolog-MCP fits into this architecture as a lightweight, tool-focused server, exposing symbolic reasoning to any AI host via a simple, standardized protocol.

Example application

For a practical application of Nuero-Symbolic AI in content automation, see my previous article on NeuSymPublish: a Neuro-Symbolic omnichannel publishing platform, which demonstrates how logic-driven agents can orchestrate autopublishing workflows across multiple channels using a hybrid of neural and symbolic reasoning.

References and Further Reading

- Next in the journey: Neuro-symbolic AI (dev.to)

- Neuro-Symbolic Artificial Intelligence: The State of the Art (IOS Press)

- Handbook on Neurosymbolic AI and Knowledge Graphs

- Neuro-Symbolic AI (Packt book)

- Wikipedia: Neuro-symbolic AI

- Trealla Prolog

- trealla-js npm package

- MCP Protocol

Ready to add symbolic reasoning to your AI workflows? Try Prolog-MCP today and power up your agentic applications with explainable, persistent logic!

Let me know in the comments how you’d use symbolic reasoning in your AI projects, or if you have questions about neurosymbolic architectures!

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)