OpenTelemetry Tracing for File Downloads from an S3 Bucket via API Gateway and Lambda Functions


Introduction:

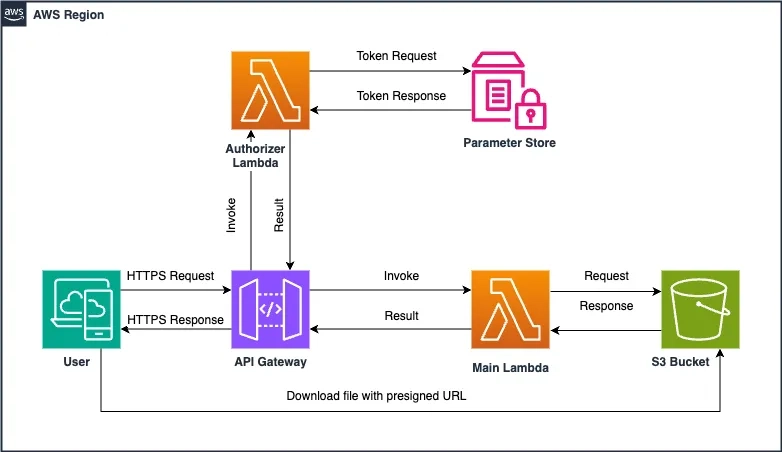

This post demonstrates how to build a serverless solution using AWS services like API Gateway, Lambda functions, and S3 buckets to enable file downloads using presigned URLs. By integrating OpenTelemetry tracing, we can gain deep insights into each request’s journey. The focus is on creating a secure and efficient method for downloading files from an S3 bucket while tracing the interactions from API Gateway (REST API) through Lambda to S3.

About the project:

In this project, we build a serverless architecture that allows users to securely download files from an S3 bucket via an API Gateway endpoint. API Gateway is secured using a Lambda Authorizer to ensure only authorized requests are processed. The architecture also incorporates OpenTelemetry tracing, providing detailed visibility into each request’s lifecycle — from API Gateway to Lambda functions and interactions with the S3 bucket. All resources (except the parameter in the Parameter Store) were created with CloudFormation.

The Lambda functions use the AWS Distro for OpenTelemetry (ADOT) Lambda layer, which provides the OpenTelemetry SDK and the instrumentation wrapper needed for tracing. You can find more information about setting up this layer here.

The core components of the project are:

API Gateway: Serves as the entry point for API requests, secured with a Lambda Authorizer.

Lambda Functions: Handle the business logic, including:

- Authenticating requests via the Lambda Authorizer.

- Generating presigned URLs for downloading files from the S3 bucket.

- Handling error cases, such as incorrect API Gateway resources or invalid file names.

S3 Bucket: Stores the files for download.

OpenTelemetry Tracing: Tracks and logs the flow of requests for debugging and performance optimization.
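The main handler in the template below accepts two event shapes, which is why it checks `routeKey` as well as `httpMethod` and `path`. REST API proxy events carry the method and path as separate fields, while HTTP API (payload format 2.0) events carry a combined `routeKey` such as `GET /list`. Here is a minimal sketch of that dispatch decision on its own, using trimmed-down sample events. `resolve_route` and `KNOWN_ROUTES` are hypothetical names, not part of the project:

```python
from typing import Any, Optional

# Routes served by the main Lambda function (hypothetical constant for this sketch)
KNOWN_ROUTES = {"GET /list", "GET /download"}


def resolve_route(event: dict[str, Any]) -> Optional[str]:
    """Return a normalized "METHOD /path" key, or None for an unknown resource.

    HTTP API (payload 2.0) events provide routeKey directly; REST API proxy
    events provide httpMethod and path as separate fields.
    """
    route_key = event.get("routeKey")
    if route_key in KNOWN_ROUTES:
        return route_key
    method, path = event.get("httpMethod"), event.get("path")
    if method and path:
        candidate = f"{method} {path}"
        if candidate in KNOWN_ROUTES:
            return candidate
    return None


if __name__ == "__main__":
    rest_event = {"httpMethod": "GET", "path": "/list"}   # REST API style
    http_event = {"routeKey": "GET /download"}            # HTTP API style
    bad_event = {"httpMethod": "GET", "path": "/lists"}   # mistyped resource
    for ev in (rest_event, http_event, bad_event):
        print(resolve_route(ev))
```

A `None` result corresponds to the handler's 404 "Resource name is incorrect." branch.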

The main Lambda function and its IAM role, as configured in the infrastructure/root.yaml CloudFormation template (excerpt):

    Parameters:
      S3BucketName:
        Type: String
        Default: 'store-files-20241010'
      StageName:
        Type: String
        Default: 'dev'
      OtelLambdaLayerArn:
        Type: String
        # Region-specific: the layer must be in the same region as the function
        Default: 'arn:aws:lambda:eu-central-1:901920570463:layer:aws-otel-python-amd64-ver-1-25-0:1'

    Resources:
      MainLambdaFunction:
        DependsOn:
          - S3Bucket
        Type: AWS::Lambda::Function
        Properties:
          FunctionName: MainLambdaFunction
          Description: Makes requests to the S3 bucket
          Runtime: python3.12
          Handler: index.lambda_handler
          Role: !GetAtt MainLambdaExecutionRole.Arn
          Timeout: 30
          MemorySize: 512
          Environment:
            Variables:
              S3_BUCKET_NAME: !Ref S3BucketName
              OTEL_PROPAGATORS: xray
              # Wrapper script shipped in the ADOT layer; it starts the function with OpenTelemetry instrumentation
              AWS_LAMBDA_EXEC_WRAPPER: /opt/otel-instrument
          TracingConfig:
            Mode: Active
          Layers:
            - !Ref OtelLambdaLayerArn
          Code:
            ZipFile: |
              import json
              import logging
              import os

              import boto3
              from botocore.exceptions import ClientError
              from opentelemetry import trace

              # Initialize logging
              logger = logging.getLogger()
              logger.setLevel(logging.INFO)

              # OpenTelemetry tracer (the tracer provider is set up by the ADOT layer)
              tracer = trace.get_tracer(__name__)

              # Create the S3 client once so warm invocations reuse it
              s3_client = boto3.client('s3')

              def lambda_handler(event, context):
                  # Start a span that covers the whole request
                  with tracer.start_as_current_span("main-handler-span") as span:
                      # Log the entire event received
                      logger.info('Received event: %s', json.dumps(event))

                      # HTTP APIs send routeKey; REST APIs send httpMethod and path
                      route_key = event.get("routeKey")
                      path = event.get("path")
                      http_method = event.get("httpMethod")
                      logger.info('Route Key: %s', route_key)
                      logger.info('HTTP Method: %s', http_method)
                      logger.info('Path: %s', path)

                      # Add tracing attributes; None is not a valid attribute value, so skip missing ones
                      attributes = {"routeKey": route_key, "http.method": http_method, "http.path": path}
                      for name, value in attributes.items():
                          if value is not None:
                              span.set_attribute(name, value)

                      # Handle routes based on HTTP method and path
                      if route_key == "GET /list" or (http_method == "GET" and path == "/list"):
                          logger.info("Handling /list request")
                          return list_objects(span)
                      elif route_key == "GET /download" or (http_method == "GET" and path == "/download"):
                          logger.info("Handling /download request")
                          return generate_presigned_url(event, span)
                      else:
                          # Return a specific error message for invalid routes
                          logger.error("Invalid route: %s", route_key or f"{http_method} {path}")
                          return {
                              'statusCode': 404,
                              'body': json.dumps({"error": "Resource name is incorrect."})
                          }

              def list_objects(span):
                  bucket_name = os.environ.get('S3_BUCKET_NAME')
                  try:
                      logger.info("Listing objects in bucket: %s", bucket_name)
                      response = s3_client.list_objects_v2(Bucket=bucket_name)
                      if 'Contents' not in response:
                          logger.info("No objects found in bucket.")
                          return {
                              'statusCode': 200,
                              'body': json.dumps({"objects": []})
                          }
                      object_keys = [{'File': obj['Key']} for obj in response['Contents']]
                      logger.info("Found %d objects in bucket.", len(object_keys))
                      span.set_attribute("s3.object_count", len(object_keys))
                      return {
                          'statusCode': 200,
                          'body': json.dumps({"objects": object_keys})
                      }
                  except ClientError as e:
                      logger.error("Error listing objects in the bucket: %s", e)
                      return {
                          'statusCode': 500,
                          'body': json.dumps({"error": "Error listing objects in the bucket."})
                      }

              def generate_presigned_url(event, span):
                  bucket_name = os.environ.get('S3_BUCKET_NAME')
                  query_string_params = event.get("queryStringParameters")
                  if not query_string_params:
                      logger.error("Query string parameters are missing or request is incorrect.")
                      return {
                          'statusCode': 400,
                          'body': json.dumps({"error": "Request is incorrect. Please provide valid query parameters."})
                      }

                  object_key = query_string_params.get("objectKey")
                  logger.info("Bucket name: %s", bucket_name)
                  logger.info("Requested object key: %s", object_key)
                  if not object_key:
                      logger.error("objectKey is missing in queryStringParameters.")
                      return {
                          'statusCode': 400,
                          'body': json.dumps({"error": "objectKey is required."})
                      }

                  # Check if the object exists. HEAD reports a missing key as 404 because
                  # the role has s3:ListBucket; without that permission S3 returns 403.
                  try:
                      logger.info("Checking if object %s exists in bucket %s", object_key, bucket_name)
                      s3_client.head_object(Bucket=bucket_name, Key=object_key)
                  except ClientError as e:
                      if e.response['Error']['Code'] == '404':
                          logger.error("Object %s does not exist in bucket %s", object_key, bucket_name)
                          return {
                              'statusCode': 404,
                              'body': json.dumps({"error": "Object name is incorrect or object doesn't exist."})
                          }
                      else:
                          logger.error("Error checking object existence in bucket: %s", e)
                          return {
                              'statusCode': 500,
                              'body': json.dumps({"error": "Error checking object existence."})
                          }

                  # Generate presigned URL if the object exists
                  try:
                      logger.info("Generating presigned URL for object: %s", object_key)
                      presigned_url = s3_client.generate_presigned_url(
                          'get_object',
                          Params={'Bucket': bucket_name, 'Key': object_key},
                          ExpiresIn=900  # seconds (15 minutes)
                      )
                      # Don't log the URL itself: anyone holding it can download the object until it expires
                      logger.info("Presigned URL generated for object: %s", object_key)
                      span.set_attribute("s3.object_key", object_key)
                      return {
                          'statusCode': 200,
                          'body': json.dumps({"url": presigned_url})
                      }
                  except ClientError as e:
                      logger.error("Error generating presigned URL for object %s: %s", object_key, e)
                      return {
                          'statusCode': 500,
                          'body': json.dumps({"error": "Error generating presigned URL."})
                      }

      MainLambdaExecutionRole:
        Type: AWS::IAM::Role
        Properties:
          RoleName: MainLambdaExecutionRole
          AssumeRolePolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - lambda.amazonaws.com
                Action:
                  - sts:AssumeRole
          ManagedPolicyArns:
            - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
          Policies:
            - PolicyName: S3BucketAccessPolicy
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: Allow
                    Action:
                      # Lets HeadObject return 404 (instead of 403) for missing keys
                      - s3:ListBucket
                      # Authorizes both GetObject and HeadObject; there is no separate s3:HeadObject action
                      - s3:GetObject
                    Resource:
                      - !Sub arn:${AWS::Partition}:s3:::${S3BucketName}/*
                      - !Sub arn:${AWS::Partition}:s3:::${S3BucketName}
            - PolicyName: XRayAccessPolicy
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: Allow
                    Action:
                      - xray:PutTraceSegments
                      - xray:PutTelemetryRecords
                    Resource: "*"

Prerequisites:

Ensure the following prerequisites are in place:

- An AWS account with sufficient permissions to create and manage resources.

- The AWS CLI installed and configured with credentials on the local machine.

Deployment:

1. Clone the repository and change into its directory.

git clone https://gitlab.com/Andr1500/api-gateway-dowload-from-s3.git

cd api-gateway-dowload-from-s3

2. Create an authorization token in the AWS Systems Manager Parameter Store.

aws ssm put-parameter --name "AuthorizationLambdaToken" --value "token_value_secret" --type "SecureString"
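The authorizer's source is not included in this post. The following is only an illustration. It assumes a REST API TOKEN authorizer that compares the bearer token with the `AuthorizationLambdaToken` parameter created above. The helper names, the `principalId` value, and the injectable `fetch_token` argument are all assumptions. The argument exists only so the logic can be exercised without AWS:

```python
import hmac
from typing import Any, Callable, Optional

PARAMETER_NAME = "AuthorizationLambdaToken"  # created with `aws ssm put-parameter`
_cached_token: Optional[str] = None


def fetch_token_from_ssm() -> str:
    """Read the SecureString once per execution environment (boto3 imported lazily)."""
    global _cached_token
    if _cached_token is None:
        import boto3
        ssm = boto3.client("ssm")
        response = ssm.get_parameter(Name=PARAMETER_NAME, WithDecryption=True)
        _cached_token = response["Parameter"]["Value"]
    return _cached_token


def is_authorized(header_value: Optional[str], expected: str) -> bool:
    """Accept only 'Bearer <token>' where <token> equals the stored value."""
    prefix = "Bearer "
    if not header_value or not header_value.startswith(prefix):
        return False
    supplied = header_value[len(prefix):]
    # Constant-time comparison so the token can't be guessed from response timing
    return hmac.compare_digest(supplied.encode(), expected.encode())


def build_policy(effect: str, method_arn: str) -> dict[str, Any]:
    """Authorizer response: an IAM policy allowing or denying execute-api:Invoke."""
    return {
        "principalId": "api-client",  # hypothetical principal name
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [{
                "Action": "execute-api:Invoke",
                "Effect": effect,
                "Resource": method_arn,
            }],
        },
    }


def lambda_handler(event: dict[str, Any], context: Any,
                   fetch_token: Callable[[], str] = fetch_token_from_ssm) -> dict[str, Any]:
    # TOKEN authorizers receive the Authorization header value in 'authorizationToken'
    allowed = is_authorized(event.get("authorizationToken"), fetch_token())
    return build_policy("Allow" if allowed else "Deny", event["methodArn"])
```

Returning a `Deny` policy makes API Gateway answer 403. Raising `Exception("Unauthorized")` instead produces a 401.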

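3. Fill in all the necessary parameters in the infrastructure/root.yaml CloudFormation template, then create the CloudFormation stack. S3 bucket names are globally unique, so choose your own S3BucketName. Use the ADOT layer ARN for the region you deploy to, because Lambda layers must be in the same region as the function.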

    aws cloudformation create-stack \
      --stack-name api-gw-dowload-from-s3 \
      --template-body file://infrastructure/root.yaml \
      --capabilities CAPABILITY_NAMED_IAM --disable-rollback

4. Retrieve the stage's invoke URLs from the stack outputs.

aws cloudformation describe-stacks --stack-name api-gw-dowload-from-s3 --query "Stacks[0].Outputs"

5. Copy some files to the S3 bucket for testing (replace s3_bucket_name with the name of your bucket).

aws s3 cp images/apigw_lambda_s3.png s3://s3_bucket_name/apigw_lambda_s3.png

6. Test the API and download the file with curl.

IMPORTANT! After the infrastructure is created, it can take some time for the new bucket's DNS name to propagate. During this time, requests for an object made with a presigned URL on the global endpoint (s3_bucket_name.s3.amazonaws.com) might receive a “Temporary Redirect” (HTTP 307) response. In my case, specifying the region in the domain name did not help either. Downloading with the presigned URL only worked correctly one day later.

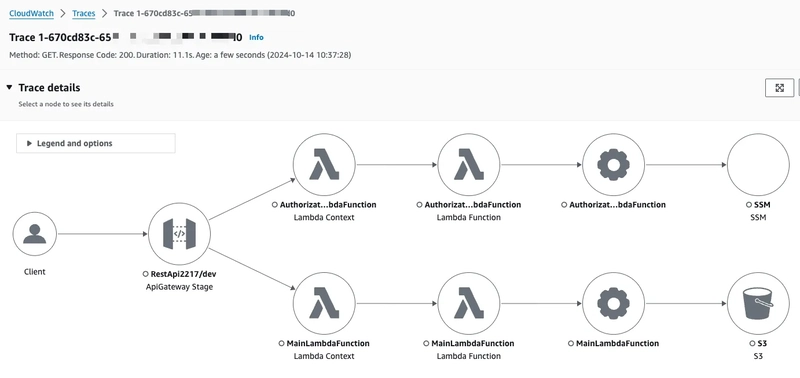

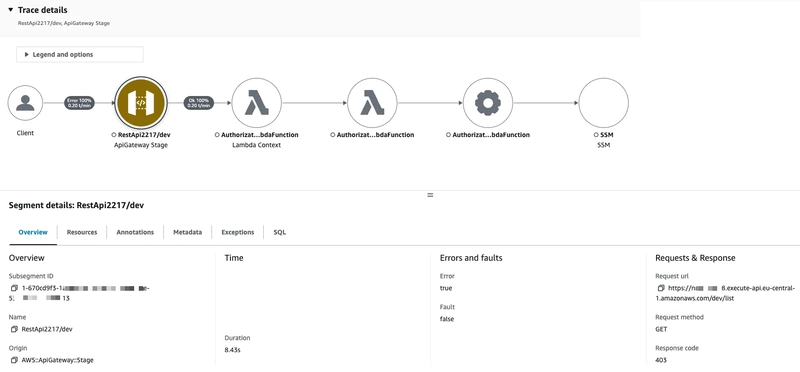

To view the X-Ray trace map and segments timeline, navigate to the AWS Console: CloudWatch -> X-Ray traces -> Traces. In the Traces section, a list of trace IDs will be displayed. Clicking on a trace ID will reveal the Trace map, Segments timeline, and associated Logs.
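Logging the trace ID from inside the function can make it easier to find the trace for a particular request in that list. This assumes the ADOT layer emits X-Ray-compatible trace IDs, which is what lets the traces appear in X-Ray. Such an ID's 32 hex digits map to the console format `1-<8 hex digits>-<24 hex digits>`. The sketch also reads the `Root` field of the `_X_AMZN_TRACE_ID` header that Lambda sets when active tracing is enabled. Both helper names are hypothetical:

```python
import os
from typing import Optional


def otel_to_xray_trace_id(trace_id: int) -> str:
    """Format a 128-bit OpenTelemetry trace ID the way the X-Ray console shows it."""
    hex_id = format(trace_id, "032x")
    return f"1-{hex_id[:8]}-{hex_id[8:]}"


def xray_root_from_header(header: Optional[str]) -> Optional[str]:
    """Extract the Root=... value from an X-Ray trace header."""
    if not header:
        return None
    for part in header.split(";"):
        key, _, value = part.strip().partition("=")
        if key == "Root":
            return value
    return None


if __name__ == "__main__":
    print(otel_to_xray_trace_id(0x5759E988BD862E3FE1BE46A994272793))
    print(xray_root_from_header(os.environ.get("_X_AMZN_TRACE_ID")))
```

In the main handler, a line such as `logger.info("Trace ID: %s", otel_to_xray_trace_id(span.get_span_context().trace_id))` would put the searchable ID next to the other log entries.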

export APIGW_TOKEN='token_value_secret'

curl -X GET -H "Authorization: Bearer $APIGW_TOKEN" "https://api_id.execute-api.eu-central-1.amazonaws.com/dev/list"

curl -X GET -H "Authorization: Bearer $APIGW_TOKEN" "https://api_id.execute-api.eu-central-1.amazonaws.com/dev/download?objectKey=apigw_lambda_s3.png"

curl -O "https://s3_bucket_name.s3.amazonaws.com/apigw_lambda_s3.png?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=ASIASAQWERTY123456%2Feu-central-1%2Fs3%2Faws4_request&X-Amz-Date=20241003T125740Z&X-Amz-Expires=600&X-Amz-SignedHeaders=host&X-Amz-Security-Token=token_value&X-Amz-Signature=signature_value"
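For scripted testing, the same request-then-download sequence can be done from Python using only the standard library. This is a sketch under several assumptions. `API_BASE` reuses the placeholder `api_id` host and `dev` stage from the curl commands. The token is read from the `APIGW_TOKEN` variable exported above. The helper names are hypothetical:

```python
import json
import os
import urllib.parse
import urllib.request
from typing import Optional

# Placeholder base URL from the curl examples: api_id host, eu-central-1, dev stage
API_BASE = "https://api_id.execute-api.eu-central-1.amazonaws.com/dev"


def build_api_request(path: str, token: str,
                      params: Optional[dict] = None) -> urllib.request.Request:
    """Build a GET request carrying the Bearer token the Lambda Authorizer checks."""
    url = f"{API_BASE}{path}"
    if params:
        url += "?" + urllib.parse.urlencode(params)
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}, method="GET")


def download(object_key: str, token: str, dest_dir: str = ".") -> str:
    """Ask the API for a presigned URL, then fetch the object with it."""
    req = build_api_request("/download", token, {"objectKey": object_key})
    with urllib.request.urlopen(req, timeout=30) as resp:
        presigned_url = json.load(resp)["url"]
    dest = os.path.join(dest_dir, os.path.basename(object_key))
    # The presigned URL carries its own signature, so no Authorization header is sent
    with urllib.request.urlopen(presigned_url, timeout=60) as resp, \
            open(dest, "wb") as out:
        out.write(resp.read())
    return dest


if __name__ == "__main__":
    token = os.environ.get("APIGW_TOKEN")
    if token:  # only call the API when a token has been exported
        print(download("apigw_lambda_s3.png", token))
```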

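7. Clean up resources. After testing, delete the token from the Parameter Store and delete the CloudFormation stack. CloudFormation cannot delete an S3 bucket that still contains objects, so empty the bucket first, for example with aws s3 rm s3://s3_bucket_name --recursive.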

aws ssm delete-parameter --name "AuthorizationLambdaToken"

aws cloudformation delete-stack --stack-name api-gw-dowload-from-s3

Conclusion:

By leveraging AWS services like API Gateway, Lambda, and S3, along with the power of OpenTelemetry tracing, this project provides a robust solution for tracing file downloads. Using CloudFormation to manage the infrastructure as code ensures easy deployment and repeatability.

