North Korean IT Workers Using Real-time Deepfake to Infiltrate Organizations via Remote Job


In a concerning evolution of cyber infiltration tactics, North Korean IT workers have begun deploying sophisticated real-time deepfake technology during remote job interviews to secure positions within organizations worldwide.

This advanced technique allows threat actors to present convincing synthetic identities during video interviews, enabling them to bypass traditional identity verification processes and infiltrate companies for financial gain and potential espionage.

The approach marks a significant advance over earlier methods, in which DPRK actors relied primarily on static fake profiles and stolen credentials to secure remote positions.

The Democratic People’s Republic of Korea (DPRK) has consistently demonstrated interest in identity manipulation techniques, previously creating synthetic identities supported by compromised personal information.

This latest methodology employs real-time facial manipulation during video interviews, allowing a single operator to potentially interview for the same position multiple times using different synthetic personas.


Additionally, it helps operatives avoid being identified and added to security bulletins and wanted notices issued by international law enforcement agencies.

Researchers at Palo Alto Networks’ Unit 42 identified this trend after analyzing indicators shared in The Pragmatic Engineer newsletter, which documented a case in which a Polish AI company encountered two separate deepfake candidates.

The researchers noted that the same individual likely operated both personas, since the second candidate appeared more confident during the technical interview, having already experienced its format.

Real-time Deepfake

Further evidence emerged when Unit 42 analyzed a breach of Cutout.pro, an AI image manipulation service, revealing numerous email addresses likely tied to DPRK IT worker operations.


The investigation uncovered multiple examples of face-swapping experiments used to create convincing professional headshots for synthetic identities.

What makes this threat particularly concerning is how accessible the technology is: researchers demonstrated that a single person with no prior image-manipulation experience could create a synthetic identity suitable for job interviews in just 70 minutes using widely available hardware and software.

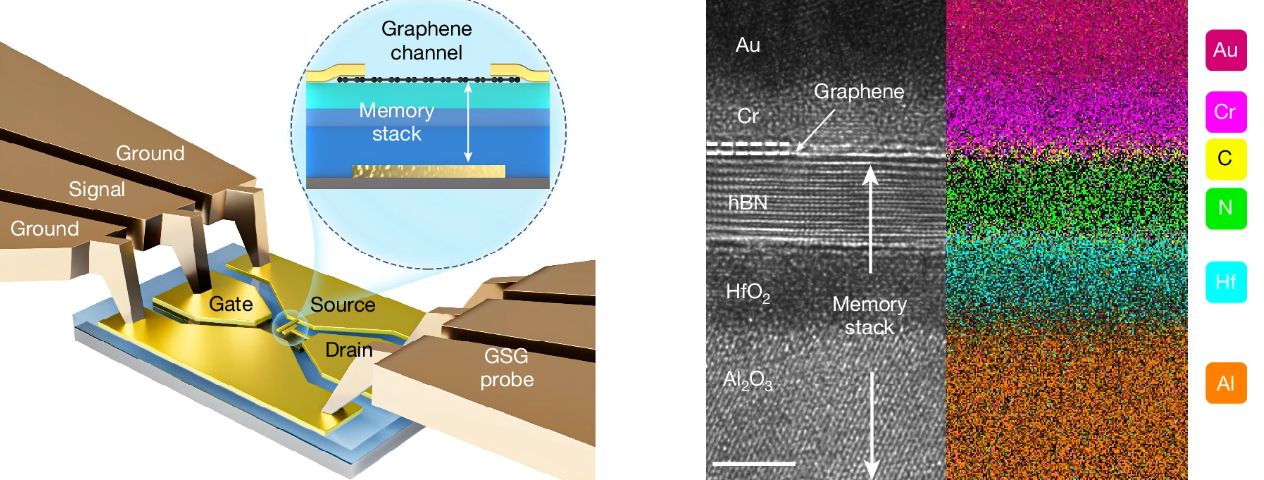

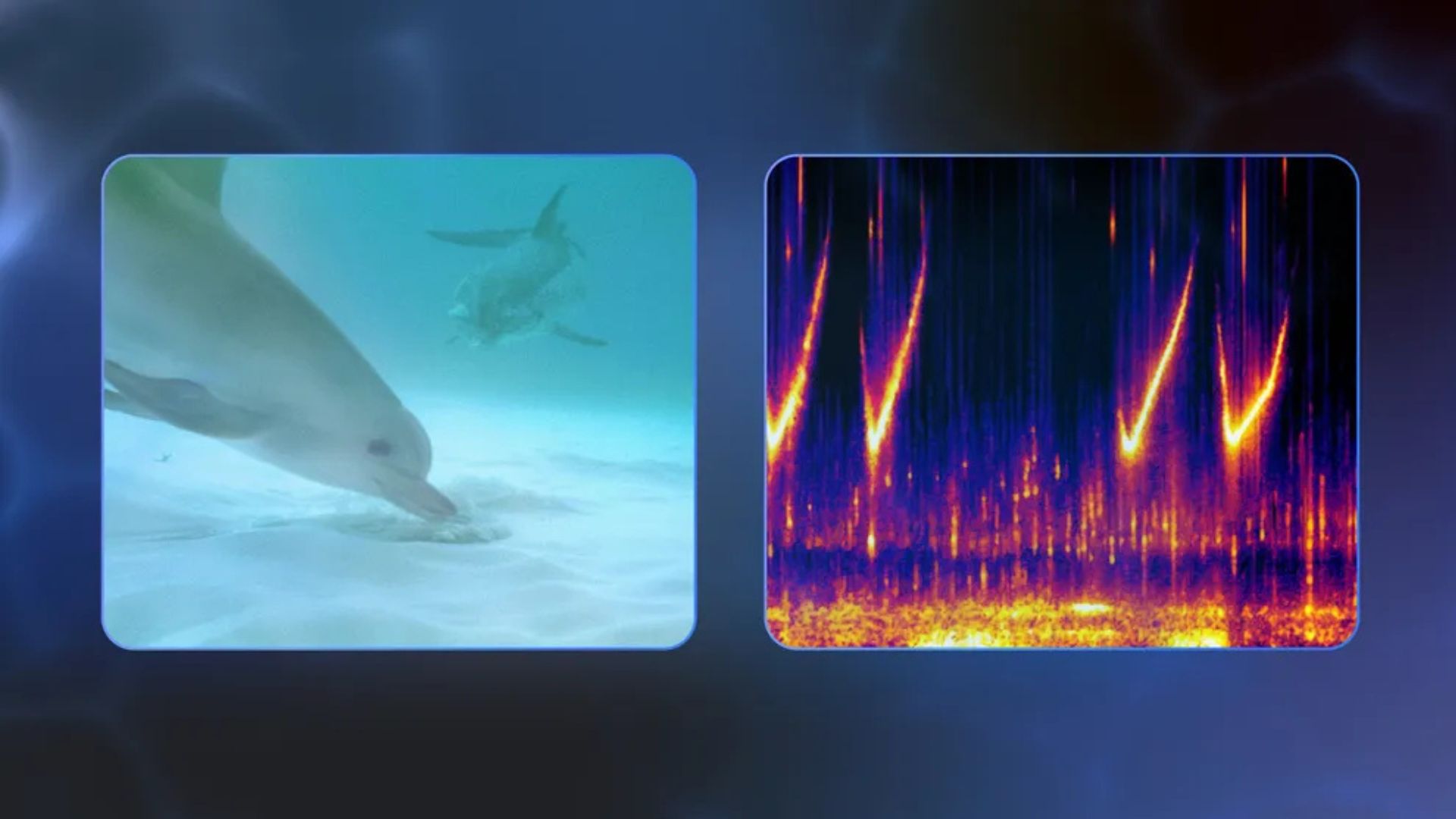

These deepfakes typically combine generative adversarial networks (GANs), which create realistic face images, with facial landmark tracking software that maps the operator’s expressions onto the synthetic face in real time.

The resulting video feed is then routed through virtual camera software that presents the deepfake as a standard webcam input to video conferencing applications.

Real-time deepfake systems do, however, have technical limitations that create opportunities for detection.

Most notably, these systems struggle with rapid head movements, occlusions, changes in lighting, and audio-visual synchronization.

When an operator’s hand passes over their face, the deepfake system typically fails to properly reconstruct the partially obscured features, creating noticeable artifacts. Similarly, sudden lighting changes reveal inconsistencies in rendering, particularly around facial edges.
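The lighting weakness described above suggests a simple screening heuristic. The Python sketch below is a hypothetical illustration, not Unit 42’s method. It assumes an upstream face detector (not shown) already supplies, for each video frame, the mean luminance of the face region and of the background. It also assumes that the rendering inconsistency shows up as the face failing to brighten or darken along with the scene. `FrameStats`, `flag_lighting_mismatches`, and both thresholds are invented for illustration and are untuned.

```python
from dataclasses import dataclass


@dataclass
class FrameStats:
    """Per-frame luminance measurements on a 0-255 scale.

    Hypothetical input: assumed to come from an upstream face detector
    that is not part of this sketch.
    """
    face_luma: float        # mean luminance inside the detected face region
    background_luma: float  # mean luminance of the surrounding scene


def flag_lighting_mismatches(frames, scene_jump=30.0, min_follow_ratio=0.5):
    """Return indices of frames where the scene brightness changed sharply
    but the face brightness did not follow in the same direction and by a
    comparable fraction. Thresholds are illustrative assumptions.
    """
    flagged = []
    for i in range(1, len(frames)):
        prev, cur = frames[i - 1], frames[i]
        scene_delta = cur.background_luma - prev.background_luma
        face_delta = cur.face_luma - prev.face_luma
        if abs(scene_delta) < scene_jump:
            continue  # no sudden lighting change between these two frames
        # A real face lit by the same source should change in the same
        # direction as the scene; a negative or small ratio means it did not.
        follow_ratio = face_delta / scene_delta
        if follow_ratio < min_follow_ratio:
            flagged.append(i)
    return flagged
```

In practice, an interviewer could ask the candidate to switch a nearby lamp on or off and then inspect any flagged frames by eye. A heuristic this crude will produce false positives (for example, under backlighting), so it can only prompt a closer look and cannot serve as a verdict.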

To combat this emerging threat, organizations should implement multi-layered verification procedures throughout the hiring process. These should include requiring candidates to perform specific movements that challenge deepfake software, such as profile turns, hand gestures near the face, or the “ear-to-shoulder” technique (tilting the head until an ear nears the shoulder).
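These movement checks are harder to rehearse if they are requested in an unpredictable order. The following Python sketch is a hypothetical interviewer helper, not a tool from the report. The prompt wording and the decision to randomize the order are assumptions. The helper draws distinct prompts using the standard library’s `secrets.SystemRandom`, which is backed by the operating system’s cryptographically secure random source.

```python
import secrets

# Prompts based on the movements named in the article; wording is illustrative.
CHALLENGES = [
    "turn your head to show your left profile",
    "turn your head to show your right profile",
    "pass your hand slowly across your face",
    "tilt your head so your ear moves toward your shoulder",
]


def build_challenge_sequence(count=3):
    """Return `count` distinct challenges in an unpredictable order, so a
    candidate cannot prepare or pre-record the exact sequence."""
    if not 0 < count <= len(CHALLENGES):
        raise ValueError("count must be between 1 and the number of challenges")
    # sample() picks distinct items without replacement, using OS randomness.
    return secrets.SystemRandom().sample(CHALLENGES, count)
```

For example, `build_challenge_sequence(2)` returns two different prompts, such as a profile turn followed by a hand pass. The interviewer then watches for the artifacts described above as each movement is performed.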


The post North Korean IT Workers Using Real-time Deepfake to Infiltrate Organizations via Remote Job appeared first on Cyber Security News.
