Mercury Coder - A Quick Test of Diffusion Language Model

I have recently touched on how diffusion/transformer models come into new domains - specifically the February news on Larage Language Diffusion models (LLaDA, Mercury). Last weekend, I received an invitation from Inception Labs to take part in beta testing their Mercury Coder Small model - one of a few representatives of the breed of dLLMs. The model is presented as (a) based on novel non-transformer tech, (b) matching the performance of SOTA SLMs (think OpenAI GPT Mini, Anthropic Haiku, and Google Gemini Flash) and (c) being 5-10x faster. With performance I assume how smart the model is and how good the answers it produces are. Speed tells how many tokens per second a model can generate. Speed The key selling point from the Mercury introduction post was the generation speed - a 5-10x increase over similar-sized models. That's what I decided to test first. I have used a simple Python UI that supports OpenAI compatible endpoints and can show tokens per second metric after the response is received: I've obtained a stable 370 token/second generation with almost 0 variation. What's also curious is that the model can only work with the temperature set to 0 and it always produced the same answer: mercury-coder-small / 1102 tokens TTF Chunk: 0.85s / TPS: 369.13 TTF Chunk: 0.73s / TPS: 369.86 TTF Chunk: 0.77s / TPS: 368.73 TTF Chunk: 0.77s / TPS: 370.96 On the one hand, it is faster than the 200 tokens we've seen from models like GPT-4o Mini and Gemini 2.0 Flash. On the other hand, it's twice lower than the advertised 737 tok/s. I have also tried a simple curl request with no streaming yet received the same speed. A side note. Mercury provides OpenAI compatible chat completions API (but who doesn't these days..). It turned out it supported streaming responses. And that was a surprise for me. The key differentiator of dLLM from traditional LLMs is how they produce a large block of text and then gradually change parts in it (the diffusion effect), rather than spill out tokens one by one. I don't see how streaming can be implemented in that case except by completing the full generation in the backend and then simulating the streaming (at a significant slowdown). For comparison, recently I got my hands on GPT 4.1 Nano. With the same prompt, it produced a reply of similar size (1000 tokens), and the speed fluctuated between 150 and 340 tokens per second: gpt-4.1-nano-2025-04-14 / 1096-1251 tokens TTF Chunk: 0.31s / TPS: 341.31 TTF Chunk: 0.45s / TPS: 305.91 TTF Chunk: 0.34s / TPS: 268.98 TTF Chunk: 0.36s / TPS: 200.13 TTF Chunk: 0.45s / TPS: 132.89 TTF Chunk: 0.45s / TPS: 150.13 Performance I didn't use the model as a daily driver (in IDE or as a chatbot) and would not pretend to have a comprehensive answer to how good it is... First of all, I ran a few prompts in chat, and it seemed OK, generating code snippets and giving basic answers. Then I ran it through my own LLM Chess eval. It took place in the middle-bottom of the list, a typical SLM struggling to adhere to game protocol making on average 10 moves before breaking the game loop: token accounting is wrong, the model is not as verbose The major conclusion is that the new tech doesn't solve the old problem of LLM hallucinations. The common failure mode is yielding illegal moves. See in the example dialog traces below how the model gets the list of legal moves and still fails to pick one (making up a combination not on the list): -------------------------------------------------------------------------------- Proxy (to Player_Black): g8h8,f8e7,f8d6,f8c5,f8b4,f8a3,e8e7,d8e7,d8f6,d8g5,d8h4,c8b7,b8c6,b8a6,e4f6,e4d6,e4g5,e4c5,e4g3,e4c3,e4f2,e4d2,h7h6,g7g6,f7f6,d7d6,c7c6,a7a6,h7h5,g7g5,f7f5,d7d5,c7c5,a7a5 -------------------------------------------------------------------------------- Player_Black (to Proxy): Thank you for providing the list of legal moves. After analyzing the position and the available moves, I'll make a move to develop my pieces and control the center of the board. I'll move my knight from g8 to h6. Please provide the command 'make_move g8h6'. -------------------------------------------------------------------------------- Proxy (to Player_Black): Failed to make move: illegal uci: 'g8h6' in rnbqkbr1/pQpp1ppp/8/4p3/2P1n3/N4P1N/PP1P2PP/R1B1KB1R b KQq - 0 11 I have also run the model through LiveBench (public dataset from November 2024) which I had readily configured for local runs: category average coding data_analysis instruction_following language math reasoning model google_gemma-3-27b-it@iq4_xs 50.7 36.9 52.8 82.1 31.9 53.5 47.3 gpt-4.1-nano-2025-04-14 42.7 40.6 46.0 60.0 24.0 46.8 39.1 gemma-2-27b-it@iq4_xs 39.8 36.6 48.1 67.6 29.5 25.0 32.0 mercury-coder-small

I have recently touched on how diffusion/transformer models come into new domains - specifically the February news on Larage Language Diffusion models (LLaDA, Mercury).

Last weekend, I received an invitation from Inception Labs to take part in beta testing their Mercury Coder Small model - one of a few representatives of the breed of dLLMs.

The model is presented as (a) based on novel non-transformer tech, (b) matching the performance of SOTA SLMs (think OpenAI GPT Mini, Anthropic Haiku, and Google Gemini Flash) and (c) being 5-10x faster.

With performance I assume how smart the model is and how good the answers it produces are. Speed tells how many tokens per second a model can generate.

Speed

The key selling point from the Mercury introduction post was the generation speed - a 5-10x increase over similar-sized models. That's what I decided to test first.

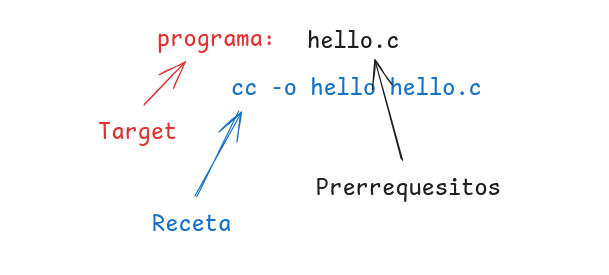

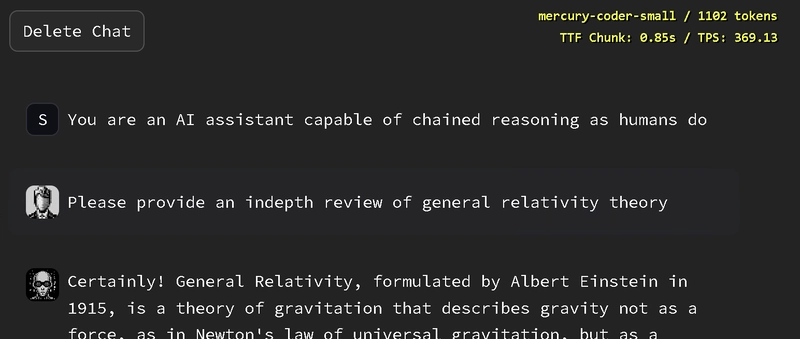

I have used a simple Python UI that supports OpenAI compatible endpoints and can show tokens per second metric after the response is received:

I've obtained a stable 370 token/second generation with almost 0 variation. What's also curious is that the model can only work with the temperature set to 0 and it always produced the same answer:

mercury-coder-small / 1102 tokens

TTF Chunk: 0.85s / TPS: 369.13

TTF Chunk: 0.73s / TPS: 369.86

TTF Chunk: 0.77s / TPS: 368.73

TTF Chunk: 0.77s / TPS: 370.96

On the one hand, it is faster than the 200 tokens we've seen from models like GPT-4o Mini and Gemini 2.0 Flash. On the other hand, it's twice lower than the advertised 737 tok/s. I have also tried a simple curl request with no streaming yet received the same speed.

A side note. Mercury provides OpenAI compatible chat completions API (but who doesn't these days..). It turned out it supported streaming responses. And that was a surprise for me. The key differentiator of dLLM from traditional LLMs is how they produce a large block of text and then gradually change parts in it (the diffusion effect), rather than spill out tokens one by one. I don't see how streaming can be implemented in that case except by completing the full generation in the backend and then simulating the streaming (at a significant slowdown).

For comparison, recently I got my hands on GPT 4.1 Nano. With the same prompt, it produced a reply of similar size (1000 tokens), and the speed fluctuated between 150 and 340 tokens per second:

gpt-4.1-nano-2025-04-14 / 1096-1251 tokens

TTF Chunk: 0.31s / TPS: 341.31

TTF Chunk: 0.45s / TPS: 305.91

TTF Chunk: 0.34s / TPS: 268.98

TTF Chunk: 0.36s / TPS: 200.13

TTF Chunk: 0.45s / TPS: 132.89

TTF Chunk: 0.45s / TPS: 150.13

Performance

I didn't use the model as a daily driver (in IDE or as a chatbot) and would not pretend to have a comprehensive answer to how good it is...

First of all, I ran a few prompts in chat, and it seemed OK, generating code snippets and giving basic answers.

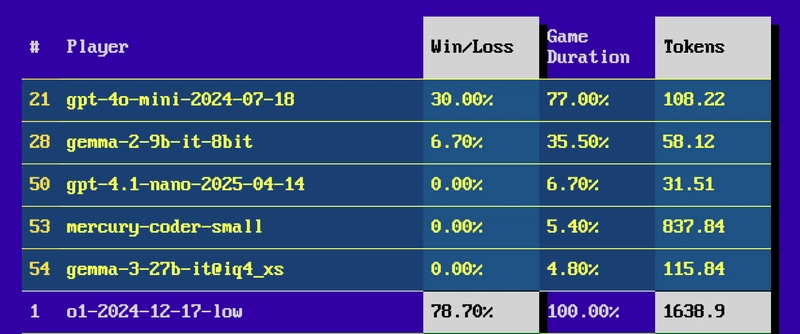

Then I ran it through my own LLM Chess eval. It took place in the middle-bottom of the list, a typical SLM struggling to adhere to game protocol making on average 10 moves before breaking the game loop:

- token accounting is wrong, the model is not as verbose

The major conclusion is that the new tech doesn't solve the old problem of LLM hallucinations. The common failure mode is yielding illegal moves. See in the example dialog traces below how the model gets the list of legal moves and still fails to pick one (making up a combination not on the list):

--------------------------------------------------------------------------------

Proxy (to Player_Black):

g8h8,f8e7,f8d6,f8c5,f8b4,f8a3,e8e7,d8e7,d8f6,d8g5,d8h4,c8b7,b8c6,b8a6,e4f6,e4d6,e4g5,e4c5,e4g3,e4c3,e4f2,e4d2,h7h6,g7g6,f7f6,d7d6,c7c6,a7a6,h7h5,g7g5,f7f5,d7d5,c7c5,a7a5

--------------------------------------------------------------------------------

Player_Black (to Proxy):

Thank you for providing the list of legal moves. After analyzing the position and the available moves, I'll make a move to develop my pieces and control the center of the board. I'll move my knight from g8 to h6. Please provide the command 'make_move g8h6'.

--------------------------------------------------------------------------------

Proxy (to Player_Black):

Failed to make move: illegal uci: 'g8h6' in rnbqkbr1/pQpp1ppp/8/4p3/2P1n3/N4P1N/PP1P2PP/R1B1KB1R b KQq - 0 11

I have also run the model through LiveBench (public dataset from November 2024) which I had readily configured for local runs:

category average coding data_analysis instruction_following language math reasoning

model

google_gemma-3-27b-it@iq4_xs 50.7 36.9 52.8 82.1 31.9 53.5 47.3

gpt-4.1-nano-2025-04-14 42.7 40.6 46.0 60.0 24.0 46.8 39.1

gemma-2-27b-it@iq4_xs 39.8 36.6 48.1 67.6 29.5 25.0 32.0

mercury-coder-small 35.9 34.4 44.7 53.2 12.4 35.1 35.8

Any Good?

The model doesn't impress with its smarts. Speed, that's something we've seen already - Groq and Cerebras have been serving popular open-source models such as Llama, Gemma, Owen, etc. for quite a while - using their custom hardware and boasting thousands of tokens per second.

At the same time, I don't think Mercury is a failure. It's a win. They've built a capable model that qualifies as a general-purpose chatbot. And they did it leveraging completely new ideas (in LLMs at least). There's no need for custom hardware to run diffusion models at insane speeds. While Groq/Cerebras might find it difficult to find any applications to their LPUs beyond transformer model inference.

The model is in 2023 in terms of performance. Looking forward to new increments, hope they can match SOTA models of 2024-2025 - this will bring low-cost high-speed LLM inference.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![Is this too much for a modular monolith system? [closed]](https://i.sstatic.net/pYL1nsfg.png)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)

![Apple Slips to Fifth in China's Smartphone Market with 9% Decline [Report]](https://www.iclarified.com/images/news/97065/97065/97065-640.jpg)