Introduction to Parallel Programming: Unlocking the Power of GPUs(Part 1)

Parallel programming is a powerful technique that allows us to take full advantage of the capabilities of modern computing systems, particularly GPUs. By breaking down a task into smaller sub-tasks and running them concurrently, we can achieve higher performance and solve complex problems more efficiently. For More visit In this post, we’ll explore the basics of parallel programming, its importance in modern computing, and how you can get started with GPU programming to accelerate your applications. Why Parallel Programming? In the world of computing, many tasks can be parallelized, meaning that they can be broken into smaller pieces that can be processed simultaneously. This is especially true for applications requiring massive computational power, like machine learning, simulations, image processing, and scientific computing. Before GPUs, most computations were done on a single CPU core, which had limitations in processing speed. With parallel computing, multiple processors (cores) can work together to solve different parts of a problem simultaneously, greatly improving performance. What is a GPU? A Graphics Processing Unit (GPU) is a highly parallel processor designed to handle tasks related to graphics rendering. However, it’s not limited to just graphical applications. Over the years, GPUs have become essential for accelerating non-graphical tasks, particularly in fields like machine learning, data science, and scientific computing. Unlike traditional CPUs, which are optimized for single-threaded performance, GPUs are designed to handle thousands of threads simultaneously, making them ideal for parallel tasks. Key Concepts in Parallel Programming Concurrency vs. Parallelism: Concurrency refers to the concept of multiple tasks being executed in overlapping periods but not necessarily simultaneously. Parallelism, on the other hand, is about performing tasks simultaneously using multiple processors or cores. Threads: A thread is the smallest unit of execution in a process. In parallel programming, you typically create multiple threads to handle different parts of the computation simultaneously. GPUs can execute thousands of threads in parallel, making them much faster for certain types of problems. Synchronization: When multiple threads are running simultaneously, it's crucial to synchronize them to avoid conflicts, such as multiple threads trying to access the same data at the same time. Memory Management: Efficient use of memory is key to parallel programming. GPUs have a different memory architecture compared to CPUs, and understanding how to optimize memory access can drastically improve performance. Getting Started with GPU Parallel Programming Now that we have a basic understanding of parallel programming, let's see how to get started with GPU programming. Here are a few tools and frameworks that make it easier: CUDA (Compute Unified Device Architecture): CUDA is a programming model and API created by NVIDIA that allows you to use GPUs for general-purpose computing. It supports C, C++, and Python and provides a rich set of libraries and tools to accelerate your programs. OpenCL: OpenCL (Open Computing Language) is an open standard for parallel programming across heterogeneous systems, including CPUs, GPUs, and other processors. It supports multiple programming languages, including C and C++. TensorFlow & PyTorch: Both TensorFlow and PyTorch support GPU acceleration out of the box. These frameworks are especially popular in the machine learning and data science communities for training deep learning models. NVIDIA cuDNN: cuDNN is a GPU-accelerated library for deep neural networks. It is optimized for deep learning operations and is commonly used with frameworks like TensorFlow, Keras, and PyTorch. Conclusion Parallel programming is essential for taking full advantage of modern computing power, and GPUs are an incredible tool for speeding up computation. By learning parallel programming concepts and tools like CUDA and OpenCL, you can harness the power of GPUs to accelerate your applications in fields like machine learning, simulation, and more. Want to learn more about GPU programming? Check out the full guide on CompilerSutra for more in-depth explanations, code examples, and best practices. let's unlock the true power of parallel computing!

Parallel programming is a powerful technique that allows us to take full advantage of the capabilities of modern computing systems, particularly GPUs. By breaking down a task into smaller sub-tasks and running them concurrently, we can achieve higher performance and solve complex problems more efficiently.

For More visit

In this post, we’ll explore the basics of parallel programming, its importance in modern computing, and how you can get started with GPU programming to accelerate your applications.

Why Parallel Programming?

In the world of computing, many tasks can be parallelized, meaning that they can be broken into smaller pieces that can be processed simultaneously. This is especially true for applications requiring massive computational power, like machine learning, simulations, image processing, and scientific computing.

Before GPUs, most computations were done on a single CPU core, which had limitations in processing speed. With parallel computing, multiple processors (cores) can work together to solve different parts of a problem simultaneously, greatly improving performance.

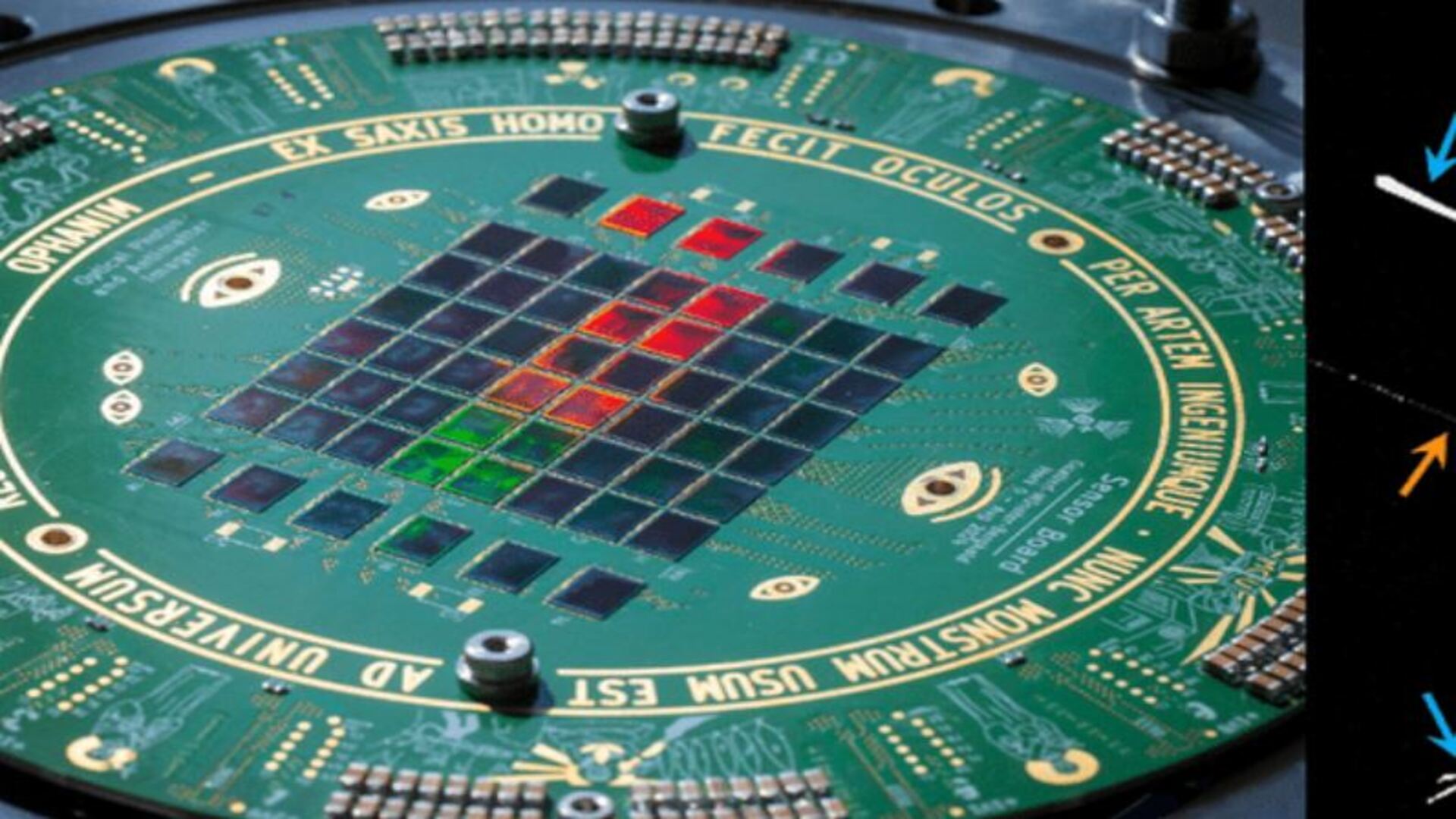

What is a GPU?

A Graphics Processing Unit (GPU) is a highly parallel processor designed to handle tasks related to graphics rendering. However, it’s not limited to just graphical applications. Over the years, GPUs have become essential for accelerating non-graphical tasks, particularly in fields like machine learning, data science, and scientific computing.

Unlike traditional CPUs, which are optimized for single-threaded performance, GPUs are designed to handle thousands of threads simultaneously, making them ideal for parallel tasks.

Key Concepts in Parallel Programming

Concurrency vs. Parallelism:

Concurrency refers to the concept of multiple tasks being executed in overlapping periods but not necessarily simultaneously.

Parallelism, on the other hand, is about performing tasks simultaneously using multiple processors or cores.

Threads:

A thread is the smallest unit of execution in a process. In parallel programming, you typically create multiple threads to handle different parts of the computation simultaneously.

GPUs can execute thousands of threads in parallel, making them much faster for certain types of problems.

Synchronization:

When multiple threads are running simultaneously, it's crucial to synchronize them to avoid conflicts, such as multiple threads trying to access the same data at the same time.

Memory Management:

Efficient use of memory is key to parallel programming. GPUs have a different memory architecture compared to CPUs, and understanding how to optimize memory access can drastically improve performance.

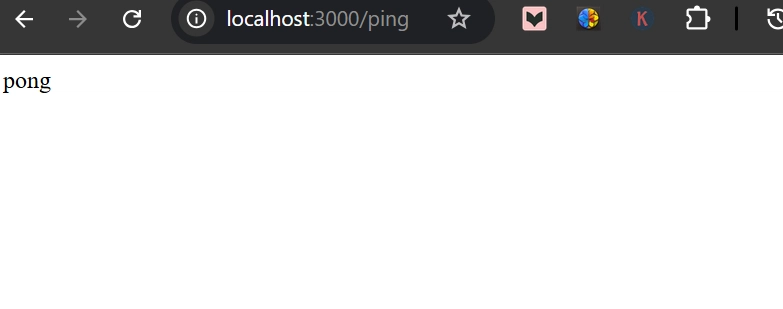

Getting Started with GPU Parallel Programming

Now that we have a basic understanding of parallel programming, let's see how to get started with GPU programming. Here are a few tools and frameworks that make it easier:

CUDA (Compute Unified Device Architecture):

CUDA is a programming model and API created by NVIDIA that allows you to use GPUs for general-purpose computing. It supports C, C++, and Python and provides a rich set of libraries and tools to accelerate your programs.

OpenCL:

OpenCL (Open Computing Language) is an open standard for parallel programming across heterogeneous systems, including CPUs, GPUs, and other processors. It supports multiple programming languages, including C and C++.

TensorFlow & PyTorch:

Both TensorFlow and PyTorch support GPU acceleration out of the box. These frameworks are especially popular in the machine learning and data science communities for training deep learning models.

NVIDIA cuDNN:

cuDNN is a GPU-accelerated library for deep neural networks. It is optimized for deep learning operations and is commonly used with frameworks like TensorFlow, Keras, and PyTorch.

Conclusion

Parallel programming is essential for taking full advantage of modern computing power, and GPUs are an incredible tool for speeding up computation. By learning parallel programming concepts and tools like CUDA and OpenCL, you can harness the power of GPUs to accelerate your applications in fields like machine learning, simulation, and more.

Want to learn more about GPU programming? Check out the full guide on CompilerSutra for more in-depth explanations, code examples, and best practices.

let's unlock the true power of parallel computing!

![[The AI Show Episode 143]: ChatGPT Revenue Surge, New AGI Timelines, Amazon’s AI Agent, Claude for Education, Model Context Protocol & LLMs Pass the Turing Test](https://www.marketingaiinstitute.com/hubfs/ep%20143%20cover.png)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.jpg?#)

_ArtemisDiana_Alamy.jpg?#)

(1).webp?#)

-xl.jpg)

![Yes, the Gemini icon is now bigger and brighter on Android [U]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/02/Gemini-on-Galaxy-S25.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Rushes Five Planes of iPhones to US Ahead of New Tariffs [Report]](https://www.iclarified.com/images/news/96967/96967/96967-640.jpg)

![Apple Vision Pro 2 Allegedly in Production Ahead of 2025 Launch [Rumor]](https://www.iclarified.com/images/news/96965/96965/96965-640.jpg)