Introduction to Data Engineering Concepts |4| Batch Processing Fundamentals

Free Resources Free Apache Iceberg Course Free Copy of “Apache Iceberg: The Definitive Guide” Free Copy of “Apache Polaris: The Definitive Guide” 2025 Apache Iceberg Architecture Guide How to Join the Iceberg Community Iceberg Lakehouse Engineering Video Playlist Ultimate Apache Iceberg Resource Guide For many data engineering tasks, real-time insights aren’t necessary. In fact, a large portion of the data processed across organizations happens in scheduled intervals—daily sales reports, weekly data refreshes, monthly billing cycles. This is where batch processing comes in, and despite the growing popularity of streaming, batch remains the backbone of many data-driven workflows. In this post, we’ll explore what batch processing is, how it works under the hood, and why it’s still a critical technique in the data engineer’s toolbox. What is Batch Processing? Batch processing is the execution of data workflows on a predefined schedule or in response to specific triggers. Instead of processing data as it arrives, the system collects a set of data over a period of time, then processes that set as a single unit. This approach is particularly useful when data arrives in large quantities but doesn’t need to be acted on immediately. For example, processing daily transactions from a point-of-sale system or generating overnight reports for executive dashboards. Batch jobs are often triggered at set times—say, every night at 2 a.m.—and are designed to run until completion, often without user interaction. They can run for seconds, minutes, or even hours depending on the volume of data and complexity of the transformations. Under the Hood: How Batch Jobs Work The anatomy of a batch job usually includes several stages. First, the job identifies the data it needs to process. This might involve querying a database for all records created in the last 24 hours or scanning a specific folder in object storage for new files. Next comes the transformation phase. This is where data is cleaned, filtered, joined with other datasets, and reshaped to fit its target structure. This phase can include tasks like date formatting, currency conversion, null value imputation, or the calculation of derived fields. Finally, the job writes the transformed data to its destination—often a data warehouse, data lake, or downstream reporting system. To manage all of this, engineers rely on workflow orchestration tools. These tools provide scheduling, error handling, and logging capabilities to ensure that jobs run in the right order and can recover gracefully from failure. Tools and Technologies Several tools have become staples in batch-oriented workflows. Apache Airflow is one of the most widely used. It allows engineers to define complex workflows as Directed Acyclic Graphs (DAGs), where each node represents a task and dependencies are explicitly declared. Other tools like Luigi and Oozie offer similar functionality, though they are less commonly used in newer stacks. Cloud-native platforms such as AWS Glue and Google Cloud Composer provide managed orchestration services that integrate tightly with the respective cloud ecosystems. In addition to orchestration, batch jobs often depend on distributed processing engines like Apache Spark. Spark allows massive datasets to be processed in parallel across a cluster of machines, reducing processing times dramatically compared to traditional single-node tools. Strengths and Limitations One of the biggest advantages of batch processing is its simplicity. Since data is processed in chunks, you can apply robust validation and error-handling routines before moving data downstream. It's also easier to track and audit, which is especially important for regulated industries. Batch jobs are also cost-efficient when working with large volumes of data that don’t require immediate availability. Processing once per day means you can spin up compute resources only when needed, rather than keeping systems running continuously. However, the main limitation is latency. If something happens in your business—say, a spike in fraudulent transactions—you won’t know about it until after the next batch job runs. For use cases that require faster insights or real-time responsiveness, batch processing isn’t sufficient. There’s also the issue of windowing and completeness. Since batch jobs process data in slices, late-arriving records can fall outside the intended window unless carefully managed. This adds complexity to pipeline design and requires thoughtful handling of time-based logic. Where Batch Still Shines Despite its limitations, batch processing remains ideal for a wide range of use cases. Financial reconciliations, data archival, slow-changing dimensional data updates, and long-running analytics workloads are just a few examples where batch continues to dominate. As a data engineer, understanding how to design efficient a

Free Resources

- Free Apache Iceberg Course

- Free Copy of “Apache Iceberg: The Definitive Guide”

- Free Copy of “Apache Polaris: The Definitive Guide”

- 2025 Apache Iceberg Architecture Guide

- How to Join the Iceberg Community

- Iceberg Lakehouse Engineering Video Playlist

- Ultimate Apache Iceberg Resource Guide

For many data engineering tasks, real-time insights aren’t necessary. In fact, a large portion of the data processed across organizations happens in scheduled intervals—daily sales reports, weekly data refreshes, monthly billing cycles. This is where batch processing comes in, and despite the growing popularity of streaming, batch remains the backbone of many data-driven workflows.

In this post, we’ll explore what batch processing is, how it works under the hood, and why it’s still a critical technique in the data engineer’s toolbox.

What is Batch Processing?

Batch processing is the execution of data workflows on a predefined schedule or in response to specific triggers. Instead of processing data as it arrives, the system collects a set of data over a period of time, then processes that set as a single unit.

This approach is particularly useful when data arrives in large quantities but doesn’t need to be acted on immediately. For example, processing daily transactions from a point-of-sale system or generating overnight reports for executive dashboards.

Batch jobs are often triggered at set times—say, every night at 2 a.m.—and are designed to run until completion, often without user interaction. They can run for seconds, minutes, or even hours depending on the volume of data and complexity of the transformations.

Under the Hood: How Batch Jobs Work

The anatomy of a batch job usually includes several stages. First, the job identifies the data it needs to process. This might involve querying a database for all records created in the last 24 hours or scanning a specific folder in object storage for new files.

Next comes the transformation phase. This is where data is cleaned, filtered, joined with other datasets, and reshaped to fit its target structure. This phase can include tasks like date formatting, currency conversion, null value imputation, or the calculation of derived fields.

Finally, the job writes the transformed data to its destination—often a data warehouse, data lake, or downstream reporting system.

To manage all of this, engineers rely on workflow orchestration tools. These tools provide scheduling, error handling, and logging capabilities to ensure that jobs run in the right order and can recover gracefully from failure.

Tools and Technologies

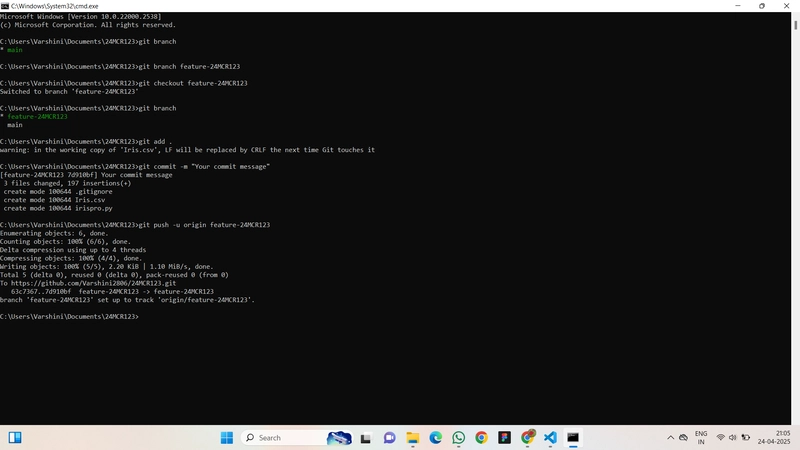

Several tools have become staples in batch-oriented workflows. Apache Airflow is one of the most widely used. It allows engineers to define complex workflows as Directed Acyclic Graphs (DAGs), where each node represents a task and dependencies are explicitly declared.

Other tools like Luigi and Oozie offer similar functionality, though they are less commonly used in newer stacks. Cloud-native platforms such as AWS Glue and Google Cloud Composer provide managed orchestration services that integrate tightly with the respective cloud ecosystems.

In addition to orchestration, batch jobs often depend on distributed processing engines like Apache Spark. Spark allows massive datasets to be processed in parallel across a cluster of machines, reducing processing times dramatically compared to traditional single-node tools.

Strengths and Limitations

One of the biggest advantages of batch processing is its simplicity. Since data is processed in chunks, you can apply robust validation and error-handling routines before moving data downstream. It's also easier to track and audit, which is especially important for regulated industries.

Batch jobs are also cost-efficient when working with large volumes of data that don’t require immediate availability. Processing once per day means you can spin up compute resources only when needed, rather than keeping systems running continuously.

However, the main limitation is latency. If something happens in your business—say, a spike in fraudulent transactions—you won’t know about it until after the next batch job runs. For use cases that require faster insights or real-time responsiveness, batch processing isn’t sufficient.

There’s also the issue of windowing and completeness. Since batch jobs process data in slices, late-arriving records can fall outside the intended window unless carefully managed. This adds complexity to pipeline design and requires thoughtful handling of time-based logic.

Where Batch Still Shines

Despite its limitations, batch processing remains ideal for a wide range of use cases. Financial reconciliations, data archival, slow-changing dimensional data updates, and long-running analytics workloads are just a few examples where batch continues to dominate.

As a data engineer, understanding how to design efficient and reliable batch workflows is an essential skill, especially in environments where consistency and auditability are critical.

In the next post, we’ll explore the counterpart to batch: streaming data processing. We’ll look at what it means to process data in real time, how it differs from batch, and what patterns and tools make it work.

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

![From Art School Drop-out to Microsoft Engineer with Shashi Lo [Podcast #170]](https://cdn.hashnode.com/res/hashnode/image/upload/v1746203291209/439bf16b-c820-4fe8-b69e-94d80533b2df.png?#)

(1).jpg?#)

_Inge_Johnsson-Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple Developing AI 'Vibe-Coding' Assistant for Xcode With Anthropic [Report]](https://www.iclarified.com/images/news/97200/97200/97200-640.jpg)

![Apple's New Ads Spotlight Apple Watch for Kids [Video]](https://www.iclarified.com/images/news/97197/97197/97197-640.jpg)