Inside the Engine: How AI Coding Assistants Work and What Every Developer Must Know Before Using Them

Uncover the mechanics powering AI coding assistants, from neural networks to real-time code generation, and learn the critical factors—security, ethics, and reliability—that define their safe and effective use in modern software development.

How AI Coding Assistants Work: The Technical Blueprint

AI coding assistants combine machine learning, natural language processing (NLP), and vast code repositories to function as intelligent collaborators. Here’s a step-by-step breakdown:

1. Training on Massive Code Corpora

- Data Sources: Models are trained on billions of lines of publicly available code (e.g., GitHub repositories, Stack Overflow answers) and technical documentation.

- Language Understanding: They learn syntax, patterns, and best practices for languages like Python, JavaScript, and Java.

- Contextual Learning: Code is paired with comments, commit messages, and issue-tracker discussions to link intent with implementation.

Example: OpenAI’s Codex, which originally powered GitHub Copilot, was trained on Python code collected from 54 million public GitHub repositories.
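The following minimal Python sketch makes the idea of pairing intent with implementation concrete. It uses the standard-library `ast` module to harvest (docstring, function) pairs from source code. It assumes a docstring is a reasonable stand-in for "intent". `extract_intent_pairs` and `SOURCE` are hypothetical names. This is not a description of how Codex's dataset was actually built.

```python
import ast

# Hypothetical source file to mine for (intent, implementation) pairs
SOURCE = '''
def calculate_sum(a, b):
    """Return the sum of two numbers."""
    return a + b

def greet(name):
    return f"Hello, {name}"
'''

def extract_intent_pairs(source: str) -> list[tuple[str, str]]:
    """Return (docstring, function source) pairs for documented functions."""
    tree = ast.parse(source)
    pairs = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            doc = ast.get_docstring(node)
            if doc:  # skip functions with no natural-language intent attached
                code = ast.get_source_segment(source, node)
                pairs.append((doc, code))
    return pairs

for intent, code in extract_intent_pairs(SOURCE):
    print("INTENT:", intent)
    print(code)
```

The sketch skips undocumented functions such as `greet`, because they carry no natural-language signal to pair with the code.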

2. Transformer Architecture and Tokenization

- Transformers: These neural networks process sequences of code and text. They use self-attention to weigh how strongly each token relates to every other token in the context (e.g., linking a variable's use to its definition).

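- Tokenization: Code is split into tokens (words, symbols, or subword fragments) for the model to analyze. In simplified form, `def calculate_sum(a, b):` becomes tokens like `["def", "calculate_sum", "(", "a", ",", "b", ")", ":"]`. Production tokenizers typically use subword schemes such as byte-pair encoding, so a long identifier like `calculate_sum` may be split into several pieces.

- Autoregressive Prediction: The model predicts the next token from all prior context, appends it, and repeats the process.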

Example: After you type `import pandas as pd`, the assistant might suggest `pd.read_csv()` as a likely next step, because that pattern is common in its training data.
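The tokenize-then-predict loop can be shown with a toy. It uses Python's standard `tokenize` lexer in place of a real subword tokenizer and simple bigram counts in place of a transformer. The helpers `simple_tokens`, `train_bigrams`, and `generate`, along with the three-line `CORPUS`, are hypothetical. The point is only the autoregressive shape: predict one token, append it, and predict again.

```python
import io
import tokenize
from collections import Counter, defaultdict

# Hypothetical three-line "training corpus"
CORPUS = [
    "df = pd.read_csv('data.csv')",
    "sales = pd.read_csv('sales.csv')",
    "frame = pd.DataFrame(rows)",
]

def simple_tokens(code: str) -> list[str]:
    """Lex Python code with the stdlib tokenizer, keeping names, operators, and literals."""
    keep = {tokenize.NAME, tokenize.OP, tokenize.NUMBER, tokenize.STRING}
    return [tok.string
            for tok in tokenize.generate_tokens(io.StringIO(code).readline)
            if tok.type in keep]

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each token follows each other token."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for snippet in corpus:
        toks = simple_tokens(snippet)
        for prev, nxt in zip(toks, toks[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts: dict[str, Counter], prompt: str, max_new: int = 5) -> list[str]:
    """Greedy autoregressive decoding: append the likeliest next token, then repeat."""
    toks = simple_tokens(prompt)
    for _ in range(max_new):
        successors = counts.get(toks[-1])
        if not successors:
            break  # no known continuation for this token
        toks.append(successors.most_common(1)[0][0])
    return toks

print(simple_tokens("def calculate_sum(a, b):"))
print(generate(train_bigrams(CORPUS), "pd", max_new=3))
```

Given the prefix `pd`, the toy model picks `.`, then `read_csv`, then `(`, because those are the most frequent successors in its tiny corpus. A real assistant conditions on the entire context window, not only the previous token.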

3. Real-Time Context Analysis

- Local Context: The tool scans the active file, including variables, functions, and imports.

- Project Context: Some assistants (e.g., Amazon CodeWhisperer, now part of Amazon Q Developer) also draw on other files in the project to pick up framework-specific patterns (e.g., React components, Flask routes).

- User Intent Inference: Combines code with natural language comments (e.g., “// Sort users by age”) to refine suggestions.
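Here is a simplified sketch of local-context gathering. It assumes the assistant summarizes the active file's imports and top-level definitions, then appends the code nearest the cursor, and ends with the user's intent comment. The prompt layout, the character budget, and the names `summarize_file_context` and `build_prompt` are illustrative assumptions, not any product's actual format. The Python example uses a `#` comment where the text above shows a `//` comment.

```python
import ast

def summarize_file_context(source: str) -> dict[str, list[str]]:
    """Collect imports and top-level definitions from the active file."""
    tree = ast.parse(source)
    ctx: dict[str, list[str]] = {"imports": [], "functions": [], "classes": []}
    for node in tree.body:  # top-level statements only
        if isinstance(node, ast.Import):
            ctx["imports"].extend(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom):
            # Handles absolute ("from x import y") and relative ("from . import y") imports
            prefix = "." * node.level + (f"{node.module}." if node.module else "")
            ctx["imports"].extend(prefix + alias.name for alias in node.names)
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            ctx["functions"].append(node.name)
        elif isinstance(node, ast.ClassDef):
            ctx["classes"].append(node.name)
    return ctx

def build_prompt(source: str, intent_comment: str, max_chars: int = 2000) -> str:
    """Summary header + code nearest the cursor + intent comment, within a character budget."""
    ctx = summarize_file_context(source)
    header = "".join(f"# {key}: {', '.join(names)}\n" for key, names in ctx.items())
    footer = intent_comment.rstrip("\n") + "\n"
    budget = max_chars - len(header) - len(footer) - 1  # reserve 1 char for a newline
    # Assume the cursor is at the end of the file, so keep the file's tail
    body = source[-budget:] if budget > 0 else ""
    if body and not body.endswith("\n"):
        body += "\n"
    return header + body + footer

SRC = (
    "import pandas as pd\n"
    "from dataclasses import dataclass\n"
    "\n"
    "@dataclass\n"
    "class User:\n"
    "    name: str\n"
    "    age: int\n"
    "\n"
    "def load_users(path):\n"
    "    return []\n"
)
print(build_prompt(SRC, "# Sort users by age"))
```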

4. Code Generation and Refinement

- Prompt Engineering: Developers write prompts (code snippets or comments) to trigger relevant outputs.

  - Explicit Prompts: “Write a Python function to calculate the Fibonacci sequence.”

  - Implicit Prompts: Partially written code (e.g., a half-completed loop).

- Sampling and Ranking: The model generates multiple candidate solutions, ranks them by likelihood, and selects the best match.

- Feedback Loops: Some tools record whether suggestions are accepted, edited, or rejected, and vendors may use these signals to improve future suggestions.
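Sampling and ranking can be sketched with a hypothetical toy model that proposes whole lines with fixed probabilities. The code samples several completions and collapses duplicates. It then ranks them by mean log-probability, which is one common length-normalized heuristic. `TOY_MODEL` and the helper names are assumptions made for illustration, and real assistants may rank candidates differently.

```python
import math
import random

# Hypothetical toy "model": maps the previous line to candidate next lines and their probabilities.
# A line with no entry ends the completion.
TOY_MODEL = {
    "<start>": [("total = a + b", 0.5), ("return a + b", 0.4), ("return sum((a, b))", 0.1)],
    "total = a + b": [("return total", 0.9), ("print(total)", 0.1)],
}

def sample_completion(rng: random.Random) -> tuple[list[str], list[float]]:
    """Sample one completion line by line, recording the probability of each choice."""
    lines: list[str] = []
    probs: list[float] = []
    prev = "<start>"
    while prev in TOY_MODEL:
        options = TOY_MODEL[prev]
        line, p = rng.choices(options, weights=[w for _, w in options], k=1)[0]
        lines.append(line)
        probs.append(p)
        prev = line
    return lines, probs

def mean_logprob(probs: list[float]) -> float:
    """Length-normalized log-likelihood of a completion."""
    return sum(math.log(p) for p in probs) / len(probs)

def sample_and_rank(n: int, seed: int = 0) -> list[tuple[str, float]]:
    """Draw n samples, collapse duplicates, and sort best-first by mean log-probability."""
    rng = random.Random(seed)
    scored: dict[str, float] = {}
    for _ in range(n):
        lines, probs = sample_completion(rng)
        scored["\n".join(lines)] = mean_logprob(probs)
    return sorted(scored.items(), key=lambda item: item[1], reverse=True)

for text, score in sample_and_rank(n=20, seed=42):
    print(f"{score:6.2f}  {text!r}")
```

Suppose both candidates are sampled. Then `total = a + b` followed by `return total` (mean log-probability about -0.40) outranks `return a + b` (about -0.92).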

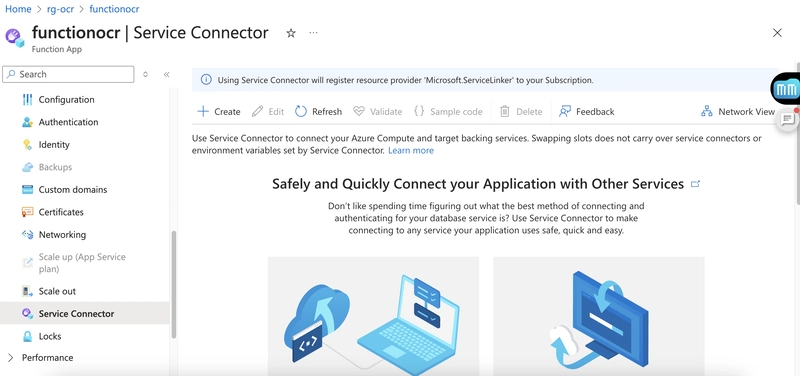

5. Integration with Developer Tools

- IDE Plugins: Assistants embed directly into VS Code, JetBrains IDEs, or Jupyter notebooks.

- Cloud-Based Processing: Code context from the editor is sent to cloud servers (e.g., GitHub’s Copilot backend) for processing, which raises privacy considerations.
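This sketch illustrates what leaves the developer's machine. It packages the text before and after the cursor into a JSON request body, the kind of input a fill-in-the-middle model can use. The schema (`language`, `prefix`, `suffix`), the size limits, and the function name are hypothetical. They do not describe GitHub Copilot's or any other vendor's actual protocol. The takeaway is that whatever lands in these fields is transmitted to the provider, including secrets in nearby code.

```python
import json

def build_completion_request(document: str, cursor: int, language: str,
                             max_prefix: int = 1500, max_suffix: int = 500) -> str:
    """Serialize the code around the cursor into a JSON body (hypothetical schema)."""
    prefix = document[max(0, cursor - max_prefix):cursor]  # code before the cursor
    suffix = document[cursor:cursor + max_suffix]           # code after the cursor
    payload = {
        "language": language,  # field names are illustrative, not a real vendor API
        "prefix": prefix,
        "suffix": suffix,      # lets a fill-in-the-middle model see what follows
    }
    return json.dumps(payload)

doc = "def add(a, b):\n    \n\nprint(add(1, 2))\n"
cursor = doc.index("    ") + 4  # cursor sits on the empty, indented body line
print(build_completion_request(doc, cursor, "python"))
```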

Key Considerations When Using AI Coding Assistants

While powerful, these tools require cautious adoption. Here’s what developers and teams must evaluate:

1. Security and Vulnerability Risks

- Insecure Code: AI may suggest code with vulnerabilities (e.g., SQL injection, hardcoded credentials).

  - Fix: Use tools like Snyk or CodeQL to scan AI-generated code.

- Data Privacy: Cloud-based assistants might log code snippets. Avoid sharing sensitive IP or proprietary logic.
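Python's built-in `sqlite3` module makes the SQL injection risk easy to demonstrate. The sketch below contrasts two approaches. The first is a string-formatted query, a pattern an assistant might plausibly suggest. The second is a parameterized query. The table, the helper names, and the `DB_PASSWORD` environment variable are hypothetical.

```python
import os
import sqlite3

def make_demo_db() -> sqlite3.Connection:
    """In-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "admin"), ("bob", "viewer")])
    return conn

def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # VULNERABLE: user input is spliced directly into the SQL text
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn: sqlite3.Connection, name: str) -> list[tuple]:
    # Parameterized: sqlite3 binds `name` as a value, so it is never parsed as SQL
    return conn.execute("SELECT name, role FROM users WHERE name = ?", (name,)).fetchall()

# Read secrets from the environment instead of hardcoding them in source
DB_PASSWORD = os.environ.get("DB_PASSWORD")  # hypothetical variable; None if unset

conn = make_demo_db()
payload = "' OR '1'='1"
print(find_user_unsafe(conn, payload))  # the injected condition matches every row
print(find_user_safe(conn, payload))    # no user is literally named "' OR '1'='1"
```

With the input `' OR '1'='1`, the unsafe version returns every row while the parameterized version returns none. Static analyzers such as CodeQL are designed to flag patterns like the first function.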

2. Code Quality and Ownership

- Licensing Issues: AI-generated code might replicate copyrighted snippets from training data.

  - Fix: Enable filters that block suggestions matching public code (GitHub Copilot offers one), rely on vendor IP indemnity where it is offered, and audit code for license compliance.

- Technical Debt: Over-reliance on AI can lead to poorly documented “black box” code.

  - Fix: Enforce code reviews and documentation standards.
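One rough way to audit for near-verbatim reuse is to compare a suggestion against code whose license you know. The comparison uses token shingles and Jaccard similarity. This is a toy heuristic under stated assumptions: the snippets are complete, tokenizable Python, and you already have a reference corpus. It is not a description of how GitHub's public-code filter or commercial license scanners work. The helper names are hypothetical.

```python
import io
import tokenize

def normalized_tokens(code: str) -> list[str]:
    """Lex complete Python code, dropping comments and layout tokens so reformatting can't hide a copy."""
    skip = {tokenize.COMMENT, tokenize.NL, tokenize.NEWLINE,
            tokenize.INDENT, tokenize.DEDENT, tokenize.ENDMARKER}
    return [tok.string
            for tok in tokenize.generate_tokens(io.StringIO(code).readline)
            if tok.type not in skip]

def shingles(tokens: list[str], k: int = 5) -> set[tuple[str, ...]]:
    """All runs of k consecutive tokens."""
    return {tuple(tokens[i:i + k]) for i in range(len(tokens) - k + 1)}

def similarity(a: str, b: str, k: int = 5) -> float:
    """Jaccard similarity of the two snippets' token shingles (0.0 to 1.0)."""
    sa, sb = shingles(normalized_tokens(a), k), shingles(normalized_tokens(b), k)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)

reference = "def clamp(x, lo, hi):\n    return max(lo, min(x, hi))\n"
suggestion = "def clamp(x, lo, hi):  # handy helper\n    return max(lo, min(x, hi))\n"
unrelated = "def greet(name):\n    return 'hi ' + name\n"
print(similarity(reference, suggestion))
print(similarity(reference, unrelated))
```

Comments and whitespace are dropped before comparison, so a copy with an added comment still scores 1.0. Deciding the threshold at which a match warrants review is a policy question, not something the code can settle.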

3. Over-Reliance and Skill Erosion

- Dependency Risk: Junior developers might lean too heavily on AI, stunting foundational skill growth.

  - Fix: Pair AI use with mentorship and deliberate practice.

- Context Blind Spots: AI may lack domain-specific knowledge (e.g., healthcare compliance rules).

  - Fix: Fine-tune models on internal codebases where possible.

4. Ethical and Bias Concerns

- Bias in Training Data: Models trained on public code may reflect the habits of the developers who are overrepresented in that code, as well as outdated practices.

  - Example: Gendered assumptions in generated identifiers, comments, or data models.

- Environmental Impact: Training and running LLMs consume substantial energy.

  - Fix: Opt for tools with carbon-neutral commitments.

5. Ambiguity Handling

- Vague Prompts: Requests like “make this faster” can yield irrelevant optimizations.

  - Fix: Use precise prompts (e.g., “Optimize this Python loop using vectorization”).
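The precise prompt asks for a specific kind of change: replacing an element-by-element Python loop with a single NumPy operation. The sketch below shows that change. The function names are hypothetical, and the example assumes NumPy is installed.

```python
import numpy as np

def sum_of_squares_loop(values: list[float]) -> float:
    """Element-by-element Python loop: the 'before' version."""
    total = 0.0
    for v in values:
        total += v * v
    return total

def sum_of_squares_vectorized(values: list[float]) -> float:
    """Same computation as one NumPy dot product: the 'after' version."""
    arr = np.asarray(values, dtype=float)
    return float(np.dot(arr, arr))

data = [1.0, 2.0, 3.0]
print(sum_of_squares_loop(data), sum_of_squares_vectorized(data))
```

Both functions compute the same result. The vectorized version moves the loop into NumPy's compiled code, which is typically much faster on large arrays. A vague prompt leaves the assistant to guess which of many possible optimizations you meant.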

The Future: Toward Responsible AI Adoption

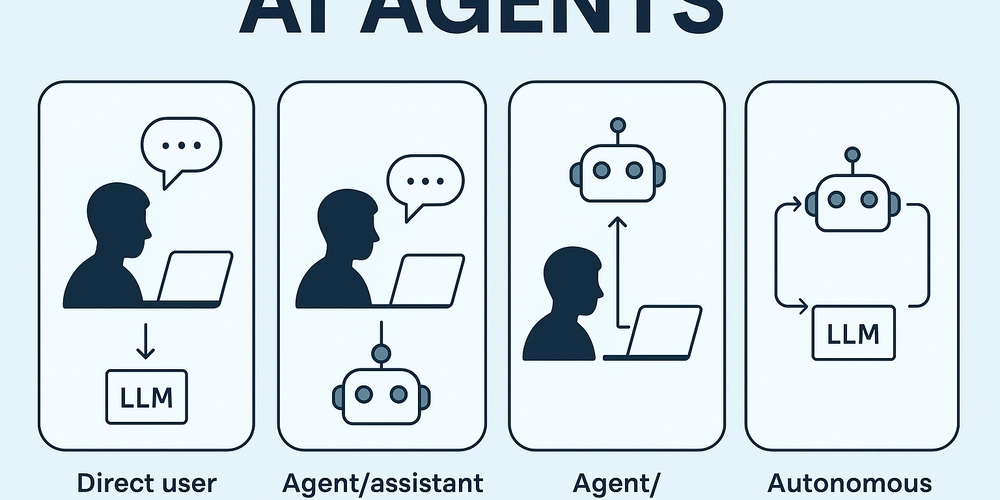

- Explainable AI (XAI): Tools that justify why a code suggestion was made.

- On-Prem Solutions: Self-hosted models (e.g., Code Llama) to address privacy concerns.

- Regulatory Compliance: Frameworks to audit AI-generated code for safety and ethics.

Top 3 Takeaways

- AI Coding Assistants Are Pattern Matchers, Not Thinkers: They excel at automating repetitive tasks but lack human judgment for architecture or ethics.

- Security and Compliance Are Non-Negotiable: Always audit AI-generated code for vulnerabilities and licensing risks.

- Balance Automation with Skill Development: Use AI to augment—not replace—core programming expertise.

Conclusion: The Double-Edged Sword of AI Assistance

AI coding assistants are revolutionizing software development, but their power comes with pitfalls. By understanding their mechanics—transformers, tokenization, and context-aware generation—developers can harness their potential while mitigating risks. The key lies in treating AI as a collaborator, not a crutch, and maintaining rigorous oversight to ensure code quality, security, and innovation. In the age of AI-augmented development, the most successful engineers will be those who master both the art of coding and the science of guiding intelligent tools.
