Insert and Read 1 Million Records Using MongoDB and Spring Boot


In today’s connected world, IoT (Internet of Things) devices generate millions of data points every day—from temperature sensors in smart homes to GPS data in fleet tracking systems. Efficiently storing and processing this high-volume data is critical for building scalable, real-time applications.

In this tutorial, we’ll walk through how to insert and read 1 million IoT-like sensor records using MongoDB and Spring Boot. Whether you’re simulating device data for testing or building a backend for a real IoT platform, this guide will help you:

- Rapidly ingest large volumes of structured data

- Read and query sensor data efficiently

- Understand performance considerations when dealing with big datasets

By the end, you’ll have a working prototype that times a simple insertion and read of 1 million records using Spring Boot and MongoDB.

Tech Stack

- Java 17+

- Spring Boot 3.x

- MongoDB (local)

- Maven

1. Set Up Your Spring Boot Project

Use Spring Initializr or your favorite IDE to create a project with the following dependencies:

- Spring Web

- Spring Data MongoDB

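In `pom.xml`:

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-data-mongodb</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
</dependencies>
```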

2. Configure MongoDB Connection

In `application.properties`:

```properties
spring.data.mongodb.uri=mongodb://localhost:27017/testdb
```

3. Create Your Document Model

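```java
import org.springframework.data.annotation.Id;
import org.springframework.data.mongodb.core.mapping.Document;

// Each instance is stored as one document in the "my_records" collection
@Document("my_records")
public class MyRecord {

    @Id
    private String id; // left null on insert, so MongoDB assigns an ObjectId

    private String name;
    private int value;

    // No-args constructor that Spring Data can use when reading documents back
    public MyRecord() {}

    public MyRecord(String name, int value) {
        this.name = name;
        this.value = value;
    }

    public String getId() { return id; }

    public String getName() { return name; }

    public int getValue() { return value; }
}
```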

4. Create the Repository

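```java
import org.springframework.data.mongodb.repository.MongoRepository;

// Spring Data generates the implementation; the type arguments are the entity and its id type
public interface MyRecordRepository extends MongoRepository<MyRecord, String> {
}
```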

5. Insert and Read 1 Million Records

Create a service to handle the bulk insert and the read:

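```java
import java.util.ArrayList;
import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

@Service
public class MyRecordService {

    @Autowired
    private MyRecordRepository repository;

    public void insertMillionRecords() {
        List<MyRecord> batch = new ArrayList<>();
        int batchSize = 10000;

        for (int i = 1; i <= 1_000_000; i++) {
            batch.add(new MyRecord("Name_" + i, i));

            // Every batchSize records, write the whole batch with one saveAll call
            if (i % batchSize == 0) {
                repository.saveAll(batch);
                batch.clear();
                System.out.println("Inserted: " + i);
            }
        }

        // Write any leftover records (none here, since 1,000,000 is a multiple of 10,000)
        if (!batch.isEmpty()) {
            repository.saveAll(batch);
        }

        System.out.println("Finished inserting 1 million records.");
    }

    // Loads every document in the collection into a single in-memory List
    public List<MyRecord> getAllRecords() {
        return repository.findAll();
    }
}
```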
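The batching logic in `insertMillionRecords()` can be tried out without MongoDB. The sketch below is a plain-Java, hypothetical helper (`BatchInserter.insertInBatches` is not part of Spring Data): the `sink` callback stands in for `repository::saveAll`, and the method counts how many batches get flushed. It assumes only the JDK.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.IntFunction;

// Hypothetical helper: the chunking logic of insertMillionRecords() without Spring.
final class BatchInserter {

    private BatchInserter() {}

    /**
     * Builds `total` items with `factory` and hands them to `sink` in chunks of
     * at most `batchSize`. Returns how many chunks were flushed.
     */
    static <T> int insertInBatches(int total, int batchSize,
                                   IntFunction<T> factory, Consumer<List<T>> sink) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        List<T> batch = new ArrayList<>(batchSize);
        int flushes = 0;
        for (int i = 1; i <= total; i++) {
            batch.add(factory.apply(i));
            if (batch.size() == batchSize) {
                sink.accept(batch); // stands in for repository.saveAll(batch)
                flushes++;
                // Start a fresh list so the sink may safely keep the one it received
                batch = new ArrayList<>(batchSize);
            }
        }
        // Flush the final partial batch, if any
        if (!batch.isEmpty()) {
            sink.accept(batch);
            flushes++;
        }
        return flushes;
    }

    public static void main(String[] args) {
        List<Integer> sizes = new ArrayList<>();
        IntFunction<String> names = i -> "Name_" + i;
        int flushes = insertInBatches(1_000_000, 10_000, names, batch -> sizes.add(batch.size()));
        System.out.println(flushes + " batches, last size " + sizes.get(sizes.size() - 1));
    }
}
```

With 1,000,000 records and a batch size of 10,000 this flushes 100 batches, i.e. 100 `saveAll` calls instead of a million individual saves. Allocating a fresh list per batch, rather than calling `clear()`, is a small defensive choice in case a sink holds on to the list it was given.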

6. Trigger via REST API

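```java
import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.PostMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping("/records")
public class MyRecordController {

    @Autowired
    private MyRecordService myRecordService;

    // POST /records/generate: inserts 1 million records and reports the elapsed time
    @PostMapping("/generate")
    public String generateData() {
        long start = System.currentTimeMillis();
        myRecordService.insertMillionRecords();
        long timeTaken = System.currentTimeMillis() - start;
        return "Inserted 1 million records in " + timeTaken + " milliseconds";
    }

    // GET /records/all: reads every record but returns only the count and elapsed time
    @GetMapping("/all")
    public RecordResponse getAllRecords() {
        long start = System.currentTimeMillis();
        List<MyRecord> records = myRecordService.getAllRecords();
        long timeTaken = System.currentTimeMillis() - start;
        return new RecordResponse(records.size(), timeTaken);
    }

    // Serialized to JSON through its getters
    public static class RecordResponse {

        private int totalRecords;
        private long timeInMillis;

        public RecordResponse(int totalRecords, long timeInMillis) {
            this.totalRecords = totalRecords;
            this.timeInMillis = timeInMillis;
        }

        public int getTotalRecords() {
            return totalRecords;
        }

        public long getTimeInMillis() {
            return timeInMillis;
        }
    }
}
```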
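Because the stack targets Java 17+, `RecordResponse` could also be written as a Java record, and elapsed time can be measured with `System.nanoTime()`, which is meant for measuring intervals and, unlike `System.currentTimeMillis()`, is not affected by wall-clock adjustments. The sketch below assumes a hypothetical `Stopwatch` helper and uses a fixed list as a stand-in for the service call. Jackson has supported records since 2.12, so with Spring Boot 3 the JSON fields should stay `totalRecords` and `timeInMillis`.

```java
import java.util.List;
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// A possible Java 17 variant of RecordResponse: the record provides the
// constructor, accessors, equals/hashCode and toString.
record RecordResponse(int totalRecords, long timeInMillis) {}

// Hypothetical helper that times a unit of work with the monotonic System.nanoTime().
final class Stopwatch {

    private Stopwatch() {}

    // Pairs a result with how long it took to produce, in milliseconds
    record Timed<T>(T result, long millis) {}

    static <T> Timed<T> time(Supplier<T> work) {
        long start = System.nanoTime();
        T result = work.get();
        long elapsedNanos = System.nanoTime() - start;
        return new Timed<>(result, TimeUnit.NANOSECONDS.toMillis(elapsedNanos));
    }

    public static void main(String[] args) {
        // Stand-in for myRecordService.getAllRecords()
        Timed<List<String>> timed = time(() -> List.of("Name_1", "Name_2", "Name_3"));
        RecordResponse response = new RecordResponse(timed.result().size(), timed.millis());
        System.out.println(response);
    }
}
```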

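Note that `findAll()` loads all 1 million documents into a single in-memory `List`, so retrieving them at once can cause memory issues and may exhaust the JVM heap on smaller machines.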
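One way around this is to read the collection page by page, so that only one page is held in memory at a time. The following plain-Java sketch assumes a hypothetical `PageSource` interface, backed here by an in-memory list; in Spring Data MongoDB a similar role could be played by `repository.findAll(PageRequest.of(page, size))`, which this sketch does not call.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical sketch: process a large collection one page at a time instead
// of materialising every record in one List.
final class PagedReader {

    // Hypothetical page source (page index is zero-based)
    @FunctionalInterface
    interface PageSource<T> {
        List<T> fetch(int page, int size);
    }

    private PagedReader() {}

    /** Reads pages until a short or empty page is returned; returns the item count. */
    static <T> long readAll(PageSource<T> source, int pageSize, Consumer<T> handler) {
        long processed = 0;
        int page = 0;
        while (true) {
            List<T> items = source.fetch(page, pageSize);
            items.forEach(handler); // only this page is referenced here
            processed += items.size();
            if (items.size() < pageSize) {
                return processed; // last (possibly empty) page reached
            }
            page++;
        }
    }

    public static void main(String[] args) {
        // In-memory stand-in for the my_records collection
        List<Integer> data = new ArrayList<>();
        for (int i = 1; i <= 25; i++) {
            data.add(i);
        }
        PageSource<Integer> source = (page, size) -> {
            int from = Math.min(page * size, data.size());
            int to = Math.min(from + size, data.size());
            return data.subList(from, to);
        };
        long count = readAll(source, 10, item -> {});
        System.out.println("Processed " + count + " items");
    }
}
```

Offset-based paging has its own cost: the server still has to walk past the skipped documents, so deep pages tend to get slower. A range query on an indexed field such as `_id` is a common alternative.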

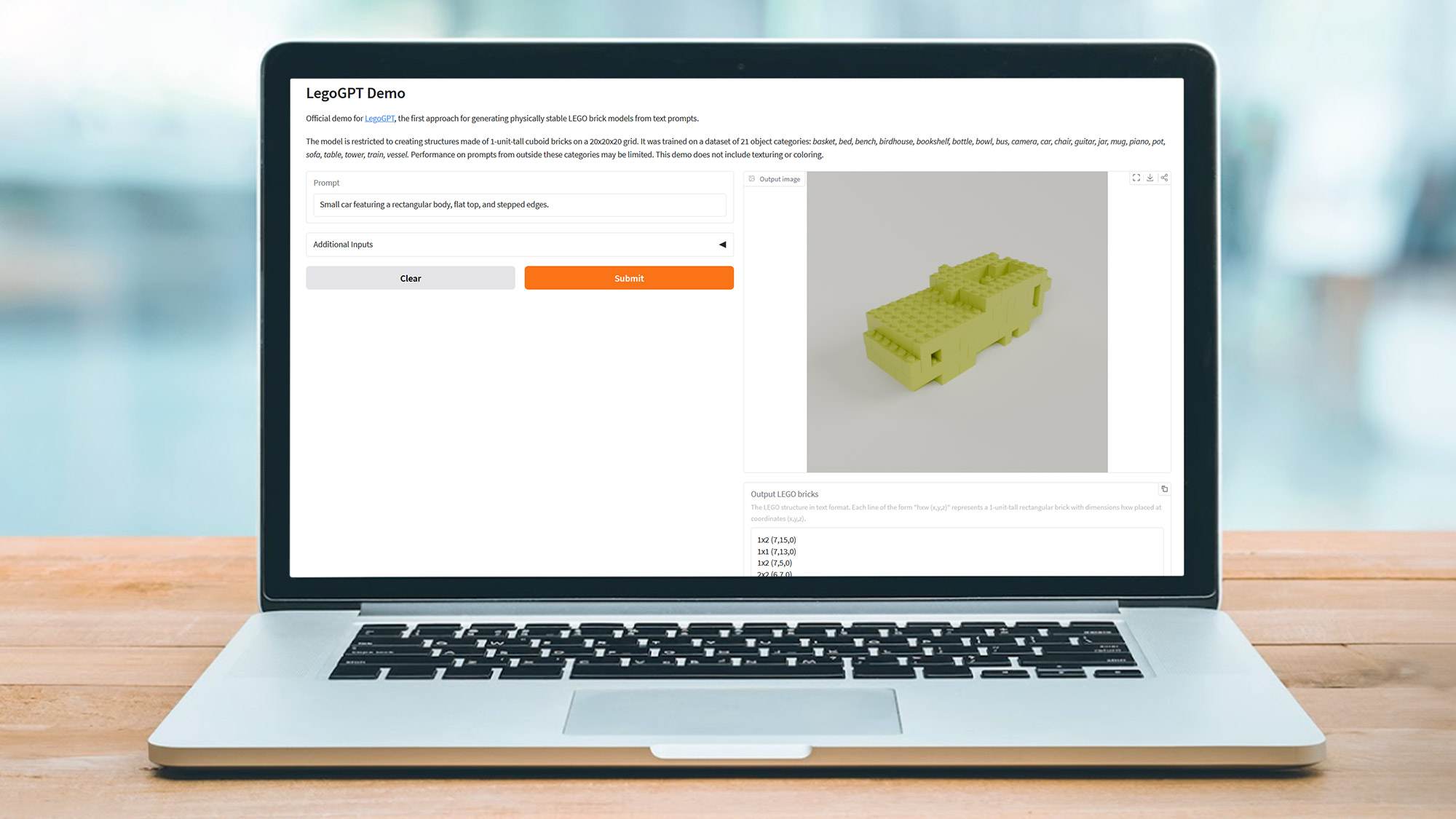

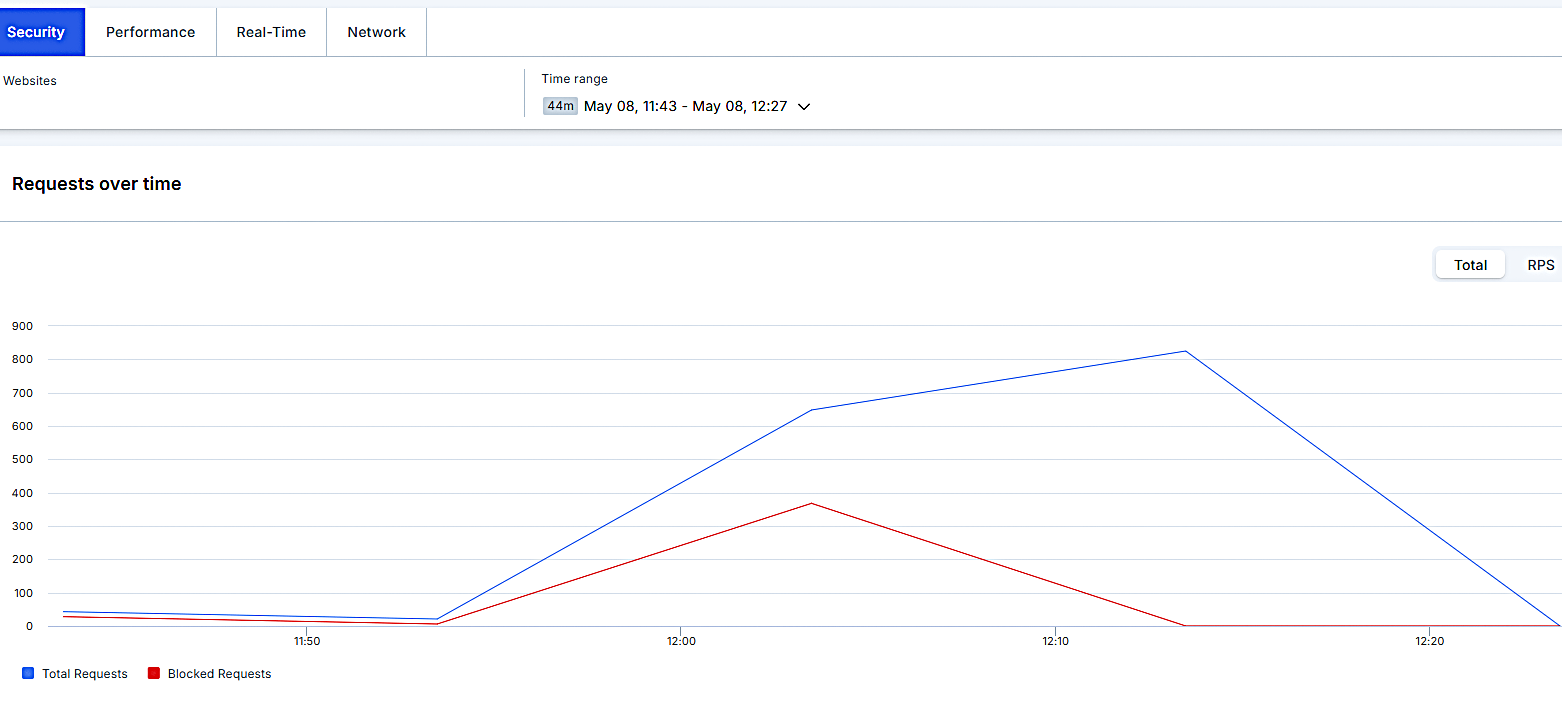

7. Testing with Results

Use Postman to call the endpoints and check the results.

Insert records with timing

`POST /records/generate`

The records are inserted with `MongoRepository.saveAll()` in batches of 10,000 inside the `for` loop:

Inserted 1 million records in 234697 milliseconds, i.e. about 3.9 minutes.

Get records with timing

`GET /records/all`

All 1,000,000 records are read with `MongoRepository.findAll()`; the endpoint returns the record count and the elapsed time as JSON:

- First read: 68234 milliseconds (about 68 seconds)

- Second read: 34458 milliseconds (about 34 seconds)

These timings were measured on a local machine, so they depend on its CPU, memory, and disk and will differ on other hardware. The faster second read is likely due to warmed caches after the first request. Running MongoDB Atlas on a cloud provider such as AWS, Google Cloud, or Azure may reduce retrieval times further.
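To compare the runs on a common scale, the reported timings can be converted into records per second. This small Java program only does arithmetic on the numbers quoted above; the helper name `Throughput.recordsPerSecond` is illustrative.

```java
// Worked arithmetic on the timings reported above (values copied from the results).
final class Throughput {

    private Throughput() {}

    // Records per second for a given number of records and elapsed milliseconds
    static double recordsPerSecond(long records, long millis) {
        return records / (millis / 1000.0);
    }

    public static void main(String[] args) {
        long records = 1_000_000L;
        long insertMs = 234_697L;     // POST /records/generate
        long firstReadMs = 68_234L;   // first GET /records/all
        long secondReadMs = 34_458L;  // second GET /records/all

        System.out.printf("insert: %.2f min, %.0f records/s%n",
                insertMs / 60_000.0, recordsPerSecond(records, insertMs));
        System.out.printf("first read: %.0f records/s%n", recordsPerSecond(records, firstReadMs));
        System.out.printf("second read: %.0f records/s%n", recordsPerSecond(records, secondReadMs));
    }
}
```

This works out to about 4,261 records/s for the insert (3.91 minutes) and about 14,655 and 29,021 records/s for the first and second reads.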

8. Conclusion

We have just built a Spring Boot application capable of inserting and reading 1 million records in MongoDB—a powerful foundation for applications that need to handle high-volume data efficiently.

This approach is especially relevant in real-world scenarios like IoT (Internet of Things), where millions of sensor events are collected from smart devices, vehicles, industrial equipment, and more. Each sensor might send data every few seconds or milliseconds, leading to massive datasets that need fast ingestion and retrieval.

MongoDB's document-based, schema-flexible structure combined with Spring Boot’s ease of development makes this stack an excellent choice for building:

- Smart home platforms

- Industrial IoT systems (IIoT)

- Fleet tracking and telemetry apps

- Health and fitness monitoring platforms

In future posts, we will look at how to optimise the above by using:

- Pagination

- Streaming (MongoDB cursor)

- MongoTemplate with a query and Streamable

- Mongo Atlas
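As a preview of the streaming idea, the sketch below uses only the JDK: `IntStream.rangeClosed(...).mapToObj(...)` produces the same `Name_i`/`i` pairs lazily, and the sum is computed without ever building a 1-million-element list. It is an in-memory analogy, not MongoDB code, and the `Row` record is a hypothetical stand-in for `MyRecord`. With Spring Data, a repository method returning `Stream<MyRecord>` is typically backed by a database cursor and should be closed after use, which is why the stream here is opened in try-with-resources.

```java
import java.util.stream.IntStream;
import java.util.stream.Stream;

// In-memory analogy for cursor-style streaming: records are generated lazily
// and consumed one at a time, so no 1-million-element list is built.
final class StreamingSum {

    // Hypothetical stand-in for the MyRecord document (name, value)
    record Row(String name, int value) {}

    private StreamingSum() {}

    // Lazily produces Name_1..Name_n, mirroring the data inserted by the service
    static Stream<Row> rows(int n) {
        return IntStream.rangeClosed(1, n).mapToObj(i -> new Row("Name_" + i, i));
    }

    // Sums the value field; try-with-resources mirrors how a cursor-backed
    // Stream from a repository should be closed once consumed
    static long sumValues(int n) {
        try (Stream<Row> stream = rows(n)) {
            return stream.mapToLong(Row::value).sum();
        }
    }

    public static void main(String[] args) {
        System.out.println("sum of values = " + sumValues(1_000_000));
    }
}
```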
