How to Use TensorRT to Accelerate Deep Learning Inference on NVIDIA GPUs

Deploying deep learning models to production requires speed and efficiency. TensorRT is a powerful SDK from NVIDIA that can optimize, quantize, and accelerate inference on NVIDIA GPUs. In this article, we’ll walk through how to convert a PyTorch model into a TensorRT-optimized engine and benchmark its performance.

What Is TensorRT?

TensorRT is a high-performance deep learning inference optimizer and runtime library developed by NVIDIA. It applies optimizations such as layer fusion, kernel auto-tuning, and reduced-precision execution (FP16 and INT8), and it supports dynamic tensor shapes. Together these can substantially reduce latency and increase throughput for inference on supported NVIDIA hardware.
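INT8 execution stores each tensor as 8-bit integers plus a floating-point scale, and TensorRT's INT8 quantization is symmetric (the zero point is fixed at 0). The NumPy sketch below illustrates that idea under a simplifying assumption: a single per-tensor scale that maps the largest absolute value to 127. TensorRT itself obtains scales through calibration or explicit quantize/dequantize nodes, so treat `symmetric_int8_quantize` and `dequantize` as hypothetical helpers for intuition, not as TensorRT's algorithm.

```python
import numpy as np


def symmetric_int8_quantize(x: np.ndarray) -> tuple[np.ndarray, float]:
    """Quantize a float array to INT8 using one symmetric, per-tensor scale.

    Simplified illustration: the scale maps max(|x|) to 127. This is not
    TensorRT's calibrator, only the arithmetic behind symmetric quantization.
    """
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    """Map INT8 values back to float32 approximations of the originals."""
    return q.astype(np.float32) * np.float32(scale)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    activations = rng.standard_normal(1000).astype(np.float32)
    q, scale = symmetric_int8_quantize(activations)
    # Rounding error per element is at most about half of one quantization step.
    error = np.abs(dequantize(q, scale) - activations).max()
    print(f"scale={scale:.5f}, max abs error={error:.5f}")
```

The trade-off is visible in the printed error: INT8 keeps only 255 distinct levels, which is why TensorRT needs representative data (calibration) or explicit scales to choose good ranges.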

Installation

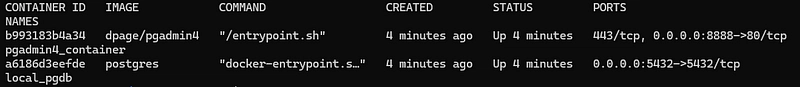

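TensorRT can be installed from NVIDIA's developer site, through NVIDIA's NGC Docker images, or from PyPI. To get started with the PyTorch integration:

```bash
pip install torch torchvision
pip install onnx onnxruntime
pip install tensorrt
pip install pycuda  # used in Step 3 to allocate GPU memory and copy data
```

Make sure your environment includes CUDA, cuDNN, and a compatible NVIDIA driver. The `trtexec` command-line tool used in the following steps ships with the TensorRT tar/deb packages and NVIDIA's TensorRT containers; the pip wheel does not include it.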
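Before moving on, it can help to confirm that every package imports cleanly. The script below is a small sketch that uses only the standard library's `importlib`; the `REQUIRED` list is an assumption based on the packages this article uses, including `pycuda` for Step 3, and `check_packages` is a hypothetical helper name.

```python
import importlib

# Packages used in this walkthrough (assumed list); pycuda is needed for Step 3.
REQUIRED = ["torch", "torchvision", "onnx", "onnxruntime", "tensorrt", "pycuda"]


def check_packages(names):
    """Return {package: version string, or None if the import fails}."""
    report = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except Exception:  # ImportError, or e.g. OSError from missing CUDA libraries
            report[name] = None
        else:
            # Not every package defines __version__, so fall back to "unknown".
            report[name] = getattr(module, "__version__", "unknown")
    return report


if __name__ == "__main__":
    for name, version in check_packages(REQUIRED).items():
        print(f"{name:12s} {version or 'import failed'}")
```

A `tensorrt` import that fails even though the wheel is installed usually points to a CUDA or driver mismatch rather than a missing package.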

Step 1: Export Your PyTorch Model to ONNX

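```python
import torch
import torchvision.models as models

# Load ImageNet-pretrained ResNet-50. IMAGENET1K_V1 is the checkpoint the older
# pretrained=True flag selected; that flag is deprecated in recent torchvision.
model = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1)
model.eval()  # switch batch-norm layers to inference behavior before exporting

# Export traces the model with this example input, so the ONNX graph gets a
# static input shape of (1, 3, 224, 224).
dummy_input = torch.randn(1, 3, 224, 224)

torch.onnx.export(model, dummy_input, "resnet50.onnx",
                  input_names=["input"], output_names=["output"])
```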
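Before handing the file to TensorRT, it is worth checking that the ONNX graph reproduces the PyTorch model. The sketch below is one way to do that with ONNX Runtime's CPU provider (installed earlier). `compare_logits` and `check_onnx_export` are hypothetical helper names, and the tolerances `rtol=1e-3, atol=1e-4` are assumptions you may need to loosen for other models. The heavy imports sit inside `check_onnx_export` so that `compare_logits` depends only on NumPy.

```python
import numpy as np


def compare_logits(reference, candidate, rtol=1e-3, atol=1e-4):
    """Summarize how closely two (batch, classes) logit arrays agree."""
    reference = np.asarray(reference, dtype=np.float32)
    candidate = np.asarray(candidate, dtype=np.float32)
    return {
        "max_abs_diff": float(np.max(np.abs(reference - candidate))),
        "allclose": bool(np.allclose(candidate, reference, rtol=rtol, atol=atol)),
        "top1_match": bool(np.array_equal(reference.argmax(axis=1),
                                          candidate.argmax(axis=1))),
    }


def check_onnx_export(onnx_path="resnet50.onnx"):
    """Run one random input through PyTorch and ONNX Runtime and compare."""
    import onnx
    import onnxruntime as ort
    import torch
    import torchvision.models as models

    onnx.checker.check_model(onnx.load(onnx_path))  # structural validity check

    # Same checkpoint as pretrained=True in the export step.
    model = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1).eval()
    x = torch.randn(1, 3, 224, 224)
    with torch.no_grad():
        torch_out = model(x).numpy()

    session = ort.InferenceSession(onnx_path, providers=["CPUExecutionProvider"])
    ort_out = session.run(["output"], {"input": x.numpy()})[0]
    return compare_logits(torch_out, ort_out)
```

Calling `print(check_onnx_export("resnet50.onnx"))` after the export should report `allclose` and `top1_match` as `True`; if not, fix the export before building an engine.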

Step 2: Optimize the ONNX Model With TensorRT

Use NVIDIA’s `trtexec` tool to convert the model into an engine:

```bash
trtexec --onnx=resnet50.onnx --saveEngine=resnet50.trt --fp16
```

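This builds a TensorRT engine from the ONNX model and serializes it to `resnet50.trt`. The `--fp16` flag lets TensorRT choose FP16 kernels for layers where they are faster, falling back to FP32 elsewhere; input and output tensors stay FP32 by default. By default, the engine is specific to the GPU and TensorRT version used to build it, so build it on the kind of machine that will run inference.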
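In a build pipeline you may want to run this step from Python. The sketch below only assembles the command line and calls `subprocess.run`; it assumes `trtexec` is on your `PATH`, and `trtexec_build_command` and `build_engine` are hypothetical helper names. The optional `shapes` argument fills in trtexec's `--minShapes`, `--optShapes`, and `--maxShapes` flags, which only matter if the model was exported with dynamic axes (for example by passing `dynamic_axes={"input": {0: "batch"}, "output": {0: "batch"}}` to `torch.onnx.export`); the static export from Step 1 does not need them.

```python
import shlex
import subprocess


def trtexec_build_command(onnx_path, engine_path, fp16=True, shapes=None):
    """Assemble a trtexec command line that builds and saves an engine.

    shapes (optional): {"min": {...}, "opt": {...}, "max": {...}}, each mapping an
    input tensor name to a shape tuple, for models exported with dynamic axes.
    """
    cmd = ["trtexec", f"--onnx={onnx_path}", f"--saveEngine={engine_path}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels where TensorRT finds them faster
    if shapes:
        for key, flag in (("min", "--minShapes"), ("opt", "--optShapes"),
                          ("max", "--maxShapes")):
            # trtexec shape syntax: name:d0xd1x..., comma-separated for several inputs
            spec = ",".join(
                f"{name}:{'x'.join(str(d) for d in dims)}"
                for name, dims in shapes[key].items()
            )
            cmd.append(f"{flag}={spec}")
    return cmd


def build_engine(onnx_path, engine_path, **options):
    """Run trtexec; raises subprocess.CalledProcessError if the build fails."""
    cmd = trtexec_build_command(onnx_path, engine_path, **options)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

`build_engine("resnet50.onnx", "resnet50.trt")` runs the same command as the one shown for this step. Passing arguments as a list rather than a shell string avoids quoting problems with paths that contain spaces.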

Step 3: Load and Run the TensorRT Engine

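```python
import tensorrt as trt
import pycuda.driver as cuda
import pycuda.autoinit  # noqa: F401  (creates a CUDA context on import)
import numpy as np

TRT_LOGGER = trt.Logger(trt.Logger.WARNING)
# Keep the runtime alive for as long as the engines it deserializes are in use.
runtime = trt.Runtime(TRT_LOGGER)


def load_engine(path):
    """Deserialize an engine file produced by trtexec --saveEngine."""
    with open(path, "rb") as f:
        engine = runtime.deserialize_cuda_engine(f.read())
    if engine is None:
        raise RuntimeError(f"Failed to deserialize TensorRT engine from {path}")
    return engine


engine = load_engine("resnet50.trt")
context = engine.create_execution_context()

# Prepare host data (random values stand in for a real preprocessed image).
# Input and output stay FP32 even though the engine was built with --fp16.
input_data = np.random.random((1, 3, 224, 224)).astype(np.float32)
output_data = np.empty((1, 1000), dtype=np.float32)  # ResNet-50 logits

# Allocate device buffers sized from the host arrays
d_input = cuda.mem_alloc(input_data.nbytes)
d_output = cuda.mem_alloc(output_data.nbytes)

# Copy the input to the GPU, run inference synchronously, copy the result back.
# The bindings list assumes the input is binding 0 and the output is binding 1,
# matching the single-input, single-output graph exported in Step 1.
cuda.memcpy_htod(d_input, input_data)
context.execute_v2([int(d_input), int(d_output)])
cuda.memcpy_dtoh(output_data, d_output)

print("Predicted class index:", int(output_data.argmax()))
```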
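The code above feeds random data and reads raw logits. To classify a real image, the input must be normalized the way the pretrained torchvision model expects, and the 1000 logits need a softmax to become probabilities. The sketch below assumes the image has already been loaded and resized to 224x224 RGB as a `uint8` NumPy array (resizing is left to a library of your choice); `preprocess` and `top_k` are hypothetical helper names, while the mean and standard deviation are the standard ImageNet constants used by torchvision's pretrained models.

```python
import numpy as np

# Standard ImageNet normalization constants used by torchvision's pretrained models.
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(image_hwc_uint8):
    """Convert a 224x224x3 RGB uint8 image to a normalized (1, 3, 224, 224) batch."""
    x = image_hwc_uint8.astype(np.float32) / 255.0
    x = (x - IMAGENET_MEAN) / IMAGENET_STD  # broadcasts over the channel axis
    x = np.transpose(x, (2, 0, 1))[np.newaxis]  # HWC -> NCHW with batch size 1
    # memcpy_htod needs a contiguous buffer, and transpose returns a strided view.
    return np.ascontiguousarray(x, dtype=np.float32)


def top_k(logits, k=5):
    """Return (class_index, probability) pairs for the k highest-scoring classes."""
    logits = np.asarray(logits, dtype=np.float32).reshape(-1)
    exp = np.exp(logits - logits.max())  # subtract the max for numerical stability
    probs = exp / exp.sum()
    order = np.argsort(probs)[::-1][:k]
    return [(int(i), float(probs[i])) for i in order]
```

With these helpers, `input_data = preprocess(image)` replaces the random array before `cuda.memcpy_htod`, and `top_k(output_data)` lists the most likely ImageNet class indices after `cuda.memcpy_dtoh`.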

Benchmarking Inference Performance

Use the `trtexec` tool to benchmark your model:

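```bash
trtexec --loadEngine=resnet50.trt
```

Precision is fixed when the engine is built, so build flags such as `--fp16` have no effect when loading an existing engine. `trtexec` reports throughput in queries per second and latency statistics (mean, median, and percentiles), along with host-to-device, GPU compute, and device-to-host times; its log also records memory usage while the engine loads. These numbers help you tune your deployment for production use.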

Use Cases

- Accelerating object detection or classification models

- Serving real-time models in cloud-native environments

- Deploying edge AI applications with Jetson devices

Conclusion

TensorRT enables fast and efficient deployment of deep learning models. By exporting models to ONNX, building an optimized engine with TensorRT, and running it on NVIDIA hardware, you can substantially reduce inference latency; benchmarking with `trtexec` shows how large the gain is for your own model. It is a strong fit for production ML pipelines that target low-latency inference.

If this post helped you, consider buying me a coffee: buymeacoffee.com/hexshift
