How to Prevent Hallucinations When Integrating AI Into Your Applications


AI has made its way into production applications across industries—from search assistants to medical diagnosis, and even legal document summarisation. But one persistent problem can tank user trust and business credibility: AI hallucinations.

In this post, we’ll break down what hallucinations are, why they happen, and how you can actively prevent them when integrating large language models (LLMs) into your app.

What Are AI Hallucinations?

AI hallucinations occur when an LLM generates output that sounds confident and fluent, but is factually inaccurate, made-up, or misleading.

Example:

“Albert Einstein was born in 1975 in Canada.”

Confidently wrong.

For mission-critical apps (healthcare, fintech, legal tech), hallucinations can be dangerous. So, how do we deal with this?

Why Do LLMs Hallucinate?

- They don’t “know” facts; they predict the most likely next token (a word or word fragment) based on patterns in their training data.

- Their training data can be incomplete, biased, or outdated.

- They tend to fill gaps with plausible-sounding text instead of saying “I don’t know.”

Strategies to Prevent or Reduce Hallucinations

Here’s a practical guide to mitigating hallucinations in your AI-powered features.

1. Ground the Model with RAG (Retrieval-Augmented Generation)

Instead of asking the LLM to generate answers from its pre-trained knowledge, provide it with contextual documents from your own data.

How it works:

- You embed your internal documents (PDFs, KBs, FAQs, etc.) and store the vectors in an index.

- On each user query, retrieve the most relevant documents and pass them to the LLM along with the question.

- The model is instructed to answer only from what was retrieved.

Tools: LangChain, LlamaIndex, Haystack
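Here is a minimal sketch of the retrieve-then-prompt flow. To stay self-contained, it replaces a real embedding model with a toy bag-of-words vector and cosine similarity. A production setup would use an embedding model and a vector store, for example through one of the tools above. The documents, file names, and helpers (`embed`, `retrieve`, `build_prompt`) are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base; in a real app these are chunks of your PDFs, KB articles, FAQs.
DOCS = {
    "refund_policy.md": "Refunds are issued within 14 days of purchase if the item is unused.",
    "shipping.md": "Standard shipping takes 3 to 5 business days within India.",
    "warranty.md": "All devices carry a one year manufacturer warranty.",
}

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Embed every document once, ahead of query time.
INDEX = {name: embed(text) for name, text in DOCS.items()}

def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Return the k documents most similar to the query as (name, text) pairs."""
    q = embed(query)
    ranked = sorted(INDEX, key=lambda name: cosine(q, INDEX[name]), reverse=True)
    return [(name, DOCS[name]) for name in ranked[:k]]

def build_prompt(query: str) -> str:
    """Place the retrieved context before the question and restrict the model to it."""
    context = "\n".join(f"[{name}] {text}" for name, text in retrieve(query))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

print(build_prompt("Within how many days are refunds issued?"))
```

The resulting string is what you would send to the LLM. The model never sees documents that retrieval did not return, and that restriction is what keeps its answer grounded in your data.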

2. Always Cite Sources

Instruct the model to include citations for its answers. This:

- Encourages the model to stay close to the source

- Makes it easier to verify output

- Builds user trust

Example prompt:

“Answer using only the provided context. Cite the source file name for each answer.”
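Citations can also be checked by machine. The sketch below assumes the model is asked to cite file names in square brackets, a convention chosen here rather than a standard. It flags answers that cite nothing, as well as answers that cite a file never supplied in the context. `build_cited_prompt` and `check_citations` are hypothetical helpers.

```python
import re

# Matches citations written as [file_name]; the bracket convention is an assumption.
CITATION = re.compile(r"\[([^\[\]]+)\]")

def build_cited_prompt(question: str, sources: dict[str, str]) -> str:
    """Label each source with its file name so the model has something concrete to cite."""
    context = "\n".join(f"[{name}] {text}" for name, text in sources.items())
    return (
        "Answer using only the provided context. "
        "Cite the source file name in square brackets for each answer.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )

def check_citations(answer: str, sources: dict[str, str]) -> tuple[bool, set[str]]:
    """Return (ok, unknown). ok is False if the answer cites nothing or cites an unknown file."""
    cited = set(CITATION.findall(answer))
    unknown = cited - set(sources)
    return bool(cited) and not unknown, unknown

sources = {"refund_policy.md": "Refunds are issued within 14 days of purchase."}
print(check_citations("Refunds are issued within 14 days [refund_policy.md].", sources))
print(check_citations("Refunds take 30 days [returns_faq.md].", sources))
```

A citation to a file that was never provided is a strong signal that the model invented the answer. Such a response can be rejected or retried before it reaches the user.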

3. Use Function Calling / Tool Use

LLMs are good at deciding which step to take next, but unreliable at exact lookups and arithmetic. Let them call APIs, query a database, or run calculations through tools instead of guessing.

Example:

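❌ Asking the model directly, "What's 17% of ₹3,49,000?" → risks a plausible but wrong number

✅ Let it call a math API or calculator tool, which returns the exact answer (₹59,330)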
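In this pattern the app does the arithmetic, not the model. The sketch below simulates the tool-call step. It assumes the model returns a tool name plus JSON arguments; real APIs use a similar but provider-specific message format. The app then looks the tool up in a registry and runs it. The `percentage_of` tool, the registry, and the message shape are all hypothetical.

```python
import json
from decimal import Decimal

def percentage_of(percent: str, amount: str) -> str:
    """Exact percentage calculation; Decimal avoids binary floating-point rounding."""
    return str(Decimal(percent) * Decimal(amount) / Decimal(100))

# Whitelist of tools the app exposes to the model (names are illustrative).
TOOLS = {"percentage_of": percentage_of}

def run_tool_call(tool_call_json: str) -> str:
    """Execute a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        raise ValueError(f"Model requested unknown tool: {call['name']}")
    return fn(**call["arguments"])

# Hypothetical tool call a model might emit for "What's 17% of ₹3,49,000?"
model_output = '{"name": "percentage_of", "arguments": {"percent": "17", "amount": "349000"}}'
print(run_tool_call(model_output))  # 59330
```

Only tools listed in `TOOLS` can run, so a model that requests anything else fails loudly. It cannot improvise an answer in that case.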

4. Implement Output Validation Layers

Don't just trust the output blindly. Run it through:

- Regex or schema validation (for expected formats)

- Fact-checking submodels or external verification tools

- Human-in-the-loop review for high-stakes decisions
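The following sketch shows a schema-and-regex validation layer built only on the standard library. It assumes the model was asked to return invoice fields as JSON. The field names, the `INV-YYYY-NNN` ID pattern, and `validate_output` are illustrative. Libraries such as Pydantic or jsonschema can replace the hand-written checks.

```python
import json
import re
from datetime import date

# Expected shape of the model's JSON output (field names are illustrative).
SCHEMA = {"invoice_id": str, "amount": (int, float), "due_date": str}
INVOICE_ID = re.compile(r"INV-\d{4}-\d{3}")

def validate_output(raw: str) -> list[str]:
    """Return a list of problems; an empty list means the output passed every check."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["expected a JSON object"]
    errors = []
    # Schema check: every field present, with the expected type.
    for field, expected in SCHEMA.items():
        if field not in data:
            errors.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            errors.append(f"wrong type for {field}")
    # Format checks: regex for the ID, real calendar date for the due date.
    if isinstance(data.get("invoice_id"), str) and not INVOICE_ID.fullmatch(data["invoice_id"]):
        errors.append("invoice_id does not match INV-YYYY-NNN")
    if isinstance(data.get("due_date"), str):
        try:
            date.fromisoformat(data["due_date"])
        except ValueError:
            errors.append("due_date is not an ISO date (YYYY-MM-DD)")
    return errors

print(validate_output('{"invoice_id": "INV-2024-001", "amount": 349000, "due_date": "2025-05-01"}'))  # []
print(validate_output('{"invoice_id": "2024-001", "due_date": "2025-02-30"}'))
```

An empty list means the output can move forward. Otherwise, you might retry the request, fall back to a safe default, or route it to human review.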

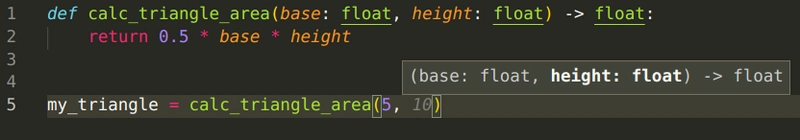

5. Use Temperature and Top-p Tuning

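Lowering the temperature makes sampling less random, so responses become less creative and more repeatable. Top-p (nucleus sampling) pushes in a similar direction. The model samples only from the smallest set of tokens whose combined probability reaches p. For factual tasks, low values can reduce hallucinations, but they cannot supply missing knowledge. If the model's most likely answer is wrong, a low temperature just makes it consistently wrong.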

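```python
from openai import OpenAI

client = OpenAI()  # reads the OPENAI_API_KEY environment variable

your_messages = [{"role": "user", "content": "In what year was Albert Einstein born?"}]

response = client.chat.completions.create(
    model="gpt-4",
    messages=your_messages,
    temperature=0.2,  # low temperature: less random, more repeatable output
    # top_p=0.1,      # alternative knob; OpenAI suggests adjusting temperature or top_p, not both
)
print(response.choices[0].message.content)
```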
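To see what these two settings do, the sketch below applies temperature scaling and top-p filtering to made-up next-token scores for the Einstein example. It illustrates the standard technique and is not any provider's internal implementation. The logit values are invented for illustration.

```python
import math

def softmax_with_temperature(logits: dict[str, float], temperature: float) -> dict[str, float]:
    """Divide logits by T before softmax: T < 1 sharpens the distribution, T > 1 flattens it."""
    scaled = {tok: v / temperature for tok, v in logits.items()}
    m = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def top_p_filter(probs: dict[str, float], p: float) -> dict[str, float]:
    """Keep the most likely tokens until their cumulative probability reaches p, then renormalize."""
    kept, cumulative = {}, 0.0
    for tok, prob in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = prob
        cumulative += prob
        if cumulative >= p:
            break
    total = sum(kept.values())
    return {tok: prob / total for tok, prob in kept.items()}

# Made-up next-token logits for "Albert Einstein was born in ..."
logits = {"1879": 3.0, "1875": 1.5, "1975": 1.0}
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(pr, 3) for tok, pr in probs.items()})
print(top_p_filter(softmax_with_temperature(logits, 1.0), p=0.8))
```

At `T=0.2`, almost all of the probability mass moves to the top token. With `p=0.8`, the unlikely "1975" is never sampled. Both settings only reshape the model's existing distribution, which is why grounding and validation still matter.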

Bonus: Tell It What NOT to Do

Explicitly telling the model what to do when it has no answer helps:

“If you are unsure or don’t find the answer in the context, say: ‘I don’t know based on the given data.’”

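Models often, though not always, follow this instruction, so treat it as one layer of defense rather than a guarantee. It works best combined with RAG, where “the context” is the set of retrieved documents.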
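The sketch below wires the fallback instruction into chat messages and detects the fallback phrase in the reply. That way the app can show a "no answer found" state or escalate, rather than displaying the refusal as a fact. The message layout follows the common role/content chat format. `build_messages` and `is_fallback` are hypothetical helpers.

```python
FALLBACK = "I don't know based on the given data."

SYSTEM_PROMPT = (
    "Answer using only the provided context. "
    f"If you are unsure or don't find the answer in the context, say: '{FALLBACK}'"
)

def build_messages(context: str, question: str) -> list[dict[str, str]]:
    """System message carries the fallback rule; user message carries context and question."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]

def is_fallback(answer: str) -> bool:
    """Detect the agreed refusal phrase, tolerating surrounding quotes and a curly apostrophe."""
    normalized = answer.strip().strip("'\"").replace("\u2019", "'").lower()
    return normalized.startswith(FALLBACK.lower().rstrip("."))

messages = build_messages("Refunds are issued within 14 days of purchase.", "What is the CEO's salary?")
print(is_fallback("I don\u2019t know based on the given data."))  # True
```

Matching an agreed phrase keeps the check simple. A stricter alternative is to ask for structured output with an explicit `answer_found` field and validate it like any other schema.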

Hallucinations won’t go away completely, but you can control and contain them.
