How to Bypass a Cloudflare-Protected Website?


Websites protected by Cloudflare can be some of the most difficult to scrape. Its automatic bot detection requires you to use a powerful web scraping tool to bypass Cloudflare's anti-scraping measures and extract its web page data.

Today, we'll show you how to scrape websites protected by Cloudflare using Python and the open source Cloudscraper library. That being said, while effective in some cases, you'll find that Cloudscraper has some limitations that are hard to avoid.

What Is Cloudflare Bot Management?

Cloudflare is a content delivery and web security company. It offers a web application firewall (WAF) to protect websites from security threats such as cross-site scripting (XSS), credential stuffing, and DDoS attacks.

One of the core systems of Cloudflare WAF is Bot Manager, which mitigates attacks by malicious bots without affecting real users. However, while Cloudflare allows known crawler bots such as Googlebot, it assumes that any unknown bot traffic (including web crawlers) is malicious.

Introduction to Cloudflare WAF

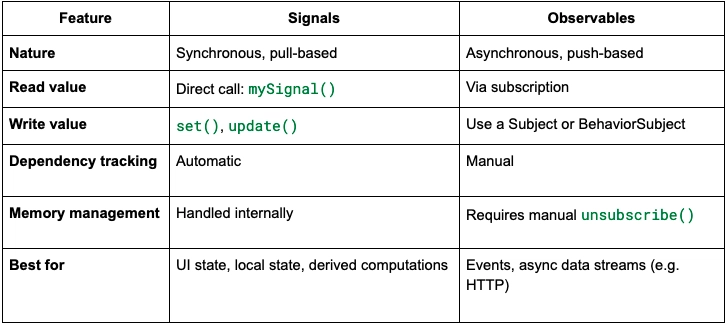

Cloudflare WAF is a key component of Cloudflare's core protection system, designed to detect and block malicious requests by analyzing HTTP/HTTPS traffic in real time. It filters potential threats such as SQL injection, cross-site scripting (XSS), and DDoS attacks based on rule sets, both predefined and custom.

Cloudflare WAF combines an IP reputation database, behavioral analysis models, and machine learning, and can dynamically adjust its protection strategies to respond to new attacks. In addition, the WAF is integrated with Cloudflare's CDN and DDoS protection to provide multi-layered security for websites while maintaining low latency and high availability for legitimate users.

The table below summarizes the WAF's protection layers and how difficult each one is to bypass:

| Protection layer | Technical principle | Difficulty of cracking |
|---|---|---|
| IP reputation detection | Analysis of IP historical behavior (ASN/geolocation) | ★★☆☆☆ |
| JS challenge | Dynamically generate math problems to verify browser environment | ★★★☆☆ |
| Browser fingerprint | Canvas/WebGL rendering feature extraction | ★★★★☆ |
| Dynamic token | Timestamp-based encrypted token verification | ★★★★☆ |
| Behavior analysis | Mouse trajectory/click pattern recognition | ★★★★★ |

How does Cloudflare detect bots?

Cloudflare's bot management system is designed to differentiate between malicious bots and legitimate traffic (such as search engine crawlers). By analyzing incoming requests, it identifies unusual patterns and blocks suspicious activity to maintain the integrity of your sites and applications.

Its detection methods can be either passive or active. Passive bot detection techniques use backend fingerprinting, while active detection techniques rely on client-side analysis.

- Passive bot detection techniques
  - Detecting botnets
  - IP address reputation
  - HTTP request headers
  - TLS fingerprinting
  - HTTP/2 fingerprinting
- Active bot detection techniques
  - CAPTCHAs
  - Canvas fingerprinting
  - Event tracing
  - Environment API queries
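To see why HTTP request headers are such an easy passive signal, it helps to look at what a script sends by default. The sketch below uses only Python's standard library (not Cloudscraper), and the helper name `default_urllib_headers` is made up for illustration. It returns the headers that a bare `urllib` opener attaches to every request; the version suffix depends on your Python installation.

```python
import urllib.request

def default_urllib_headers():
    """Return the headers a bare urllib opener attaches to every request."""
    opener = urllib.request.build_opener()
    # addheaders is a list of (name, value) tuples
    return dict(opener.addheaders)

if __name__ == "__main__":
    headers = default_urllib_headers()
    # The User-agent value starts with "Python-urllib/", which no real browser sends
    print(headers)
```

A server only needs to compare this value against known browser strings to flag the request, which is why scraping tools replace it before doing anything else.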

3 Main Cloudflare Errors

- Cloudflare Error 1015: What It Is and How to Avoid It
- Cloudflare Error 1006, 1007, 1008: What They Are and How to Fix Them
- Cloudflare 403 Denied: Bypass This Issue
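When a scraper is blocked, it is useful to log which of these errors it hit. The following is a minimal sketch, not an official Cloudflare or Cloudscraper API: the function name `classify_block` is hypothetical, and it assumes the block page mentions the code in a form such as "Error 1015" or "error code: 1015".

```python
import re

# Meanings of the Cloudflare error codes listed above
CLOUDFLARE_ERRORS = {
    1006: "access denied: IP address banned",
    1007: "access denied: IP address banned",
    1008: "access denied: IP address banned",
    1015: "rate limited",
}

def classify_block(status_code, body):
    """Return a short label for a blocked response, or None if it looks fine."""
    # Assumption: the page text contains "Error 1015" or "error code: 1015"
    match = re.search(r"error(?: code)?:?\s*(\d{4})", body, flags=re.IGNORECASE)
    if match:
        code = int(match.group(1))
        if code in CLOUDFLARE_ERRORS:
            return f"{code}: {CLOUDFLARE_ERRORS[code]}"
    if status_code == 403:
        return "403: forbidden"
    return None
```

With the `requests` library, you could call it as `classify_block(response.status_code, response.text)`.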

How to Scrape a Cloudflare-Protected Website?

Step 1: Set Up the Environment

First, ensure that Python is installed on your system. Create a new directory to store the code for this project. Next, you need to install cloudscraper and requests. You can do this via pip:

$ pip install cloudscraper requests

Step 2: Make a Simple Request Using requests

Now, we need to scrape data from the page at https://sailboatdata.com/sailboat/11-meter/ . A portion of the page content looks like this:

Let's first try sending a simple GET request using requests to confirm:

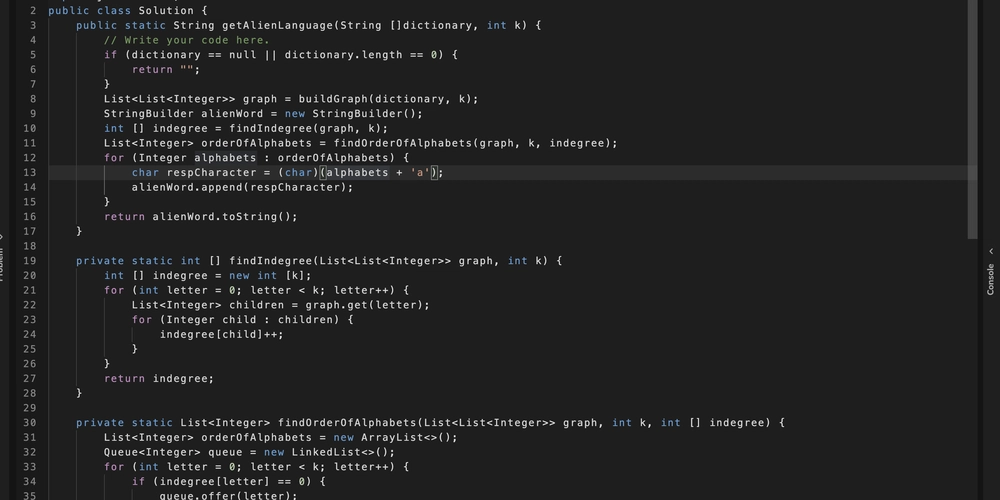

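    import requests

    def sailboatdata():
        # Plain GET request without any Cloudflare handling
        html = requests.get("https://sailboatdata.com/sailboat/11-meter/")
        print(html.status_code)  # 403
        # Save the raw response body for inspection
        with open("response.html", "wb") as f:
            f.write(html.content)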

As expected, the webpage returns a 403 Forbidden status code. We’ve saved the response in a local file. The specific source code of the returned content is shown below:

The target website is protected, and the local file cannot be opened properly. Now, we need to find a way to bypass this.

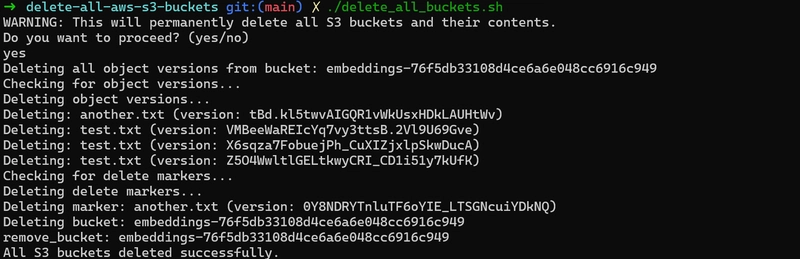
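A script can make the same check without opening the saved file by looking at the response headers. The helper below is a heuristic sketch rather than an official API: the name `looks_like_cloudflare_challenge` is hypothetical, and it relies on the `Server: cloudflare`, `CF-RAY`, and `cf-mitigated: challenge` headers that Cloudflare typically adds to its responses.

```python
def looks_like_cloudflare_challenge(status_code, headers):
    """Heuristically decide whether a response came from Cloudflare's bot protection."""
    # Header names are case-insensitive, so normalize them first
    lowered = {name.lower(): value.lower() for name, value in headers.items()}
    served_by_cloudflare = lowered.get("server") == "cloudflare" or "cf-ray" in lowered
    # Cloudflare marks challenge pages with "cf-mitigated: challenge"
    if lowered.get("cf-mitigated") == "challenge":
        return True
    # Assumption: a blocked request comes back as 403, 429, or 503
    return served_by_cloudflare and status_code in (403, 429, 503)
```

With the request from Step 2, the call would be `looks_like_cloudflare_challenge(html.status_code, html.headers)`.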

Step 3: Scrape Data Using Cloudscraper

Let's use Cloudscraper to send the same GET request to the target website. Here’s the code:

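    import cloudscraper

    def sailboatdata_cloudscraper():
        # create_scraper() returns a requests-compatible session that handles Cloudflare's challenge
        scraper = cloudscraper.create_scraper()
        html = scraper.get("https://sailboatdata.com/sailboat/11-meter/")
        print(html.status_code)  # 200
        # Save the response body so it can be opened in a browser
        with open("response.html", "wb") as f:
            f.write(html.content)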

This time, Cloudflare did not block our request. We open the saved HTML file locally and view it in the browser. The page looks like this:
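Beyond opening the file by hand, a quick programmatic sanity check is to read the page's `<title>` from the saved HTML and confirm that it belongs to the real page rather than a challenge page. This is a small sketch using only the standard library; `TitleParser` and `extract_title` are illustrative names, not part of Cloudscraper.

```python
from html.parser import HTMLParser

class TitleParser(HTMLParser):
    """Collect the text found inside <title> elements."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only keep text that appears between <title> and </title>
        if self._in_title:
            self.title += data

def extract_title(html_text):
    """Return the stripped page title, or an empty string if there is none."""
    parser = TitleParser()
    parser.feed(html_text)
    return parser.title.strip()
```

For the file saved above, you could read `response.html` as text and pass its contents to `extract_title`.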

Advanced Features of Cloudscraper

Built-in CAPTCHA Handling

    import cloudscraper

    # Pass the CAPTCHA provider and your own API key when creating the scraper
    scraper = cloudscraper.create_scraper(
        captcha={
            'provider': '2captcha',
            'api_key': '2captcha_api_key'
        }
    )

Custom Proxy Support

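    import cloudscraper

    # Replace both values with your own proxy address
    proxies = {"http": "your proxy address", "https": "your proxy address"}
    scraper = cloudscraper.create_scraper()
    # The scraper is a requests session, so proxies are set the same way
    scraper.proxies.update(proxies)
    html = scraper.get("https://sailboatdata.com/sailboat/11-meter/")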
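If you have more than one proxy, you may want to rotate them between requests. The sketch below shows one possible way to do that with the standard library; the proxy URLs and the `proxy_rotation` helper are hypothetical placeholders, not part of Cloudscraper.

```python
from itertools import cycle

# Hypothetical proxy URLs; replace them with your own
PROXY_POOL = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
]

def proxy_rotation(pool):
    """Yield requests-style proxy dicts, cycling through the pool forever."""
    for address in cycle(pool):
        yield {"http": address, "https": address}

if __name__ == "__main__":
    rotation = proxy_rotation(PROXY_POOL)
    for _ in range(3):
        # Each dict has the same shape as the proxies dict shown above
        print(next(rotation))
```

Each yielded dict can be passed to `scraper.proxies.update(...)` before the next request.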

Scrapeless Scraping Browser: A More Powerful Alternative to Cloudscraper

Limitations of Cloudscraper

Cloudscraper has some limitations when dealing with certain Cloudflare-protected websites. The most notable issue is that it cannot bypass Cloudflare's bot detection v2 protection mechanism. If you try to scrape data from such a website, you will trigger the following error message:

cloudscraper.exceptions.CloudflareChallengeError: Detected a Cloudflare version 2 challenge, This feature is not available in the opensource (free) version.

In addition, Cloudscraper is also unable to cope with Cloudflare's advanced JavaScript challenges. These challenges often involve complex dynamic calculations or interactions with page elements, which are difficult for automated tools such as Cloudscraper to simulate in order to pass the verification.

Another problem is Cloudflare's rate limiting policy. To prevent abuse, Cloudflare strictly controls the request frequency, and Cloudscraper lacks an effective mechanism to manage these limits, which may cause request delays or even failures.
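One common way to cope with rate limits on the client side is to retry with exponential backoff. The function below is a minimal sketch under stated assumptions: `fetch_with_backoff` is a hypothetical helper, it treats HTTP 429 as the rate-limit signal, and the attempt count and delays are arbitrary starting values.

```python
import time

def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) and retry with exponential backoff while it returns HTTP 429."""
    response = None
    for attempt in range(max_attempts):
        response = fetch(url)
        if response.status_code != 429:
            return response
        # Wait base_delay, then 2x, then 4x, ... before the next attempt
        if attempt < max_attempts - 1:
            sleep(base_delay * 2 ** attempt)
    # Still rate limited after the last attempt; return the final response
    return response
```

With a Cloudscraper session, the call would look like `fetch_with_backoff(scraper.get, url)`. The `sleep` parameter exists only so the delay can be replaced in tests.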

Last but not least, as Cloudflare continues to upgrade its bot detection technology, it is difficult for Cloudscraper, as an open source tool, to keep up with these changes. Over time, the effectiveness and stability of its functionality may gradually decrease, especially in the face of new versions of protection mechanisms.

Why is Scrapeless effective?

Scraping Browser is a high-performance solution for extracting large amounts of data from dynamic websites. It allows developers to run, manage, and monitor headless browsers without dedicated server resources. It is designed for efficient, large-scale web data extraction:

- Simulates real human interaction to bypass advanced anti-crawler mechanisms such as browser fingerprinting and TLS fingerprinting detection.
- Automatically solves multiple types of verification challenges, including cf_challenge, so that crawling is not interrupted.
- Integrates with popular tools such as Puppeteer and Playwright, which simplifies development and lets you start automated tasks with a single line of code.

How to integrate the Scraping Browser to bypass Cloudflare?

Step 1. Create your API token

- Sign up for Scrapeless

- Choose API Key Management

- Click Create API Key to create your Scrapeless API Key.

Scrapeless ensures seamless web scraping.
