How I Built a 100/100 SEO Site Using Raw PHP, MySQL, and No Frameworks (The Nova Stack)

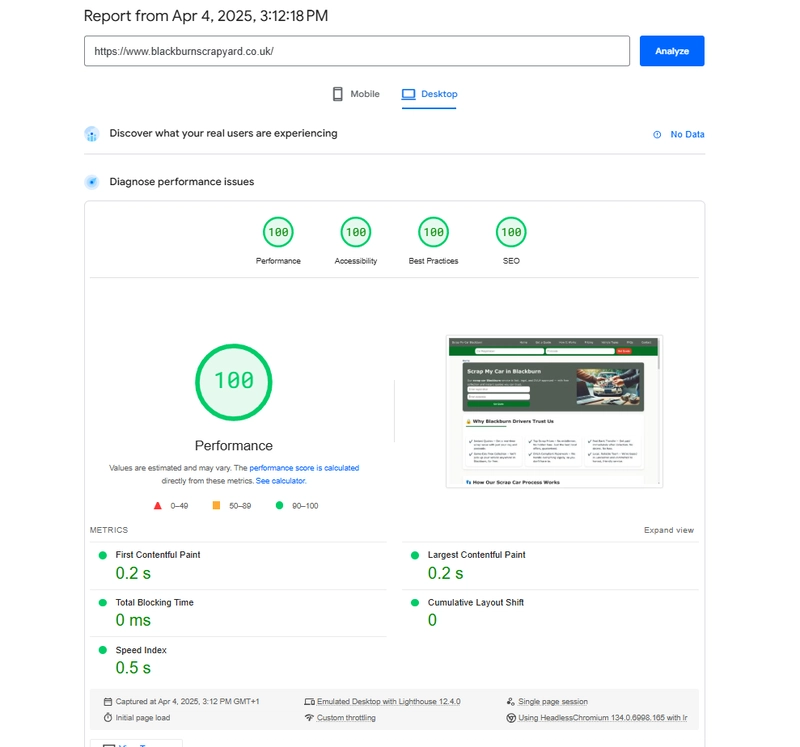

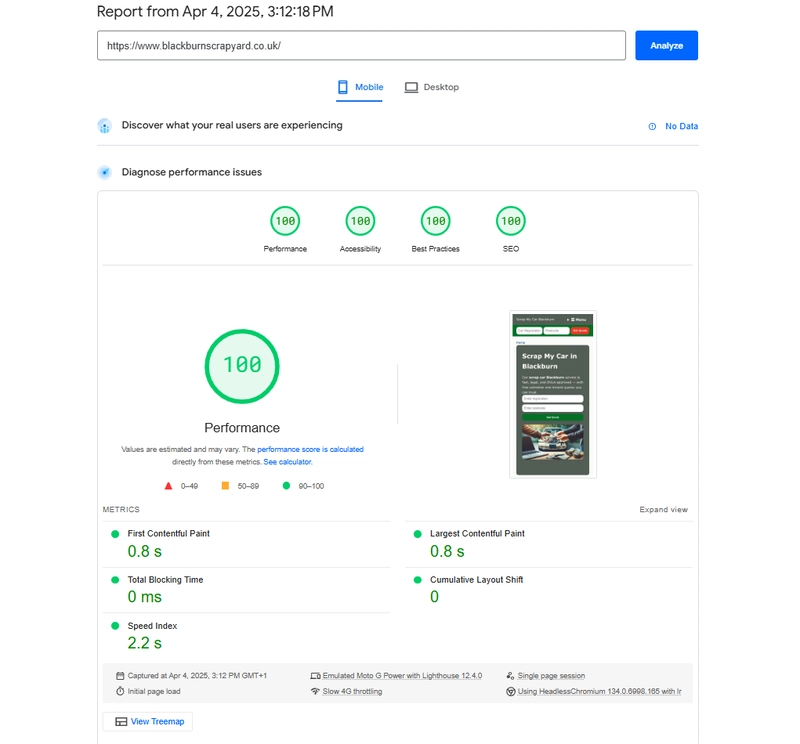


Case Study: Building the Fastest SEO Lead-Gen Site – www.BlackburnScrapyard.co.uk

Introduction

BlackburnScrapyard.co.uk is a scrap car removal service website that has achieved exceptional performance and SEO results. It is among the fastest real-world SEO/lead-generation sites observed, with a perfect 100/100 score in all four Google Lighthouse categories (Performance, Accessibility, Best Practices, and SEO). This case study analyses how the site attained that speed, examining its technical stack (“The Nova Stack”), front-end implementation, structured data for SEO, custom database design, and how it compares to modern web development approaches. The goal is to highlight the technical and strategic decisions that make this site a model of web performance, and why these choices are valuable for developers and even for AI models such as Google’s Gemini.

Blazing Performance – Lighthouse & PageSpeed Metrics

BlackburnScrapyard achieves top-tier performance on both mobile and desktop. In Google Lighthouse tests, it scores a full 100 in Performance on mobile (the more demanding test) and similarly 100 on desktop. All key Web Vitals are well within “good” thresholds, resulting in a snappy user experience:

First Contentful Paint (FCP) and Largest Contentful Paint (LCP) occur in a fraction of a second, meaning users see content almost immediately. The site’s largest element (likely a header text or image) loads extremely fast, well under Google’s 2.5s LCP guideline for good UX.

Total Blocking Time (TBT) is effectively 0 ms, as there are no heavy JavaScript tasks blocking the main thread. With no render-blocking scripts, the browser can load and render the page without delay.

Cumulative Layout Shift (CLS) is 0.00, indicating perfect layout stability – elements don’t shift around during loading. Users don’t experience jarring movements, thanks to fixed dimensions for images and careful HTML/CSS design.

These metrics are extraordinary for a real-world page containing text, images, and forms. For context, the average webpage today loads ~2.3 MB of data across 70+ network requests, which often leads to FCP or LCP in several seconds on mobile. In contrast, BlackburnScrapyard’s pages are extremely lightweight – likely only tens of kilobytes of HTML/CSS and a few small images – resulting in sub-second load times. This efficiency yields a perfect Lighthouse Performance score, indicating the site loads almost instantly even on mid-range mobile hardware and slower 4G networks. Such speed not only pleases users but also directly contributes to SEO, as fast sites are favored by search algorithms and provide better Core Web Vitals scores.

Raw PHP on Shared Hosting – The Nova Stack Back-End

One key to the site’s success is the simplicity of its technical stack. It runs on plain PHP 8 and MySQL 8, following a classic LAMP-style approach with modern optimizations. Importantly, it uses no heavy frameworks or CMS: no WordPress, no Laravel, not even a front-end framework. The code is custom and streamlined. Page logic is handled by simple PHP scripts, each of which includes a database config and runs direct SQL queries to fetch content. Below is a snippet illustrating this raw approach:

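```php
// Fetch a specific page's content from the DB (no ORM or CMS, just a simple query).
// Assumes $pdo is an open PDO connection and the two slugs come from the request URL.
$stmt = $pdo->prepare("SELECT * FROM vehicle_type_page WHERE town_slug = ? AND slug = ? LIMIT 1");
$stmt->execute([$townSlug, $typeSlug]);
$page = $stmt->fetch(PDO::FETCH_ASSOC); // associative array, or false when no row matches
```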

This bare-metal PHP code queries the database directly and then echoes out HTML. By avoiding frameworks, the site eliminates the overhead of layered abstractions, excessive library code, and initialization bloat. Generating a page involves only PHP parsing and a few lightweight SQL calls, both of which are extremely fast operations on a server. The environment is a standard shared hosting setup (MySQL and PHP running on a budget host), yet the site’s performance rivals that of pages on enterprise infrastructure. This demonstrates that efficient code can beat raw server power: even on inexpensive hosting, the site is blazingly fast because the code is minimal and optimized. A page this lightweight does not need expensive servers or a CDN. The approach is nicknamed “The Nova Stack”, implying a newly shining star of the web stack that combines old-school simplicity with modern best practices.
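To make the "query, then echo HTML" step concrete, here is a minimal sketch of what might happen to the fetched row. The article does not show the table's columns, so the `title` and `body` keys and the `renderPage` function are hypothetical names chosen for illustration, not the site's actual code.

```php
<?php
declare(strict_types=1);

/**
 * Turn a fetched DB row into a status code plus an HTML fragment.
 * The column names 'title' and 'body' are hypothetical; the real schema is not shown.
 *
 * @param array<string, mixed>|false $page Result of PDOStatement::fetch(PDO::FETCH_ASSOC)
 * @return array{status: int, html: string}
 */
function renderPage(array|false $page): array
{
    if ($page === false) {
        // fetch() returns false when no row matches, so treat that as "not found".
        return ['status' => 404, 'html' => '<h1>Page not found</h1>'];
    }

    // Escape values on output so stored text cannot inject markup.
    $title = htmlspecialchars((string) $page['title'], ENT_QUOTES, 'UTF-8');
    $body  = htmlspecialchars((string) $page['body'], ENT_QUOTES, 'UTF-8');

    return ['status' => 200, 'html' => "<h1>{$title}</h1>\n<p>{$body}</p>"];
}

// Stand-in for the row returned by $stmt->fetch(PDO::FETCH_ASSOC); placeholder text only.
$result = renderPage(['title' => 'Scrap Vans & Trucks', 'body' => 'Placeholder body text.']);

// In a real request the script would also call http_response_code($result['status']).
echo $result['html'], "\n";
```

Returning the status alongside the markup keeps the function free of side effects, so the same code can be exercised from the command line without a web server.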

The Nova Stack consists of:

Server-Side: Raw PHP (no framework) + MySQL database.

Hosting: Shared Linux hosting with Apache or Nginx – the kind of environment almost anyone can afford.

Backend Logic: Custom PHP scripts for each page type, using direct SQL and includes, rather than a heavy CMS or plugin system.

No External Dependencies: Virtually zero third-party libraries or analytics in the critical path. Even Google Analytics and tag managers, which many sites include, were consciously omitted to keep the Performance score at 100.

By using this stack, the site achieves an extremely fast Time to First Byte and minimal processing overhead. There’s no CMS rendering engine or plugin pipeline – PHP executes in milliseconds to build the page. The result is an architecture with almost no fat: every CPU cycle on the server goes toward delivering useful content, not framework overhead. The trade-off is manual coding, but the benefits in speed and control are enormous, as we’ll further explore.
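The query shown earlier takes a `$townSlug` and a `$typeSlug`, but the article does not say how they are derived. One plausible way, sketched here under the assumption that URLs look like `/town/vehicle-type`, is a single regular expression with no router library. The `parseSlugs` function and the URL layout are illustrative guesses.

```php
<?php
declare(strict_types=1);

/**
 * Split a request path such as "/blackburn/scrap-vans" into its two slugs.
 * The two-segment URL layout is an assumption made for illustration.
 *
 * @return array{town: string, type: string}|null Null when the path does not match.
 */
function parseSlugs(string $path): ?array
{
    // Allow only lowercase letters, digits and hyphens in each segment,
    // with an optional trailing slash. \z anchors at the very end of the string.
    if (preg_match('#^/([a-z0-9-]+)/([a-z0-9-]+)/?\z#', $path, $m) !== 1) {
        return null;
    }

    return ['town' => $m[1], 'type' => $m[2]];
}

// Strip the query string first, then validate the remaining path.
$path  = (string) parse_url('/blackburn/scrap-vans?ref=home', PHP_URL_PATH);
$slugs = parseSlugs($path);
var_dump($slugs);
```

Because the pattern whitelists characters, anything unexpected (uppercase, dots, extra segments) falls through to `null`, which the calling script can treat as a 404 before touching the database.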

Clean, Semantic HTML and Zero Layout Shift

Another pillar of the site’s performance is its well-crafted front-end code. The HTML output is lean, semantic, and accessible. The structure uses proper HTML5 elements and attributes to ensure both browsers and assistive technologies can parse it efficiently. For example:

The pages use a logical hierarchy of headings (h1 for the main title, h2 for subheadings, etc.), and content sections are wrapped in meaningful sectioning elements. In the “Scrap Car Facts” page, each Q&A item is a self-contained element with an appropriate heading and ARIA labels. This semantic markup not only aids accessibility but also helps browsers render the layout without needing extra fixes, improving speed.

A `<main>` element wraps the primary content, which is a best practice for accessibility (screen readers can jump to the main content easily).

Navigation is in a `<nav>` element and includes ARIA attributes where needed.

All images include descriptive alt text.
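As a rough illustration of the markup style described above, the following sketch renders question/answer pairs into a labelled section. The element choices (`<section>`, `<article>`), the `faq-` id scheme, and the sample question are assumptions; the site's real markup may differ.

```php
<?php
declare(strict_types=1);

/**
 * Render question/answer pairs as semantic HTML.
 * Element choices and the id scheme are illustrative assumptions.
 *
 * @param list<array{q: string, a: string}> $faqs
 */
function renderFaqSection(string $heading, array $faqs): string
{
    $e = static fn (string $s): string => htmlspecialchars($s, ENT_QUOTES, 'UTF-8');

    // The section is labelled by its own heading via aria-labelledby.
    $html = sprintf(
        "<section aria-labelledby=\"faq-heading\">\n  <h2 id=\"faq-heading\">%s</h2>\n",
        $e($heading)
    );

    foreach ($faqs as $i => $faq) {
        $id = 'faq-' . ($i + 1);
        // Each item gets one heading level below the section heading.
        $html .= sprintf(
            "  <article aria-labelledby=\"%s\">\n    <h3 id=\"%s\">%s</h3>\n    <p>%s</p>\n  </article>\n",
            $id,
            $id,
            $e($faq['q']),
            $e($faq['a'])
        );
    }

    return $html . "</section>\n";
}

// Placeholder content, not taken from the site.
echo renderFaqSection('Scrap Car Facts', [
    ['q' => 'Is collection free?', 'a' => 'Placeholder answer.'],
]);
```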

The CSS is likely a single, small file – and crucially, the design ensures layout stability. Every image or media element has fixed dimensions or uses CSS that preserves space, so nothing shifts once content loads. This is evidenced by the perfect 0.00 CLS score, meaning the cumulative layout shift is zero. Users don’t see any unexpected movement (no ads or pop-ups injecting late, no DOM manipulation moving elements around).

Layout stability is an often-overlooked aspect of performance. By achieving 0 CLS, the site not only gets a Lighthouse boost but also provides a polished user experience. The developer (Donnie Welsh) accomplished this by sizing images appropriately (no oversized images scaled down in the browser, and no unspecified heights) and by avoiding scripts that alter the layout after load. Additionally, the HTML passes validation: the footer even includes badges linking to the W3C validators for HTML and CSS, indicating that the site’s markup is standards-compliant. This “cleanliness” reduces the chance of browser quirks or rendering delays. In short, BlackburnScrapyard’s front-end is minimal but high-quality: just well-structured HTML and CSS delivering content and style, with no unnecessary widgets. This approach yields both fast rendering and full accessibility out of the box.
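One simple way to guarantee that every image reserves its space is to route all image output through a helper that refuses to emit a tag without dimensions. The sketch below shows the idea; the `imgTag` name, the file path, and the pixel sizes are hypothetical.

```php
<?php
declare(strict_types=1);

/**
 * Build an <img> tag that always carries width and height.
 * With both attributes present, the browser can reserve the image's box
 * before the file arrives, so surrounding content does not move.
 */
function imgTag(string $src, string $alt, int $width, int $height): string
{
    return sprintf(
        '<img src="%s" alt="%s" width="%d" height="%d">',
        htmlspecialchars($src, ENT_QUOTES, 'UTF-8'),
        htmlspecialchars($alt, ENT_QUOTES, 'UTF-8'),
        $width,
        $height
    );
}

// Hypothetical image path and dimensions.
echo imgTag('/img/scrap-car.webp', 'Scrap car being loaded onto a recovery truck', 640, 360), "\n";
```

Making the dimensions required parameters means a missing height becomes a PHP error during development rather than a layout shift in production.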

Schema.org Structured Data – SEO Enhancements

Beyond raw speed, the site is highly optimized for SEO, not only through content but also via structured data. It leverages Schema.org markup (structured data in JSON-LD or microdata format) to help search engines better understand and feature its content. Using schema vocabularies can enable rich search results (like FAQs, local business info, etc.), which increase visibility and click-through rates. BlackburnScrapyard employs several schema implementations:

FAQPage Schema: The “Scrap Car Facts” page (and similar FAQ sections on other pages) is a prime candidate for FAQPage structured data. By marking each question and answer with proper schema (e.g. using
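For reference, FAQPage markup in JSON-LD form can be generated from the same question/answer data that drives the visible page. The sketch below assumes the JSON-LD variant and a hypothetical `faqJsonLd` helper; the article does not confirm which format or code the site actually uses.

```php
<?php
declare(strict_types=1);

/**
 * Build a Schema.org FAQPage JSON-LD <script> block from question/answer pairs.
 *
 * @param list<array{q: string, a: string}> $faqs
 */
function faqJsonLd(array $faqs): string
{
    $data = [
        '@context'   => 'https://schema.org',
        '@type'      => 'FAQPage',
        'mainEntity' => array_map(
            static fn (array $faq): array => [
                '@type'          => 'Question',
                'name'           => $faq['q'],
                'acceptedAnswer' => ['@type' => 'Answer', 'text' => $faq['a']],
            ],
            $faqs
        ),
    ];

    // JSON_HEX_TAG escapes "<" and ">" so answer text cannot close the <script> element early.
    $json = json_encode($data, JSON_UNESCAPED_SLASHES | JSON_HEX_TAG | JSON_THROW_ON_ERROR);

    return '<script type="application/ld+json">' . $json . '</script>';
}

// Placeholder content, not taken from the site.
echo faqJsonLd([
    ['q' => 'Is collection free?', 'a' => 'Placeholder answer.'],
]), "\n";
```

Generating the structured data from the same array as the visible FAQ keeps the two in sync, which matters because search engines expect the marked-up answers to match what users see on the page.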
