How I achieved a 50x speedup transferring large objects from the main JS thread to workers

*starring ArrayBuffer, "transferable objects", and SharedArrayBuffer*

While working on a fun side project, a web app that organizes photos into a printable album template, I needed to resize hundreds of large user-uploaded photos (~4 MB each on average).

The resizing kicks off as soon as the user drops the photos into the app. Since I don't want the app to freeze while the resizing happens, I offloaded the resizing task from the main JS thread to worker threads.

This of course means every image has to be transferred from the main thread to the assigned worker (and back, for actual rendering within the app).

My initial approach was pretty naive: read the File contents as a data URL (which gives a large base64-encoded string) and send that string to the worker via postMessage.

    // obtaining the dataUrl
    const dataUrl = await new Promise((resolve, reject) => {
      const reader = new FileReader();
      reader.onload = () => resolve(reader.result);
      reader.onerror = reject;
      // file is a File object obtained from a file input's
      // change event
      reader.readAsDataURL(file);
    });

    // transferring the dataUrl back and forth 100 times
    function runPerfTest(nTransfers = 100) {
      let counter = 0;
      const startTime = new Date();
      worker.postMessage(dataUrl);
      worker.onmessage = ({ data }) => {
        if (++counter < nTransfers) {
          // bounce the payload straight back to the worker
          worker.postMessage(data);
        } else {
          const endTime = new Date();
          const duration = endTime - startTime;
          console.log(`nTransfers: ${nTransfers}; Duration: ${duration}ms`);
        }
      };
    }

    // in worker.js
    // the worker just sends back whatever data it received
    onmessage = ({ data }) => {
      // strings have .length; ArrayBuffer and SharedArrayBuffer have .byteLength
      console.log(data.length ?? data.byteLength);
      postMessage(data);
    };
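
Part of the cost of the data URL approach comes from the encoding itself: base64 represents every 3 bytes of binary data as 4 characters, so the string is about a third larger than the file before any copying between threads happens. The sketch below just does that arithmetic; `base64Length` is a hypothetical helper name, and the 4,000,000-byte figure is an illustrative stand-in for a ~4 MB photo, not a measurement from the experiment.

```javascript
// Number of base64 characters (including "=" padding) needed to
// encode `nBytes` bytes of binary data.
function base64Length(nBytes) {
  return 4 * Math.ceil(nBytes / 3);
}

// Illustrative size only: roughly one 4 MB photo.
const rawBytes = 4_000_000;
const encodedChars = base64Length(rawBytes);

console.log(encodedChars); // 5333336
console.log((encodedChars / rawBytes).toFixed(2)); // "1.33"
```

A real data URL is slightly longer still, because of the `data:<mime type>;base64,` prefix in front of the encoded payload.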

After learning about ArrayBuffer, transferable objects, and SharedArrayBuffer, and the potential speedups available from using them, I ran an experiment to see exactly what the performance gain would be. The results speak for themselves!

Transferring a 3.8 MB JPEG between the main thread and a worker, back and forth 100 times:

- as a data URL: 1550ms

- as an ArrayBuffer (without transferring ownership): 1150ms

- as an ArrayBuffer (with transferring ownership): 60ms

- as a SharedArrayBuffer: 29ms

Relative to the data URL approach, that is roughly a 26x speedup from transferring ownership and a 53x speedup from using a SharedArrayBuffer.

Here's the code snippet above rewritten to use these concepts:

    // reading the image data into an ArrayBuffer
    const arrayBuffer = await new Promise((resolve, reject) => {
      const reader = new FileReader();
      reader.onload = () => resolve(reader.result);
      reader.onerror = reject;
      // reader.readAsDataURL(file);
      reader.readAsArrayBuffer(file); // changed
    });
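
As an aside, Blob (and therefore File) also has a promise-returning `arrayBuffer()` method, which can stand in for the FileReader wrapper above. This is a sketch of that alternative rather than what the experiment used; `readAsArrayBuffer` is a hypothetical helper name.

```javascript
// Hypothetical helper: read any Blob (a File is a Blob) into an ArrayBuffer.
// Blob.prototype.arrayBuffer() returns a promise, so no FileReader
// callbacks are needed.
async function readAsArrayBuffer(blob) {
  return blob.arrayBuffer();
}

// Usage with an in-memory Blob standing in for a user-selected File.
const demoBlob = new Blob([new Uint8Array([1, 2, 3])]);
readAsArrayBuffer(demoBlob).then((buffer) => {
  console.log(buffer.byteLength); // 3
});
```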

    // reading the image data into a SharedArrayBuffer is a 2-step process
    // 1. read the data into an ArrayBuffer (same as above)
    const arrayBuffer = await new Promise((resolve, reject) => {
      const reader = new FileReader();
      reader.onload = () => resolve(reader.result);
      reader.onerror = reject;
      reader.readAsArrayBuffer(file);
    });
    // 2. copy the contents of the ArrayBuffer into a
    // new SharedArrayBuffer
    const sharedArrayBuffer = new SharedArrayBuffer(arrayBuffer.byteLength);
    const srcUint8Arr = new Uint8Array(arrayBuffer);
    const destUint8Arr = new Uint8Array(sharedArrayBuffer);
    for (let i = 0; i < srcUint8Arr.length; i++) {
      destUint8Arr[i] = srcUint8Arr[i];
    }
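
The byte-by-byte loop in step 2 can also be written with `TypedArray.prototype.set`, which copies the whole source view in one call. A minimal sketch, with `toSharedArrayBuffer` as a hypothetical helper name:

```javascript
// Hypothetical helper: copy an ArrayBuffer's bytes into a new
// SharedArrayBuffer of the same length.
function toSharedArrayBuffer(arrayBuffer) {
  const shared = new SharedArrayBuffer(arrayBuffer.byteLength);
  // set() copies every element of the source view into the destination view.
  new Uint8Array(shared).set(new Uint8Array(arrayBuffer));
  return shared;
}

// Usage with a tiny buffer standing in for the image data.
const source = new Uint8Array([10, 20, 30]).buffer;
const sharedCopy = toSharedArrayBuffer(source);
console.log(new Uint8Array(sharedCopy)[1]); // 20
```

This is still a one-time copy on the main thread; the saving comes afterwards, because posting a SharedArrayBuffer to a worker shares the memory instead of copying it.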

The runPerfTest function for sending an ArrayBuffer or SharedArrayBuffer back and forth is identical to the runPerfTest function defined earlier in this post (in the data URL code section) if you're

- sending the ArrayBuffer without transferring ownership, OR

- sending the SharedArrayBuffer

Sending the ArrayBuffer while also transferring ownership is a special operation: the original variable is no longer usable in the thread that called postMessage, but the program doesn't have to copy the contents of the array buffer between the two threads; it just does some pointer magic instead. To use it, change worker.postMessage(theArrayBuffer); to worker.postMessage(theArrayBuffer, [theArrayBuffer]);
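
To see what "no longer usable" means in practice without setting up a worker, here is a small sketch using `structuredClone`, which runs the same structured clone algorithm as postMessage and accepts the same kind of transfer list. It assumes an environment that provides `structuredClone` with the `transfer` option (current browsers, or Node 17 and later).

```javascript
// An 8-byte buffer standing in for the image data.
const original = new ArrayBuffer(8);
new Uint8Array(original).set([1, 2, 3, 4, 5, 6, 7, 8]);

// Listing the buffer in `transfer` moves its memory instead of copying it,
// just like the second argument of worker.postMessage(buffer, [buffer]).
const moved = structuredClone(original, { transfer: [original] });

console.log(moved.byteLength); // 8: the new owner sees all the data
console.log(original.byteLength); // 0: the original is now detached
```

One caveat for the echo test: the post only shows the main-thread change. For the return trip to avoid a copy as well, the worker would presumably need to call postMessage(data, [data]) too; that is an assumption about the setup, not something stated above.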

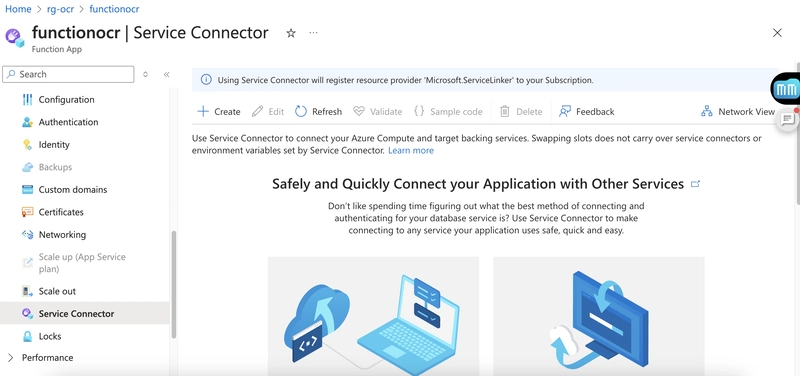

Oh, and one other thing: not just anybody can use something as insanely great as SharedArrayBuffer. For it to be available in your program, the document must be "cross-origin isolated". You achieve this by having the web server add the following headers to its responses when serving the HTML document and the worker.js file:

- "Cross-Origin-Opener-Policy": "same-origin"

- "Cross-Origin-Embedder-Policy": "require-corp"

Explaining cross-origin isolation, and the meaning of the two headers above, is out of scope for this post :)
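
For reference, here is a rough sketch of how those two headers might be applied on the server and checked at runtime. Both function names are hypothetical. `setIsolationHeaders` assumes a response object with a `setHeader(name, value)` method, such as Node's `http.ServerResponse`, and `canUseSharedArrayBuffer` relies on the global `crossOriginIsolated` flag that browsers expose.

```javascript
// The two headers listed above.
const ISOLATION_HEADERS = {
  "Cross-Origin-Opener-Policy": "same-origin",
  "Cross-Origin-Embedder-Policy": "require-corp",
};

// Hypothetical server-side helper: call it on every response that serves
// the HTML document or worker.js.
function setIsolationHeaders(res) {
  for (const [name, value] of Object.entries(ISOLATION_HEADERS)) {
    res.setHeader(name, value);
  }
}

// Hypothetical runtime check: true only when SharedArrayBuffer exists
// and the current context reports itself as cross-origin isolated.
function canUseSharedArrayBuffer() {
  return (
    typeof SharedArrayBuffer === "function" &&
    globalThis.crossOriginIsolated === true
  );
}
```

A check like this makes it possible to fall back to a transferred ArrayBuffer when the page is not isolated.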

Now, time to update my project in light of these findings :)
