How GPT Works Behind the Scenes


Transformers: The Cool Trick Behind Chatty AI

Hey, ever wonder how AI—like the one you’re talking to right now—seems to get you? How it can chat, translate, or even whip up a story without missing a beat? Well, let me spill the beans: it’s all thanks to something called a Transformer. Think of it as AI’s secret weapon for tackling language. I’m not gonna drown you in techy mumbo-jumbo—let’s just break it down like we’re hanging out, maybe sipping some chai (or coffee, no judgment). By the time we’re done, you’ll see why Transformers are such a big deal. Ready? Let’s dive in!

So, What’s a Transformer?

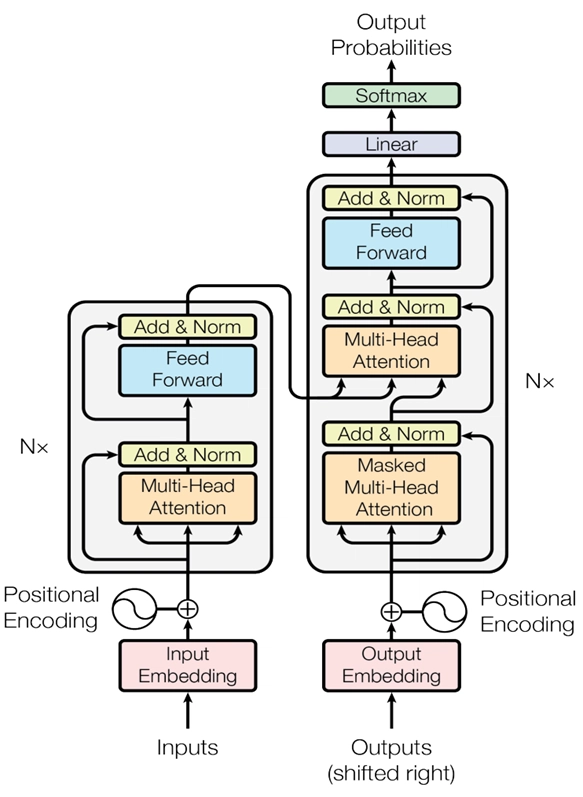

Picture this: you’ve got a buddy who’s a wizard with words. They can translate your ramblings into French, finish your half-baked sentences, or even write you a poem about cats. That’s basically what a Transformer is: an AI model that’s crazy good at language stuff. The original Transformer has two main players: the encoder (the part that “gets” what you’re saying) and the decoder (the part that spits out a response). Together, they’re like a tag team, crunching words with some fancy math to make magic happen. (Fun fact: GPT models keep only the decoder side and use it both to read your prompt and to write the reply.)

To see how all this works graphically, try the interactive Transformer Explainer:

https://poloclub.github.io/transformer-explainer/

The Bits That Make It Tick

1. Encoder: The Listener

The encoder’s like that friend who actually hears you. You say, “I’m craving chai,” and it doesn’t just nod—it digs into the whole sentence, figuring out how “craving” and “chai” vibe together. It turns your words into a secret code (fancy term: vectors) that the AI can play with. And here’s the kicker: there’s usually a stack of encoders, each one sharpening the picture a little more.

- Real Talk: It’s like when you’re telling a story and someone picks up on the juicy details—not just the words, but the point.

Want to see how OpenAI’s models chop text into tokens? Try the Tiktokenizer playground: https://tiktokenizer.vercel.app/

2. Decoder: The Talker

Once the encoder’s got the gist, the decoder jumps in to reply. Say you’re translating “I love chai” into Spanish: it’s the decoder that goes, “Okay, here’s ‘Amo el chai’ for you.” It builds the answer one token (a word or a chunk of one) at a time, like a pro storyteller spinning a yarn.

- Fun Bit: Think of it as the friend who takes your idea and runs with it, turning it into something new.
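Here’s a tiny Python sketch of that one-token-at-a-time loop. Big assumption: the “model” is just a hypothetical hand-written score table (`TOY_SCORES`) that only knows this one translation and only peeks at the last token. A real decoder computes its scores with attention over everything generated so far (and, in a translation model, over the encoder’s vectors for “I love chai”).

```python
# Hypothetical stand-in for a trained decoder: next-token scores keyed by the
# previous token. It only knows one sentence; real decoders compute these scores.
TOY_SCORES = {
    "<start>": {"Amo": 2.5, "Me": 0.4},
    "Amo": {"el": 2.0, "la": 0.3},
    "el": {"chai": 2.2, "té": 0.9},
    "chai": {"<end>": 3.0, ".": 0.5},
}

def next_token_scores(generated):
    # A real decoder would look at the whole `generated` list; this toy only uses the last token.
    return TOY_SCORES.get(generated[-1], {"<end>": 1.0})

def generate(max_tokens=10):
    # Autoregressive loop: predict one token, append it, feed everything back in.
    generated = ["<start>"]
    for _ in range(max_tokens):
        scores = next_token_scores(generated)
        best = max(scores, key=scores.get)  # greedy: take the top-scoring token
        if best == "<end>":
            break
        generated.append(best)
    return " ".join(generated[1:])

print(generate())  # Amo el chai
```

Real systems usually sample from the scores instead of always grabbing the top one, which is where softmax and temperature come in.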

3. Embeddings: Word DNA

Words mean nothing to a machine unless you give them a number vibe. Embeddings do that—turning “chai” into a string of numbers that say, “Hey, I’m a cozy drink!” Words like “tea” might get similar numbers, while “rocket” is way out in left field.

- Why It’s Cool: It’s how the AI knows “chai” and “tea” are buddies, but “chai” and “truck” aren’t.

See it graphically in the TensorFlow Embedding Projector: https://projector.tensorflow.org/
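Here’s the “chai and tea are buddies” idea as a quick Python sketch. The three-number vectors are made up by hand for illustration (real embeddings are learned and run to hundreds or thousands of numbers), and cosine similarity is just one common way to measure how close two embeddings point.

```python
import math

# Hypothetical hand-picked "embeddings"; real models learn these during training.
embeddings = {
    "chai":  [0.9, 0.8, 0.1],
    "tea":   [0.85, 0.75, 0.2],
    "truck": [0.1, 0.05, 0.95],
}

def cosine_similarity(a, b):
    # Close to 1.0 means the vectors point the same way; near 0 means unrelated.
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

print("chai vs tea:  ", round(cosine_similarity(embeddings["chai"], embeddings["tea"]), 3))
print("chai vs truck:", round(cosine_similarity(embeddings["chai"], embeddings["truck"]), 3))
```

With these toy numbers, “chai” and “tea” score near 1 while “chai” and “truck” land far lower.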

4. Positional Encoding: Keeping It Straight

Here’s a weird twist: Transformers don’t read left-to-right like we do; they take in all the words at once. But order matters, right? “Chai, not coffee” isn’t “coffee, not chai.” So they mix in positional encoding: little tags added to each word’s numbers that say, “Yo, I’m word #1,” or “I’m word #3.” That keeps things from getting scrambled.

- Quick Take: It’s like numbering your grocery list so “milk” doesn’t swap places with “cereal.”
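One classic way to make those position tags is the sine/cosine scheme from the original Transformer paper, sketched below in Python. Heads up: it’s just one option (GPT-2, for instance, learns its position vectors during training instead), and either way the tag gets added to each word’s embedding. Positions here start at 0, so “I” is position 0.

```python
import math

def positional_encoding(position, d_model):
    # Sinusoidal scheme: even slots use sine, odd slots use cosine,
    # and each sine/cosine pair runs at its own frequency.
    vec = []
    for i in range(d_model):
        pair = i // 2  # dimensions 2k and 2k+1 share a frequency
        angle = position / (10000 ** (2 * pair / d_model))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec

# Position tags for "I love chai", using 4 numbers per tag.
for pos, word in enumerate(["I", "love", "chai"]):
    print(word, [round(x, 3) for x in positional_encoding(pos, 4)])
```

Every position gets a distinct pattern, so the model can tell word #1 from word #3 even though it sees them all at once.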

5. Self-Attention: The Smart Connector

This is the real MVP. Self-attention lets the AI zoom in on what matters. In “The chai, which is spicy, tastes great,” it links “chai” to “spicy” and “tastes,” so it knows what’s what. It’s like when you’re chatting and suddenly remember a detail from earlier that ties it all together.

- Example: Ever had a convo where someone goes, “Oh yeah, that reminds me…”? That’s self-attention in action.
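Here’s a bare-bones Python sketch of scaled dot-product self-attention, the math behind this step. Simplifying assumption: the toy vectors are used directly as queries, keys, and values, whereas real models first run them through learned weight matrices. The vectors themselves are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability; the result sums to 1.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    # Simplified: inputs double as queries, keys, and values (no learned W_Q, W_K, W_V).
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score this word against every word, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)  # how much this word "looks at" each word
        all_weights.append(weights)
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return outputs, all_weights

# Hypothetical toy vectors: "chai" and "spicy" point in a similar direction.
words = ["chai", "spicy", "tastes"]
vecs = [[1.0, 0.9, 0.0], [0.9, 1.0, 0.1], [0.1, 0.0, 1.0]]
_, weights = self_attention(vecs)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word attends to every word in the sentence (rows sum to 1); with these toy numbers, “chai” leans on “spicy” noticeably more than on “tastes.”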

6. Multi-Head Attention: Extra Brainpower

Take self-attention, then give it a power-up. Multi-head attention is like having a crew of pals all eyeballing your sentence from different angles. One’s checking how “chai” ties to “spicy,” another’s linking it to “tastes.” They team up for the full scoop.

- Why It Rocks: It’s how the AI catches all the little connections we humans take for granted.
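A rough Python sketch of the multi-head idea: chop each vector into slices, let each “head” run attention on its own slice, then glue the results back together. Simplification alert: real multi-head attention gives each head its own learned projection matrices and mixes the glued result through one more learned matrix; slicing is just the cheapest stand-in. The vectors are made up.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(vectors):
    # Scaled dot-product attention, using inputs directly as Q, K, and V (simplified).
    d = len(vectors[0])
    out = []
    for q in vectors:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors])
        out.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return out

def multi_head_attention(vectors, num_heads):
    # Simplified: each head sees its own slice of every vector instead of
    # its own learned projections, and there is no final output projection.
    d = len(vectors[0])
    assert d % num_heads == 0, "vector size must divide evenly across heads"
    size = d // num_heads
    head_outputs = []
    for h in range(num_heads):
        slice_h = [v[h * size:(h + 1) * size] for v in vectors]
        head_outputs.append(attention(slice_h))  # each head attends independently
    # Stitch the heads back together, token by token.
    return [sum((head[t] for head in head_outputs), []) for t in range(len(vectors))]

vecs = [[1.0, 0.0, 0.2, 0.9], [0.9, 0.1, 0.0, 0.1], [0.0, 1.0, 0.3, 1.0]]
out = multi_head_attention(vecs, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

Because each head scores words using different slices, the two heads can end up favoring different connections; with `num_heads=1` this collapses back to plain self-attention.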

7. Softmax: Picking the Winner

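When it’s time to choose the next word, the AI doesn’t just wing it. The model gives every possible next token a raw score (called a logit), and softmax turns those scores into probabilities that add up to 100%. Like, “After ‘I love,’ there’s a 70% chance it’s ‘chai,’ 20% ‘pizza,’ 10% ‘chaos.’” Then it picks one, often by sampling from those odds.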

- Fun Example: It’s like betting on your friend’s next word in a game of finish-the-sentence.
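Here’s softmax in a few lines of Python, followed by the “then it picks” step done as random sampling. The raw scores (logits) are hypothetical, chosen so the odds come out to the 70/20/10 split described above.

```python
import math
import random

def softmax(logits):
    # Turn raw scores into probabilities that are positive and sum to 1.
    m = max(logits)  # subtracting the max avoids overflow without changing the result
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores for the word after "I love".
candidates = ["chai", "pizza", "chaos"]
logits = [3.0, 1.75, 1.05]

probs = softmax(logits)
for word, p in zip(candidates, probs):
    print(f"{word}: {p:.0%}")  # chai: 70%, pizza: 20%, chaos: 10%

# "Then it picks": sample one word according to those probabilities.
rng = random.Random(42)  # fixed seed so runs are repeatable
pick = rng.choices(candidates, weights=probs, k=1)[0]
print("next word:", pick)
```

Sampling (rather than always taking “chai”) is what lets the same prompt produce different replies.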

8. Tokenization: Chop Chop

Before anything happens, the AI chops your sentence into bite-sized bits called tokens. A token could be a whole word like “chai,” a chunk of a longer word, or even “!”, and each one gets its own ID number. It’s like turning your sentence into Lego pieces for the AI to build with.

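- Quick Peek: “I love chai!” becomes something like [“I”, “love”, “chai”, “!”]. Simple, but key. (Real tokenizers may split it a bit differently, for example keeping the leading space attached, as in “ love”.)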
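A toy tokenizer sketch in Python: split into words and punctuation, then hand out IDs. Assumption: this is a simple word-level splitter with a vocabulary built on the fly; real GPT tokenizers use byte-pair encoding with a fixed, pre-learned vocabulary, so their pieces and IDs look different.

```python
import re

def tokenize(text):
    # Toy word-level tokenizer: runs of word characters, or single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text)

def to_ids(tokens, vocab):
    # Give each unseen token the next free integer ID (hypothetical, growing vocabulary).
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return ids

vocab = {}
tokens = tokenize("I love chai!")
ids = to_ids(tokens, vocab)
print(tokens)  # ['I', 'love', 'chai', '!']
print(ids)     # [0, 1, 2, 3]
```

Feeding in “chai!” afterwards reuses the existing IDs (2 and 3), which is the whole point: the same piece always maps to the same number.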

The Creativity Dial: Temperature

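Okay, let’s talk about something fun: temperature. It’s like the AI’s mood setting. Under the hood, the model’s raw scores get divided by the temperature before softmax, so a low temperature sharpens the odds toward the top pick and a high one flattens them out. Low temperature? It plays it safe, sticking to obvious answers. High temperature? It gets wild, maybe too wild. Here’s how it shakes out: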

- Low (0.1): “I love chai.” Predictable, solid.

- High (1.5): “I love chai-flavored moonbeams.” Uh, what?

- Middle (0.7): “I love chai and cozy vibes.” Just right.

Think of it like cooking: low temp is following the recipe; high temp is tossing in whatever’s in the fridge.
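Here’s that dial as a Python sketch, using the three temperatures from the list above. The candidate words and their raw scores are hypothetical.

```python
import math

def softmax_with_temperature(logits, temperature):
    # Divide raw scores by the temperature, then apply softmax.
    # T < 1 sharpens the distribution (safer picks); T > 1 flattens it (wilder picks).
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

candidates = ["chai", "pizza", "chaos"]
logits = [3.0, 1.75, 1.05]  # hypothetical scores

for t in (0.1, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, {w: round(p, 3) for w, p in zip(candidates, probs)})
```

At 0.1 nearly all the probability piles onto “chai”; at 1.5 “pizza” and “chaos” get a real shot.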

How It All Comes Together

Let’s run through it real quick, like a movie montage:

- You say: “I want chai.”

- AI chops it: [“I”, “want”, “chai”].

- Turns it to numbers: token IDs, then vectors (embeddings), baby!

- Adds order: position tags 1, 2, 3 get mixed into those vectors.

- Encoder listens: “Oh, they want chai.”

- Decoder talks: Starts building a reply.

- Picks words: Softmax and temperature team up.

- You get: “Chai sounds perfect!”

Final Vibes

So, there you go—Transformers in a nutshell! They’re the reason AI can chat, translate, or dream up wild stories. No robotic lectures here—just the good stuff, explained like we’re buddies. If you’re still curious (or confused), hit me up—I’m always up for round two!
