How does VolumeMount work in Kubernetes? What happens in the backend?

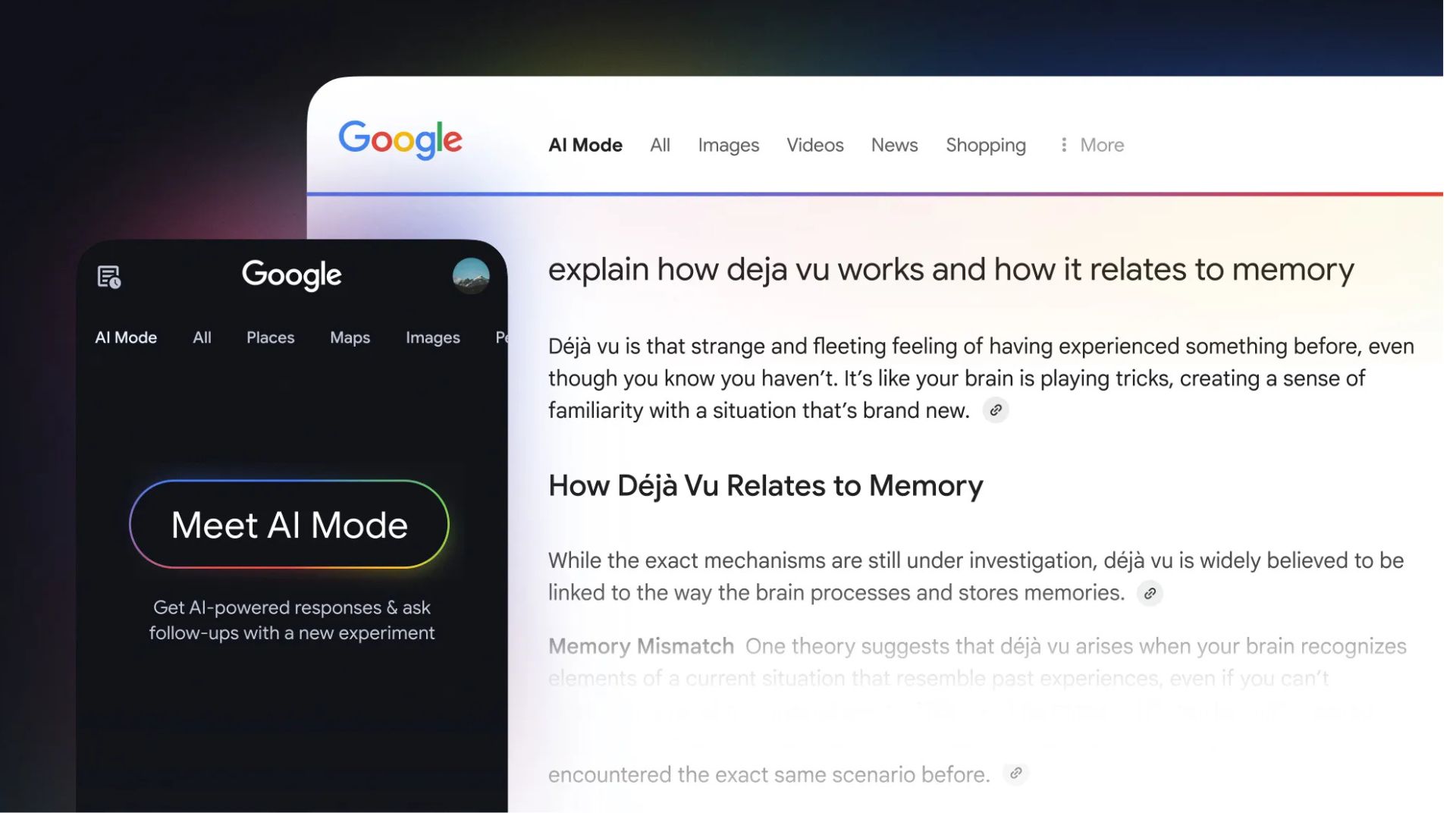

Understanding Kubernetes VolumeMounts, PersistentVolumeClaims, and StorageClasses (with YAML Examples)

Persistent storage is essential for stateful applications in Kubernetes. To manage storage dynamically and reliably, Kubernetes uses a combination of VolumeMounts, PersistentVolumeClaims (PVCs), and StorageClasses. In this post, we’ll demystify how these components work together and provide practical YAML examples to help you implement them in your clusters.

1. VolumeMounts: Connecting Storage to Containers

A VolumeMount specifies where a volume should appear inside a container. It references a volume defined at the Pod level and maps it to a directory inside the container.

YAML Example:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: volume-mount-example
spec:
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - name: my-storage
          mountPath: /usr/share/nginx/html
  volumes:
    - name: my-storage
      emptyDir: {} # Ephemeral storage for demonstration
```

How it works:

- The volume `my-storage` is mounted inside the `app` container (which runs the `nginx` image) at `/usr/share/nginx/html`.
- Any files written to that path are stored in the `emptyDir` volume, which is deleted when the Pod is removed.
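Because a `volumeMounts` entry refers to a Pod-level volume only by `name`, a typo on either side causes the API server to reject the Pod. The sketch below illustrates that lookup on a Pod manifest that has already been loaded into a Python dict (for example with a YAML parser). `undefined_mounts` is a hypothetical helper, not part of `kubectl` or any client library, and it checks only the name reference (init containers are ignored).

```python
from typing import Any, Dict, List


def undefined_mounts(pod: Dict[str, Any]) -> List[str]:
    """Return "container: mount" for volumeMounts with no matching Pod volume.

    Hypothetical helper: it mirrors only the name lookup, not the
    API server's complete validation of a Pod spec.
    """
    spec = pod.get("spec", {})
    declared = {vol["name"] for vol in spec.get("volumes", [])}
    missing = []
    for container in spec.get("containers", []):  # initContainers ignored
        for mount in container.get("volumeMounts", []):
            if mount["name"] not in declared:
                missing.append(f'{container["name"]}: {mount["name"]}')
    return missing


# The Pod from the YAML example above, written as a Python dict.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "volume-mount-example"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "nginx",
                "volumeMounts": [
                    {"name": "my-storage", "mountPath": "/usr/share/nginx/html"}
                ],
            }
        ],
        "volumes": [{"name": "my-storage", "emptyDir": {}}],
    },
}

print(undefined_mounts(pod))  # []
```

Renaming the volume to `my-storage2` in only one of the two places would make the helper report `app: my-storage`.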

2. PersistentVolumeClaims and PersistentVolumes: Decoupling Storage from Pods

- PersistentVolume (PV): A cluster-scoped resource representing a piece of storage, created by an administrator or dynamically through a StorageClass.
- PersistentVolumeClaim (PVC): A namespaced request for storage by a user or application.

YAML Example:

```yaml
# PersistentVolume (PV)
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-example
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: /mnt/data # For demo purposes; use real storage in production
---
# PersistentVolumeClaim (PVC)
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-example
spec:
  storageClassName: "" # Bind only to PVs with no class; prevents the default StorageClass from being applied
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

How it works:

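- The PV is a piece of storage available in the cluster.
- The PVC requests 1Gi of storage with `ReadWriteOnce` access.
- Kubernetes binds the PVC to the PV if their requirements match: the PV’s capacity is at least the requested size, it offers every requested access mode, and both have the same StorageClass.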
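The matching rule can be sketched as a small simulation. This is a simplified model, not the PV controller’s code: it considers only capacity, access modes, and StorageClass name (the real controller also checks label selectors, `volumeMode`, and node affinity), and, like the controller, it picks the smallest unbound PV that is large enough so big volumes are not wasted on small claims. The names `PV`, `PVC`, `find_match`, and `bind` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Set

# Hypothetical helper: integer quantities with binary suffixes only
# (real Kubernetes quantities also accept "G", "500M", "1.5Gi", ...).
_BINARY_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> int:
    """Convert a quantity such as '10Gi' into bytes."""
    for suffix, factor in _BINARY_UNITS.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    return int(q)  # plain byte count


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: Set[str]
    storage_class: str = ""
    claim_ref: Optional[str] = None  # set once bound, like spec.claimRef


@dataclass
class PVC:
    name: str
    request: str
    access_modes: Set[str]
    storage_class: str = ""


def find_match(pvc: PVC, pvs: List[PV]) -> Optional[PV]:
    """Return the smallest unbound PV that satisfies the claim, or None."""
    candidates = [
        pv
        for pv in pvs
        if pv.claim_ref is None
        and pv.storage_class == pvc.storage_class
        and pvc.access_modes <= pv.access_modes  # requested modes are a subset
        and parse_quantity(pv.capacity) >= parse_quantity(pvc.request)
    ]
    return min(candidates, key=lambda pv: parse_quantity(pv.capacity), default=None)


def bind(pvc: PVC, pvs: List[PV]) -> Optional[PV]:
    """Bind the claim to its best match; None means the claim stays Pending."""
    pv = find_match(pvc, pvs)
    if pv is not None:
        pv.claim_ref = pvc.name
    return pv


pvs = [PV("pv-big", "100Gi", {"ReadWriteOnce"}), PV("pv-example", "1Gi", {"ReadWriteOnce"})]
print(bind(PVC("pvc-example", "1Gi", {"ReadWriteOnce"}), pvs).name)  # pv-example
```

A second 1Gi claim would then bind to `pv-big`, because `pv-example` already carries a `claim_ref`.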

3. StorageClasses: Automating and Tiering Storage

A StorageClass defines how to provision storage dynamically. It specifies the provisioner (such as a cloud provider’s CSI driver) and parameters for the storage backend.

YAML Example:

```yaml
# Example StorageClass for AWS EBS
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-csi-storageclass # This name is used by PersistentVolumeClaim
provisioner: ebs.csi.aws.com # Example for AWS EBS CSI provisioner
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3 # Or your preferred EBS volume type
  iops: "5000" # Example IOPS
  throughput: "250" # Example Throughput
  encrypted: "true" # Example encryption
```

How it works:

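- When a PVC requests `storageClassName: ebs-csi-storageclass`, Kubernetes uses this StorageClass to dynamically provision a new PV.
- Because `volumeBindingMode` is `WaitForFirstConsumer`, provisioning is delayed until a Pod that uses the PVC is scheduled, so the EBS volume is created in that Pod’s Availability Zone.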
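The effect of `volumeBindingMode` can be sketched as a toy model under stated assumptions: `provision` is a hypothetical stand-in for the CSI provisioner, zones are plain strings, and with `Immediate` binding the zone is simply the first entry of a candidate list, whereas a real provisioner chooses among the allowed topologies. The point is the ordering: with `WaitForFirstConsumer`, nothing is created until a Pod is scheduled, and the disk then lands in that node’s zone.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Volume:
    size: str
    zone: str


@dataclass
class Claim:
    name: str
    size: str
    binding_mode: str  # "Immediate" or "WaitForFirstConsumer"
    volume: Optional[Volume] = None


def provision(claim: Claim, zone: str) -> Volume:
    """Hypothetical stand-in for a CSI provisioner creating a zonal disk."""
    claim.volume = Volume(size=claim.size, zone=zone)
    return claim.volume


def on_claim_created(claim: Claim, candidate_zones: List[str]) -> None:
    # Immediate: provision right away, without knowing where a Pod will run.
    if claim.binding_mode == "Immediate":
        provision(claim, candidate_zones[0])
    # WaitForFirstConsumer: do nothing yet; the claim stays Pending.


def on_pod_scheduled(claim: Claim, node_zone: str) -> bool:
    """Return True if a Pod on a node in node_zone can use the claim's disk."""
    if claim.volume is None:
        # Delayed binding: create the disk in the scheduled node's zone.
        provision(claim, node_zone)
    return claim.volume.zone == node_zone


late = Claim("dynamic-pvc", "10Gi", "WaitForFirstConsumer")
on_claim_created(late, ["us-east-1a", "us-east-1b"])
print(late.volume)                            # None: still Pending
print(on_pod_scheduled(late, "us-east-1b"))   # True

early = Claim("early-pvc", "10Gi", "Immediate")
on_claim_created(early, ["us-east-1a", "us-east-1b"])
print(on_pod_scheduled(early, "us-east-1b"))  # False: zone mismatch
```

In a real cluster the scheduler takes the PV’s zone into account, so the `Immediate` mismatch usually shows up as a Pod stuck in `Pending` rather than as a failed mount.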

4. Bringing It All Together: Pod Using a PVC and StorageClass

Here’s how you’d use a PVC (which in turn uses a StorageClass) in a Pod:

YAML Example:

```yaml
# PersistentVolumeClaim using a StorageClass
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-pvc # This name is used by the Pod in its "volumes" section
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: ebs-csi-storageclass # See the StorageClass name above
---
# Pod mounting the PVC
apiVersion: v1
kind: Pod
metadata:
  name: my-pod
spec:
  containers:
    - name: my-container
      image: nginx:latest
      volumeMounts:
        - name: my-ebs-volume # Must match the name in the volumes section
          mountPath: /mnt/data
  volumes:
    - name: my-ebs-volume # Must match the name in the volumeMounts section
      persistentVolumeClaim:
        claimName: dynamic-pvc # See the PersistentVolumeClaim name above
```

Workflow:

- The PVC (`dynamic-pvc`) requests 10Gi of storage using the `ebs-csi-storageclass` StorageClass.
- Kubernetes dynamically provisions a PV using the StorageClass; because of `WaitForFirstConsumer`, this happens only after the Pod that uses the PVC has been scheduled.
- The Pod references the PVC in its `volumes` section.
- The PVC is mounted inside the container at `/mnt/data`.
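The three objects are linked only by names, so following the chain explicitly is a quick way to spot a broken reference. A minimal sketch, assuming the objects are already parsed into Python dicts and the PVC lives in the Pod’s namespace (PVCs are namespaced, StorageClasses are cluster-scoped); `resolve_chain` is a hypothetical helper, not a Kubernetes API.

```python
from typing import Any, Dict, List


def resolve_chain(pod: Dict[str, Any], pvcs: Dict[str, Dict[str, Any]],
                  classes: Dict[str, Dict[str, Any]]) -> List[str]:
    """Describe each PVC-backed volume: mountPath -> PVC -> class -> provisioner."""
    spec = pod["spec"]
    # mountPath for every volume name used by any container
    paths = {
        m["name"]: m["mountPath"]
        for c in spec["containers"]
        for m in c.get("volumeMounts", [])
    }
    lines = []
    for vol in spec.get("volumes", []):
        claim_ref = vol.get("persistentVolumeClaim")
        if claim_ref is None:
            continue  # not PVC-backed (emptyDir, configMap, ...)
        pvc = pvcs.get(claim_ref["claimName"])
        if pvc is None:
            lines.append(f'{vol["name"]}: PVC {claim_ref["claimName"]} not found')
            continue
        # An unset class would normally be filled in by the default StorageClass.
        sc_name = pvc["spec"].get("storageClassName")
        sc = classes.get(sc_name)
        provisioner = sc["provisioner"] if sc else "<no such StorageClass>"
        lines.append(
            f'{paths.get(vol["name"], "<not mounted>")} -> {claim_ref["claimName"]}'
            f" -> {sc_name} -> {provisioner}"
        )
    return lines


pod = {"spec": {
    "containers": [{"name": "my-container",
                    "volumeMounts": [{"name": "my-ebs-volume", "mountPath": "/mnt/data"}]}],
    "volumes": [{"name": "my-ebs-volume",
                 "persistentVolumeClaim": {"claimName": "dynamic-pvc"}}]}}
pvcs = {"dynamic-pvc": {"spec": {"storageClassName": "ebs-csi-storageclass"}}}
classes = {"ebs-csi-storageclass": {"provisioner": "ebs.csi.aws.com"}}
print(resolve_chain(pod, pvcs, classes))
# ['/mnt/data -> dynamic-pvc -> ebs-csi-storageclass -> ebs.csi.aws.com']
```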

5. Lifecycle and Backend Process

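- Provisioning: If dynamic provisioning is used, the StorageClass’s provisioner creates the storage when a PVC is created (or, with `WaitForFirstConsumer`, once the first Pod using the PVC is scheduled).
- Binding: The PVC is bound to a PV (either static or dynamically provisioned).
- Attaching: For network block storage such as EBS, the attach/detach controller, working through the CSI driver, attaches the disk to the node where the Pod was scheduled.
- Mounting: The kubelet on that node then mounts the storage into the container’s filesystem at the specified `mountPath`.
- Reclaiming: When the PVC is deleted, the PV’s reclaim policy (`persistentVolumeReclaimPolicy`) determines whether the storage is deleted, retained, or recycled. `Recycle` is deprecated, and dynamically provisioned PVs inherit the StorageClass’s `reclaimPolicy`, which defaults to `Delete`.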
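The Retain/Delete branch of this lifecycle can be modeled as a tiny state machine. The class and method names are hypothetical, and the model leaves out details of the real controller (finalizers, the `Failed` phase, the deprecated `Recycle` policy). It shows why a retained PV ends up `Released` instead of becoming available to a new claim: it still carries the old `claimRef`.

```python
from enum import Enum
from typing import Optional


class Phase(Enum):
    AVAILABLE = "Available"
    BOUND = "Bound"
    RELEASED = "Released"
    DELETED = "(deleted)"  # not a real phase: the PV object is gone


class PersistentVolume:
    """Toy model of a PV's lifecycle; not the real controller."""

    def __init__(self, name: str, reclaim_policy: str = "Delete") -> None:
        if reclaim_policy not in ("Retain", "Delete"):
            # Recycle is deprecated and intentionally left out of this sketch.
            raise ValueError(f"unsupported reclaim policy: {reclaim_policy}")
        self.name = name
        self.reclaim_policy = reclaim_policy
        self.phase = Phase.AVAILABLE
        self.claim_ref: Optional[str] = None

    def bind(self, claim: str) -> None:
        if self.phase is not Phase.AVAILABLE:
            raise RuntimeError(f"{self.name} is {self.phase.value}, cannot bind")
        self.claim_ref = claim
        self.phase = Phase.BOUND

    def claim_deleted(self) -> None:
        """Apply the reclaim policy once the bound PVC is deleted."""
        if self.phase is not Phase.BOUND:
            return
        if self.reclaim_policy == "Retain":
            # Data and claimRef are kept; an admin must clean up manually.
            self.phase = Phase.RELEASED
        else:
            # Delete: the PV object and the backing disk are removed.
            self.phase = Phase.DELETED


kept = PersistentVolume("pv-retain", reclaim_policy="Retain")
kept.bind("pvc-example")
kept.claim_deleted()
print(kept.phase.value)  # Released
```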

Summary Table

| Component | Purpose | Defined By | Key Fields |
|---|---|---|---|
| VolumeMount | Mounts storage inside a container | Pod spec (user) | name, mountPath |
| PersistentVolume | Cluster storage resource | Admin/Kubernetes | capacity, accessModes |
| PersistentVolumeClaim | Request for storage | User | resources, accessModes, storageClassName |
| StorageClass | Storage “profile” for dynamic provisioning | Admin | provisioner, parameters |

Kubernetes Storage: Common Issues, Troubleshooting, and Limitations

Building on the fundamentals of VolumeMounts, PersistentVolumeClaims, and StorageClasses, it’s crucial to understand the real-world challenges that teams face when running stateful workloads in Kubernetes. Here, we’ll cover common issues, troubleshooting strategies, and key limitations you should be aware of.

Common Issues with Kubernetes Storage

1. PersistentVolumeClaim (PVC) Not Bound

- The PVC remains in a `Pending` state and is not bound to any PersistentVolume (PV).
- Causes include mismatched storage size, access modes, or StorageClass between the PVC and available PVs, or insufficient underlying storage resources.

2. Volume Mount Failures

- Pods may fail to start with errors related to mounting the volume.

- This can be due to incorrect volume definitions, unavailable storage backends, or node failures.

3. Storage Plugin/CSI Issues

- Problems with the Container Storage Interface (CSI) driver, such as outdated versions or plugin crashes, can prevent volumes from being provisioned or mounted.

4. Pod Stuck in Pending or CrashLoopBackOff

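- Storage-related configuration errors typically leave Pods in `Pending` (for example, an unbound PVC or no node that satisfies the volume’s zone) or `ContainerCreating` (attach or mount failures). A Pod can also end up in `CrashLoopBackOff` when the container starts but the application fails because the expected data or file permissions on the mounted volume are missing.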

5. Deletion and Reclaim Policy Problems

- PVs may not be deleted or released as expected due to misconfigured reclaim policies, leading to orphaned resources and wasted storage.
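The mismatch causes listed under “PVC Not Bound” can be checked mechanically when you have a specific PV in mind. A minimal sketch, assuming the PVC and PV `spec` sections are available as Python dicts (for example from `kubectl get pvc <name> -o json`); `why_not_bound` is a hypothetical helper that, like the list above, ignores selectors, `volumeMode`, and node affinity, and it parses only integer quantities with binary suffixes.

```python
from typing import Any, Dict, List

# Binary suffixes only; decimal units such as "G" are not handled in this sketch.
_UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    for suffix, factor in _UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)


def why_not_bound(pvc: Dict[str, Any], pv: Dict[str, Any]) -> List[str]:
    """List the reasons a PVC spec cannot bind to a given PV spec."""
    reasons = []
    if pvc.get("storageClassName", "") != pv.get("storageClassName", ""):
        reasons.append("storageClassName differs")
    missing = set(pvc["accessModes"]) - set(pv["accessModes"])
    if missing:
        reasons.append(f"PV lacks access modes: {sorted(missing)}")
    want = to_bytes(pvc["resources"]["requests"]["storage"])
    have = to_bytes(pv["capacity"]["storage"])
    if have < want:
        reasons.append(f"PV too small: {have} < {want} bytes")
    return reasons


pvc = {"accessModes": ["ReadWriteMany"],
       "resources": {"requests": {"storage": "10Gi"}},
       "storageClassName": "ebs-csi-storageclass"}
pv = {"accessModes": ["ReadWriteOnce"], "capacity": {"storage": "1Gi"}}
for reason in why_not_bound(pvc, pv):
    print(reason)
```

Compare the stored PVC rather than your original manifest: if `storageClassName` was left unset, admission control may have filled in the cluster’s default StorageClass.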

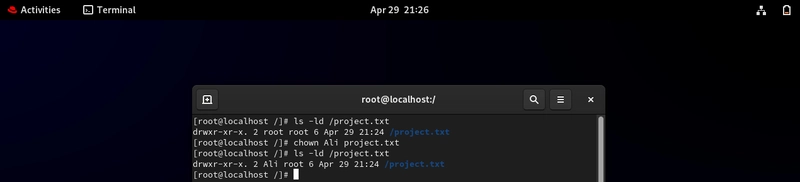

Troubleshooting Kubernetes Storage Issues

Step-by-Step Troubleshooting:

- Check Pod Events and Status
  - Use `kubectl describe pod` to view events and error messages related to volume mounting or PVC binding.

- Inspect PVC and PV Status
  - Run `kubectl get pvc` and `kubectl describe pvc` to check whether the PVC is bound and to see any error messages.
  - Use `kubectl get pv` to examine the status and properties of PersistentVolumes.

- Verify StorageClass and CSI Driver
  - Ensure the correct StorageClass is referenced and the CSI driver is running and up to date (`kubectl get pod -n kube-system | grep csi`).

- Review Node and Network Health
  - Check node status and network connectivity to the storage backend, as network issues can prevent volume attachment.

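- Check for Resource Constraints
  - Ensure nodes have enough resources (CPU, memory, storage) for the Pods that use the requested volumes, and that they have not reached the maximum number of volumes the CSI driver reports it can attach per node.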

- Zone-aware Auto Scaling
  - If your workloads are zone-specific, you’ll need to create separate node groups for each zone. This is because the `cluster-autoscaler` assumes that all nodes in a group are exactly equivalent. For example, if a scale-up event is triggered by a Pod that needs a zone-specific PVC (e.g. an EBS volume), the new node might be launched in the wrong Availability Zone and the Pod will fail to start.

- Logs and Observability
  - Examine logs from the affected pod and CSI driver for detailed error information. Use monitoring tools to track resource usage and events.

- Manual Remediation
  - If a node fails, remove it with `kubectl delete node` to trigger Pod rescheduling and volume reattachment.
  - Deleting and recreating Pods or PVCs can sometimes resolve transient issues. Note that deleting a PVC whose PV uses the `Delete` reclaim policy also deletes the underlying storage and its data.
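The zone problem behind the Zone-aware Auto Scaling item comes from the topology constraint that zonal volumes carry: a dynamically provisioned EBS PV records its zone in `spec.nodeAffinity`, and only nodes whose labels satisfy it can use the volume. The sketch below evaluates that rule for a single node. `node_satisfies_pv` is hypothetical and handles only the `In` operator, and the label key in the example (`topology.kubernetes.io/zone`) is an assumption, since the key your CSI driver writes may differ.

```python
from typing import Any, Dict


def node_satisfies_pv(pv_spec: Dict[str, Any], node_labels: Dict[str, str]) -> bool:
    """Check a node's labels against a PV's required nodeAffinity.

    nodeSelectorTerms are ORed; matchExpressions within a term are ANDed.
    Only the "In" operator is handled; any other operator counts as no match.
    """
    required = pv_spec.get("nodeAffinity", {}).get("required")
    if not required:
        return True  # no topology constraint: any node works
    for term in required.get("nodeSelectorTerms", []):
        exprs = term.get("matchExpressions", [])
        # An empty term matches nothing, so require at least one expression.
        if exprs and all(
            e["operator"] == "In" and node_labels.get(e["key"]) in e["values"]
            for e in exprs
        ):
            return True
    return False


pv_spec = {"nodeAffinity": {"required": {"nodeSelectorTerms": [{
    "matchExpressions": [{"key": "topology.kubernetes.io/zone",
                          "operator": "In", "values": ["us-east-1a"]}]}]}}}
print(node_satisfies_pv(pv_spec, {"topology.kubernetes.io/zone": "us-east-1a"}))  # True
print(node_satisfies_pv(pv_spec, {"topology.kubernetes.io/zone": "us-east-1b"}))  # False
```

A node launched by the autoscaler in `us-east-1b` fails this check, which is why the Pod bound to that PV cannot start there.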

Limitations of Kubernetes Storage

1. Storage Backend Compatibility

- Not all storage solutions support every Kubernetes feature (e.g., ReadWriteMany access mode is not available on many block storage backends).

2. Dynamic Provisioning Constraints

- Dynamic provisioning relies on properly configured StorageClasses and CSI drivers. Misconfiguration or lack of support for certain features can lead to failed provisioning. See also the Zone-aware Auto Scaling item in the Troubleshooting section above.

3. Data Durability and Redundancy

- Kubernetes itself does not provide data replication or backup; this is the responsibility of the storage backend or external tools.

4. Performance Overheads

- Storage performance depends on the underlying infrastructure. Network-attached storage may introduce latency compared to local disks.

5. Scaling and Resource Quotas

- Storage scalability is limited by the backend and resource quotas. Over-provisioning or lack of quotas can lead to resource contention and degraded performance.

6. Security and Access Controls

- Fine-grained access controls for storage resources may be limited, especially when using some legacy or simple backends.

Example: Troubleshooting a PVC Not Bound

```shell
# Check PVC status
kubectl get pvc

# Describe the PVC for detailed events and errors
kubectl describe pvc dynamic-pvc

# Check available PVs and their properties
kubectl get pv

# If needed, check StorageClass and CSI driver status
kubectl get storageclass
kubectl get pod -n kube-system | grep csi
```
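`kubectl get pvc` prints a human-readable table; when scripting across many namespaces, it can be easier to filter the JSON output instead. A minimal sketch, assuming the input is the output of `kubectl get pvc -A -o json`; `pending_claims` is a hypothetical helper, and the sample object is illustrative rather than real cluster output.

```python
import json
from typing import List


def pending_claims(kubectl_json: str) -> List[str]:
    """Return "namespace/name" for every PVC whose status.phase is Pending."""
    items = json.loads(kubectl_json).get("items", [])
    return [
        f'{pvc["metadata"].get("namespace", "default")}/{pvc["metadata"]["name"]}'
        for pvc in items
        if pvc.get("status", {}).get("phase") == "Pending"
    ]


sample = json.dumps({"apiVersion": "v1", "kind": "List", "items": [
    {"metadata": {"name": "dynamic-pvc", "namespace": "default"}, "status": {"phase": "Pending"}},
    {"metadata": {"name": "pvc-example", "namespace": "default"}, "status": {"phase": "Bound"}},
]})
print(pending_claims(sample))  # ['default/dynamic-pvc']
```

Against a live cluster, you could pass it `subprocess.run(["kubectl", "get", "pvc", "-A", "-o", "json"], capture_output=True, text=True).stdout` and then run `kubectl describe pvc` on each result.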

Best Practices

- Always match PVC requests (size, access mode, StorageClass) to available PVs.

- Monitor pod, PVC, and PV events regularly.

- Keep CSI drivers up to date and monitor their health.

- Configure storage quotas and limits to avoid resource exhaustion.

- Choose storage backends that align with your application’s durability, performance, and scalability needs.

When a Kubernetes Pod that uses a PersistentVolumeClaim (PVC) is deleted (whether due to failure, scaling, or an update), the underlying PersistentVolume (PV) and its data are preserved. Here’s how Kubernetes reattaches the volume to a new Pod:

- PVC and PV Binding: The PVC remains bound to the PV as long as the PVC resource exists. The binding is tracked by the `claimRef` field in the PV, which references the PVC.
- Pod Replacement: When a new Pod is created (for example, by a Deployment or StatefulSet) and it references the same PVC in its `.spec.volumes`, Kubernetes schedules the Pod, taking the volume’s topology constraints into account, and ensures the volume is reattached and mounted at the specified path inside the new container.
- Volume Attachment: The attach/detach controller works with the storage backend (via the CSI driver or an in-tree volume plugin) to attach the PV to the node where the new Pod is scheduled, detaching it from the previous node first when the storage allows only one attachment at a time. Once the volume is attached, the kubelet on that node mounts it into the container at the path specified in `volumeMounts`.
- Data Persistence: Because the PV is persistent and not tied to any single Pod, the new Pod sees the same data as the previous Pod. This enables failover and rolling updates without data loss.
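The reattachment sequence can be sketched as bookkeeping for a single-attach disk such as an EBS volume: the disk must be released by the old node before another node can attach it, while Pods on the same node can share it (which `ReadWriteOnce` permits). `AttachmentTracker` and the volume, node, and Pod names are hypothetical; in a cluster the real work is done by the attach/detach controller, `VolumeAttachment` objects, the CSI driver, and the kubelet.

```python
from typing import Dict, Set


class AttachmentTracker:
    """Toy bookkeeping for single-attach disks (hypothetical, not Kubernetes code)."""

    def __init__(self) -> None:
        self.attached_to: Dict[str, str] = {}  # volume -> node
        self.users: Dict[str, Set[str]] = {}   # volume -> Pods using it

    def start_pod(self, pod: str, volume: str, node: str) -> None:
        current = self.attached_to.get(volume)
        if current is not None and current != node:
            # A single-attach disk cannot be on two nodes at once; the new
            # Pod has to wait until the old node releases it.
            raise RuntimeError(f"{volume} still attached to {current}")
        self.attached_to[volume] = node  # attach (no-op if already there)
        self.users.setdefault(volume, set()).add(pod)

    def stop_pod(self, pod: str, volume: str) -> None:
        pods = self.users.get(volume, set())
        pods.discard(pod)
        if not pods:
            # Last user gone: unmount and detach so another node can attach it.
            self.attached_to.pop(volume, None)


t = AttachmentTracker()
t.start_pod("my-pod", "vol-1", "node-a")
t.stop_pod("my-pod", "vol-1")               # old Pod deleted; disk detached
t.start_pod("my-pod-2", "vol-1", "node-b")  # replacement Pod on another node
print(t.attached_to["vol-1"])               # node-b
```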

Final Thoughts

Kubernetes storage is powerful and flexible. By combining VolumeMounts, PersistentVolumeClaims, and StorageClasses, you can decouple your applications from the underlying storage, automate provisioning, and ensure your workloads have the storage performance and reliability they need.
