GitOps and IaC at Scale – AWS, ArgoCD, Terragrunt, and OpenTofu – Part 2 – Creating Spoke environments

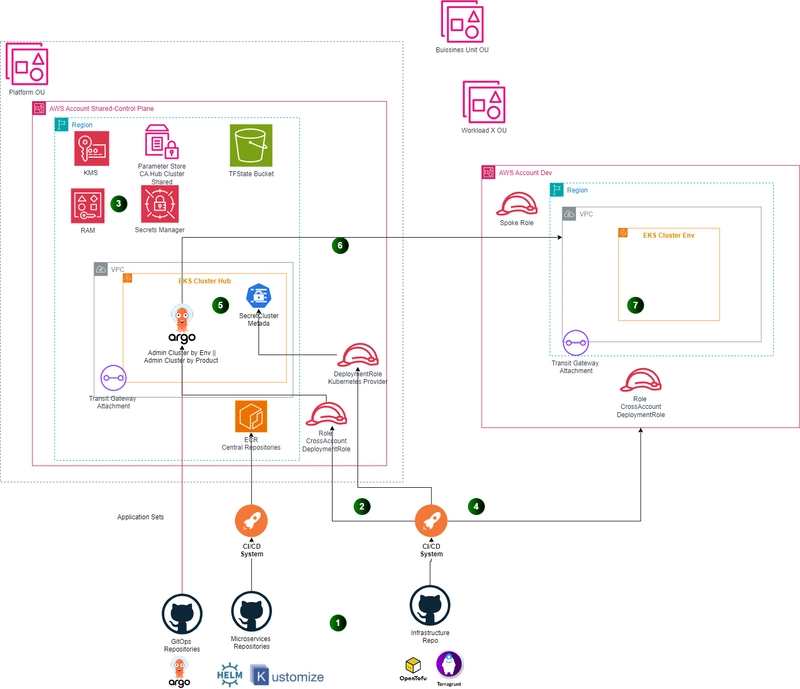


Level 400

Hi clouders, the previous post explored the considerations for deploying GitOps and IaC at scale. This post shows how to deploy spoke clusters with the GitOps Bridge framework, covering specific use cases with AWS enterprise capabilities and security best practices.

Architecture Overview

Spoke clusters and Scenarios

Best practices point to several considerations and scenarios, for example:

You can have one stack or IaC repository with the definitions for each account, team, or squad. It depends on your internal organization and shared responsibility model. For this scenario, suppose a decentralized DevOps operational model in which each squad has its own custom IaC for each project. Keep clear separation and isolation between environments, and match them to your platform strategy. For example, some organizations have a single hub that manages the dev, QA, and prod clusters, others have one hub per environment cluster, and others have one hub for pre-production environments and another for production.

Another key point is capacity planning and how applications are distributed by team. Some organizations share one cluster across an organizational unit and group applications by namespace in each environment, while others prefer one environment and cluster per workload or application. In both scenarios, networking and security are the main pain points and challenges. In this series, the main assumption is that each workload has a dedicated cluster and account per environment, while a cross-cutting platform team manages the cluster configuration and control plane. The following table describes the relationship between scenarios and AWS accounts:

| Scenario | AWS Accounts | Clusters |
|---|---|---|
| Single Hub – N Spokes | 1 for the hub, N accounts by environment | 1 hub cluster, N spoke clusters by environment |
| M Hubs – N Spokes | M accounts by hub environment, N accounts by environment | M hub clusters, N environment clusters |

Figure 1 depicts the architecture for this scenario.

Figure 1. GitOps bridge Deployment architecture in AWS

FinOps practices are a key point: regardless of how your resources are distributed, you must decide on the best strategy for tracking costs and shared resources.

Hands On

First, modify the hub infrastructure to add a stack that manages the cross-account credentials and lets the CI infrastructure agents retrieve them to register each cluster in the control plane. Also, create the IAM role for ArgoCD to enable authentication between the ArgoCD hub and the spoke clusters.

Updating Control Plane Infrastructure

Let's set up the credentials according to the best practices and the scenario described in the previous section. The cluster credentials are stored in Parameter Store and shared with the organizational units of each team or business unit.

You must enable AWS RAM as a trusted service in your organization, from the Organizations management account and the RAM console.
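If you prefer to keep this setting in code rather than in the console, the AWS provider offers a resource for it. This is a minimal sketch, assuming it is applied with credentials for the Organizations management account; the resource label `this` is arbitrary and not part of the original repository.

```hcl
# Enables AWS RAM sharing with AWS Organizations.
# Assumption: applied from the Organizations management account.
resource "aws_ram_sharing_with_organization" "this" {}
```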

For this task, a local module, terraform-aws-ssm-parameter-sotre, was created:

    └── terraform-aws-ssm-parameter-sotre
        ├── README.md
        ├── data.tf
        ├── main.tf
        ├── outputs.tf
        └── variables.tf

The module creates the parameter and shares it with principals identified by organization ID, organizational unit, or account ID.
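The module's source is not shown in this post, so the following is only a sketch of how such a module could be put together. The variable names mirror the stack inputs, while the resource labels, the share name, and the simplified principal handling (account IDs or organization/OU ARNs passed straight through) are assumptions.

```hcl
# Hypothetical main.tf; the real module may differ.
variable "parameter_name" {
  type = string
}

variable "parameter_value" {
  type      = string
  sensitive = true
}

variable "sharing_principals" {
  # Assumption: account IDs, or organization / OU ARNs, as AWS RAM expects.
  type    = list(string)
  default = []
}

# Sharing a SecureString requires a customer managed KMS key.
resource "aws_kms_key" "this" {
  description = "Key for the shared control plane parameter"
}

# Only Advanced tier parameters can be shared through AWS RAM.
resource "aws_ssm_parameter" "this" {
  name   = var.parameter_name
  type   = "SecureString"
  tier   = "Advanced"
  key_id = aws_kms_key.this.arn
  value  = var.parameter_value
}

resource "aws_ram_resource_share" "this" {
  name                      = "control-plane-credentials" # hypothetical name
  allow_external_principals = false
}

resource "aws_ram_resource_association" "this" {
  resource_arn       = aws_ssm_parameter.this.arn
  resource_share_arn = aws_ram_resource_share.this.arn
}

resource "aws_ram_principal_association" "this" {
  for_each           = toset(var.sharing_principals)
  principal          = each.value
  resource_share_arn = aws_ram_resource_share.this.arn
}
```

With this shape, the KMS key policy would also need to let the consuming accounts decrypt, since RAM shares the parameter but not the key.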

Now the module is called from Terragrunt to create a new stack, or Terragrunt unit.

    #parameter_store-terragrunt.hcl
    include "root" {
      path   = find_in_parent_folders("root.hcl")
      expose = true
    }

    dependency "eks" {
      config_path = "${get_parent_terragrunt_dir("root")}/infrastructure/containers/eks_control_plane"
      mock_outputs = {
        cluster_name                       = "dummy-cluster-name"
        cluster_endpoint                   = "dummy_cluster_endpoint"
        cluster_certificate_authority_data = "dummy_cluster_certificate_authority_data"
        cluster_version                    = "1.31"
        cluster_platform_version           = "1.31"
        oidc_provider_arn                  = "dummy_arn"
        cluster_arn                        = "arn:aws:eks:us-east-2:105171185823:cluster/gitops-scale-dev-hub"
      }
      mock_outputs_merge_strategy_with_state = "shallow"
    }

    locals {
      # Define parameters for each workspace
      env = {
        default = {
          parameter_name     = "/control_plane/${include.root.locals.environment.locals.workspace}/credentials"
          sharing_principals = ["ou-w3ow-k24p2opx"]
          tags = {
            Environment = "control-plane"
            Layer       = "Operations"
          }
        }
        "dev" = {
          create = true
        }
        "prod" = {
          create = true
        }
      }
      # Merge parameters
      environment_vars = contains(keys(local.env), include.root.locals.environment.locals.workspace) ? include.root.locals.environment.locals.workspace : "default"
      workspace        = merge(local.env["default"], local.env[local.environment_vars])
    }

    terraform {
      source = "../../../modules/terraform-aws-ssm-parameter-sotre"
    }

    inputs = {
      parameter_name        = "${local.workspace["parameter_name"]}"
      parameter_description = "Control plane credentials"
      parameter_type        = "SecureString"
      parameter_tier        = "Advanced"
      create_kms            = true
      enable_sharing        = true
      sharing_principals    = local.workspace["sharing_principals"]
      parameter_value = jsonencode({
        cluster_name                       = dependency.eks.outputs.cluster_name,
        cluster_endpoint                   = dependency.eks.outputs.cluster_endpoint,
        cluster_certificate_authority_data = dependency.eks.outputs.cluster_certificate_authority_data,
        cluster_version                    = dependency.eks.outputs.cluster_version,
        cluster_platform_version           = dependency.eks.outputs.cluster_platform_version,
        oidc_provider_arn                  = dependency.eks.outputs.oidc_provider_arn,
        hub_account_id                     = split(":", dependency.eks.outputs.cluster_arn)[4]
      })
      tags = local.workspace["tags"]
    }

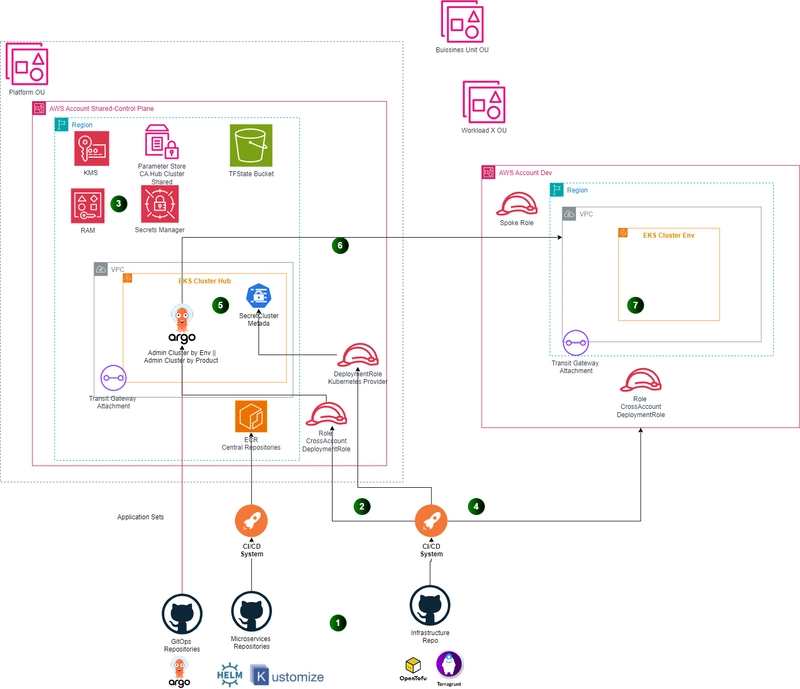

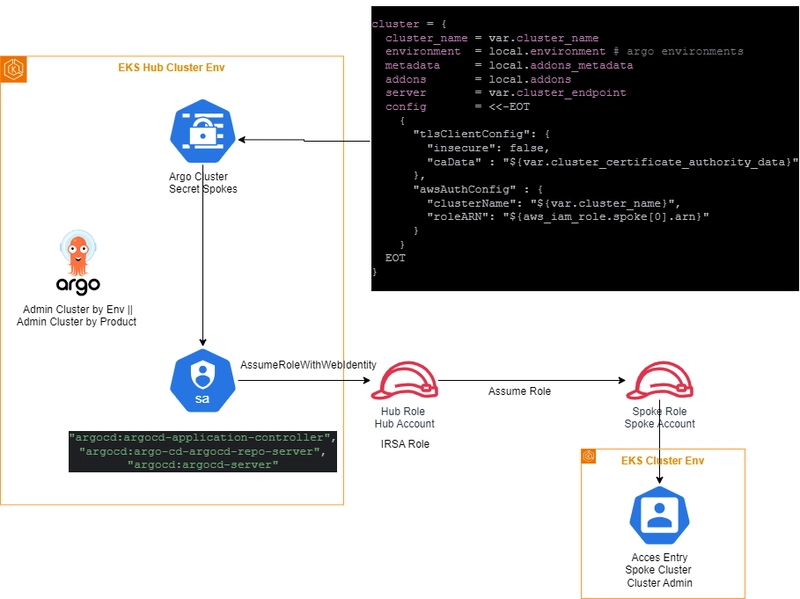
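On the consuming side, a spoke stack could read the shared parameter and decode the JSON payload. The sketch below is an assumption about how that might look: the account ID is a placeholder, and it relies on the data source passing the ARN through to the GetParameter API, which accepts either a name or an ARN.

```hcl
# Assumption: placeholder account ID and region; replace with the hub's values.
data "aws_ssm_parameter" "hub_credentials" {
  # A shared parameter is referenced by its full ARN from the consuming account.
  name = "arn:aws:ssm:us-east-2:111111111111:parameter/control_plane/dev/credentials"
}

locals {
  # The value was stored with jsonencode(), so jsondecode() restores the object.
  hub = jsondecode(data.aws_ssm_parameter.hub_credentials.value)
}

output "hub_cluster_name" {
  # The decoded object inherits the sensitive marking of the SecureString.
  value     = local.hub.cluster_name
  sensitive = true
}
```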

Another stack is needed: the IAM role that lets the ArgoCD service accounts use IAM authentication with the spoke clusters. The terraform-aws-iam/iam-eks-role module creates the IRSA role, but a custom policy is also required so that the role can assume roles in the spoke accounts. Figure 2 depicts this setup in depth.

You can create a simple stack to manage the role, or a module that supports both the EKS definition and the IAM role.

Figure 2. GitOps authentication summary.

The module lives in modules/terraform-aws-irsa-eks-hub:

    modules/
    ├── terraform-aws-irsa-eks-hub
    │   ├── README.md
    │   ├── main.tf
    │   ├── outputs.tf
    │   └── variables.tf
    └── terraform-aws-ssm-parameter-sotre
        ├── README.md
        ├── data.tf
        ├── main.tf
        ├── outputs.tf
        └── variables.tf

    3 directories, 9 files
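The contents of terraform-aws-irsa-eks-hub are not listed in this post. As a rough sketch under stated assumptions, it could wrap the iam-eks-role submodule and attach a policy that allows assuming a role in the spoke accounts. The `spoke_role_name` variable, its default, and the policy name are hypothetical; the `iam_role_arn` output matches what the gitops_bridge stack consumes.

```hcl
# Hypothetical main.tf; the real module may differ.
variable "role_name" {
  type = string
}

variable "cluster_service_accounts" {
  type = map(list(string))
}

variable "spoke_role_name" {
  # Assumption: every spoke account exposes a role with this name for ArgoCD.
  type    = string
  default = "argocd-spoke-access"
}

variable "tags" {
  type    = map(string)
  default = {}
}

# Custom policy: let the hub role assume the spoke role in any account.
data "aws_iam_policy_document" "assume_spoke" {
  statement {
    effect    = "Allow"
    actions   = ["sts:AssumeRole", "sts:TagSession"]
    resources = ["arn:aws:iam::*:role/${var.spoke_role_name}"]
  }
}

resource "aws_iam_policy" "assume_spoke" {
  name   = "${var.role_name}-assume-spoke"
  policy = data.aws_iam_policy_document.assume_spoke.json
}

module "irsa" {
  source  = "terraform-aws-modules/iam/aws//modules/iam-eks-role"
  version = "~> 5.0"

  role_name                = var.role_name
  cluster_service_accounts = var.cluster_service_accounts
  role_policy_arns = {
    assume_spoke = aws_iam_policy.assume_spoke.arn
  }
  tags = var.tags
}

output "iam_role_arn" {
  value = module.irsa.iam_role_arn
}
```

Restricting `resources` to the spoke account IDs you actually manage, instead of the `*` wildcard, would be a tighter choice in a real environment.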

The stack that calls the terraform-aws-irsa-eks-hub module is:

    #eks_role-terragrunt.hcl
    include "root" {
      path   = find_in_parent_folders("root.hcl")
      expose = true
    }

    dependency "eks" {
      config_path = "${get_parent_terragrunt_dir("root")}/infrastructure/containers/eks_control_plane"
      mock_outputs = {
        cluster_name                       = "dummy-cluster-name"
        cluster_endpoint                   = "dummy_cluster_endpoint"
        cluster_certificate_authority_data = "dummy_cluster_certificate_authority_data"
        cluster_version                    = "1.31"
        cluster_platform_version           = "1.31"
        oidc_provider_arn                  = "dummy_arn"
      }
      mock_outputs_merge_strategy_with_state = "shallow"
    }

    locals {
      # Define parameters for each workspace
      env = {
        default = {
          environment = "control-plane"
          role_name   = "eks-role-hub"
          tags = {
            Environment = "control-plane"
            Layer       = "Networking"
          }
        }
        "dev" = {
          create = true
        }
        "prod" = {
          create = true
        }
      }
      # Merge parameters
      environment_vars = contains(keys(local.env), include.root.locals.environment.locals.workspace) ? include.root.locals.environment.locals.workspace : "default"
      workspace        = merge(local.env["default"], local.env[local.environment_vars])
    }

    terraform {
      source = "../../../modules/terraform-aws-irsa-eks-hub"
    }

    inputs = {
      role_name = "${local.workspace["role_name"]}-${local.workspace["environment"]}"
      cluster_service_accounts = {
        "${dependency.eks.outputs.cluster_name}" = [
          "argocd:argocd-application-controller",
          "argocd:argo-cd-argocd-repo-server",
          "argocd:argocd-server",
        ]
      }
      tags = local.workspace["tags"]
    }
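The `locals` block repeated in these stacks follows one pattern: pick the entry for the current workspace, fall back to `default` when there is none, and merge it over the defaults. The standalone sketch below isolates that logic so it can be tried with `tofu console` or `tofu apply`. The `workspace_name` local is a hypothetical stand-in for `include.root.locals.environment.locals.workspace`.

```hcl
locals {
  # Assumption: stands in for include.root.locals.environment.locals.workspace.
  workspace_name = "dev"

  env = {
    default = {
      create    = false
      role_name = "eks-role-hub"
    }
    dev = {
      create = true
    }
  }

  # Use the workspace entry when it exists, otherwise fall back to "default".
  environment_vars = contains(keys(local.env), local.workspace_name) ? local.workspace_name : "default"

  # Later arguments win, so workspace-specific values override the defaults.
  workspace = merge(local.env["default"], local.env[local.environment_vars])
}

output "workspace" {
  value = local.workspace
}
```

With `workspace_name = "dev"`, the merged object keeps `role_name` from the defaults and takes `create = true` from the `dev` entry. A workspace without an entry, such as a hypothetical `qa`, resolves to the defaults unchanged.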

Finally, the gitops_bridge stack must look like this:

    #gitops_bridge-terragrunt.hcl

    include "root" {
      path   = find_in_parent_folders("root.hcl")
      expose = true
    }

    include "k8s_helm_provider" {
      path = find_in_parent_folders("/common/additional_providers/provider_k8s_helm.hcl")
    }

    dependency "eks" {
      config_path = "${get_parent_terragrunt_dir("root")}/infrastructure/containers/eks_control_plane"
      mock_outputs = {
        cluster_name                       = "dummy-cluster-name"
        cluster_endpoint                   = "dummy_cluster_endpoint"
        cluster_certificate_authority_data = "dummy_cluster_certificate_authority_data"
        cluster_version                    = "1.31"
        cluster_platform_version           = "1.31"
        oidc_provider_arn                  = "dummy_arn"
      }
      mock_outputs_merge_strategy_with_state = "shallow"
    }

    dependency "eks_role" {
      config_path = "${get_parent_terragrunt_dir("root")}/infrastructure/iam/eks_role"
      mock_outputs = {
        iam_role_arn = "arn::..."
      }
      mock_outputs_merge_strategy_with_state = "shallow"
    }

    locals {
      # Define parameters for each workspace
      env = {
        default = {
          environment = "control-plane"
          oss_addons = {
            enable_argo_workflows = true
            #enable_foo = true
            # you can add any addon here, make sure to update the gitops repo with the corresponding application set
          }
          addons_metadata = merge(
            {
              addons_repo_url      = "https://github.com/gitops-bridge-dev/gitops-bridge-argocd-control-plane-template"
              addons_repo_basepath = ""
              addons_repo_path     = "bootstrap/control-plane/addons"
              addons_repo_revision = "HEAD"
            }
          )
          argocd_apps = {
            addons = file("./bootstrap/addons.yaml")
            #workloads = file("./bootstrap/workloads.yaml")
          }
          tags = {
            Environment = "control-plane"
            Layer       = "Networking"
          }
        }
        "dev" = {
          create = true
        }
        "prod" = {
          create = true
        }
      }
      # Merge parameters
      environment_vars = contains(keys(local.env), include.root.locals.environment.locals.workspace) ? include.root.locals.environment.locals.workspace : "default"
      workspace        = merge(local.env["default"], local.env[local.environment_vars])
    }

    terraform {
      source = "tfr:///gitops-bridge-dev/gitops-bridge/helm?version=0.1.0"
    }

    inputs = {
      cluster_name                       = dependency.eks.outputs.cluster_name
      cluster_endpoint                   = dependency.eks.outputs.cluster_endpoint
      cluster_platform_version           = dependency.eks.outputs.cluster_platform_version
      oidc_provider_arn                  = dependency.eks.outputs.oidc_provider_arn
      cluster_certificate_authority_data = dependency.eks.outputs.cluster_certificate_authority_data
      cluster = {
        cluster_name = dependency.eks.outputs.cluster_name
        environment  = local.workspace["environment"]
        metadata     = local.workspace["addons_metadata"]
        addons       = merge(local.workspace["oss_addons"], { kubernetes_version = dependency.eks.outputs.cluster_version })
      }
      apps = local.workspace["argocd_apps"]
      argocd = {
        namespace = "argocd"
        #set = [
        #  {
        #    name  = "server.service.type"
        #    value = "LoadBalancer"
        #  }
        #]
        values = [
          yamlencode(
            {
              configs = {
                params = {
                  "server.insecure" = true
                }
              }
              server = {
                "serviceAccount" = {
                  annotations = {
                    "eks.amazonaws.com/role-arn" = dependency.eks_role.outputs.iam_role_arn
                  }
                }
                service = {
                  type = "NodePort"
                }
                ingress = {
                  enabled          = false
                  controller       = "aws"
                  ingressClassName = "alb"
                  aws = {
                    serviceType = "NodePort"
                  }
                  annotations = {
                    #"alb.ingress.kubernetes.io/backend-protocol" = "HTTPS"
                    #"alb.ingress.kubernetes.io/ssl-redirect" = "443"
                    #"service.beta.kubernetes.io/aws-load-balancer-type" = "external"
                    #"service.beta.kubernetes.io/aws-load-balancer-nlb-target-type" = "ip"
                    #"alb.ingress.kubernetes.io/listen-ports" : "[{\"HTTPS\":443}]"
                  }
                }
              }
              controller = {
                "serviceAccount" = {
                  annotations = {
                    "eks.amazonaws.com/role-arn" = dependency.eks_role.outputs.iam_role_arn
                  }
                }
              }
              repoServer = {
                "serviceAccount" = {
                  annotations = {
                    "eks.amazonaws.com/role-arn" = dependency.eks_role.outputs.iam_role_arn
                  }
                }
              }
            }
          )
        ]
      }
      tags = local.workspace["tags"]
    }

The main change is the new argocd map, which sets the Helm chart values so that the ArgoCD service accounts use the IRSA role.
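To show where the IRSA role comes into play, here is a sketch of an ArgoCD declarative cluster secret for a spoke, written with the Kubernetes provider. ArgoCD's cluster secret format supports an `awsAuthConfig` block with `clusterName` and `roleARN`. Every value below (names, endpoint, account ID, role, CA data) is a placeholder assumption, and in this series the secret may well be created by the GitOps Bridge module rather than by hand.

```hcl
# Hypothetical spoke registration; all values are placeholders.
resource "kubernetes_secret_v1" "spoke_cluster" {
  metadata {
    name      = "spoke-dev"
    namespace = "argocd"
    labels = {
      # Marks the secret as an ArgoCD cluster definition.
      "argocd.argoproj.io/secret-type" = "cluster"
    }
  }

  data = {
    name   = "spoke-dev"
    server = "https://EXAMPLE.gr7.us-east-2.eks.amazonaws.com"
    config = jsonencode({
      awsAuthConfig = {
        clusterName = "spoke-dev"
        # Role in the spoke account that the hub IRSA role is allowed to assume.
        roleARN = "arn:aws:iam::222222222222:role/argocd-spoke-access"
      }
      tlsClientConfig = {
        insecure = false
        caData   = "BASE64_ENCODED_CA_CERTIFICATE"
      }
    })
  }
}
```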

In a cross-account deployment, the profile that creates the secrets in the hub cluster must have the required access and permissions. For example, in the repository the eks_control_plane stack introduces a new access entry:

    #eks_control_plane-terragrunt.hcl
    include "root" {
      path   = find_in_parent_folders("root.hcl")
      expose = true
    }

    dependency "vpc" {
      config_path = "${get_parent_terragrunt_dir("root")}/infrastructure/network/vpc"
      mock_outputs = {
        vpc_id = "vpc-04e3e1e302f8c8f06"
        public_subnets = [
          "subnet-0e4c5aedfc2101502",
          "subnet-0d5061f70b69eda14",
        ]
        private_subnets = [
          "subnet-0e4c5aedfc2101502",
          "subnet-0d5061f70b69eda14",
          "subnet-0d5061f70b69eda15",
        ]
      }
      mock_outputs_merge_strategy_with_state = "shallow"
    }

    locals {
      # Define parameters for each workspace
      env = {
        default = {
          create          = false
          cluster_name    = "${include.root.locals.common_vars.locals.project}-${include.root.locals.environment.locals.workspace}-hub"
          cluster_version = "1.32"

          # Optional
          cluster_endpoint_public_access = true

          # Optional: Adds the current caller identity as an administrator via cluster access entry
          enable_cluster_creator_admin_permissions = true
          access_entries = {
            ##################################################################################
            # Admin installation and setup for spoke accounts.
            # Demo purposes only; this should be the CI agent role.
            ##################################################################################
            admins_sso = {
              kubernetes_groups = []
              # IAM roles use the iam service prefix; only the assumed-role session ARN uses sts
              principal_arn = "arn:aws:iam::123456781234:role/aws-reserved/sso.amazonaws.com/us-east-2/AWSReservedSSO_AWSAdministratorAccess_877fe9e4127a368d"
              user_name     = "arn:aws:sts::123456781234:assumed-role/AWSReservedSSO_AWSAdministratorAccess_877fe9e4127a368d/{{SessionName}}"
              policy_associations = {
                single = {
                  policy_arn = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"
                  access_scope = {
                    type = "cluster"
                  }
                }
              }
            }
          }
          cluster_compute_config = {
            enabled    = true
            node_pools = ["general-purpose"]
          }
          tags = {
            Environment = "control-plane"
            Layer       = "Networking"
          }
        }
        "dev" = {
          create = true
        }
        "prod" = {
          create = true
        }
      }
      # Merge parameters
      environment_vars = contains(keys(local.env), include.root.locals.environment.locals.workspace) ? include.root.locals.environment.locals.workspace : "default"
      workspace        = merge(local.env["default"], local.env[local.environment_vars])
    }

    terraform {
      source = "tfr:///terraform-aws-modules/eks/aws?version=20.33.1"
    }

    inputs = {
      create          = local.workspace["create"]
      cluster_name    = local.workspace["cluster_name"]
      cluster_version = local.workspace["cluster_version"]

      # Optional
      cluster_endpoint_public_access = local.workspace["cluster_endpoint_public_access"]

      # Optional: Adds the current caller identity as an administrator via cluster access entry
      enable_cluster_creator_admin_permissions = local.workspace["enable_cluster_creator_admin_permissions"]
      cluster_compute_config                   = local.workspace["cluster_compute_config"]
      vpc_id                                   = dependency.vpc.outputs.vpc_id
      subnet_ids                               = dependency.vpc.outputs.private_subnets
      access_entries                           = local.workspace["access_entries"]

      # A single tags attribute (HCL rejects duplicates); workspace tags take precedence
      tags = merge(
        {
          Environment = include.root.locals.environment.locals.workspace
          Terraform   = "true"
        },
        local.workspace["tags"]
      )
    }

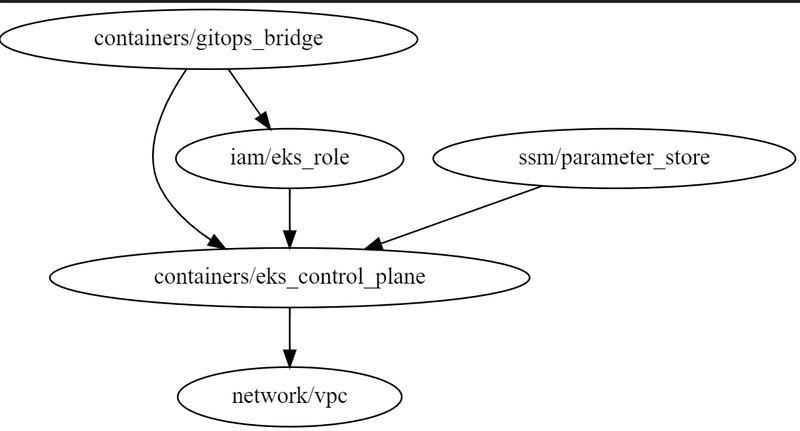

The final Hub infrastructure is:

Figure 3. Control Plane Infrastructure.
