Fabric & Databricks Interoperability (3): Using Fabric Tables in Databricks for Viewing, Analyzing, and Editing

Introduction

Can tables created in Fabric be seamlessly referenced and edited in Databricks?

Many people may have this question.

This article focuses on the following use case:

- Using tables created in Fabric within Databricks.

For details on the prerequisite settings, please refer to the previous article.

This article is part of a four-part series:

- Overview and Purpose of Interoperability

- Detailed Configuration of Hub Storage

- Using tables created in Fabric within Databricks (this article)

- Using tables created in Databricks within Fabric

Linking Tables Created in Fabric to Databricks

Creating a New Table in Fabric

Upload a CSV file to the Fabric Lakehouse.

:::note info

The CSV file used in this article is sales.csv from the following Microsoft documentation:

Create a Microsoft Fabric Lakehouse

:::

Open the menu for the CSV file and select [Load to Table] > [New Table].

Specify ext as the schema. ext is a shortcut that points to the ext folder in the hub storage, so the table's files are written there.

Verifying the Created Table

You can confirm that a new table has been created in the Lakehouse.

A create_from_fabric_sales folder is created in the ext folder of the hub storage.

(This means that the newly created table physically exists in the hub storage.)

You can also confirm that the table is stored in Delta format: the folder contains a _delta_log directory alongside the Parquet data files.

At this point, as expected, the table created in Fabric is not yet visible in Databricks.

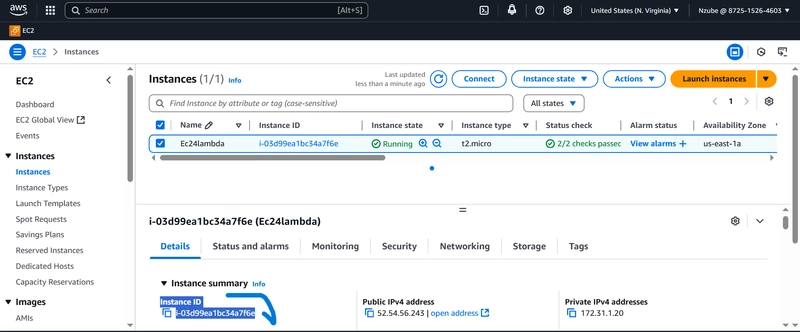

Enabling Databricks to Access Fabric Tables

Use the Databricks SQL Editor to create an external table.

In the LOCATION clause, specify the path of the table's folder in the hub storage (the folder Fabric created for the table).

```sql
-- Register the Fabric-created Delta folder in the hub storage as an external table
CREATE TABLE <table_name>
USING DELTA
LOCATION 'abfss://@.dfs.core.windows.net/folder_name/';
```

Then, you can view tables created in Fabric from the [Catalog].
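To check the registration from SQL as well, the standard Databricks `DESCRIBE` commands can be run against the new table. This is a sketch that assumes the external table was named `create_from_fabric_sales` (the name the UPDATE statement later in this article uses). In the `DESCRIBE DETAIL` output, `format` should be `delta` and `location` should match the hub storage path given in LOCATION.

```sql
-- Delta metadata for the table: format, location, file count, size, and so on
DESCRIBE DETAIL create_from_fabric_sales;

-- Columns plus detailed table information; the "Type" row should read EXTERNAL
DESCRIBE TABLE EXTENDED create_from_fabric_sales;
```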

Viewing and Analyzing Tables Created in Fabric with Databricks (BI Creation)

From the [Dashboard] in Databricks, you can create a new dashboard and select an external table (i.e., a table created in Fabric) from [Data] > [Select Table].

Thus, it is possible to analyze tables created in Fabric using Databricks.

Editing Tables Created in Fabric with Databricks (DML)

Next, run an UPDATE statement (DML) against the external table from the Databricks SQL Editor.

```sql
-- Rename one item in the Fabric-created table through the Databricks external table
UPDATE create_from_fabric_sales
SET Item = 'No.1 Item'
WHERE Item = 'Road-150 Red, 48';
```

As expected, the change is reflected on the Databricks side.
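If you prefer to verify the edit with queries rather than by browsing the data, a sketch like the following can be used, assuming the external table is named `create_from_fabric_sales` as in the UPDATE statement. `DESCRIBE HISTORY` lists the commits recorded in the table's Delta transaction log, newest first, so the top row should show `UPDATE` in its `operation` column.

```sql
-- Rows that now carry the new name (greater than zero if the old name existed)
SELECT COUNT(*) AS renamed_rows
FROM create_from_fabric_sales
WHERE Item = 'No.1 Item';

-- Rows that still carry the old name (should now be zero)
SELECT COUNT(*) AS remaining_rows
FROM create_from_fabric_sales
WHERE Item = 'Road-150 Red, 48';

-- Most recent commits first; the top row should have operation = 'UPDATE'
DESCRIBE HISTORY create_from_fabric_sales LIMIT 5;
```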

Although the edit was made from Databricks, it is also reflected on the Fabric side. Querying the table from the Fabric Lakehouse shows the updated data:

```sql
-- Revenue per item, queried from the Lakehouse in Fabric
SELECT Item, SUM(Quantity * UnitPrice) AS Revenue
FROM Fabric_Lakehouse.ext.create_from_fabric_sales
GROUP BY Item
ORDER BY Revenue DESC;
```
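The edit shows up in Fabric because both engines read the same Delta folder in the hub storage, including its `_delta_log` transaction log, rather than separate copies of the data. As a sketch, assuming you run it as a Spark SQL cell in a Fabric notebook attached to this Lakehouse, the table history seen from Fabric should include the UPDATE committed from Databricks.

```sql
-- Spark SQL cell in a Fabric notebook; newest commits are listed first
DESCRIBE HISTORY Fabric_Lakehouse.ext.create_from_fabric_sales LIMIT 5;
```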

Tables created in Fabric can therefore also be edited from Databricks using DML statements.

Conclusion

From the above, we have confirmed that

"Tables created in Fabric can be used in Databricks."

Once the hub storage is set up, it is relatively easy to achieve interoperability between Fabric and Databricks.

In the next article, we will introduce the reverse case:

"Using tables created in Databricks in Fabric."

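▽ Next article

Fabric & Databricks Interoperability (4): Using Tables Created in Databricks in Fabric (Viewing, Analyzing, and Editing in Fabric) (Qiita)

▽ Previous article

Fabric & Databricks Interoperability (2): How to Configure Hub Storage: Using Tables Created in Databricks in Fabric and Tables Created in Fabric in Databricks (Qiita)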