Everything about AI Function Calling (MCP), the keyword for Agentic AI


Summary

While building an Agentic AI framework specialized in AI function calling, we learned a lot about how function calling behaves in practice. This article describes everything we discovered during that framework development.

- GitHub Repository: https://github.com/wrtnlabs/agentica

- Homepage: https://wrtnlabs.io/agentica

Main story of this article:

- In 2023, when OpenAI announced AI function calling, many people predicted it would conquer the world. However, function calling couldn't deliver on this promise and was overshadowed by agent workflow approaches.

- We identified the reason: a lack of understanding about JSON schema and insufficient compiler-level support. Agent frameworks should strengthen fundamental computer science principles rather than focusing on fancy techniques.

- OpenAPI, which is much older and more widely adopted than MCP, can accomplish the same goals when a library developer has strong JSON schema understanding and compiler skills.

- Function calling can improve performance while reducing token costs by using our orchestration strategy, even with small models like gpt-4o-mini (8b).

- Let's make function calling great again with Agentica.

1. Preface

In 2023, OpenAI announced AI Function Calling.

At that time, many AI researchers predicted it would revolutionize the industry. Even in my own network, numerous developers were rushing to build new AI applications centered around function calling.

> The application market will be reorganized around AI chatbots.
>
> Developers will only create or gather API functions, and AI will automatically call them. There will be no need to create complex frontend/client applications anymore.
>
> Do everything with chat.

But what about today? Has function calling conquered the world? No. Instead of function calling, agent workflow-driven development has become dominant in the AI development ecosystem. LangChain is perhaps the most representative framework in this workflow ecosystem.

Currently, many AI developers claim that AI function calling is challenging to implement, consumes too many tokens, and causes frequent hallucinations. They argue that for function calling to gain widespread adoption, AI models must become much cheaper, larger, and smarter.

However, we have a different perspective. Today's LLMs are already capable enough, including smaller 8b-sized models that can run on a personal laptop. The reason function calling hasn't gained widespread adoption is that AI framework developers haven't been able to build function schemas accurately and effectively.

They haven't focused enough on computer science fundamentals, and have relied too heavily on flashy techniques instead.

2. Concepts

2.1. AI Function Calling

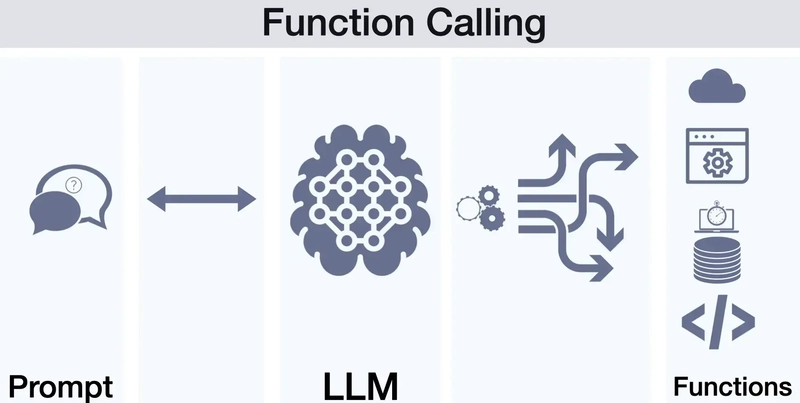

Function calling enables AI models to interact with external systems by identifying when a function should be called and generating the appropriate JSON to call that function.

Instead of generating free-form text, the model recognizes when to invoke specific functions based on user requests and provides structured data for those function calls.
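To make this flow concrete, here is a minimal sketch in TypeScript. The `FunctionCall` shape, the `functions` registry, and the hard-coded model output are assumptions for illustration; real vendor SDKs wrap the same idea in their own message types.

```typescript
// Shape of a function call emitted by the model: a function name plus
// JSON-encoded arguments (hypothetical, simplified from vendor formats).
interface FunctionCall {
  name: string;
  arguments: string; // JSON text produced by the model
}

// Functions the application exposes to the model.
const functions: Record<string, (args: any) => unknown> = {
  divide: (args: { x: number; y: number }) => args.x / args.y,
};

// Parse the model's JSON and dispatch to the matching function.
function execute(call: FunctionCall): unknown {
  const fn = functions[call.name];
  if (fn === undefined) throw new Error(`Unknown function: ${call.name}`);
  return fn(JSON.parse(call.arguments));
}

// Pretend the model answered "What is 10 divided by 4?" with this call.
const modelOutput: FunctionCall = {
  name: "divide",
  arguments: '{"x": 10, "y": 4}',
};
const quotient = execute(modelOutput);
console.log(quotient);
```

The model never runs code itself. It only produces the name and the JSON arguments, and the application decides whether and how to execute them.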

2.2. Model Context Protocol

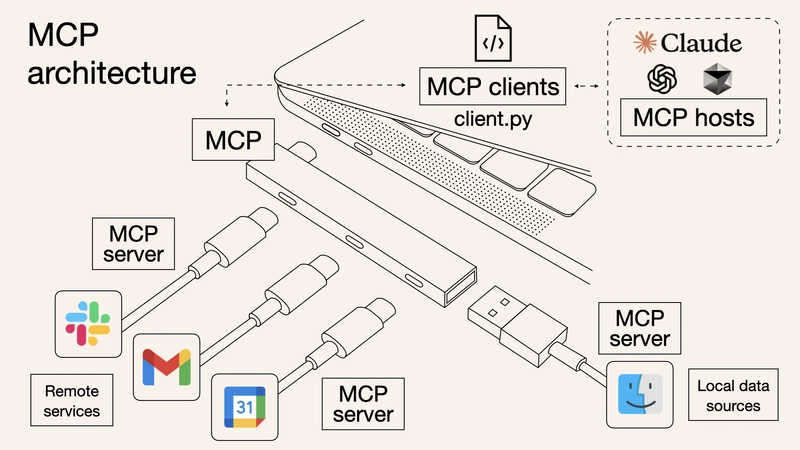

The Model Context Protocol is an open standard designed to facilitate secure, two-way connections between AI tools and external data sources. It aims to streamline the integration of AI assistants with various tools and data, allowing for more dynamic interactions.

MCP standardizes how AI applications, such as chatbots, interact with external systems, enabling them to access and utilize data more effectively.

It allows for flexible and scalable integrations, making it easier for developers to build applications that can adapt to different data sources and tools.
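As a rough sketch of what that standardization looks like on the wire: MCP messages are JSON-RPC 2.0, a server describes each tool with a name, a description, and an `inputSchema`, and a client invokes it through the `tools/call` method. The `divideTool` definition and the `callTool` helper below are hypothetical names used only for illustration.

```typescript
// A tool as an MCP server would describe it in a `tools/list` response.
interface McpTool {
  name: string;
  description?: string;
  inputSchema: object; // JSON schema of the tool arguments
}

// JSON-RPC 2.0 request envelope used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: object;
}

// Hypothetical tool, used only for illustration.
const divideTool: McpTool = {
  name: "divide",
  description: "Calculate x / y",
  inputSchema: {
    type: "object",
    properties: { x: { type: "number" }, y: { type: "number" } },
    required: ["x", "y"],
  },
};

// Build the `tools/call` request a client sends to invoke a tool.
function callTool(id: number, tool: McpTool, args: object): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: tool.name, arguments: args },
  };
}

const mcpRequest = callTool(1, divideTool, { x: 10, y: 4 });
console.log(JSON.stringify(mcpRequest));
```

Note that the tool's `inputSchema` is itself a JSON schema, so the schema issues discussed in this article apply to MCP tools as well.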

2.3. Agent Workflow

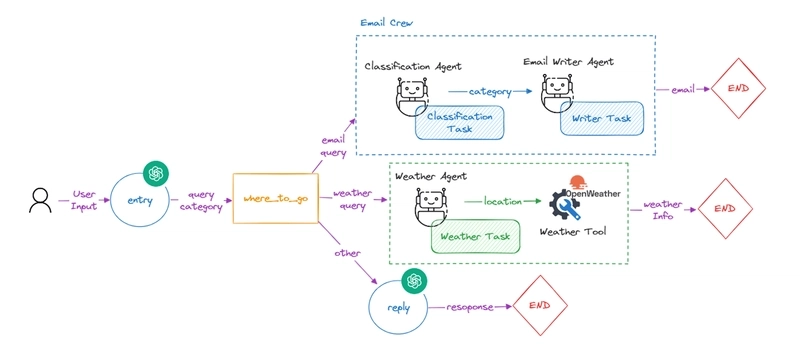

An Agent Workflow is a structured approach to AI agent operation where multiple specialized AI agents work collaboratively to accomplish complex tasks. Each agent in the workflow has specific responsibilities and expertise, creating a pipeline of processes that handle different aspects of a larger task.

Note that agent workflows are primarily designed for specific-purpose applications rather than for creating general-purpose agents. They excel at solving well-defined problems with clear boundaries, where the task can be decomposed into specific steps requiring different expertise. They're not typically intended to create a single "do-everything" agent.
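A workflow of this kind can be sketched as a fixed pipeline. The agent names below are hypothetical, and plain string functions stand in for what would be asynchronous LLM calls in a real system.

```typescript
// Each "agent" is a specialized step; these stubs stand in for LLM calls.
type Agent = (input: string) => string;

const researcher: Agent = (topic) => `notes on ${topic}`;
const writer: Agent = (notes) => `draft based on ${notes}`;
const reviewer: Agent = (draft) => `reviewed ${draft}`;

// The order of steps is decided by the developer in advance, not by the model.
function runWorkflow(agents: Agent[], input: string): string {
  let output = input;
  for (const agent of agents) output = agent(output);
  return output;
}

const workflowResult = runWorkflow(
  [researcher, writer, reviewer],
  "function calling",
);
console.log(workflowResult);
```

Because the sequence is hard-coded, the pipeline handles exactly the task it was designed for. Supporting a new kind of request means designing a new graph of steps.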

Workflow-driven development is the current trend in AI development, but we believe Agentic AI cannot be achieved with this method. It is an alternative that emerged because function calling did not work as well as expected.

3. Function Schema

3.1. JSON Schema Specification

    {
      name: "divide",
      arguments: {
        x: {
          type: "number",
        },
        y: {
          anyOf: [ // not supported in Gemini
            {
              type: "number",
              exclusiveMaximum: 0, // not supported in OpenAI and Gemini
            },
            {
              type: "number",
              exclusiveMinimum: 0, // not supported in OpenAI and Gemini
            },
          ],
        },
      },
      description: "Calculate x / y",
    }

You might have seen reports like this in AI communities:

> I made a function schema working on OpenAI GPT, but it doesn't work on Google Gemini. Also, some of my function schemas work properly on Claude, but OpenAI throws an exception saying it's an invalid schema.

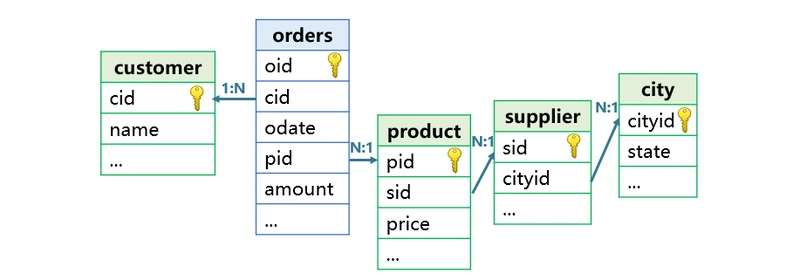

In reality, JSON schema specifications differ between AI vendors, and some don't fully support the standard JSON schema specification. For example, OpenAI doesn't support constraint keywords such as minimum or format: uuid, while Gemini doesn't even support structural keywords such as $ref and anyOf.

In other words, OpenAI and Gemini each define a custom JSON schema specification that differs from the standard, while Claude fully supports the JSON Schema 2020-12 draft. Other models such as DeepSeek and Llama don't restrict the JSON schema spec, so standard JSON schema can be used with them as-is.
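One possible way to cope with these differences is to downgrade a standard schema before sending it to a restrictive vendor. The sketch below removes constraint keywords and records them in the description so the model can still read them as text. The `UNSUPPORTED` list and the `downgrade` function are assumptions for illustration, not any vendor's or library's actual rules.

```typescript
// Minimal JSON schema shape for this sketch (not a full specification).
interface Schema {
  type?: string;
  description?: string;
  [keyword: string]: unknown;
}

// Hypothetical list of constraint keywords a vendor rejects.
const UNSUPPORTED = [
  "minimum",
  "maximum",
  "exclusiveMinimum",
  "exclusiveMaximum",
  "format",
];

// Remove unsupported keywords and record them in `description`,
// so the model can still read the constraint as plain text.
function downgrade(schema: Schema): Schema {
  const output: Schema = {};
  const notes: string[] = [];
  for (const [key, value] of Object.entries(schema)) {
    if (UNSUPPORTED.includes(key)) notes.push(`@${key} ${String(value)}`);
    else output[key] = value;
  }
  if (notes.length > 0)
    output.description = [schema.description, ...notes]
      .filter((line) => line !== undefined)
      .join("\n");
  return output;
}

const downgraded = downgrade({
  type: "number",
  exclusiveMinimum: 0,
  description: "Divisor",
});
console.log(downgraded);
```

The constraint is no longer enforced by the vendor, so the application should still validate the arguments the model returns against the original schema.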

Understanding the exact JSON schema specifications is the first step toward advancing from Function Calling to Agentic AI. Here's a list of JSON schema specifications for various AI vendors:

- @samchon/openapi
  - IChatGptSchema.ts: OpenAI ChatGPT
  - IClaudeSchema.ts: Anthropic Claude
  - IDeepSeekSchema.ts: High-Flyer DeepSeek
  - IGeminiSchema.ts: Google Gemini
  - ILlamaSchema.ts: Meta Llama

3.2. OpenAPI and MCP

    import { HttpLlm, IHttpLlmApplication } from "@samchon/openapi";

    const app: IHttpLlmApplication<"chatgpt"> = HttpLlm.application({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then((r) => r.json()),
    });
    console.log(app);

Converting OpenAPI document to AI function calling schemas
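To give an intuition for what such a conversion involves, here is a heavily simplified sketch that turns one OpenAPI operation into one function schema. It is not the algorithm used by `@samchon/openapi`. The `Operation` and `FunctionSchema` types, the `toFunction` helper, and the sample operation are all hypothetical.

```typescript
// Simplified slice of an OpenAPI operation (only what this sketch needs).
interface Operation {
  operationId: string;
  description?: string;
  parameters?: { name: string; required?: boolean; schema: object }[];
  requestBody?: { content: { "application/json": { schema: object } } };
}

interface FunctionSchema {
  name: string;
  description?: string;
  parameters: {
    type: "object";
    properties: Record<string, object>;
    required: string[];
  };
}

// Merge path/query parameters and the JSON body into one object schema.
function toFunction(op: Operation): FunctionSchema {
  const properties: Record<string, object> = {};
  const required: string[] = [];
  for (const p of op.parameters ?? []) {
    properties[p.name] = p.schema;
    if (p.required) required.push(p.name);
  }
  const body = op.requestBody?.content["application/json"].schema;
  if (body !== undefined) {
    properties["body"] = body;
    required.push("body");
  }
  return {
    name: op.operationId,
    description: op.description,
    parameters: { type: "object", properties, required },
  };
}

// Hypothetical operation, used only for illustration.
const updateSale = toFunction({
  operationId: "sales_update",
  description: "Update a sale",
  parameters: [{ name: "id", required: true, schema: { type: "string" } }],
  requestBody: {
    content: { "application/json": { schema: { type: "object" } } },
  },
});
console.log(JSON.stringify(updateSale, null, 2));
```

A real converter also has to resolve `$ref` references and adapt each schema to the target vendor's specification, which is where JSON schema knowledge becomes essential.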
