DuckDB

Introduction DuckDB is widely recognized for its analytical processing capabilities, but its true power lies in its exceptional query engine. Unlike traditional databases that require extensive setup and ETL processes, DuckDB allows users to query data from various sources seamlessly. This article explores DuckDB’s query engine, its advantages, and a hands-on guide to using it effectively. What Makes DuckDB's Query Engine a Game-Changer? Unlike many databases that require data to be imported before querying, DuckDB’s query engine is designed to work directly on multiple file formats and external databases. Here’s what makes it stand out: Key Features Supports Multiple File Formats: Query CSV, Parquet, JSON, and more without manual conversion. Works with Relational Databases: Connects seamlessly to PostgreSQL, MySQL, and others. Reads Open Data Formats: Supports Iceberg and Delta Lake. Simple SQL Interface: No complex setup required. Multi-language Compatibility: Works with Python, R, Java, Node.js, Rust, and more. Supported by Visualizations tools: Supported as data-source by visualization tools such as Apache-Superset, metabase, JDBC based tools (Tableau,...), etc. Advanced Optimizations in DuckDB DuckDB is a relational (table-oriented) Database Management System (DBMS) that supports the Structured Query Language (SQL). It is designed to address the need for a database system that offers a unique set of trade-offs, particularly for analytical use cases. Key characteristics of DuckDB include its simplicity and embedded operation, drawing inspiration from SQLite. It has no external dependencies for compilation or runtime and is compiled into a single header and implementation file (an "amalgamation"), simplifying deployment. Unlike traditional client-server databases, DuckDB runs completely embedded within a host process, eliminating the need for separate server software installation and maintenance. This embedded nature allows for high-speed data transfer and the capability to process foreign data, such as Pandas DataFrames in Python, without copying. Designed to excel in analytical query workloads (OLAP) characterized by complex, long-running queries over large datasets, DuckDB employs a columnar-vectorized query execution engine. This approach processes data in batches ("vectors"), significantly reducing overhead compared to traditional row-based systems like PostgreSQL, MySQL, or SQLite. DuckDB is also extensible, allowing users to define new data types, functions, file formats, and SQL syntax through an extension mechanism. Notably, support for popular formats like Parquet and JSON, as well as protocols like HTTP(S) and s3, are implemented as extensions. DuckDB is extremely portable, capable of being compiled for all major operating systems (Linux, macOS, Windows) and CPU architectures (x86, ARM), and can even run in web browsers and on mobile phones via DuckDB-Wasm. It provides APIs for various programming languages, including Java, C, C++, Go, and Python. Despite its simplicity, DuckDB is feature-rich, offering extensive support for complex SQL queries, a large function library, window functions, transactional guarantees (ACID properties), persistent single-file databases, and secondary indexes. It is also deeply integrated into Python and R for efficient interactive data analysis. For more technical details, visit the DuckDB Official Documentation. DuckDB vs Apache Spark vs Trino: Quick Comparison Feature DuckDB Apache Spark Trino Setup Costs Free, lightweight Cluster setup required Requires distributed setup Running Costs Minimal Ongoing cluster expenses Cluster or cloud costs Learning Curve Basic SQL knowledge Requires deeper learning Moderate SQL knowledge Performance Fast for local queries Scales well for big data Optimized for distributed SQL Use Case Local analytics Distributed data processing Federated query engine DuckDB is ideal for local analytical queries, Spark is better for large-scale distributed computing, and Trino excels at federated queries across multiple sources. Hands-on: Unleashing DuckDB as Your Query Engine In this tutorial, we’ll use DuckDB to query three different data sources: PostgreSQL Database MySQL Database CSV Files JSON from a Web Server 1. Setting Up the Environment We’ll use Docker Compose to set up the infrastructure. First, clone the repository and start the services: git clone https://github.com/mikekenneth/bp_duck_as_query_engine cd bp_duck_as_query_engine make up # Start services Check running containers with: docker ps Then, access DuckDB CLI: make duckdb To ease the setup, I created a duckdb_init.sql file that contains necessary SQL commands to connect to our external Data sources 2. Connecting to External Data Sources a) Connecting to MinIO (s3-compatible Storage) To interact wi

Introduction

DuckDB is widely recognized for its analytical processing capabilities, but its true power lies in its exceptional query engine. Unlike traditional databases that require extensive setup and ETL processes, DuckDB allows users to query data from various sources seamlessly. This article explores DuckDB’s query engine, its advantages, and a hands-on guide to using it effectively.

What Makes DuckDB's Query Engine a Game-Changer?

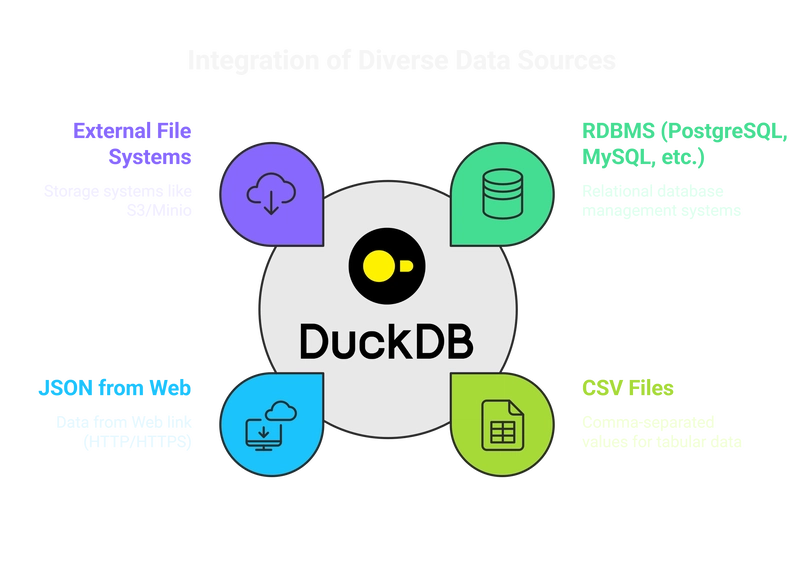

Unlike many databases that require data to be imported before querying, DuckDB’s query engine is designed to work directly on multiple file formats and external databases. Here’s what makes it stand out:

Key Features

- Supports Multiple File Formats: Query CSV, Parquet, JSON, and more without manual conversion.

- Works with Relational Databases: Connects seamlessly to PostgreSQL, MySQL, and others.

- Reads Open Data Formats: Supports Iceberg and Delta Lake.

- Simple SQL Interface: No complex setup required.

- Multi-language Compatibility: Works with Python, R, Java, Node.js, Rust, and more.

- Supported by Visualizations tools: Supported as data-source by visualization tools such as Apache-Superset, metabase, JDBC based tools (Tableau,...), etc.

Advanced Optimizations in DuckDB

DuckDB is a relational (table-oriented) Database Management System (DBMS) that supports the Structured Query Language (SQL). It is designed to address the need for a database system that offers a unique set of trade-offs, particularly for analytical use cases. Key characteristics of DuckDB include its simplicity and embedded operation, drawing inspiration from SQLite. It has no external dependencies for compilation or runtime and is compiled into a single header and implementation file (an "amalgamation"), simplifying deployment. Unlike traditional client-server databases, DuckDB runs completely embedded within a host process, eliminating the need for separate server software installation and maintenance. This embedded nature allows for high-speed data transfer and the capability to process foreign data, such as Pandas DataFrames in Python, without copying.

Designed to excel in analytical query workloads (OLAP) characterized by complex, long-running queries over large datasets, DuckDB employs a columnar-vectorized query execution engine. This approach processes data in batches ("vectors"), significantly reducing overhead compared to traditional row-based systems like PostgreSQL, MySQL, or SQLite. DuckDB is also extensible, allowing users to define new data types, functions, file formats, and SQL syntax through an extension mechanism. Notably, support for popular formats like Parquet and JSON, as well as protocols like HTTP(S) and s3, are implemented as extensions.

DuckDB is extremely portable, capable of being compiled for all major operating systems (Linux, macOS, Windows) and CPU architectures (x86, ARM), and can even run in web browsers and on mobile phones via DuckDB-Wasm. It provides APIs for various programming languages, including Java, C, C++, Go, and Python. Despite its simplicity, DuckDB is feature-rich, offering extensive support for complex SQL queries, a large function library, window functions, transactional guarantees (ACID properties), persistent single-file databases, and secondary indexes. It is also deeply integrated into Python and R for efficient interactive data analysis.

For more technical details, visit the DuckDB Official Documentation.

DuckDB vs Apache Spark vs Trino: Quick Comparison

| Feature | DuckDB | Apache Spark | Trino |

|---|---|---|---|

| Setup Costs | Free, lightweight | Cluster setup required | Requires distributed setup |

| Running Costs | Minimal | Ongoing cluster expenses | Cluster or cloud costs |

| Learning Curve | Basic SQL knowledge | Requires deeper learning | Moderate SQL knowledge |

| Performance | Fast for local queries | Scales well for big data | Optimized for distributed SQL |

| Use Case | Local analytics | Distributed data processing | Federated query engine |

DuckDB is ideal for local analytical queries, Spark is better for large-scale distributed computing, and Trino excels at federated queries across multiple sources.

Hands-on: Unleashing DuckDB as Your Query Engine

In this tutorial, we’ll use DuckDB to query three different data sources:

- PostgreSQL Database

- MySQL Database

- CSV Files

- JSON from a Web Server

1. Setting Up the Environment

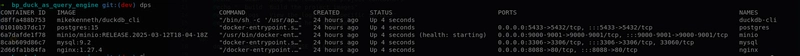

We’ll use Docker Compose to set up the infrastructure. First, clone the repository and start the services:

git clone https://github.com/mikekenneth/bp_duck_as_query_engine

cd bp_duck_as_query_engine

make up # Start services

Check running containers with:

docker ps

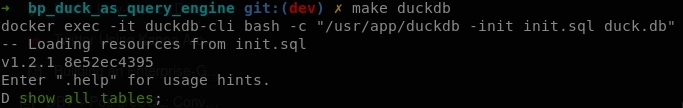

Then, access DuckDB CLI:

make duckdb

To ease the setup, I created a duckdb_init.sql file that contains necessary SQL commands to connect to our external Data sources

2. Connecting to External Data Sources

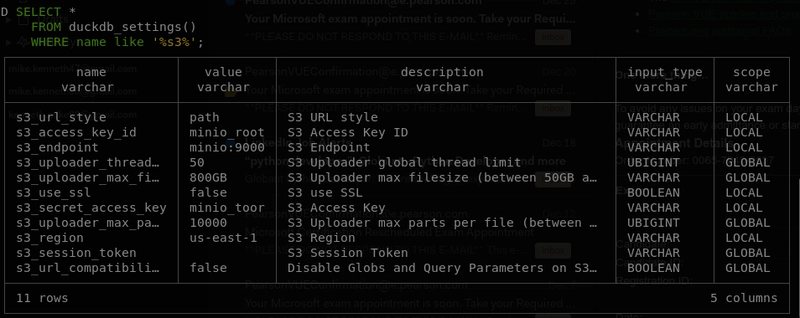

a) Connecting to MinIO (s3-compatible Storage)

To interact with data stored in MinIO, we need to enable the HTTPS extension and configure the connection settings:

-- Enable the HTTPS Extension to connect to s3/Minio

INSTALL https;

LOAD https;

-- Set Connection settings

SET s3_region='us-east-1';

SET s3_url_style='path';

SET s3_endpoint='minio:9000';

SET s3_access_key_id='minio_root' ;

SET s3_secret_access_key='minio_toor';

SET s3_use_ssl=false; -- Needed when running without SSL

Let's verify if the settings are correctly applied.

b) Connecting to PostgreSQL

To query data within our PostgreSQL instance, we need to load the PostgreSQL extension and then attach to the database:

-- Load Extension

INSTALL postgres;

LOAD postgres;

-- Attach the PostgreSQL database

ATTACH 'dbname=postgres user=postgres password=postgres host=postgres port=5432'

AS postgres_db (TYPE postgres, SCHEMA 'public');

Explanation: The

INSTALL postgres;command adds support for interacting with PostgreSQL databases. TheATTACHcommand establishes a connection to the specified PostgreSQL database (dbname,user,password,host) and assigns it the aliaspostgres_db, making its tables accessible within DuckDB. We also specify the database type and schema.

We can now create a table in PostgreSQL by reading a CSV file from our MinIO instance:

-- Create the base table in Postgres from s3

create or replace table postgres_db.fct_trips as

(

select *

from read_csv("s3://duckdb-bucket/init_data/base_raw.csv")

);

c) Connecting to MySQL

-- Load Extension

INSTALL mysql;

LOAD mysql;

-- Attach the MYSQL database

ATTACH 'database=db user=user password=password host=mysql port=3306'

AS mysql_db (TYPE mysql);

Explanation: The

INSTALL mysql;command enables interaction with MySQL databases. TheATTACHcommand connects to the MySQL database using the provided credentials and assigns it the aliasmysql_db. The database type is also specified.

Let's create a table in MySQL by reading a CSV file from MinIO:

-- Create the base table in MySQL from s3

create or replace table mysql_db.dim_credit_card as

(

select *

from read_csv("s3://duckdb-bucket/init_data/base_raw.csv")

);

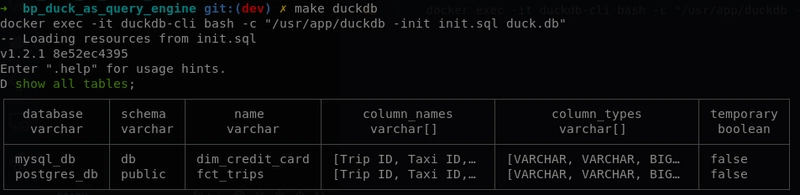

Let's confirm that both databases are successfully attached:

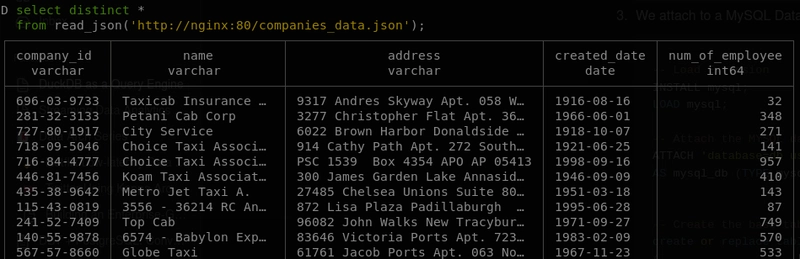

d) Querying JSON from a Web Server

Finally, let's demonstrate fetching data directly from a web server serving a JSON file:

select distinct *

from read_json('http://nginx:80/companies_data.json')

This query directly reads and processes the JSON data available at the specified URL.

Here is the output of the query:

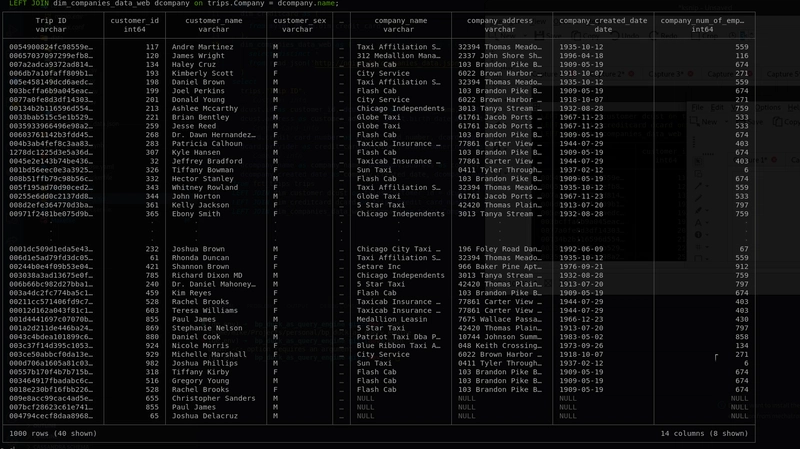

3. Querying Multiple Data Sources Simultaneously

With connectivity established to MinIO and our relational databases, we can now execute a single query that retrieves and joins data from all our configured sources:

---------------- Query multiple Data Sources Simultaneously ----------------

with

fct_trips as (

select *

from postgres_db.public.fct_trips

),

dim_customer as (

select distinct *

from read_csv('s3://duckdb-bucket/init_data/customer.csv')

),

dim_creditcard as (

select distinct *

from mysql_db.db.dim_credit_card

),

dim_companies_data_web as (

select distinct *

from read_json('http://nginx:80/companies_data.json')

)

select

trips."Trip ID",

-- Customer info

dcust.id as customer_id, dcust.name as customer_name,dcust.sex as customer_sex,

dcust.address as customer_address, dcust.birth_date as customer_birth_date,

-- Credit Card info

dcard.credit_card_number as credit_card_number, dcard.expire_date as credit_card_expire_date,

dcard.provider as credit_card_provider, dcard.owner_name as credit_card_owner_name,

-- Company info

dcompany.name as company_name, dcompany.address as company_address,

dcompany.created_date as company_created_date, dcompany.num_of_employee as company_num_of_employee

from fct_trips trips

LEFT JOIN dim_customer dcust on trips.customer_id = dcust.id

LEFT JOIN dim_creditcard dcard on trips.credit_card_number = dcard.credit_card_number

LEFT JOIN dim_companies_data_web dcompany on trips.Company = dcompany.name;

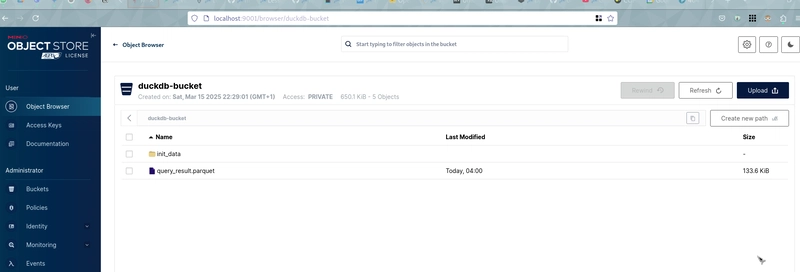

4. Exporting Query Results

Furthermore, DuckDB allows direct export of query results to external storage, such as S3/MinIO, in various file formats. Here, we'll export the joined data as a Parquet file:

COPY (

with

fct_trips as (

select *

from postgres_db.public.fct_trips

...

...

...

from fct_trips trips

LEFT JOIN dim_customer dcust on trips.customer_id = dcust.id

LEFT JOIN dim_creditcard dcard on trips.credit_card_number = dcard.credit_card_number

LEFT JOIN dim_companies_data_web dcompany on trips.Company = dcompany.name

) TO 's3://duckdb-bucket/query_result.parquet' (FORMAT parquet);

We can then verify the exported Parquet file content directly with DuckDB

We can then validate the exported file:

SELECT * FROM read_parquet('s3://duckdb-bucket/query_result.parquet');

Conclusion

DuckDB is a powerful and lightweight query engine that allows querying multiple sources effortlessly. Whether working with CSV files, databases, or web-based JSON data, its simple SQL interface and high performance make it a fantastic choice for analytics. As the ecosystem grows, DuckDB continues to add more features, making it a strong alternative for local data processing.

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[FREE EBOOKS] The Kubernetes Bible, The Ultimate Linux Shell Scripting Guide & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

.jpg?#)

_Christophe_Coat_Alamy.jpg?#)

![Rapidus in Talks With Apple as It Accelerates Toward 2nm Chip Production [Report]](https://www.iclarified.com/images/news/96937/96937/96937-640.jpg)