Doing DE Work as a DS

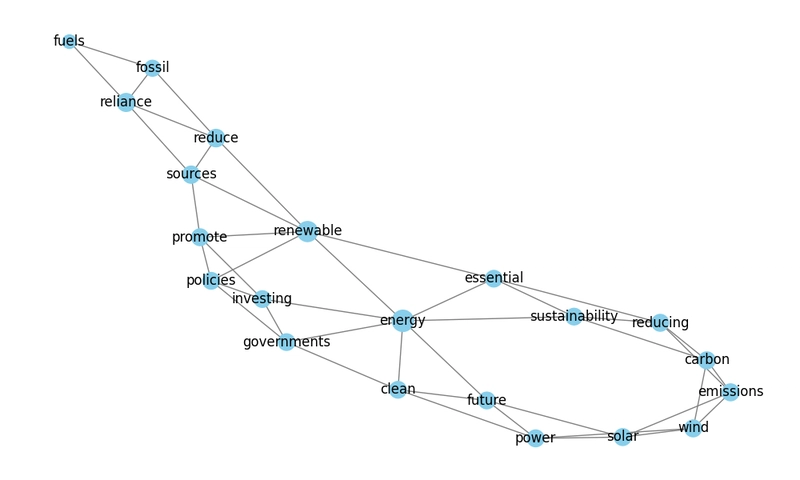

As a Machine Learning Engineer, I've seen how data pipelines become crucial for effective ML systems (sometimes even more than the models themselves). So instead of resisting this work, I suggest embracing it. In a day-to-day data science (DS) job, you inevitably end up doing data engineering (DE) work. Building pipelines to move data between systems isn't a one-time task, and data rarely cooperates by fitting neatly in memory. Once the model development phase is over, you're into the pipeline maintenance phase.

So what are our options? I've tried a few approaches...

Python (Micro)service (FastAPI or a CronJob in a container)

Works well for all kinds of data. With the `schedule` package, it's easy to set up recurring jobs, and with FastAPI you can even handle streaming data. Most pipelines run hourly or daily anyway. I wouldn't recommend this approach for bigger data, though. Sure, you can process in batches, but then you're writing the orchestration logic yourself. Plus, if you ever need to speed up the process, you're left without much ability to scale.
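Here is roughly what "writing the orchestration logic yourself" means in practice. This is a minimal sketch that assumes a generic extract/transform/load split. `batched`, `load_with_retry`, and `run_pipeline` are hypothetical helpers written for illustration and do not come from any library.

```python
import time
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def batched(rows: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield lists of at most `size` rows so the full dataset never sits in memory."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(rows)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def load_with_retry(load: Callable[[List[dict]], None], batch: List[dict],
                    attempts: int = 3, base_delay: float = 1.0) -> None:
    """Call `load`, retrying with exponential backoff (base_delay, 2x, 4x, ...)."""
    for attempt in range(1, attempts + 1):
        try:
            load(batch)
            return
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the failure to the caller
            time.sleep(base_delay * 2 ** (attempt - 1))


def run_pipeline(extract: Callable[[], Iterable[dict]],
                 transform: Callable[[dict], dict],
                 load: Callable[[List[dict]], None],
                 batch_size: int = 1000) -> int:
    """Hypothetical extract -> transform -> load job; returns the number of rows loaded."""
    total = 0
    for batch in batched(extract(), batch_size):
        load_with_retry(load, [transform(row) for row in batch])
        total += len(batch)
    return total
```

To run this on a timer with the `schedule` package, you would register a job with `schedule.every().hour.do(job)` and keep calling `schedule.run_pending()` in a loop. Batching, retries, and any parallelism stay your own responsibility, which is the trade-off described above.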

Scheduled Jobs (Cloud Run Jobs, K8s Jobs and CronJobs)

This has become my go-to for medium-sized datasets (especially since Cloud Run is so easy to set up). Because it's just a Docker container, I can use whatever packages and architecture I want. Startup times are quick, and it can be scaled manually.

K8s Jobs and Cloud Run Jobs both let you parameterize executions. Batch numbers, date ranges, or partition keys can be passed to the job as parameters without rebuilding the Docker image. All you need to handle is starting the job, which can be done via Airflow or any microservice that can send HTTP requests or run kubectl commands. With CronJobs, you can simply set up multiple CronJobs with different parameters, and Kubernetes handles the startup itself.

Another upside is that you pay only while the job is running. However, there is an annoying part as well: you build everything by hand, including integration, batching, orchestration, and failure handling.
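To make the parameterization idea concrete, here is a minimal sketch of a job entrypoint that reads its parameters from environment variables and splits a date range across parallel tasks. `CLOUD_RUN_TASK_INDEX`/`CLOUD_RUN_TASK_COUNT` (Cloud Run Jobs) and `JOB_COMPLETION_INDEX` (Kubernetes Indexed Jobs) are set by the platforms. `START_DATE`, `END_DATE`, and `TASK_COUNT` are hypothetical names you would pass yourself.

```python
import os
from datetime import date, timedelta
from typing import List, Mapping, Optional, Tuple


def task_position(env: Mapping[str, str]) -> Tuple[int, int]:
    """Return (task index, task count) for this execution.

    Cloud Run Jobs set CLOUD_RUN_TASK_INDEX and CLOUD_RUN_TASK_COUNT; Kubernetes
    Indexed Jobs set JOB_COMPLETION_INDEX. TASK_COUNT is a hypothetical variable
    you would set yourself to match the Job's `completions`.
    """
    if "CLOUD_RUN_TASK_INDEX" in env:
        return int(env["CLOUD_RUN_TASK_INDEX"]), int(env.get("CLOUD_RUN_TASK_COUNT", "1"))
    if "JOB_COMPLETION_INDEX" in env:
        return int(env["JOB_COMPLETION_INDEX"]), int(env.get("TASK_COUNT", "1"))
    return 0, 1  # local run: a single task handles everything


def dates_for_task(start: date, end: date, index: int, count: int) -> List[date]:
    """Split the inclusive range [start, end] round-robin across `count` tasks."""
    days = [start + timedelta(days=i) for i in range((end - start).days + 1)]
    return days[index::count]


def main(env: Optional[Mapping[str, str]] = None) -> List[date]:
    env = os.environ if env is None else env
    # START_DATE / END_DATE are hypothetical parameter names passed as env vars;
    # they default to "yesterday" so the same image also works as a plain daily job.
    yesterday = (date.today() - timedelta(days=1)).isoformat()
    start = date.fromisoformat(env.get("START_DATE", yesterday))
    end = date.fromisoformat(env.get("END_DATE", start.isoformat()))
    index, count = task_position(env)
    mine = dates_for_task(start, end, index, count)
    for day in mine:
        print(f"task {index}/{count}: processing partition {day}")  # real work goes here
    return mine


if __name__ == "__main__":
    main()
```

Because the parameters live in the environment rather than in the image, the same image can serve both a one-off backfill (one execution with many tasks over a wide date range) and a daily CronJob (no overrides, so it processes yesterday's partition).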

Specialized Services (Apache Beam with Dataflow, Spark, Flink)

Apache Beam lets you describe your workload once and then execute it on different runners. If you have these runners available, you're in luck: Beam and its runners come with many pre-built connectors for moving data around, and they handle batching, autoscaling, and throttling out of the box. It works with streaming data too. However, Apache Beam is more expensive and has a steeper learning curve, even with runners available. On the bright side, as a DS you'll love tweaking the configs to find the perfect batch size and interval. This setup works really well once you've spent the time setting it up and configuring it, and have all the necessary components close at hand to create new pipelines or tweak existing ones.

Despite all these options, consistency trumps theoretical perfection. If your team has already invested in Apache Beam, stick with it rather than introducing yet another pipeline approach.

Either way, these DE skills aren't optional extras anymore; they're becoming core to our toolkit. Data scientists are increasingly expected to manage the full DS lifecycle.

What approaches have worked for you?
