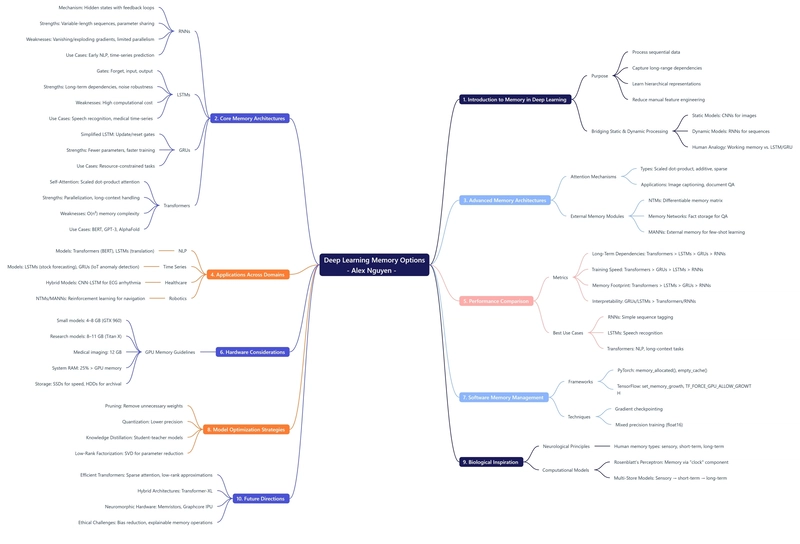

Deep Learning Memory Option - By Alex Nguyen


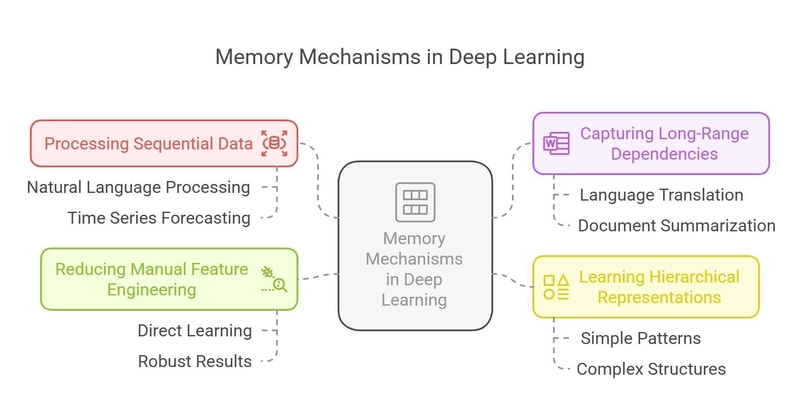

In artificial intelligence and machine learning, memory options in deep learning play a crucial role in enabling models to handle complex tasks that require understanding sequences and maintaining contextual dependencies.

Memory options in deep learning architectures allow models to process sequential data like text, speech, and time series, capture long-range dependencies, learn hierarchical representations, and significantly reduce the need for manual feature engineering by autonomously extracting intricate patterns.

1. Introduction to Memory Options in Deep Learning

Memory mechanisms in deep learning are essential for enabling models to process and understand sequential data effectively. These mechanisms allow networks to maintain information over time, which is crucial for tasks such as natural language processing, time series forecasting, and any application where context and sequence matter.

By providing the ability to remember past inputs and use this information to influence future outputs, memory options give deep learning models a significant advantage in handling dynamic and complex data.

Purpose of Memory Mechanisms

Memory mechanisms serve several critical purposes in deep learning. Firstly, they enable the processing of sequential data, such as text, speech, and time series, allowing models to consider temporal relationships that are inherent in these types of data. For instance, in natural language processing, understanding the context of words within a sentence requires remembering previous words and their meanings.

Secondly, memory options help in capturing long-range dependencies. This is particularly important in tasks like language translation or document summarization, where understanding the entire context is necessary for accurate interpretation. For example, in a long sentence, a model needs to remember the subject at the beginning to correctly conjugate the verb at the end, illustrating the importance of maintaining long-term memory.

Thirdly, these mechanisms facilitate the learning of hierarchical representations. This means that models can start by recognizing simple patterns (like edges in images) and progressively build up to more complex structures (such as shapes and ultimately objects). In the case of language, this could mean identifying individual words before understanding phrases and then entire sentences.

Finally, memory mechanisms reduce the need for manual feature engineering. By autonomously extracting complex patterns from raw data, deep learning models with memory options can learn directly from the data, thereby simplifying the modeling process and potentially leading to more accurate and robust results.

Bridging Static and Dynamic Processing

The distinction between static and dynamic processing in deep learning is an important consideration when discussing memory options. Static data models, such as Convolutional Neural Networks (CNNs) used for image recognition, operate on fixed-size inputs where the spatial arrangement of data is crucial but the temporal aspect is irrelevant.

In contrast, dynamic models, like Recurrent Neural Networks (RNNs), are designed to handle variable-length sequences and capture temporal dependencies, making them suitable for tasks like speech recognition and language translation.

Memory functions in deep learning aim to bridge these two types of processing by mimicking human cognitive processes. Humans possess both working memory (for short-term processing) and long-term memory (for retaining information over time), and deep learning models strive to emulate these capabilities.

For instance, Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) incorporate mechanisms to selectively retain or discard information, much like human selective attention and memory consolidation processes.

By integrating memory into neural networks, deep learning models gain the ability to handle not just the static aspects of data but also the dynamic, sequential nature of many real-world problems.

This integration enables models to process information in a way that is more reflective of how humans interact with and interpret the world around them, leading to advancements in fields like natural language processing, time series analysis, and beyond.

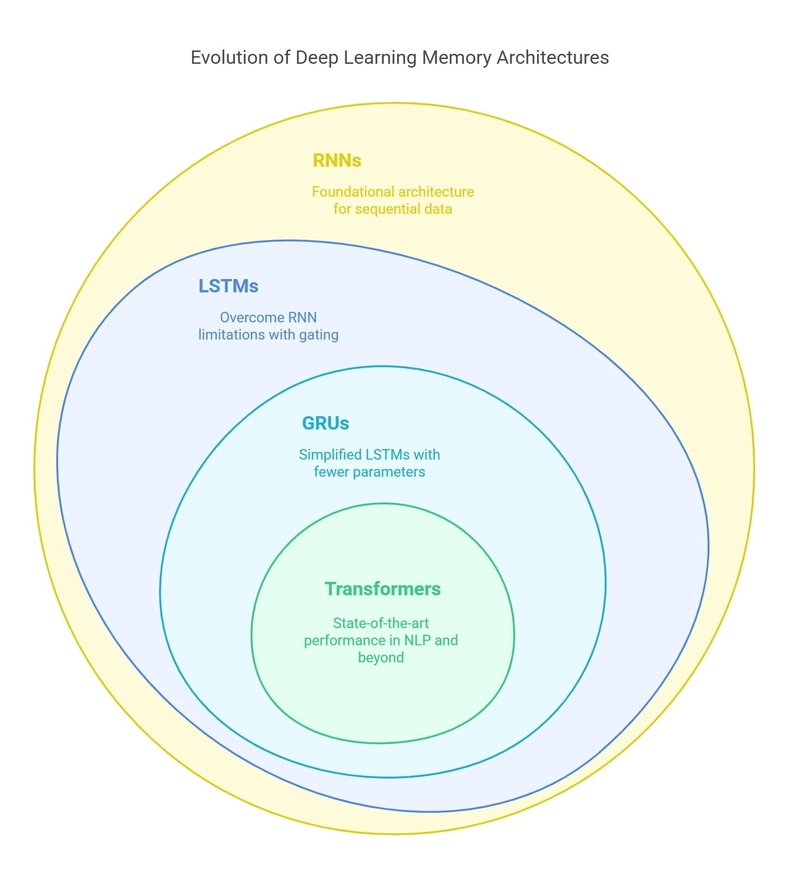

2. Core Memory Architectures

The development of core memory architectures in deep learning has led to significant advancements in handling sequential data and complex patterns. From the foundational Recurrent Neural Networks (RNNs) to the more sophisticated Long Short-Term Memory (LSTM), Gated Recurrent Units (GRUs), and Transformer networks, each architecture brings unique strengths and addresses different aspects of memory management in deep learning.

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are one of the earliest and most fundamental memory architectures used in deep learning. The primary mechanism behind RNNs is the use of hidden states that propagate sequential context through the network. Mathematically, this process can be described by the equation:

\[ h_t = f(W \cdot [h_{t-1}, x_t] + b) \]

where \( h_t \) represents the hidden state at time step \( t \), \( f \) is a non-linear activation function, \( W \) is the weight matrix, \( h_{t-1} \) is the previous hidden state, \( x_t \) is the current input, and \( b \) is the bias term.
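The recurrence can be sketched in a few lines of plain Python. This is a minimal illustration, assuming `tanh` as the activation function; the helper names `rnn_step` and `run_rnn` and the toy weights are hypothetical and not taken from any library.

```python
import math

def rnn_step(W, b, h_prev, x_t):
    """One step of h_t = tanh(W . [h_prev, x_t] + b) using plain lists."""
    concat = h_prev + x_t  # list concatenation plays the role of [h_{t-1}, x_t]
    return [
        math.tanh(sum(w * v for w, v in zip(row, concat)) + bias)
        for row, bias in zip(W, b)
    ]

def run_rnn(W, b, inputs, hidden_size):
    """Fold a sequence of any length into a final hidden state."""
    h = [0.0] * hidden_size
    for x_t in inputs:
        h = rnn_step(W, b, h, x_t)  # the same W and b are reused at every step
    return h

# Hypothetical toy weights: hidden size 2, input size 1.
W = [[0.5, -0.3, 0.8],
     [0.1, 0.4, -0.6]]
b = [0.0, 0.1]
final_h = run_rnn(W, b, [[1.0], [0.5], [-1.0]], hidden_size=2)
```

The same weights are applied at each time step, so the sequence passed to `run_rnn` can have any length.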

Architecture & Mechanism

The architecture of an RNN involves looping the output of a layer back to the input to form a feedback loop. This allows the network to maintain an internal state that captures information about previous inputs. Each neuron in an RNN receives input from the current time step and the hidden state from the previous time step, allowing the network to have a "memory" of past events.

Strengths

One of the key strengths of RNNs is their ability to handle variable-length sequences. Unlike feedforward neural networks, which require fixed-size inputs, RNNs can process sequences of any length, making them versatile for applications like natural language processing and time-series prediction. Additionally, RNNs share parameters across time steps, which helps in reducing the number of parameters needed compared to fully connected networks.

Weaknesses

Despite their strengths, RNNs suffer from the problem of vanishing and exploding gradients, which makes it difficult for them to capture long-range dependencies. As the sequence length increases, the gradients used to update the network weights either become too small (vanishing) or too large (exploding), hindering the training process. Furthermore, the sequential nature of RNNs limits their ability to be parallelized, resulting in slower training times.
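A rough way to see why gradients vanish or explode is to treat backpropagation through time as repeated multiplication by a per-step factor. The sketch below is a deliberate simplification (a single scalar factor instead of a Jacobian matrix), and `gradient_scale` is a hypothetical helper.

```python
def gradient_scale(per_step_factor, steps):
    """Magnitude of a gradient after being multiplied by the same factor at every step."""
    scale = 1.0
    for _ in range(steps):
        scale *= per_step_factor
    return scale

# A factor slightly below 1 shrinks the gradient; slightly above 1 blows it up.
vanishing = gradient_scale(0.9, 100)
exploding = gradient_scale(1.1, 100)
```

Even factors close to 1 produce gradients that differ by many orders of magnitude after 100 steps, which is why long sequences are hard for plain RNNs.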

Use Cases

Early applications of RNNs included tasks in natural language processing, such as sentiment analysis and language modeling, as well as simple time-series prediction tasks. While RNNs have been largely superseded by more advanced architectures like LSTMs and Transformers, they still serve as a foundational concept in understanding memory mechanisms in deep learning.

Long Short-Term Memory (LSTM)

Long Short-Term Memory (LSTM) networks are an extension of RNNs designed primarily to address the vanishing gradient problem that limits standard RNNs. LSTMs introduce a more sophisticated architecture with memory cells and gating mechanisms that allow the network to selectively remember or forget information.

Architecture & Gates

The core component of an LSTM is the memory cell (\( C_t \)), which is regulated by three gates: the forget gate (\( f_t \)), the input gate (\( i_t \)), and the output gate (\( o_t \)). These gates control the flow of information through the network, allowing the LSTM to decide what to keep in memory and what to discard.

The key equations governing the operations of an LSTM are as follows:

\[ f_t = \sigma(W_f \cdot [h_{t-1}, x_t] + b_f) \]

\[ i_t = \sigma(W_i \cdot [h_{t-1}, x_t] + b_i) \]

\[ \tilde{C}_t = \tanh(W_C \cdot [h_{t-1}, x_t] + b_C) \]

\[ C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \]

\[ o_t = \sigma(W_o \cdot [h_{t-1}, x_t] + b_o) \]

\[ h_t = o_t \odot \tanh(C_t) \]

where \( \sigma \) is the sigmoid function, \( \odot \) denotes element-wise multiplication, and \( \tanh \) is the hyperbolic tangent function.
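The gate equations translate almost line by line into code. The following is a minimal pure-Python sketch of a single LSTM step; the function names and the `params` dictionary layout are illustrative assumptions, and the toy weights are chosen only to show the forget gate holding the cell state steady.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W . v + b for a list-of-rows matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]

def lstm_step(params, h_prev, c_prev, x_t):
    """One LSTM step following the six equations for f, i, C-tilde, C, o and h."""
    concat = h_prev + x_t  # [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(params["W_f"], params["b_f"], concat)]
    i = [sigmoid(z) for z in affine(params["W_i"], params["b_i"], concat)]
    c_tilde = [math.tanh(z) for z in affine(params["W_C"], params["b_C"], concat)]
    o = [sigmoid(z) for z in affine(params["W_o"], params["b_o"], concat)]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

# Hypothetical toy parameters (hidden size 1, input size 1): a large forget
# bias keeps the old cell state, a large negative input bias blocks new input.
zeros = [[0.0, 0.0]]
params = {"W_f": zeros, "b_f": [20.0], "W_i": zeros, "b_i": [-20.0],
          "W_C": zeros, "b_C": [0.0], "W_o": zeros, "b_o": [0.0]}
h, c = lstm_step(params, h_prev=[0.0], c_prev=[0.7], x_t=[1.0])
```

With the forget gate saturated near 1 and the input gate near 0, the cell state passes through the step almost unchanged, which is the mechanism that lets information survive across many time steps.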

Strengths

LSTMs mitigate the vanishing gradient problem by using gating mechanisms to control the flow of information. This allows them to capture long-range dependencies more effectively than standard RNNs. Additionally, LSTMs are robust against noisy data, making them suitable for applications where input data may contain errors or inconsistencies.

Weaknesses

The main drawback of LSTMs is their higher computational cost compared to RNNs. An LSTM layer has about four times as many parameters as a plain RNN layer of the same size, because each of its three gates and its candidate cell state has its own weight matrix and bias. This can lead to longer training times and increased memory requirements. Moreover, LSTMs often require careful hyperparameter tuning to achieve optimal performance.
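The factor of four follows directly from counting weights. The sketch below assumes one weight matrix over the concatenated \( [h_{t-1}, x_t] \) and one bias vector per block, as in the equations in this article; real framework implementations may count biases slightly differently. The helper name and the layer sizes are hypothetical.

```python
def recurrent_param_count(hidden_size, input_size, num_blocks):
    """Parameters for num_blocks weight matrices over [h, x], each with a bias vector."""
    per_block = hidden_size * (hidden_size + input_size) + hidden_size
    return num_blocks * per_block

# Hypothetical layer sizes, chosen only for illustration.
hidden, inputs = 128, 64
rnn_params = recurrent_param_count(hidden, inputs, 1)   # single transform
gru_params = recurrent_param_count(hidden, inputs, 3)   # update, reset, candidate
lstm_params = recurrent_param_count(hidden, inputs, 4)  # forget, input, output, candidate
```

Under these assumptions a GRU sits between the two, with three blocks instead of four.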

Use Cases

LSTMs have been widely adopted in various domains, including speech recognition, machine translation, and medical time-series analysis. Their ability to handle long sequences and capture intricate temporal relationships makes them a popular choice for many sequence-based tasks.

Gated Recurrent Units (GRUs)

Gated Recurrent Units (GRUs) are a simplified version of LSTMs that aim to achieve similar performance with fewer parameters. GRUs combine the forget and input gates into a single update gate, reducing the complexity of the architecture while maintaining the ability to capture long-term dependencies.

Architecture & Gates

The architecture of a GRU includes two gating mechanisms: the update gate (\( z_t \)) and the reset gate (\( r_t \)). The update gate balances the influence of past and new information, while the reset gate determines how much of the past information to forget.

The key equations for a GRU are:

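\[ z_t = \sigma(W_z \cdot [h_{t-1}, x_t] + b_z) \]

\[ r_t = \sigma(W_r \cdot [h_{t-1}, x_t] + b_r) \]

\[ \tilde{h}_t = \tanh(W \cdot [r_t \odot h_{t-1}, x_t] + b) \]

\[ h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \]

Transformers

Transformers replace recurrence with self-attention, which is built on scaled dot-product attention:

\[ \text{Attention}(Q, K, V) = \text{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V \]

where \( Q \), \( K \), and \( V \) are the query, key, and value matrices, respectively, and \( d_k \) is the dimension of the keys.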
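Scaled dot-product attention over queries, keys, and values can be sketched in plain Python as follows. This is a single-head illustration with no masking or learned projections; the function names and the toy matrices are assumptions made for the example.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(Q, K, V):
    """Return (outputs, weights) where weights = softmax(Q K^T / sqrt(d_k))."""
    d_k = len(K[0])
    outputs, all_weights = [], []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Each output row is a weighted average of the value rows.
        out = [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy inputs: two tokens, key dimension 2.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
outputs, weights = scaled_dot_product_attention(Q, K, V)
```

Each row of `weights` sums to 1, so every output is a convex combination of the value vectors, weighted toward the keys that best match the query.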

Transformers employ multi-head attention, which involves running several attention mechanisms in parallel to capture diverse contextual relationships. Additionally, positional encoding is used to inject information about the order of the sequence, typically using sinusoidal functions.
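Sinusoidal positional encoding can be sketched as below. The base of 10000 and the sine/cosine interleaving follow the commonly used formulation and are assumptions here, since this article does not spell them out; the function name is hypothetical.

```python
import math

def sinusoidal_positional_encoding(num_positions, d_model, base=10000.0):
    """Even dimensions use sine, odd dimensions use cosine of the same angle."""
    pe = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            # Dimensions 2i and 2i+1 share one frequency.
            angle = pos / (base ** ((2 * (j // 2)) / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = sinusoidal_positional_encoding(num_positions=4, d_model=4)
```

These vectors are typically added to the token embeddings so that otherwise order-agnostic attention layers can distinguish positions.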

Strengths

Transformers are highly parallelizable, allowing for faster training and inference times compared to recurrent architectures. Their ability to capture long-range dependencies efficiently has led to state-of-the-art performance on various NLP benchmarks, exemplified by models like BERT and GPT.

Weaknesses

One of the main drawbacks of Transformers is their \( O(n^2) \) memory complexity with respect to sequence length \( n \). This can become a bottleneck when dealing with very long sequences. Additionally, Transformers typically require large datasets for effective training, as their performance tends to improve with more data.
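The quadratic growth is easy to quantify for the attention score matrix alone. The helper below is a hypothetical back-of-the-envelope calculation that assumes 32-bit floats and ignores every other source of memory use.

```python
def attention_matrix_bytes(seq_len, num_heads=1, bytes_per_value=4):
    """Bytes needed to hold one n x n attention score matrix per head."""
    return seq_len * seq_len * num_heads * bytes_per_value

short_seq = attention_matrix_bytes(1024)
long_seq = attention_matrix_bytes(2048)
growth = long_seq / short_seq  # doubling the length quadruples the memory
```

Doubling the sequence length quadruples the size of the score matrix, which is why very long inputs quickly exhaust GPU memory.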

Use Cases

Transformers are widely used in applications such as machine translation, document summarization, and even in domains like protein folding with models like AlphaFold. Their versatility and strong performance make them a cornerstone of modern deep learning, particularly in natural language processing.

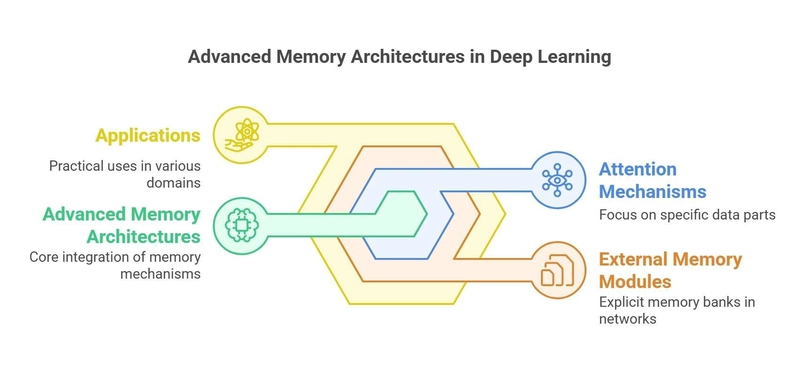

3. Advanced Memory Architectures

Beyond the core memory architectures, there exist more advanced approaches that integrate additional memory mechanisms to enhance the capabilities of deep learning models. These include various forms of attention mechanisms and external memory modules, each bringing unique benefits to the table.

Attention Mechanisms

Attention mechanisms have become a pivotal advancement in deep learning, allowing models to focus on specific parts of the input data while processing it. These mechanisms have been integrated into various architectures, enhancing their ability to capture relevant information and improving performance on a wide range of tasks.

Types

There are several types of attention mechanisms used in deep learning. The scaled dot-product attention is the basis for the self-attention mechanism used in Transformer networks. It computes the attention scores by scaling the dot product of the query and key vectors and applying a softmax function.

Another type is additive or content-based attention, which was used in early sequence-to-sequence models. This method computes attention scores using a feedforward neural network, allowing for more complex interactions between the input and the attention weights.

Sparse attention mechanisms, such as those used in the Longformer model, aim to reduce the computational cost associated with processing long sequences. By attending to only a subset of the input data, sparse attention can maintain the benefits of attention mechanisms while being more efficient.
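The saving from attending to a subset of positions can be estimated by counting query-key pairs. The sketch below assumes a simple symmetric sliding window, which is only one of the patterns sparse-attention models use; `sliding_window_pairs` is a hypothetical helper.

```python
def sliding_window_pairs(seq_len, window):
    """Count query-key pairs when each token attends to `window` neighbours on each side."""
    total = 0
    for i in range(seq_len):
        lo = max(0, i - window)
        hi = min(seq_len - 1, i + window)
        total += hi - lo + 1
    return total

def full_attention_pairs(seq_len):
    return seq_len * seq_len

sparse = sliding_window_pairs(seq_len=8, window=1)
dense = full_attention_pairs(8)
```

For a fixed window the pair count grows linearly with sequence length, while full attention grows quadratically.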

Applications

Attention mechanisms have found numerous applications across different domains. In image captioning, attention allows the model to focus on salient regions of the image, generating more accurate and contextually relevant captions. In document question answering, attention helps retrieve relevant passages from the document, improving the accuracy of the answers provided.

External Memory Modules

External memory modules represent another class of advanced memory architectures that integrate explicit memory banks into neural networks. These modules allow models to store and retrieve information from external memory, enabling them to tackle more complex tasks and exhibit behaviors akin to human cognition.

Neural Turing Machines (NTMs)

Neural Turing Machines (NTMs) combine a controller network with a differentiable memory matrix. They use both content-based and location-based addressing mechanisms to read from and write to the memory. NTMs are capable of performing algorithmic tasks and have been shown to exhibit some degree of reasoning ability.
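Content-based addressing can be illustrated with a small sketch: compare a query key against every memory row, turn the similarities into weights, and read a weighted sum. The cosine similarity, the `sharpness` parameter, and all function names are illustrative assumptions rather than a faithful NTM implementation, and location-based addressing is omitted.

```python
import math

def cosine_similarity(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / (norm + 1e-8)  # small constant avoids division by zero

def content_addressing(memory, key, sharpness=5.0):
    """Softmax over scaled similarities between the key and each memory row."""
    scores = [sharpness * cosine_similarity(row, key) for row in memory]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def read_memory(memory, weights):
    """Differentiable read: a weighted sum of memory rows."""
    width = len(memory[0])
    return [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]

# Hypothetical 3-slot memory and a key that resembles the second slot.
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
weights = content_addressing(memory, key=[0.0, 0.9, 0.1])
read_vector = read_memory(memory, weights)
```

Because the read is a smooth weighted sum rather than a hard lookup, gradients can flow through the addressing step during training.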

Memory Networks & Dynamic Memory Networks

Memory networks store facts in memory slots and perform iterative reasoning over the stored information. These networks are particularly useful in tasks like question answering, where the model needs to retrieve relevant information from a large knowledge base. Dynamic memory networks extend this concept by incorporating a more flexible memory update mechanism.

Memory-Augmented Neural Networks (MANNs)

Memory-Augmented Neural Networks (MANNs) integrate neural networks with external memory modules, allowing them to learn from and reason with stored information. These models are especially useful in few-shot learning scenarios and for tasks that require complex algorithmic reasoning. Some MANNs use explicit memory banks for long-term storage, providing a persistent memory that can be accessed throughout the model's operation.

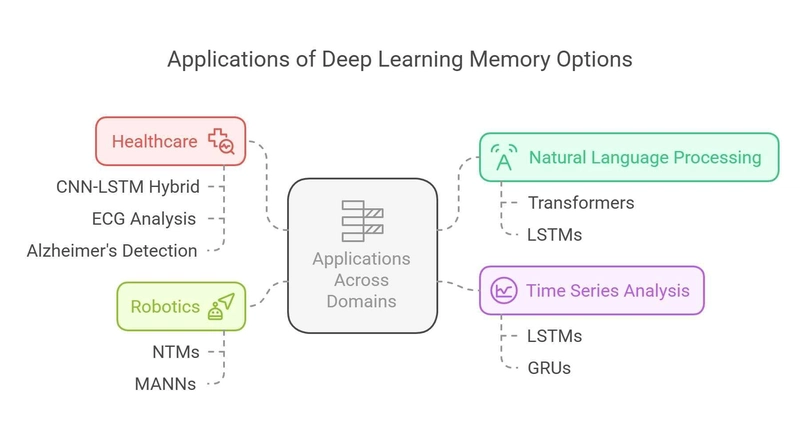

4. Applications Across Domains

Deep learning memory options have been applied across various domains, demonstrating their versatility and effectiveness in handling sequential and contextual data. From natural language processing to healthcare and robotics, these memory architectures have enabled breakthroughs in numerous fields.

Natural Language Processing (NLP)

In the realm of natural language processing, memory options such as Transformers and LSTMs have played a crucial role in advancing the field. These architectures enable models to capture the nuances of human language, from syntax and semantics to context and sentiment.

Memory Options

The primary memory options used in NLP are Transformers and LSTMs. Transformers, with their self-attention mechanisms, have revolutionized NLP by allowing models to process entire sequences simultaneously and capture long-range dependencies effectively. Models like BERT (Bidirectional Encoder Representations from Transformers) use Transformers to encode text, achieving state-of-the-art performance on tasks like text classification and named entity recognition.

LSTMs, on the other hand, are widely used for tasks that require understanding sequential data over time. They have been successful in applications like machine translation, where capturing the context of entire sentences is crucial for accurate translations.

Examples

BERT is an example of a Transformer-based model that has achieved remarkable success in encoding text. It uses bidirectional context to understand the meaning of words in a sentence, making it highly effective for tasks like sentiment analysis and question answering.

GPT-3 (Generative Pre-trained Transformer 3) is another example, showcasing the power of Transformers in text generation. With its massive number of parameters and extensive training data, GPT-3 can generate coherent and contextually relevant text, demonstrating the potential of memory-rich models in creative writing and content generation.

Time Series Analysis

Time series analysis is another domain where memory options have proven to be invaluable. The ability to capture temporal dependencies and trends is crucial for tasks like stock price forecasting and sensor data analysis.

Memory Options

LSTMs and GRUs are the primary memory options used in time series analysis. Both architectures are designed to handle sequential data, making them suitable for analyzing time series data where past values influence future predictions.

Examples

In stock forecasting, LSTMs can be used to predict future stock prices based on historical data. By capturing longer-term dependencies in price movements, LSTMs can in some settings provide more accurate forecasts than traditional statistical methods.

In IoT sensor anomaly detection, GRUs are often employed to monitor sensor data over time and identify unusual patterns that may indicate a malfunction or an event of interest. Their ability to process long sequences with fewer parameters makes them a practical choice for real-time applications.

Healthcare

In healthcare, deep learning models with memory options have been used to analyze medical data, such as ECG signals and brain scans, to detect and diagnose various conditions.

Memory Options

Hybrid CNN-LSTM architectures are commonly used in healthcare applications. The CNN component is used to extract features from images or signals, while the LSTM component captures the temporal dependencies in the data.

Examples

In ECG arrhythmia classification, hybrid CNN-LSTM models can analyze ECG signals over time to identify irregular heartbeats. The CNN extracts features from the ECG waveform, and the LSTM captures the temporal patterns that indicate different types of arrhythmias.

In Alzheimer's disease detection, similar hybrid models have been used to analyze brain scans and track changes over time. By capturing the progression of brain atrophy and other markers, these models can aid in early diagnosis and monitoring of the disease.

Robotics

In the field of robotics, memory options play a crucial role in enabling robots to navigate and interact with their environment effectively. Memory-augmented models can help robots learn from past experiences and adapt to new situations.

Memory Options

Neural Turing Machines (NTMs) and Memory-Augmented Neural Networks (MANNs) are key memory options used in robotics. These models allow robots to store and retrieve information from external memory, facilitating complex decision-making and navigation tasks.

Examples

In reinforcement learning for navigation, NTMs can be used to store maps and trajectories, allowing robots to learn optimal paths and avoid obstacles. By leveraging external memory, robots can improve their navigation performance over time and adapt to changing environments.

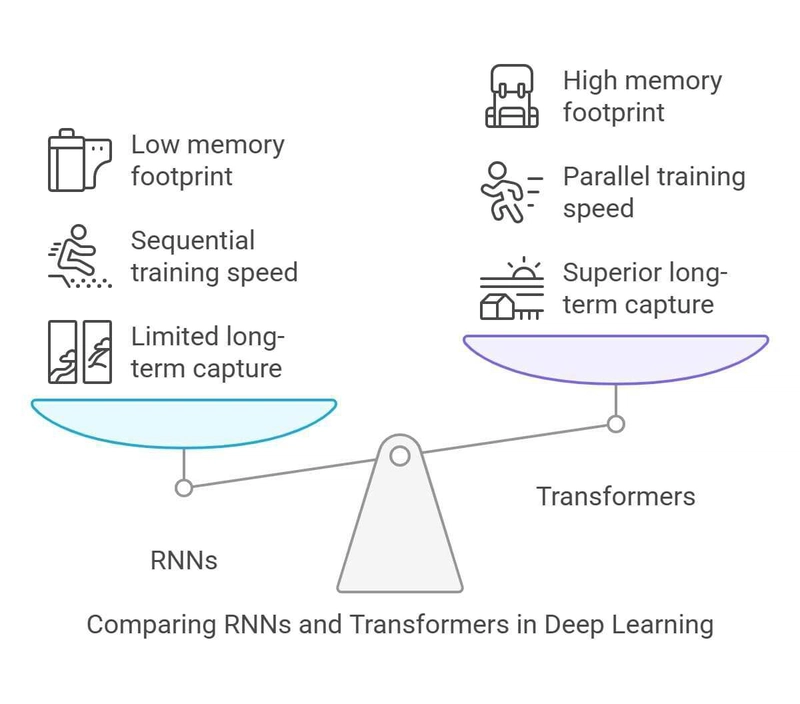

5. Performance Comparison of Memory Architectures

Comparing the performance of different memory architectures in deep learning is essential for choosing the right model for a given task. Various metrics can be used to evaluate these architectures, including their ability to capture long-term dependencies, training speed, memory footprint, and interpretability.

Metric: Long-Term Dependencies

The ability to capture long-term dependencies is a critical measure of performance for memory architectures. Traditional RNNs struggle with this due to the problem of vanishing and exploding gradients, making them poor performers in tasks requiring long-range context.

LSTMs excel in capturing long-term dependencies thanks to their gating mechanisms, which allow the network to selectively remember or forget information over time. GRUs also perform well in this regard, though they may be slightly less effective than LSTMs for highly complex tasks.

Transformers are considered the best performers in capturing long-term dependencies, as their self-attention mechanisms allow them to consider the entire input sequence simultaneously. This makes them particularly effective for tasks like language translation and text generation.

Metric: Training Speed

Training speed is another important metric, as it affects the time required to develop and deploy deep learning models. RNNs are generally fast to train on short sequences, but their sequential nature limits parallelism, leading to slower training on longer sequences.

LSTMs tend to be slower to train due to their complex gating mechanisms and larger number of parameters. GRUs strike a balance between training speed and performance, offering moderate training times with competitive accuracy.

Transformers are known for their fast training speeds due to their highly parallelizable architecture. This makes them particularly advantageous for large-scale applications where training time is a critical factor.

Metric: Memory Footprint

The memory footprint of a model is a crucial consideration, especially in resource-constrained environments. RNNs have a relatively low memory footprint, as they do not require storing extensive intermediate states.

LSTMs have a higher memory footprint due to the need to maintain both the cell state and the hidden state. GRUs have a moderate memory footprint, falling between RNNs and LSTMs in terms of memory usage.

Transformers have a very high memory footprint, especially for long sequences, due to their O(n²) complexity. This can be a limiting factor in applications where memory resources are limited.

Metric: Interpretability

Interpretability is increasingly important in deep learning, as it helps users understand and trust model predictions. RNNs have low interpretability due to their black-box nature and the difficulty in understanding how information flows through the network over time.

LSTMs and GRUs offer moderate interpretability, as their gating mechanisms provide some insight into which information is retained or discarded. However, understanding the exact decision-making process remains challenging.

Transformers also have low interpretability, as their attention mechanisms can be difficult to interpret. Despite efforts to visualize attention weights, understanding the full workings of a Transformer remains a complex task.

Best Use Case

Each memory architecture has its best use cases, depending on the specific requirements of the task at hand. RNNs are best suited for simple sequence tagging tasks where capturing long-term dependencies is not crucial.

LSTMs are ideal for applications like speech recognition and machine translation, where understanding long sequences and capturing temporal dependencies is essential. GRUs are well-suited for resource-constrained tasks, offering a good balance between performance and efficiency.

Transformers are the architecture of choice for NLP and long-context tasks, where their ability to process entire sequences simultaneously and capture long-range dependencies provides significant advantages.

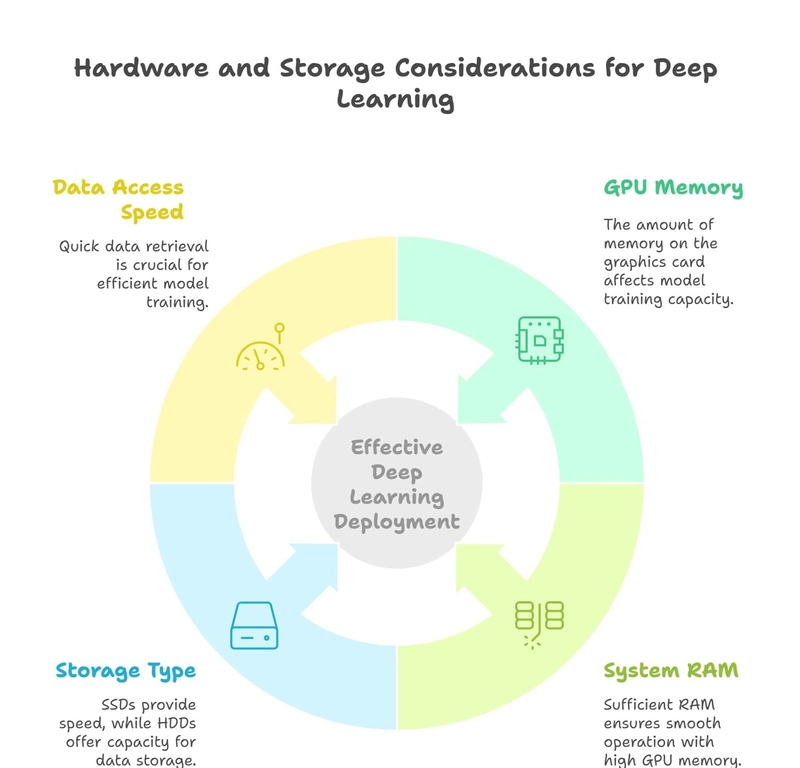

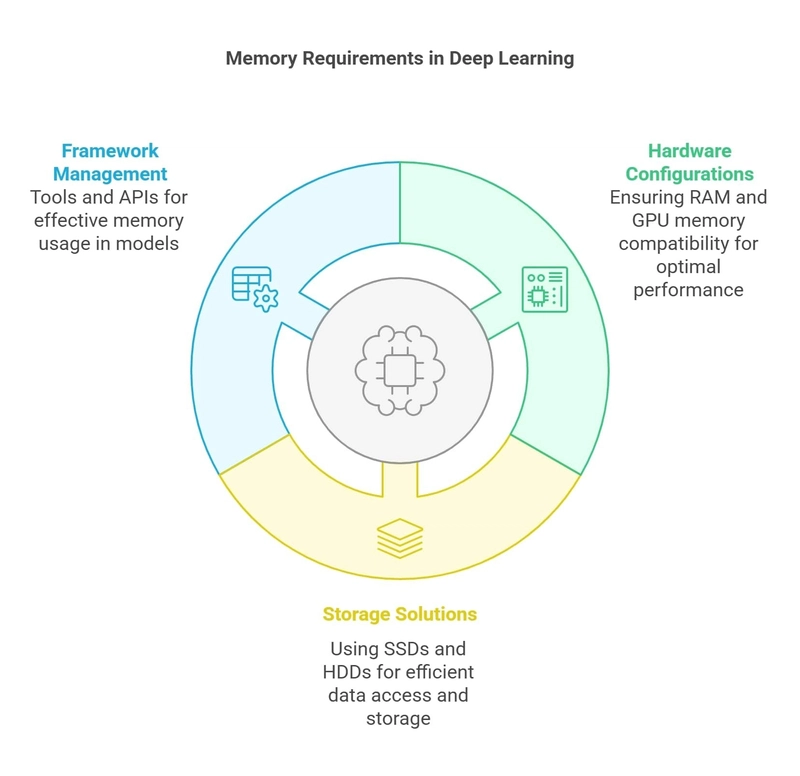

6. Hardware Considerations and Memory Requirements

Understanding the hardware considerations and memory requirements for deep learning is crucial for effectively deploying and training models. These considerations include GPU and system RAM, as well as storage options like SSDs and HDDs.

GPU and System RAM

The choice of GPU and the amount of system RAM available can significantly impact the performance and feasibility of training deep learning models. Depending on the complexity of the model and the size of the dataset, different GPU configurations may be necessary.

GPU Memory Guidelines

For small models and competitions on platforms like Kaggle, GPUs with 4–8 GB of memory, such as the 4 GB variant of the GTX 960, are often sufficient. These are adequate for tasks like MNIST and CIFAR-10 classification.

For research models, a minimum of 8 GB of GPU memory is recommended. State-of-the-art research often requires GPUs with 11 GB or more, such as the NVIDIA Titan X, to handle complex architectures and large datasets.

In medical imaging applications, approximately 12 GB of GPU memory is needed. For example, the NVIDIA Titan X can handle datasets of up to 50,000 high-resolution images.

For large models, such as those used in language translation or text generation, GPUs like the Tesla M40 or more advanced models with greater capabilities are often required to meet the high memory demands.

System RAM Recommendations

System RAM should ideally match or exceed the largest GPU memory to ensure smooth operation. For example, if using a GPU with 24 GB of memory, such as the Titan RTX, pairing it with at least 24 GB of system RAM is advisable.

A general rule of thumb is to have system RAM capacity at least 25% greater than the GPU memory. For instance, an RTX 3090 with 24 GB of GPU memory would pair well with 32 GB or more of system RAM.
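The rule of thumb is simple arithmetic. The helper below applies the 25% headroom and rounds up to the next common RAM size; the list of standard sizes is an assumption made for illustration.

```python
def recommended_system_ram_gb(gpu_memory_gb, headroom=0.25,
                              standard_sizes=(8, 16, 32, 64, 128, 256)):
    """Apply the headroom rule, then round up to the next common RAM size."""
    target = gpu_memory_gb * (1.0 + headroom)
    for size in standard_sizes:
        if size >= target:
            return size
    return target  # larger than any listed size: return the raw target

ram_for_24gb_gpu = recommended_system_ram_gb(24)  # 24 GB * 1.25 = 30 GB
ram_for_12gb_gpu = recommended_system_ram_gb(12)  # 12 GB * 1.25 = 15 GB
```

For a 24 GB GPU the target is 30 GB, which rounds up to the 32 GB configuration mentioned above.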

Storage Considerations

Storage considerations are equally important, as they affect the speed and efficiency of data access during model training and deployment. The choice between SSDs and HDDs, as well as the overall storage capacity, can significantly impact performance.

SSDs vs. HDDs

Solid-state drives (SSDs) are preferred for rapid data access and temporary dataset storage. They offer faster read and write speeds compared to hard disk drives (HDDs), making them ideal for applications requiring quick data retrieval.

HDDs, on the other hand, are suitable for long-term, less frequently accessed data. They provide larger storage capacity at a lower cost, making them a good choice for archival purposes.

Data Access

High-performance storage systems are critical for handling large datasets during training. Ensuring that data can be accessed quickly and efficiently is essential for maintaining high training speeds and minimizing bottlenecks.

7. Software Memory Management

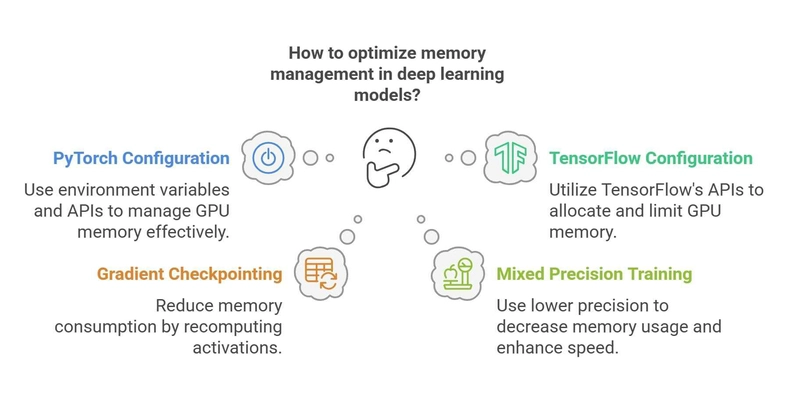

Effective software memory management is crucial for optimizing the performance of deep learning models. Frameworks like PyTorch and TensorFlow offer various tools and techniques to manage memory efficiently, ensuring that models can be trained and deployed effectively.

Framework Configuration Options

Both PyTorch and TensorFlow provide configuration options to manage memory usage and optimize performance. These options allow users to fine-tune their models and ensure efficient use of available resources.

PyTorch

In PyTorch, memory management can be configured using environment variables and APIs. The PYTORCH_CUDA_ALLOC_CONF environment variable tunes the CUDA caching allocator through options such as max_split_size_mb (the largest block size the allocator is allowed to split) and garbage_collection_threshold (the fraction of GPU memory in use above which the allocator starts reclaiming cached blocks).

PyTorch also provides APIs like torch.cuda.memory_allocated(), torch.cuda.memory_reserved(), and torch.cuda.empty_cache() to monitor and manage memory usage. The first reports the memory currently occupied by tensors, the second reports the memory held by the caching allocator, and the third releases unused cached memory back to the GPU driver.
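As a minimal sketch of how these pieces might fit together, the snippet below sets two allocator options and wraps the monitoring calls in small helpers. The helper names (`cuda_memory_report`, `release_cached_memory`) and the option values are illustrative assumptions, not recommendations, and the import is guarded so the sketch also runs on machines without PyTorch or without a GPU.

```python
import os

# Allocator options are read when CUDA is first used, so set them early.
# The values below are illustrative assumptions, not tuned settings.
os.environ.setdefault(
    "PYTORCH_CUDA_ALLOC_CONF",
    "max_split_size_mb:128,garbage_collection_threshold:0.8",
)

try:
    import torch
except ImportError:  # keep the sketch runnable without PyTorch installed
    torch = None

MIB = 1024 ** 2


def cuda_memory_report(device=0):
    """Return allocated/reserved GPU memory in MiB, or None without CUDA."""
    if torch is None or not torch.cuda.is_available():
        return None
    return {
        "allocated_mib": torch.cuda.memory_allocated(device) / MIB,
        "reserved_mib": torch.cuda.memory_reserved(device) / MIB,
    }


def release_cached_memory():
    """Hand unused cached blocks back to the GPU driver, if CUDA is present."""
    if torch is not None and torch.cuda.is_available():
        torch.cuda.empty_cache()


print(cuda_memory_report())
release_cached_memory()
```

Calling `empty_cache()` does not free memory that live tensors still occupy; it only returns blocks the allocator was keeping in reserve.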

TensorFlow

TensorFlow offers similar memory management options through its configuration APIs. The tf.config.experimental.set_memory_growth function can be used to allocate GPU memory on demand, allowing for more efficient use of resources.

Users can also call tf.config.set_logical_device_configuration to set a hard memory limit on a GPU, such as capping each GPU at 1 GB. Setting the TF_FORCE_GPU_ALLOW_GROWTH environment variable to true is another way to enable on-demand memory growth, although this setting is platform specific.
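The following sketch shows one way these two TensorFlow options could be applied. The function name `configure_gpus` and its `memory_limit_mb` parameter are hypothetical, the two options are treated as alternatives for each GPU, and the import is guarded so the snippet still runs where TensorFlow is not installed.

```python
try:
    import tensorflow as tf
except ImportError:  # keep the sketch runnable without TensorFlow installed
    tf = None


def configure_gpus(memory_limit_mb=None):
    """Enable on-demand growth, or a hard cap if memory_limit_mb is given.

    Returns the number of GPUs that were configured.
    """
    if tf is None:
        return 0
    gpus = tf.config.list_physical_devices("GPU")
    try:
        for gpu in gpus:
            if memory_limit_mb is None:
                # Allocate GPU memory as needed instead of all at once.
                tf.config.experimental.set_memory_growth(gpu, True)
            else:
                # Expose one logical device with a fixed memory budget.
                tf.config.set_logical_device_configuration(
                    gpu,
                    [tf.config.LogicalDeviceConfiguration(
                        memory_limit=memory_limit_mb)],
                )
    except RuntimeError as err:
        # Both calls must happen before the GPUs have been initialized.
        print(f"GPU configuration skipped: {err}")
        return 0
    return len(gpus)


print(configure_gpus())  # pass memory_limit_mb=1024 for a 1 GB cap instead
```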

Techniques

Several techniques can be employed to optimize memory usage in deep learning models. These techniques help reduce the memory footprint and improve training efficiency, making it possible to train larger and more complex models.

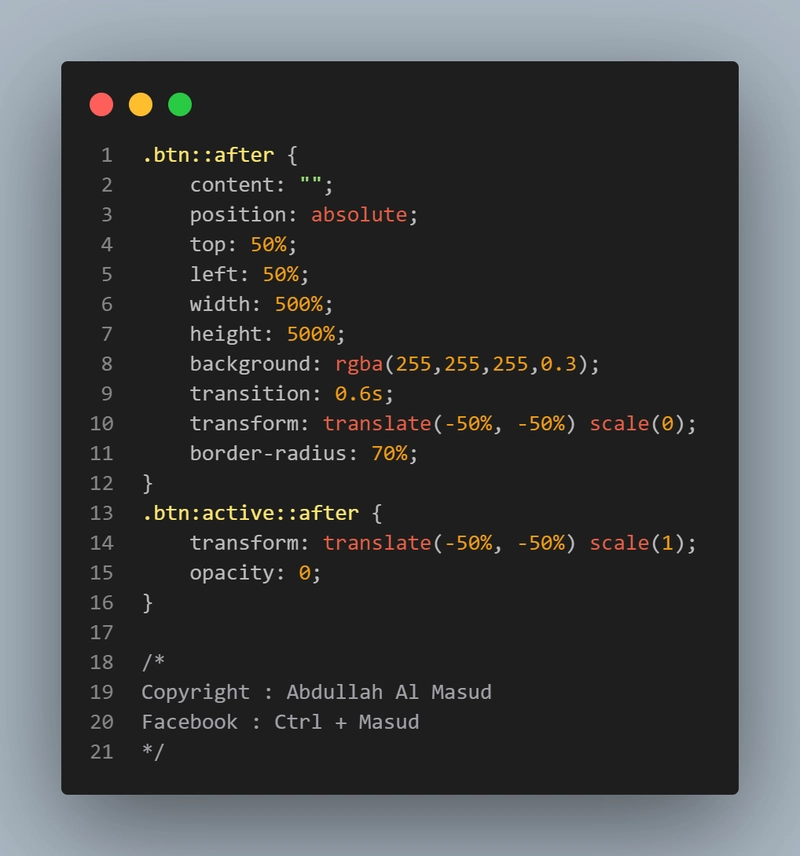

Gradient Checkpointing

Gradient checkpointing is a technique used to reduce memory consumption by recomputing activations instead of storing them. This allows for the training of deeper models, as it reduces the need to store intermediate states. However, it comes with a trade-off in computation time, as the model needs to recompute the activations during the backward pass.
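To make the trade-off concrete, here is a toy sketch in plain Python rather than a framework implementation. It assumes a chain of scalar layers y = tanh(w * x); all function names are hypothetical. The checkpointed version keeps one activation per segment and recomputes the rest during the backward pass, then the result is compared with ordinary backpropagation that stores everything.

```python
import math


def forward_layer(w, x):
    return math.tanh(w * x)


def backward_layer(w, x, grad_out):
    """Gradient w.r.t. the layer input x, given the gradient at its output."""
    y = math.tanh(w * x)
    return grad_out * (1.0 - y * y) * w


def grad_full(weights, x):
    """Baseline: store every activation, then backpropagate."""
    acts = [x]
    for w in weights:
        acts.append(forward_layer(w, acts[-1]))
    grad = 1.0
    for w, a_in in zip(reversed(weights), reversed(acts[:-1])):
        grad = backward_layer(w, a_in, grad)
    return grad, len(acts)


def grad_checkpointed(weights, x, segment):
    """Store one activation per segment and recompute the others on demand."""
    checkpoints = []
    a = x
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints.append(a)  # input to the first layer of a segment
        a = forward_layer(w, a)

    grad = 1.0
    peak_stored = len(checkpoints)
    for s in reversed(range(len(checkpoints))):
        seg_w = weights[s * segment:(s + 1) * segment]
        # Recompute the inputs of every layer in this segment.
        acts = [checkpoints[s]]
        for w in seg_w[:-1]:
            acts.append(forward_layer(w, acts[-1]))
        peak_stored = max(peak_stored, len(checkpoints) + len(acts))
        for w, a_in in zip(reversed(seg_w), reversed(acts)):
            grad = backward_layer(w, a_in, grad)
    return grad, peak_stored


weights = [0.9, 1.1, -0.8, 1.2, 0.7, -1.3, 1.0, 0.95,
           1.05, -0.9, 1.15, 0.85, -1.1, 1.0, 0.9, 1.2]
full_grad, full_stored = grad_full(weights, 0.5)
ckpt_grad, ckpt_stored = grad_checkpointed(weights, 0.5, segment=4)
print(full_stored, ckpt_stored)  # stored activations: 17 vs 8
```

Both versions produce the same gradient; the checkpointed one holds fewer activations at any moment but runs part of the forward pass a second time. In PyTorch, the `torch.utils.checkpoint` module offers this behavior for real models.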

Mixed Precision Training

Mixed precision training uses lower precision (e.g., float16) for some operations to reduce the memory footprint and speed up computations, while numerically sensitive values are kept in float32. This technique can significantly improve training efficiency, usually with little or no loss in model accuracy, making it a popular choice for resource-constrained environments.
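The sketch below uses only the standard library to illustrate two points about float16: values take half the bytes of float32, and very small gradients can round to zero. Loss scaling, shown here with an assumed scale factor of 65536, is a common companion technique and is included as an illustration rather than something the text above prescribes. The helper names are hypothetical.

```python
import struct


def to_float16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def tensor_bytes(num_values, fmt):
    """Bytes needed for num_values stored in struct format 'e' or 'f'."""
    return num_values * struct.calcsize(fmt)


# Memory: float16 ('e') needs half the bytes of float32 ('f').
print(tensor_bytes(1_000_000, "f"), tensor_bytes(1_000_000, "e"))

# Precision: a tiny gradient underflows to zero in float16 ...
tiny_grad = 1e-8
print(to_float16(tiny_grad))

# ... but survives if scaled up before the cast and scaled back afterwards.
LOSS_SCALE = 65536.0  # assumed value for illustration
recovered = to_float16(tiny_grad * LOSS_SCALE) / LOSS_SCALE
print(recovered)
```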

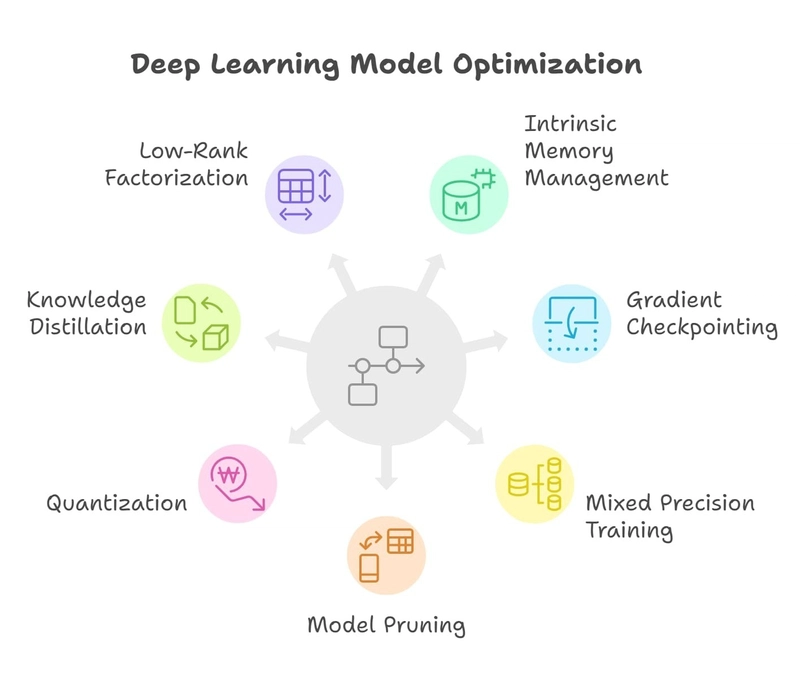

8. Model Design and Optimization Strategies

Designing and optimizing deep learning models involves various strategies to manage memory effectively and improve performance. These strategies include intrinsic memory management, gradient checkpointing, mixed precision training, model pruning and quantization, knowledge distillation, and low-rank factorization.

Intrinsic Memory Management

Intrinsic memory management refers to the built-in mechanisms within neural architectures that manage memory usage. For example, LSTMs and Transformers inherently manage memory through their gating and self-attention mechanisms, respectively.

Memory-augmented models, such as Neural Turing Machines (NTMs) and Differentiable Neural Computers (DNCs), explicitly include memory banks to store and retrieve information. These models are designed to handle tasks that require complex reasoning and long-term memory.

Optimization Techniques

Various optimization techniques can be employed to enhance the performance and efficiency of deep learning models. These techniques help in reducing memory usage and improving training and inference times.

Gradient Checkpointing

Gradient checkpointing is a technique that allows for the training of deeper models by recomputing activations rather than storing them. This reduces the memory footprint, enabling the training of larger networks. However, it increases the computational load during the backward pass, as the model needs to recompute the activations.

Mixed Precision Training

Mixed precision training involves using lower precision data types (e.g., float16) for certain operations, which reduces memory consumption and speeds up computations. This technique can lead to significant performance improvements, especially on GPUs that support mixed precision operations.

Model Pruning and Quantization

Model pruning involves removing unnecessary weights from the neural network, reducing its size and memory footprint. There are two main types of pruning: structured and unstructured. Structured pruning removes entire neurons or layers, while unstructured pruning removes individual weights.
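A minimal sketch of the two pruning styles on a small weight matrix (rows standing in for neurons) might look like the following. It assumes magnitude-based criteria, which is one common choice among several, and the function names are hypothetical.

```python
def prune_unstructured(weights, sparsity):
    """Zero the smallest-magnitude individual weights.

    Ties at the threshold may zero slightly more than the requested share.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row]
            for row in weights]


def prune_structured(weights, keep):
    """Keep the `keep` rows (neurons) with the largest squared L2 norm."""
    ranked = sorted(
        range(len(weights)),
        key=lambda i: sum(w * w for w in weights[i]),
        reverse=True,
    )
    kept = sorted(ranked[:keep])  # preserve the original row order
    return [weights[i][:] for i in kept]


layer = [
    [0.9, -0.05, 0.4],
    [0.01, 0.02, -0.03],
    [-0.7, 0.6, 0.08],
]
print(prune_unstructured(layer, sparsity=0.5))
print(prune_structured(layer, keep=2))
```

Unstructured pruning keeps the matrix shape and only introduces zeros, so it saves memory only with sparse storage; structured pruning returns a genuinely smaller matrix.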

Quantization involves converting the model's weights and activations to lower-precision data types, such as integers or lower-bit floating-point numbers. There are several quantization techniques:

- Dynamic Quantization: Converts weights to lower precision ahead of time and quantizes activations on the fly during inference.

- Static Quantization: Calibrates the quantization parameters for weights and activations on representative data before deployment, so both run at fixed lower precision.

- Quantization-Aware Training (QAT): Simulates low-precision arithmetic during training so the model learns to tolerate quantization error, usually leading to better accuracy after quantization.
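The core arithmetic shared by these techniques can be sketched as follows. This assumes symmetric per-tensor int8 quantization, which is only one of several possible schemes, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using a single shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)
print(restored)
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the error the model has to tolerate in exchange for storing one byte per weight instead of four.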

Knowledge Distillation

Knowledge distillation involves training a smaller "student" model to mimic the behavior of a larger "teacher" model. This technique allows for the deployment of more compact models without sacrificing too much accuracy, making it suitable for resource-constrained environments.
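One common way to express "mimic the teacher" is a loss between temperature-softened output distributions. The sketch below assumes that soft-target formulation with a KL divergence scaled by the squared temperature; the function names and the temperature value are illustrative assumptions, and practical setups usually combine this term with the ordinary label loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
good_student = [3.5, 1.2, 0.4]
poor_student = [0.2, 3.0, 1.0]
print(distillation_loss(good_student, teacher))
print(distillation_loss(poor_student, teacher))
```

A higher temperature flattens both distributions, so the student also learns from the relative scores the teacher assigns to the wrong classes.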

Low-Rank Factorization

Low-rank factorization involves decomposing weight matrices into lower-dimensional matrices using techniques like Singular Value Decomposition (SVD). This reduces the number of parameters in the model, leading to a smaller memory footprint and potentially faster training and inference times.
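The parameter savings follow from simple arithmetic: an m x n weight matrix replaced by an m x r factor and an r x n factor holds r(m + n) values instead of mn. The sketch below works through that count; the layer size and rank are assumed purely for illustration, and the function names are hypothetical.

```python
def dense_params(m, n):
    """Parameters in a full m x n weight matrix."""
    return m * n


def low_rank_params(m, n, rank):
    """Parameters in the factors (m x rank) and (rank x n)."""
    return rank * (m + n)


def max_useful_rank(m, n):
    """Largest rank at which the factorization still saves parameters."""
    return (m * n - 1) // (m + n)


m = n = 4096
rank = 64
full = dense_params(m, n)
factored = low_rank_params(m, n, rank)
print(full, factored, full / factored)
print(max_useful_rank(m, n))
```

Whether a given rank preserves accuracy depends on how quickly the singular values of the original matrix decay, which this count does not capture.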

9. Fundamental Memory Requirements in Deep Learning Systems

Understanding the fundamental memory requirements in deep learning systems is essential for designing and deploying efficient models. These requirements encompass both hardware configurations and software management techniques.

Hardware Memory Configurations

Proper hardware memory configurations are crucial for ensuring the smooth operation of deep learning models. These configurations involve matching the GPU memory with the appropriate amount of system RAM and utilizing the right storage solutions.

General Rules

A general rule of thumb is that system RAM should be at least 25% greater than the GPU memory. This ensures that the system can handle the data and computations required by the GPU without running into memory bottlenecks.

Examples

For instance, an NVIDIA GeForce RTX 3090 with 24 GB of GPU memory would ideally be paired with 32 GB or more of system RAM. Similarly, an RTX 3060 with 12 GB of GPU memory would work optimally with 16 GB or more of system RAM.
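The rule of thumb and both examples can be reproduced with a few lines of arithmetic. The list of standard RAM sizes and the function name below are assumptions made for the sketch; the 25% headroom comes from the rule above.

```python
import math

# Assumed set of commonly sold total RAM capacities, in GB.
STANDARD_RAM_SIZES_GB = (8, 16, 32, 64, 128, 256)


def recommended_system_ram_gb(gpu_memory_gb, headroom=0.25):
    """Smallest standard RAM size at least `headroom` above GPU memory."""
    target = gpu_memory_gb * (1 + headroom)
    for size in STANDARD_RAM_SIZES_GB:
        if size >= target:
            return size
    return math.ceil(target)  # beyond the table: fall back to the raw target


print(recommended_system_ram_gb(24))  # RTX 3090: 24 GB * 1.25 = 30 GB -> 32
print(recommended_system_ram_gb(12))  # RTX 3060: 12 GB * 1.25 = 15 GB -> 16
```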

Storage Hierarchy

The storage hierarchy in deep learning systems typically involves using SSDs for high-speed data access and HDDs for archival storage. SSDs provide faster read and write speeds, making them ideal for storing datasets that need to be accessed frequently during training. HDDs, on the other hand, offer larger storage capacity at a lower cost, making them suitable for long-term data storage.

Memory Management in Frameworks and Libraries

Effective memory management within deep learning frameworks and libraries is crucial for optimizing the performance of models. These frameworks offer various tools and APIs to monitor and manage memory usage effectively.

Monitoring Tools

In PyTorch, users can use built-in APIs like memory_allocated() and memory_reserved() to track the amount of memory used by the GPU. The empty_cache() function can be used to free up unused memory, helping to prevent out-of-memory errors.

TensorFlow provides similar functionality through its GPU configuration functions. Users can leverage tf.config.experimental.set_memory_growth to allocate GPU memory on demand and tf.config.set_logical_device_configuration to set hard memory limits, ensuring efficient use of available resources.

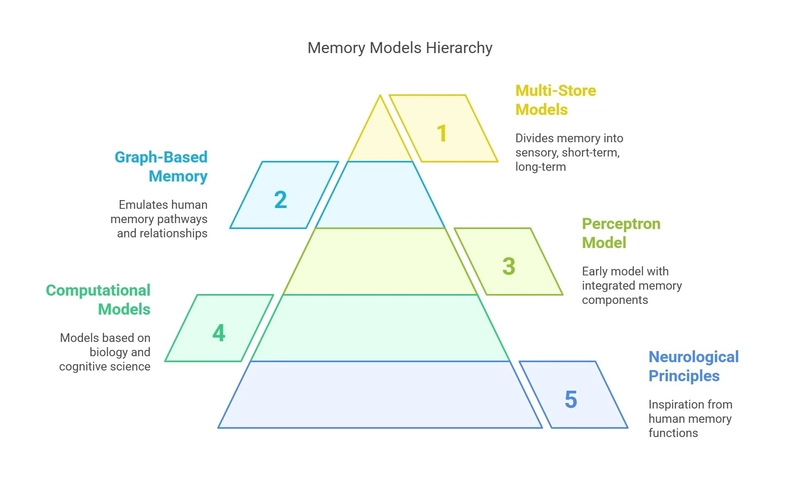

10. Biological Inspiration and Cognitive Memory Models

The design of memory options in deep learning is often inspired by biological and cognitive memory models. Understanding these inspirations can provide insights into why certain architectures are effective and how they can be further improved.

Neurological Memory Principles

Deep learning models often draw inspiration from human memory functions, which are embedded in the structures of neurons and synapses. Even simple organisms, like C. elegans with its 302 neurons, display basic memory functionalities, illustrating the fundamental role of memory in biological systems.

Human memory involves multiple types of memory, including sensory memory, short-term memory, and long-term memory. These types of memory work together to process and store information, much like the various components of deep learning memory architectures.

Computational Memory Models

Several computational models of memory have been developed, drawing on principles from biology and cognitive science. These models help in understanding and designing effective memory mechanisms for deep learning.

Rosenblatt’s Perceptron Model

Rosenblatt's Perceptron Model, one of the earliest neural network models, divides the system into several components: the S-system (input), A-system (feature detection), R-system (output), and C-system ("clock" memory). This model laid the foundation for understanding how memory can be integrated into neural networks.

Graph-Based Neural Memory

Graph-based neural memory models emulate human memory pathways through interconnected neuron networks. These models can capture complex relationships and dependencies, much like the associative nature of human memory.

Multi-Store Memory Models

Multi-store memory models divide memory into three main components: the sensory register, short-term store, and long-term store. These components process and store information at different levels, providing a framework for understanding how memory functions in both biological and computational systems.

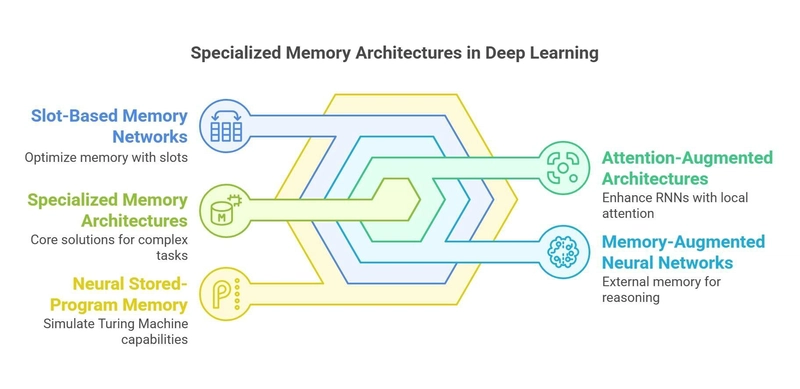

11. Specialized Memory Architectures and Augmented Models

Specialized memory architectures and augmented models offer advanced solutions for handling complex tasks and improving the performance of deep learning models. These architectures integrate various memory mechanisms to enhance their capabilities and address specific challenges.

Attention-Augmented Architectures

Attention-augmented architectures enhance standard RNNs with local attention mechanisms, allowing them to focus on relevant parts of the input data. This improves their ability to capture important contextual information and enhances their performance on tasks like machine translation and text summarization.
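As a rough illustration of local attention over RNN hidden states, the sketch below scores only the states inside a window around a chosen position and builds a context vector from them. It assumes dot-product scoring; the function name, the `center` and `window` parameters, and the toy vectors are all hypothetical.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def local_attention(query, hidden_states, center, window):
    """Attend to hidden states within `window` steps of position `center`."""
    lo = max(0, center - window)
    hi = min(len(hidden_states), center + window + 1)
    local = hidden_states[lo:hi]
    # Dot-product relevance of each nearby state to the query.
    scores = [sum(q * h for q, h in zip(query, state)) for state in local]
    weights = softmax(scores)
    # Context vector: attention-weighted average of the local states.
    context = [
        sum(w * state[i] for w, state in zip(weights, local))
        for i in range(len(query))
    ]
    return weights, context


states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
weights, context = local_attention([1.0, 0.0], states, center=1, window=1)
print(weights)
print(context)
```

States that align with the query receive larger weights, so the context vector is dominated by the most relevant nearby time steps.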

Memory-Augmented Neural Networks (MANNs)

Memory-Augmented Neural Networks (MANNs) integrate external memory modules, such as Neural Turing Machines (NTMs) and Differentiable Neural Computers (DNCs), to handle complex tasks that require reasoning and long-term memory. These models can store and retrieve information from external memory, making them suitable for tasks like few-shot learning and algorithmic reasoning.
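Retrieval from an external memory is often content-based: the controller emits a key, and memory rows similar to that key contribute most to what is read. The sketch below is a simplified version of that idea using cosine similarity and a sharpening factor `beta`; it is not a full NTM or DNC, and every name in it is hypothetical.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(u, v, eps=1e-8):
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + eps)


def read_memory(memory, key, beta=5.0):
    """Soft read: weight each memory row by its similarity to the key."""
    similarities = [cosine(key, row) for row in memory]
    weights = softmax([beta * s for s in similarities])
    read = [
        sum(w * row[i] for w, row in zip(weights, memory))
        for i in range(len(key))
    ]
    return weights, read


memory = [
    [1.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
weights, read = read_memory(memory, key=[0.0, 1.0, 0.0])
print(weights)
print(read)
```

Because the read is a weighted sum rather than a hard lookup, the whole operation stays differentiable and can be trained with gradient descent.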

Slot-Based Memory Networks

Slot-based memory networks optimize memory writing operations to enhance capacity and efficiency. By organizing memory into slots, these networks can store and retrieve information more effectively, improving their performance on tasks that require managing large amounts of data.

Neural Stored-Program Memory

Neural stored-program memory models simulate Universal Turing Machine capabilities with explicit memory storage. These models can execute complex algorithms and perform tasks that require a high level of reasoning and memory management, demonstrating the potential of combining neural networks with traditional computing paradigms.

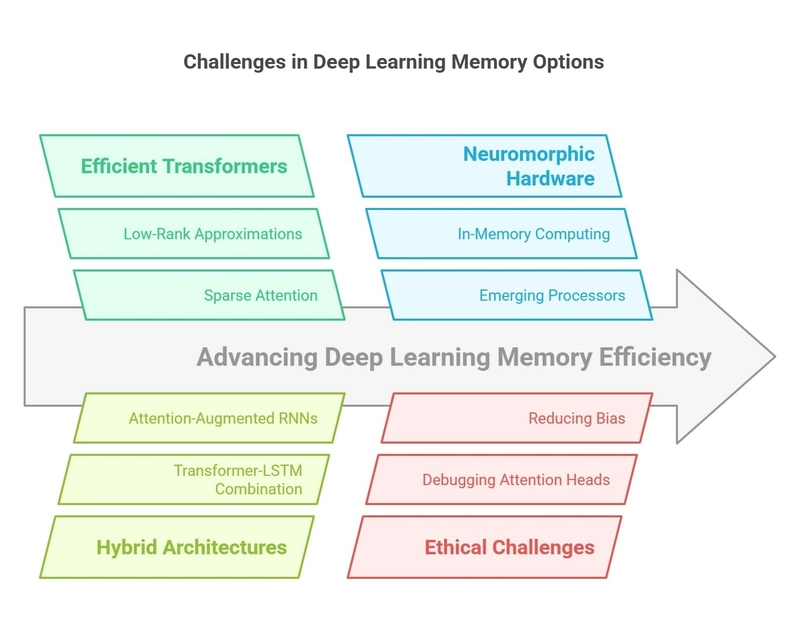

12. Future Directions and Research Challenges

The field of deep learning memory options is constantly evolving, with ongoing research aimed at improving efficiency, performance, and applicability. Several future directions and research challenges are currently at the forefront of this field.

Efficient Transformers

One major area of research is the development of efficient Transformers. Standard self-attention compares every token with every other token, so its time and memory cost grows as O(n²) in the sequence length n, which can be a bottleneck for long sequences. Researchers are exploring various techniques to address this issue:

- Sparse Attention: Models like BigBird and Longformer restrict each token to a sparse set of positions (for example, a local window plus a few global tokens), reducing the cost to roughly O(n) and making them more efficient for long sequences.

- Low-Rank Approximations: Compressing attention matrices using low-rank approximations, as in Linformer, can reduce the cost to O(n) while largely maintaining performance.
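A back-of-the-envelope calculation shows why the quadratic term matters. The sketch below compares the memory for one layer's attention scores under full attention and under a sliding-window pattern, which is a simplification of the sparse patterns used in practice. The sequence length, head count, window size, and function names are assumptions for illustration.

```python
def full_attention_bytes(seq_len, num_heads, bytes_per_value=2):
    """Attention scores for one layer and one sequence: n x n per head."""
    return seq_len * seq_len * num_heads * bytes_per_value


def windowed_attention_bytes(seq_len, window, num_heads, bytes_per_value=2):
    """Each token attends to at most `window` positions: n x w per head."""
    return seq_len * min(window, seq_len) * num_heads * bytes_per_value


MIB = 1024 ** 2
n, heads = 4096, 12  # assumed sequence length and head count, float16 scores
full = full_attention_bytes(n, heads)
sparse = windowed_attention_bytes(n, window=512, num_heads=heads)
print(full / MIB, sparse / MIB)  # 384.0 MiB vs 48.0 MiB
```

Doubling the sequence length quadruples the first figure but only doubles the second, which is the practical meaning of moving from O(n²) to O(n).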

Hybrid Architectures

Hybrid architectures that combine different memory options are another promising direction. These architectures aim to leverage the strengths of various models to create more robust and efficient solutions:

- Combining Transformers with Recurrence: Models like Transformer-XL add segment-level recurrence to the Transformer, reusing cached hidden states from earlier segments as extra context, which improves performance on long sequences.

- Attention-Augmented RNNs: Enhancing RNNs with attention mechanisms can improve their ability to capture relevant information and enhance performance on sequence-based tasks.

Neuromorphic Hardware

Neuromorphic hardware, which mimics the structure and function of biological neural networks, is an emerging area that could revolutionize deep learning memory options. Key developments include:

- In-Memory Computing: Using devices like memristors for in-memory computing can lead to more energy-efficient training and inference.

- Emerging Processors: New processors, such as Graphcore's IPU, integrate memory and compute, offering significant performance improvements for deep learning tasks.

Dynamic and Lifelong Learning

Dynamic and lifelong learning systems that adapt and expand their memory allocation over time are another area of interest. These systems aim to learn continuously from new data and experiences, much like human learning:

- Adaptive Memory Allocation: Developing systems that can dynamically allocate memory based on the task at hand can improve efficiency and performance.

- Explainable Memory Operations: Creating transparent AI systems that explain their memory operations can enhance trust and adoption in real-world applications.

Ethical & Interpretability Challenges

Addressing ethical and interpretability challenges is crucial for the responsible deployment of deep learning models with memory options. Key issues include:

- Debugging Complex Attention Heads: Understanding and debugging the attention mechanisms in Transformers is essential for improving their reliability and performance.

- Reducing Bias: Ensuring that memory-augmented models do not perpetuate biases is a significant challenge, requiring careful data curation and model design.

Standardized Benchmarks

Creating standardized benchmarks for evaluating memory efficiency across different architectures is an important research challenge. These benchmarks can help in comparing and improving the performance of various memory options, driving innovation in the field.

Final Thoughts on the Deep Learning Memory Option by Alex Nguyen

Deep learning memory options are integral to the performance and capabilities of modern neural networks. From the foundational Recurrent Neural Networks (RNNs) to the advanced Long Short-Term Memory (LSTM), Gated Recurrent Units (GRUs), and Transformer architectures, memory mechanisms have dramatically transformed how sequential data is processed.

These models facilitate the capture of long-range dependencies, hierarchical representations, and autonomous pattern extraction, significantly reducing the need for manual feature engineering.

As memory architectures evolve, they offer practitioners more sophisticated tools to tackle increasingly complex tasks across various domains. The integration of attention mechanisms, external memory modules, and novel hybrid approaches presents new avenues for enhancing model performance while managing computational efficiency.

Furthermore, understanding the hardware requirements for deploying these models - including GPU specifications, system RAM, and storage considerations - becomes crucial as we continually push the boundaries in deep learning.

Alongside improvements in architecture and hardware, strategies for efficient model management have emerged, such as using gradient checkpointing and mixed precision training. These techniques allow developers to deploy advanced models even within resource-constrained environments, thus maximizing their utility and accessibility.

The research landscape is vibrant, with significant focus on improving existing memory mechanisms and exploring innovative solutions informed by biological inspiration.

Areas like neuromorphic computing, dynamic learning systems, and addressing ethical challenges show great promise for future advancements. As artificial intelligence continues to permeate various aspects of society, the adaptability of memory architectures will be vital for ensuring effective and responsible use in practical applications.

In the fast-evolving field of artificial intelligence, a comprehensive understanding of memory mechanisms is essential for practitioners aiming to design, build, and deploy effective deep learning models.

Recognizing how different memory architectures operate, their strengths, weaknesses, and the specific use cases they are best suited for provides valuable insight into making informed decisions when selecting model architectures to tackle specific projects.

Moreover, considerations about hardware limitations, optimization strategies, and emerging research directions equip practitioners with the knowledge needed to not only enhance model performance but also operationalize their AI solutions sustainably and efficiently.

By staying attuned to industry developments and breakthroughs, professionals can position themselves at the forefront of the ongoing advancements in deep learning and memory mechanisms, ultimately contributing to innovative applications that harness the power of artificial intelligence in transformative ways.

In conclusion, the intersection of memory, computation, and artificial intelligence lays the groundwork for exciting advancements in technology.

As researchers continue to unlock the potential of these memory architectures, there lies abundant opportunity for groundbreaking applications that could reshape industries, enhance human capabilities, and foster deeper connections between humanity and technology itself.

Hi, I'm Alex Nguyen. With 10 years of experience in the financial industry, I've had the opportunity to work with a leading Vietnamese securities firm and a global CFD brokerage. I specialize in Stocks, Forex, and CFDs - focusing on algorithmic and automated trading.

I develop Expert Advisor bots on MetaTrader using MQL5, and my expertise in JavaScript and Python enables me to build advanced financial applications. Passionate about fintech, I integrate AI, deep learning, and n8n into trading strategies, merging traditional finance with modern technology.
