Cypress — How to Create a Merge Report in your Pipeline

Cypress Cloud is a powerful tool for tracking test health and identifying long-term patterns. However, for teams without the financial bandwidth to use Cypress Cloud, having access to similar analytics — such as flake percentage, test performance, and failure data — remains essential, especially as test suites grow in size and complexity.

Combine that with a test suite running in parallel, and tracking health across multiple runners can quickly become a time-consuming chore.

In this post, I’ll break down a simple pattern for generating a merged JSON report, giving you a single place to track test health and custom analytics.

This tutorial assumes your test suite runs in parallel. While it will still work for a single-runner setup, the benefits are magnified when test results are spread across jobs. If you need parallelization, check out the free cypress-split plugin.

To create the test report, follow these four steps:

1. Save the test results
2. Merge the test results
3. Add the script to the pipeline
4. Use the report

Save the Test Results

To begin, we need to save the results of each individual test run. This can be achieved using the after:run event, which Cypress fires once all specs have finished when running in cypress run mode.

Here’s an example of how to capture and save results, with each step commented along the way.

    // Cypress config file (e.g. cypress.config.js): imports used by the handler
    const fs = require('fs')
    const path = require('path')
    const { randomUUID } = require('crypto')

    // Place setupNodeEvents inside the e2e block of your Cypress config
    setupNodeEvents(on, config) {
      on('after:run', (results) => {
        // 1. Define results directory
        const resultsDir = path.join(__dirname, 'cypress/results')

        // 2. Create the results directory, if it doesn't exist
        if (!fs.existsSync(resultsDir)) {
          fs.mkdirSync(resultsDir, { recursive: true })
        }

        // 3. Name the results file
        // Each parallel runner needs its own results file.
        // Use randomUUID to ensure uniqueness
        const fileName = `test-results-${randomUUID()}.json`

        // 4. Write the results to the results directory
        const filePath = path.join(resultsDir, fileName)
        fs.writeFileSync(filePath, JSON.stringify(results, null, 2))
      })

      return config
    }

Each parallel runner will now save its own test result file to a shared results folder.
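To see why the random file name matters, here is a minimal sketch of the same save step pulled out into a standalone function so it can run outside Cypress. The helper name `saveRunResults`, the temporary directory, and the stub results objects are assumptions made for illustration; they are not part of the Cypress API.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')
const { randomUUID } = require('crypto')

// Hypothetical helper: writes one results object to its own uniquely named file
// and returns the path that was written.
const saveRunResults = (results, resultsDir) => {
  fs.mkdirSync(resultsDir, { recursive: true }) // no-op if the directory already exists
  const filePath = path.join(resultsDir, `test-results-${randomUUID()}.json`)
  fs.writeFileSync(filePath, JSON.stringify(results, null, 2))
  return filePath
}

// Simulate two parallel runners writing into the same folder.
// The results objects are stubs, not real Cypress output.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'cypress-results-'))
const first = saveRunResults({ totalTests: 3, runs: [] }, demoDir)
const second = saveRunResults({ totalTests: 5, runs: [] }, demoDir)

console.log(first !== second) // the two writes never target the same file
console.log(fs.readdirSync(demoDir).length) // one file per simulated runner
```

Because each call generates its own UUID, the runners do not need to coordinate on file names, which is what lets the merge step later collect every file from one folder.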

Merge the Test Results

Next, let’s combine those individual files into a single report. The script below will read all result files, analyze them, and generate a final merged report.

Create a new file: merge-results.js

    const fs = require('fs')
    const path = require('path')
    const process = require('process')

    // 1. Define the results directory and the merged results file path
    const resultsDir = path.join(__dirname, 'results')
    const mergedResultsFilePath = path.join(resultsDir, 'merged-results.json')

    // 2. Create your custom result format JSON
    // Below are some example metrics; customize them to suit your needs
    const overallResults = {
      totalDuration: 0,
      totalTests: 0,
      totalPassed: 0,
      totalFailed: 0,
      totalPending: 0,
      totalSkipped: 0,
      flakePercentage: 0,
    }

    const flakyTests = []
    const slowestTests = []
    const errors = []

    const mergedResults = {
      ...overallResults,
      flakyTests,
      slowestTests,
      errors,
    }

    // 3. Helper methods
    // Convert milliseconds to seconds or minutes for better readability
    const convertMSToSeconds = (ms) => (ms / 1000).toFixed(2)
    const convertMSToMinutes = (ms) => (ms / 60000).toFixed(2)

    // Core Method
    const mergeResults = () => {
      // 4. Check if the results directory exists, exit if not
      if (!fs.existsSync(resultsDir)) {
        console.error('❌ No results directory found.')
        process.exit(1)
      }

      const resultFiles = fs
        .readdirSync(resultsDir)
        .filter((file) => file.endsWith('.json') && file !== 'merged-results.json')

      // 5. Loop through and read each individual result file
      // Save off the results into a JSON object
      resultFiles.forEach((file) => {
        const filePath = path.join(resultsDir, file)
        const data = JSON.parse(fs.readFileSync(filePath, 'utf8'))

        // Skip files that do not contain a runs array
        if (!Array.isArray(data.runs)) return

        // 6. Loop through each test run and extract the relevant metrics
        data.runs.forEach((run) => {
          run.tests.forEach((test) => {
            // Flaky Tests
            // A test is considered flaky if it has multiple attempts and at least one of them passed
            if (
              test.attempts.length > 1 &&
              test.attempts.some((attempt) => attempt.state === 'passed')
            ) {
              flakyTests.push({ test: test.title.join(' - ') })
            }

            // Errors
            // A test is considered to have failed if its state is 'failed';
            // its displayError holds the failure message
            if (test.state === 'failed') {
              errors.push({
                test: test.title.join(' - '),
                error: test.displayError,
              })
            }

            // Slowest Tests
            // Save off all tests regardless of their state
            slowestTests.push({
              test: test.title.join(' - '),
              duration: convertMSToSeconds(test.duration),
            })
          })
        })

        // Overall Results
        mergedResults.totalDuration += data.totalDuration
        mergedResults.totalTests += data.totalTests
        mergedResults.totalPassed += data.totalPassed
        mergedResults.totalFailed += data.totalFailed
        mergedResults.totalPending += data.totalPending
        mergedResults.totalSkipped += data.totalSkipped
      })

      // 7. Now that all files have been processed, we can calculate overall metrics
      // Convert total duration into a readable format
      mergedResults.totalDuration = `${convertMSToMinutes(mergedResults.totalDuration)} min`

      // Sort the slowest tests by duration, slowest first
      // (durations are strings from toFixed, so convert them before comparing)
      slowestTests.sort((a, b) => Number(b.duration) - Number(a.duration))

      // Create an overall flake percentage for the run
      // (guard against dividing by zero when no tests were found)
      mergedResults.flakePercentage =
        mergedResults.totalTests > 0
          ? ((flakyTests.length / mergedResults.totalTests) * 100).toFixed(2)
          : '0.00'

      // 8. Save the merged results to a file
      fs.writeFileSync(mergedResultsFilePath, JSON.stringify(mergedResults, null, 2))
      console.log(`✅ Merged test results saved at ${mergedResultsFilePath}`)
    }

    // 9. Call the mergeResults function to execute the script
    mergeResults()
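The flaky-test rule is the part of the script most worth checking in isolation. Below is a small sketch that applies the same condition to made-up test entries. The helper name `isFlaky` and the sample data are assumptions for illustration; the entries only mimic the `title` and `attempts` fields the merge script reads.

```javascript
// Hypothetical helper mirroring the flaky check in merge-results.js:
// more than one attempt, and at least one attempt passed.
const isFlaky = (test) =>
  Array.isArray(test.attempts) &&
  test.attempts.length > 1 &&
  test.attempts.some((attempt) => attempt.state === 'passed')

// Made-up test entries shaped like the fields the merge script reads.
const sampleTests = [
  // Failed once, then passed on retry: flaky
  { title: ['Users', 'creates a user'], attempts: [{ state: 'failed' }, { state: 'passed' }] },
  // Passed on the first attempt: healthy
  { title: ['Users', 'deletes a user'], attempts: [{ state: 'passed' }] },
  // Failed on every attempt: a real failure, not a flake
  { title: ['Users', 'edits a user'], attempts: [{ state: 'failed' }, { state: 'failed' }] },
]

const flakyTitles = sampleTests.filter(isFlaky).map((test) => test.title.join(' - '))
console.log(flakyTitles) // only the retried-then-passed test is reported
```

Note that this rule only finds flakes when test retries are enabled; with retries off, every test has a single attempt and nothing is ever classified as flaky.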

Add the Script to the Pipeline

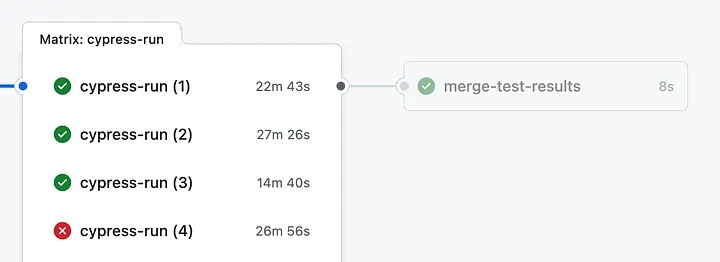

Now that the merge-results.js script is created, let’s utilize it in the CI/CD pipeline. Add a new merge job and ensure it runs after all Cypress run jobs have completed. Below is an example using GitHub Actions.

    # Both jobs go under the top-level jobs: key of your workflow

    # Run Cypress Test Job
    cypress-run:
      runs-on: your-runner # Your runner
      strategy:
        fail-fast: false
        matrix:
          containers: [1, 2, 3, 4] # Number of parallel jobs, uses cypress-split
      steps:
        - name: Checkout
          uses: actions/checkout@v4

        # 1. Run Cypress
        - name: Run Cypress Tests
          run: npm run cy:run # Your run command
          env:
            SPLIT: ${{ strategy.job-total }}
            SPLIT_INDEX: ${{ strategy.job-index }}

        # 2. Upload Cypress Results
        - name: Upload Cypress Results
          uses: actions/upload-artifact@v4
          if: success() || failure()
          with:
            name: cypress-results-${{ strategy.job-index }}
            path: your-folder/cypress/results # Your results folder path
            retention-days: 1

    # Merge Cypress Test Results Job
    merge-test-results:
      runs-on: your-runner # Your runner
      needs: [cypress-run] # Ensure this job runs after all cypress run jobs have finished
      if: success() || failure()
      strategy:
        fail-fast: false
      steps:
        - name: Checkout
          uses: actions/checkout@v4

        # 3. Download Cypress Results, since they're saved from the previous job
        - name: Download Cypress Results
          uses: actions/download-artifact@v4
          with:
            pattern: cypress-results-*
            path: your-folder/cypress/results # Your results folder path
            merge-multiple: true

        # 4. Merge Cypress Results - Call the merge script
        - name: Merge Test Results
          run: node your-folder/cypress/merge-results.js # Your merge script path

        # 5. Upload Merged Results
        - name: Upload Merged Report
          uses: actions/upload-artifact@v4
          with:
            overwrite: true
            path: your-folder/cypress/results/merged-results.json # Your merged results output file
            if-no-files-found: ignore
            retention-days: 7

The high-level job structure should look something like this:

Use the Report

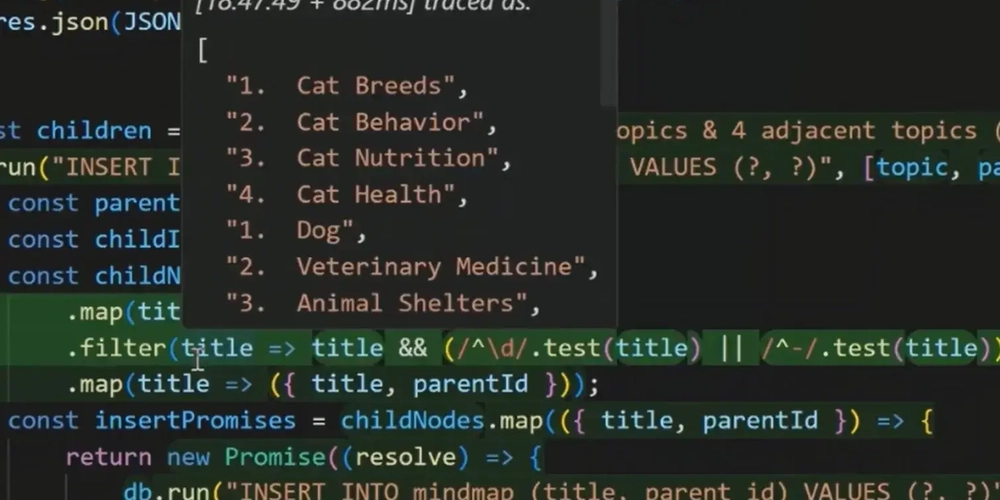

Each time your pipeline runs, it will now generate a merged JSON file as a pipeline artifact to be downloaded. Here’s a sample output:

    // merged-results.json
    {
      "totalDuration": "45.21 min",
      "totalTests": 120,
      "totalPassed": 117,
      "totalFailed": 3,
      "totalPending": 0,
      "totalSkipped": 0,
      "flakePercentage": "1.25",
      "flakyTests": [
        {
          "test": "Test Number 1 - should create a new user"
        },
        {
          "test": "Test Number 12 - should delete an existing user"
        }
      ],
      "slowestTests": [
        {
          "test": "Test Group 1 - Test Number 14 - should edit an existing user",
          "duration": "214.14"
        },
        {
          "test": "Test Group 3 - Test Number 2 - should paginate the user list",
          "duration": "174.29"
        }
      ],
      "errors": [
        {
          "test": "Test Group 21 - Test Number 3 - should submit a new bill for an existing user",
          "error": "AssertionError: Timed out retrying after 4000ms: expected '27251' to equal '27,251'"
        },
        {
          "test": "Test Number 13 - should invoice a user",
          "error": "CypressError: Timed out retrying after 7500ms: `cy.wait()` timed out waiting `7500ms` for the 1st request to the route: `interceptMyUser`."
        }
      ]
    }

With this data in a single file, your team can quickly identify flaky tests, bottlenecks, and failures without digging through multiple CI job logs. The report is easy to share and gives a clear, direct view of suite health, which makes it a useful first stop when troubleshooting a CI/CD run.
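As one way to consume the report, the sketch below pulls the slowest few tests out of a parsed report object. The helper name `topSlowest` is hypothetical, and the inline `report` object stands in for the result of reading and parsing merged-results.json.

```javascript
// Hypothetical helper: returns the n slowest entries from a merged report.
// Durations are stored as strings (from toFixed), so convert them before comparing.
// The spread copy keeps the original report array untouched.
const topSlowest = (report, n) =>
  [...report.slowestTests]
    .sort((a, b) => Number(b.duration) - Number(a.duration))
    .slice(0, n)

// Inline stand-in for JSON.parse(fs.readFileSync('merged-results.json', 'utf8')).
const report = {
  slowestTests: [
    { test: 'paginates the user list', duration: '174.29' },
    { test: 'edits an existing user', duration: '214.14' },
    { test: 'creates a new user', duration: '9.80' },
  ],
}

// Print a short, human-readable list for a job summary or chat message.
for (const { test, duration } of topSlowest(report, 2)) {
  console.log(`${duration}s  ${test}`)
}
```

Sorting again here is deliberate: it keeps the helper correct even if the report was produced by a customized merge script that did not sort the list.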

Conclusion

By generating your own test analytics, you unlock insight into test reliability and performance — without relying on third-party tools. This kind of visibility is essential to keeping a large test suite healthy and maintainable.

Now that this initial data is available, for future iterations one could consider expanding the logic to:

- Integrate alerts for flake thresholds or long runtimes

- Track results across multiple runs and upload into an internal dashboard

- Compare each report against the previous run's results to spot regressions immediately
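As a rough starting point for the first idea, here is a sketch of a flake-threshold check. The helper name `checkFlakeThreshold`, the 1% limit, and the inline report object are assumptions for illustration; in a real pipeline the report would come from merged-results.json.

```javascript
// Hypothetical helper: compares the report's flake percentage to a chosen limit.
const checkFlakeThreshold = (report, maxPercent) => {
  const actual = Number(report.flakePercentage) // stored as a string by toFixed
  const ok = Number.isFinite(actual) && actual <= maxPercent
  return { ok, message: `Flake rate ${report.flakePercentage}% (limit ${maxPercent}%)` }
}

// Inline stand-in for the parsed merged-results.json, checked against a 1% limit.
const result = checkFlakeThreshold({ flakePercentage: '1.67' }, 1)
console.log(result.ok ? `✅ ${result.message}` : `❌ ${result.message}`)

// In a pipeline step, setting process.exitCode = 1 when result.ok is false
// would mark the job as failed; sending a chat or email alert is another option.
```

Treating a missing or non-numeric percentage as a failure is a design choice: it surfaces a broken report instead of silently passing the check.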

You don’t need a full observability platform to spot trends — just a clever script and a bit of CI magic. Looking forward to hearing your metric implementations.

Happy testing!
