Connecting RDBs and Search Engines — Chapter 4 Part 1


Chapter 4 (Part 1): Outputting Kafka CDC Data to Console with Flink

In this chapter, we will process the CDC data delivered to Kafka using Flink SQL and print the output to the console. Before persisting to OpenSearch, we visually verify that Flink is correctly consuming and processing the data from Kafka.

1. Prerequisites

Ensure the following components are already running:

- PostgreSQL

- Apache Kafka

- Kafka Connect

- ZooKeeper

- Flink

Refer to Chapter 3 for details on setting up the Debezium → Kafka pipeline.

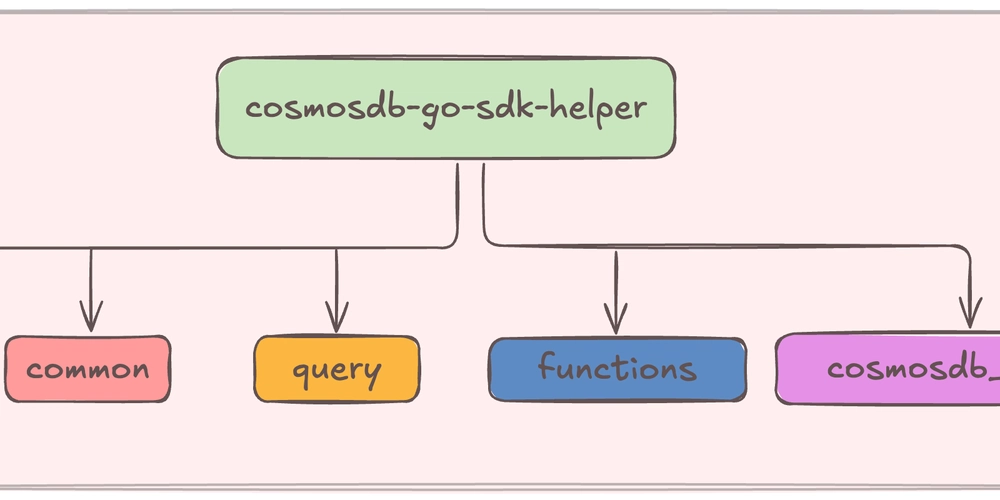

2. Architecture Overview

graph TD
  subgraph source
    PG[PostgreSQL]
  end
  subgraph Change Data Capture
    DBZ[Debezium Connect]
  end
  subgraph stream platform
    TOPIC[Kafka Topic]
  end
  subgraph stream processing
    FLINK[Flink SQL Kafka Source]
    PRINT[Flink Print Sink Console]
  end
  PG --> DBZ --> TOPIC --> FLINK --> PRINT

We verify that CDC events flow from Kafka to Flink and appear in the standard output in a format like +I[...].

3. Add Kafka Connector to Flink

Add the Kafka SQL connector JAR to Flink:

flink-sql-connector-kafka-3.3.0-1.19.jar

⚠️ To avoid interference with Flink core libraries, place the JAR in flink/lib/ext/ and copy it into /opt/flink/lib/ at startup.

docker-compose.yaml Excerpt

flink-jobmanager:
  image: flink:1.19
  command: ["/bin/bash", "/jobmanager-entrypoint.sh"]
  volumes:
    - ./flink/sql:/opt/flink/sql
    - ./flink/lib/ext:/opt/flink/lib/ext
    - ./flink/jobmanager-entrypoint.sh:/jobmanager-entrypoint.sh

jobmanager-entrypoint.sh Example

#!/bin/bash
set -e
cp /opt/flink/lib/ext/*.jar /opt/flink/lib/
exec /docker-entrypoint.sh jobmanager

Apply a similar setup to TaskManager to copy the JAR.
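A TaskManager counterpart could look like the sketch below. It assumes a file named taskmanager-entrypoint.sh (a hypothetical name mirroring the JobManager script) that is mounted into the flink-taskmanager service together with ./flink/lib/ext, and it relies on the official image's /docker-entrypoint.sh accepting taskmanager as its mode. The EXT_DIR and LIB_DIR variables are not part of the original setup; they only make the copy step easy to try outside the container.

```shell
#!/bin/bash
# taskmanager-entrypoint.sh: hypothetical counterpart to jobmanager-entrypoint.sh
set -e

# Paths used in this chapter; overridable only so the copy step can be tried locally.
EXT_DIR="${EXT_DIR:-/opt/flink/lib/ext}"
LIB_DIR="${LIB_DIR:-/opt/flink/lib}"

stage_connector_jars() {
  # Copy the connector JARs next to Flink's core libraries.
  cp "$EXT_DIR"/*.jar "$LIB_DIR"/
}

# Inside the Flink image, stage the JARs and hand over to the official entrypoint.
if [ -x /docker-entrypoint.sh ] && [ -d "$EXT_DIR" ]; then
  stage_connector_jars
  exec /docker-entrypoint.sh taskmanager
else
  echo "Flink entrypoint or $EXT_DIR not found; TaskManager not started." >&2
fi
```

The TaskManager service would then use this script as its command, in the same way the JobManager excerpt does.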

4. Write Flink SQL Script

Save the following SQL in flink/sql/cdc_to_console.sql:

CREATE TABLE cdc_source (
  id INT,
  message STRING,
  PRIMARY KEY (id) NOT ENFORCED
) WITH (
  'connector' = 'kafka',
  'topic' = 'dbserver1.public.testtable',
  'properties.bootstrap.servers' = 'kafka:9092',
  'format' = 'debezium-json',
  'scan.startup.mode' = 'earliest-offset'
);

CREATE TABLE print_sink (
  id INT,
  message STRING
) WITH (
  'connector' = 'print'
);

INSERT INTO print_sink
SELECT * FROM cdc_source;

Explanation of Flink SQL Tables

Kafka Source Table: cdc_source

| Property | Description |
|---|---|
| connector = 'kafka' | Reads data from a Kafka topic |
| format = 'debezium-json' | Handles JSON messages in Debezium format |
| scan.startup.mode = 'earliest-offset' | Reads from the earliest offset |
| PRIMARY KEY (...) NOT ENFORCED | Defines a primary key without enforcement |

Console Sink Table: print_sink

- Uses Flink's built-in print connector to write each row to stdout.
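When reading the console output, it helps to know how the debezium-json format translates Debezium's op field into Flink changelog rows, since the print sink prefixes every row with its row kind. The lookup below is a small sketch of that mapping as described in the Flink documentation; the function name op_to_row_kinds is made up for illustration.

```shell
#!/bin/bash
# Map a Debezium "op" code to the row-kind prefixes that the print sink shows.
op_to_row_kinds() {
  case "$1" in
    c|r) echo "+I" ;;      # create / snapshot read -> INSERT (built from "after")
    u)   echo "-U +U" ;;   # update -> UPDATE_BEFORE ("before") + UPDATE_AFTER ("after")
    d)   echo "-D" ;;      # delete -> DELETE (built from "before")
    *)   echo "unknown op: $1" >&2; return 1 ;;
  esac
}

# Print the mapping for the four op codes.
for op in c r u d; do
  printf '%s => %s\n' "$op" "$(op_to_row_kinds "$op")"
done
```

An update therefore shows up as two lines: a -U[...] row with the old values followed by a +U[...] row with the new ones. For PostgreSQL sources, the Flink documentation notes that the table should use REPLICA IDENTITY FULL so that update and delete events carry a complete before image.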

5. Run the Flink SQL Job

docker compose exec flink-jobmanager bash

sql-client.sh -f /opt/flink/sql/cdc_to_console.sql

Verify Running Jobs

docker compose exec flink-jobmanager bash

flink list

Expected output:

------------------ Running/Restarting Jobs -------------------

: insert-into_default_catalog.default_database.print_sink (RUNNING)

6. Check the Output

docker compose logs flink-taskmanager

Expected output:

flink-taskmanager-1 | +I[1, CDC test row]

Why TaskManager?

- The print sink outputs to the logs of the TaskManager running the job.

- Use docker compose logs flink-taskmanager to view the output.
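Because the TaskManager log also contains Flink's own startup and status messages, it can be convenient to keep only the changelog rows. The helper below is a sketch: filter_changelog_rows is a hypothetical name, and the sample lines stand in for real docker compose logs output (the second one is invented).

```shell
#!/bin/bash
# Keep only lines that contain a print-sink row such as +I[...], -U[...], +U[...] or -D[...].
filter_changelog_rows() {
  grep -E '(\+I|-U|\+U|-D)\['
}

# Sample input standing in for `docker compose logs flink-taskmanager`.
filter_changelog_rows <<'EOF'
flink-taskmanager-1 | +I[1, CDC test row]
flink-taskmanager-1 | Starting taskmanager (invented log line)
EOF
```

Against the running stack, the same filter would be applied as docker compose logs flink-taskmanager | filter_changelog_rows.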

7. Troubleshooting

SQL Client Doesn’t Start

- Check if JobManager is running:

docker compose logs flink-jobmanager

No CDC Output Appears

- Is the Kafka topic name correct?

- Is there data in the Kafka topic?

- Is scan.startup.mode set to earliest-offset?

Check topic content:

kafka-console-consumer --bootstrap-server localhost:9092 \
  --topic dbserver1.public.testtable \
  --from-beginning

Bonus: Observing Parallelism

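If you scale Flink to multiple TaskManagers and run the job with a parallelism greater than 1, the output is distributed across their logs. With a single-partition topic, typically only one source subtask receives data, so the rows may still appear in just one log.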

This allows you to observe parallel execution, slot allocation, and subtask distribution.
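To see how rows are spread across TaskManagers, one option is to count the changelog rows per container. The sketch below assumes the "container name | message" layout shown in the expected output earlier and the N> subtask prefix that the print sink adds when its parallelism is greater than 1. count_rows_per_container is a hypothetical helper, and the sample lines are invented.

```shell
#!/bin/bash
# Count print-sink rows per container from `docker compose logs` style input.
count_rows_per_container() {
  grep -E '(\+I|-U|\+U|-D)\[' |
    awk -F'|' '{ gsub(/ /, "", $1); rows[$1]++ } END { for (c in rows) print c, rows[c] }' |
    sort
}

# Invented sample: two TaskManagers, each running one sink subtask.
count_rows_per_container <<'EOF'
flink-taskmanager-1 | 1> +I[1, CDC test row]
flink-taskmanager-2 | 2> +I[2, second row]
flink-taskmanager-1 | 1> +I[3, third row]
EOF
```

If the compose file allows scaling the service, a second TaskManager can be started with docker compose up -d --scale flink-taskmanager=2, after which docker compose logs flink-taskmanager | count_rows_per_container gives the per-container counts.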

In this chapter, we confirmed the flow from Kafka → Flink → console output. Next, we will write the results to OpenSearch for persistence.

(Coming soon: Chapter 4 Part 2 — Integrating Kafka CDC Data with OpenSearch Using Flink)
