Comprehensive Breakdown of a Serverless Resume Uploader Project on AWS

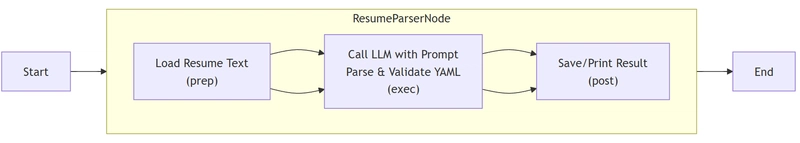


This project demonstrates a serverless architecture for uploading and processing resumes using AWS S3, Lambda, DynamoDB, CloudFormation, and SAM. Before we get into the technical details, let's look at what an event-driven architecture (EDA) is and what makes a system serverless.

Serverless and EDA are two architectural patterns that complement each other beautifully.

Serverless doesn’t mean "no servers"; it means you don’t manage the servers. The cloud provider handles provisioning, scaling, and maintenance, so you can focus on writing and deploying the business logic that differentiates you from the rest.

In AWS, the most popular serverless compute service is AWS Lambda.

Event-Driven Architecture (EDA)

EDA is a system design where events (changes in state or updates) trigger actions. Events are typically emitted, captured, and reacted to using components like event producers, event routers (brokers), and event consumers.

Think of it like this:

Producer: "Hey! A new user signed up!"

Router: "Got it! Who needs to know about this?"

Consumers: (e.g., send welcome email, add to CRM, log signup)
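To make the three roles concrete, here is a minimal in-memory sketch in Python. The `EventRouter` class and the `user.signed_up` event name are hypothetical illustrations, not an AWS API. In this project the roles map roughly onto S3 (producer), S3 event notifications (router), and the Lambda function (consumer).

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventRouter:
    """Hypothetical in-memory router: maps event types to subscribed consumers."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        # Fan the event out to every consumer; the producer never knows who they are.
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


if __name__ == "__main__":
    router = EventRouter()
    log: List[str] = []
    # Consumers register interest in the event type.
    router.subscribe("user.signed_up", lambda e: log.append(f"welcome email to {e['email']}"))
    router.subscribe("user.signed_up", lambda e: log.append(f"CRM record for {e['email']}"))
    router.subscribe("user.signed_up", lambda e: log.append(f"signup logged for {e['email']}"))

    # Producer: "Hey! A new user signed up!"
    delivered = router.publish("user.signed_up", {"email": "ada@example.com"})
    print(delivered, log)
```

The key property is decoupling: adding a fourth consumer means one more `subscribe` call, with no change to the producer.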

Now that we have that out of the way, below is a detailed, step-by-step explanation of the project architecture, covering infrastructure setup, deployment, automation, and best practices.

1. Project Overview

Objective

Build a serverless system that:

- Accepts resume uploads via S3.
- Triggers a Lambda function on file upload.
- Stores metadata (filename, timestamp, size) in DynamoDB.
- Uses Infrastructure as Code (IaC) for reproducibility.

Key AWS Services Used

| Service | Purpose |
| --- | --- |
| AWS S3 | Stores uploaded resumes |
| AWS Lambda | Processes files and updates metadata |
| IAM | Security permissions |
| AWS CloudFormation | Infrastructure as Code (IaC) |
| AWS SAM | Simplifies serverless deployments |
| DynamoDB | Stores file metadata (NoSQL) |

2. Step-by-Step Execution Breakdown

Phase 1: Infrastructure Deployment (Core AWS Resources)

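Goal: Deploy the S3 bucket and DynamoDB table in their own stack to avoid circular dependencies: the bucket's event notification needs the function's ARN, while the function's invoke permission and IAM policy need the bucket's ARN.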

What Happened?

- CloudFormation template (`infrastructure.yaml`) defined:
  - S3 bucket (`UploadBucket`) for storing resumes.
  - DynamoDB table (`FileMetadataTable`) for tracking metadata.
  - IAM roles for least-privilege access.

- Deployed via CLI:

```
aws cloudformation deploy \
  --template-file infrastructure.yaml \
  --stack-name ServerlessResumeUploader-Infra \
  --capabilities CAPABILITY_IAM
```

- CloudFormation created the resources in dependency order, provisioning independent resources (such as the bucket and the table) in parallel.

- Outputs (`BucketName`, `TableName`) were stored for cross-stack reference.

- Verification:

```
aws cloudformation describe-stacks --stack-name ServerlessResumeUploader-Infra
```
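The stack outputs are what link the two stacks together. Below is a minimal Python sketch, assuming the infrastructure stack declares outputs named `BucketName` and `TableName` as listed above. It turns the JSON returned by `describe-stacks` (or boto3's `describe_stacks`, which has the same shape) into the `--parameter-overrides` string used in Phase 2. Both function names are hypothetical.

```python
from typing import Dict, Iterable


def stack_outputs(describe_stacks_response: dict) -> Dict[str, str]:
    """Map OutputKey -> OutputValue for the first stack in a describe-stacks response."""
    stacks = describe_stacks_response.get("Stacks", [])
    if not stacks:
        raise ValueError("response contains no stacks")
    return {o["OutputKey"]: o["OutputValue"] for o in stacks[0].get("Outputs", [])}


def parameter_overrides(
    outputs: Dict[str, str], keys: Iterable[str] = ("BucketName", "TableName")
) -> str:
    """Build the 'Key=Value Key=Value' string for `sam deploy --parameter-overrides`."""
    keys = tuple(keys)
    missing = [k for k in keys if k not in outputs]
    if missing:
        # Fail early instead of deploying the Lambda stack with empty parameters.
        raise KeyError(f"missing stack outputs: {missing}")
    return " ".join(f"{k}={outputs[k]}" for k in keys)
```

With boto3 this would be called as `parameter_overrides(stack_outputs(boto3.client("cloudformation").describe_stacks(StackName="ServerlessResumeUploader-Infra")))`. If you only want to eyeball the values, the CLI can filter them directly with `--query "Stacks[0].Outputs"`.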

Phase 2: Lambda Function Deployment (Processing Logic)

Goal: Deploy the Lambda function that processes uploads and updates DynamoDB.

What Happened?

- AWS SAM template (`lambda.yaml`) defined:
  - Lambda function (`FileMetadataFunction`) in Python.
  - IAM policies (auto-generated by SAM for S3 + DynamoDB access).
  - Environment variables (DynamoDB table name, bucket name).
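The function's code isn't shown here, so the following is only a sketch of what `FileMetadataFunction` could look like. It assumes the SAM template passes the table name in an environment variable called `TABLE_NAME` (a hypothetical name) and that the table's partition key is `fileId`. It uses the S3 object key as `fileId`, so a retried event overwrites the same item instead of creating a duplicate, and it stores the size in bytes rather than the "250KB" string shown in Phase 4.

```python
import os
from typing import Any, Dict, List
from urllib.parse import unquote_plus


def build_metadata_item(record: Dict[str, Any]) -> Dict[str, Any]:
    """Turn one S3 event record into the DynamoDB item we store."""
    s3_info = record["s3"]
    # Object keys arrive URL-encoded in S3 events (spaces become '+').
    key = unquote_plus(s3_info["object"]["key"])
    return {
        "fileId": key,  # assumption: the object key doubles as the partition key
        "fileName": key.rsplit("/", 1)[-1],
        "uploadTime": record["eventTime"],
        "fileSize": int(s3_info["object"].get("size", 0)),  # bytes
    }


def lambda_handler(event: Dict[str, Any], context: Any, table: Any = None) -> Dict[str, Any]:
    """Entry point for S3 ObjectCreated notifications."""
    if table is None:
        import boto3  # bundled with the Lambda Python runtime

        # TABLE_NAME is a hypothetical variable name set by the SAM template.
        table = boto3.resource("dynamodb").Table(os.environ["TABLE_NAME"])

    items: List[Dict[str, Any]] = []
    for record in event.get("Records", []):
        item = build_metadata_item(record)
        table.put_item(Item=item)
        items.append(item)
    return {"processed": len(items)}
```

The optional `table` argument exists only so the logic can be exercised with a stub; Lambda itself calls `lambda_handler(event, context)`. In production you would typically create the DynamoDB resource once at module scope so warm invocations reuse it.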

- Deployed via SAM CLI:

```
sam deploy \
  --template-file lambda.yaml \
  --stack-name ServerlessResumeUploader-Lambda \
  --parameter-overrides BucketName=... TableName=... \
  --capabilities CAPABILITY_IAM
```

- SAM automatically:
  - Packaged the Lambda code into a .zip file.
  - Uploaded the package to an S3 artifacts bucket.
  - Generated the expanded CloudFormation template.
  - Deployed the stack using CloudFormation.

- Behind the Scenes:
  - SAM simplified the Lambda definition (vs. raw CloudFormation).
  - SAM generated the IAM policies from its policy templates (no hand-written policy JSON needed).
  - The cross-stack references (bucket and table names) were passed in as template parameters.

Phase 3: Permission & Event Configuration

Goal: Allow S3 to trigger Lambda when a file is uploaded.

What Happened?

- Granted S3 permission to invoke Lambda:
  - Adds a statement to the function's resource-based policy that lets the S3 service invoke it; `--source-arn` restricts this to events from this bucket.

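```
# --statement-id is required; any label that is unique within the function's policy works
aws lambda add-permission \
  --function-name FileMetadataFunction \
  --statement-id s3-invoke \
  --principal s3.amazonaws.com \
  --action lambda:InvokeFunction \
  --source-arn arn:aws:s3:::upload-bucket-name
```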

- Configured S3 event notification:

```
aws s3api put-bucket-notification-configuration \
  --bucket upload-bucket-name \
  --notification-configuration '{
    "LambdaFunctionConfigurations": [{
      "LambdaFunctionArn": "arn:aws:lambda:...",
      "Events": ["s3:ObjectCreated:*"]
    }]
  }'
```
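The same two steps can be scripted with boto3. This sketch assumes you pass in already-created clients (for example `boto3.client("lambda")` and `boto3.client("s3")`); the helper names and the statement ID `s3-invoke-file-metadata` are hypothetical. The order matters: S3 checks that it is allowed to invoke the destination when the notification configuration is saved, so the permission goes first. Also note that `put_bucket_notification_configuration` replaces the bucket's entire notification configuration, so any existing notifications would need to be included in the payload.

```python
from typing import Any, Dict


def notification_config(function_arn: str) -> Dict[str, Any]:
    """Build the NotificationConfiguration payload used by the CLI command above."""
    return {
        "LambdaFunctionConfigurations": [
            {"LambdaFunctionArn": function_arn, "Events": ["s3:ObjectCreated:*"]}
        ]
    }


def wire_s3_to_lambda(
    lambda_client: Any,
    s3_client: Any,
    bucket: str,
    function_name: str,
    function_arn: str,
    account_id: str,
) -> None:
    """Grant S3 invoke permission, then attach the bucket notification."""
    lambda_client.add_permission(
        FunctionName=function_name,
        StatementId="s3-invoke-file-metadata",  # hypothetical; must be unique in the policy
        Action="lambda:InvokeFunction",
        Principal="s3.amazonaws.com",
        SourceArn=f"arn:aws:s3:::{bucket}",
        # Bucket ARNs carry no account ID, so pin the account that owns the bucket.
        SourceAccount=account_id,
    )
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=notification_config(function_arn),
    )
```

Passing the clients in, rather than creating them inside the function, keeps the wiring logic testable and lets you choose the region and credentials at the call site.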

Phase 4: System Verification (Testing)

Goal: Ensure the end-to-end workflow works.

1. Uploaded a Test File:

```
aws s3 cp resume.pdf s3://upload-bucket-name/test-resume.pdf
```

2. Lambda Execution:

- S3 event triggered Lambda.

- Lambda processed the file (extracted metadata).

- Stored data in DynamoDB:

```
{
  "fileId": "123",
  "fileName": "test-resume.pdf",
  "uploadTime": "2024-04-09T12:00:00Z",
  "fileSize": "250KB"
}
```

3. Verified Logs & DynamoDB:

```
# Check Lambda logs
aws logs tail /aws/lambda/FileMetadataFunction

# Scan DynamoDB
aws dynamodb scan --table-name FileMetadataTable
```
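`aws dynamodb scan` prints items in DynamoDB's typed attribute-value format (for example `{"fileName": {"S": "test-resume.pdf"}}`), which is awkward to check by eye. The following minimal Python sketch flattens the common scalar types and checks whether a given file was recorded. It handles only `S`, `N`, `BOOL`, and `NULL`, and the helper names are hypothetical; boto3 also ships a fuller `TypeDeserializer` in `boto3.dynamodb.types` if you prefer a library.

```python
import json
from decimal import Decimal
from typing import Any, Dict, List


def _plain(attr: Dict[str, Any]) -> Any:
    """Convert one typed attribute value, e.g. {"S": "x"}, to a Python value."""
    type_tag, value = next(iter(attr.items()))
    if type_tag in ("S", "BOOL"):
        return value
    if type_tag == "N":
        return Decimal(value)  # numbers arrive as strings; Decimal keeps precision
    if type_tag == "NULL":
        return None
    raise ValueError(f"unsupported attribute type: {type_tag}")


def scan_items(scan_output: str) -> List[Dict[str, Any]]:
    """Flatten the JSON printed by `aws dynamodb scan` into plain dicts."""
    data = json.loads(scan_output)
    return [{name: _plain(attr) for name, attr in item.items()} for item in data.get("Items", [])]


def was_recorded(scan_output: str, file_name: str) -> bool:
    """True if any scanned item has the given fileName."""
    return any(item.get("fileName") == file_name for item in scan_items(scan_output))
```

For example, save the scan with `aws dynamodb scan --table-name FileMetadataTable > scan.json` and call `was_recorded(open("scan.json").read(), "test-resume.pdf")`.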

3. Key Technical Takeaways

1. CloudFormation’s Role

- Infrastructure as Code (IaC): Defined all resources in YAML.

- Dependency Management: Created resources in the order implied by their references within each template (for example, the function's IAM role before the function).

- Cross-Stack References: Used outputs/parameters to link stacks.

- Rollback Safety: If the Lambda deployment failed, CloudFormation rolled the stack back.

2. AWS SAM’s Advantages

- Simplified Serverless Deployments:
  - `AWS::Serverless::Function` vs `AWS::Lambda::Function`
  - Auto-packaging: `sam package`
  - Built-in IAM policies (`S3CrudPolicy`, `DynamoDBCrudPolicy`)
- Local Testing: `sam local invoke`
- Guided Deployments: `sam deploy --guided`
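`sam local invoke` needs an event payload to replay. `sam local generate-event s3 put` can produce one. Alternatively, a short Python sketch like the following builds a trimmed S3 `ObjectCreated:Put` event with only the fields the handler reads. The helper names and the `events/s3-put.json` path are hypothetical, and real S3 events carry more fields (region, request IDs, eTag) than this version.

```python
import json
from datetime import datetime, timezone
from pathlib import Path
from typing import Any, Dict
from urllib.parse import quote_plus


def s3_put_event(bucket: str, key: str, size: int) -> Dict[str, Any]:
    """Build a trimmed S3 ObjectCreated:Put notification event."""
    now = datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%S.000Z")
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "eventTime": now,
                "s3": {
                    "bucket": {"name": bucket, "arn": f"arn:aws:s3:::{bucket}"},
                    # S3 URL-encodes keys in notifications, so mimic that here.
                    "object": {"key": quote_plus(key, safe="/"), "size": size},
                },
            }
        ]
    }


def write_event(path: str, bucket: str, key: str, size: int) -> Path:
    """Write the event as pretty-printed JSON, creating parent folders if needed."""
    out = Path(path)
    out.parent.mkdir(parents=True, exist_ok=True)
    out.write_text(json.dumps(s3_put_event(bucket, key, size), indent=2))
    return out
```

After `write_event("events/s3-put.json", "upload-bucket-name", "test-resume.pdf", 256000)`, the event can be replayed with `sam local invoke FileMetadataFunction -t lambda.yaml -e events/s3-put.json`. Keep in mind that the locally run function still writes to whichever DynamoDB table its environment variables point at.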

3. Event-Driven Architecture

- S3 → Lambda → DynamoDB flow.

- Fully automated (no servers to manage).

- Scalable: Lambda runs additional concurrent invocations as uploads arrive, up to the account's concurrency limit.

4. Best Practices Followed

| Practice | Implementation |
| --- | --- |
| Least Privilege IAM | SAM auto-generated minimal policies |
| Separation of Concerns | Split infra (S3/DynamoDB) and app (Lambda) stacks |
| Idempotent Deployments | CloudFormation ensures consistency |
| Automated Testing | `sam local invoke` before deployment |
| Infrastructure Versioning | Templates stored in Git |

The separation into two stacks makes sense because:

- Separation of Concerns: Each stack handles a distinct functional area (storage resources vs. upload-processing logic).
- Resource Isolation: Resources for different purposes stay logically separated.
- Independent Deployment: Each stack can be updated and redeployed independently.
- Security Boundaries: Different IAM permissions can be applied to each stack.
- Maintainability: Smaller, focused stacks are easier to manage than one monolithic stack.
