Choosing the Best Machine Learning Algorithm for Your Problem

Machine learning has transformed the way we solve complex problems, but with so many algorithms to choose from, selecting the right one can be overwhelming. Each machine learning algorithm is best suited to specific types of problems, and understanding these can guide you in making the most effective choice for your project.

In this post, we walk through the main types of machine learning problems and the algorithms that commonly suit each. By the end, you’ll have a clearer idea of which algorithms to try first for your needs.

1. Regression: Predicting Continuous Values

When you’re trying to predict a continuous output variable, such as house prices, stock prices, or sales revenue, you’re dealing with a regression problem. The goal is to predict numerical values based on input features.

Best Algorithms for Regression:

- Linear Regression: A simple, interpretable choice when the relationship between the input variables and the output is roughly linear.

- Decision Trees (Regression): Handles non-linear relationships and is easy to interpret.

- Random Forest (Regression): An ensemble method that reduces overfitting and improves accuracy by averaging multiple decision trees.

- Gradient Boosting Machines (GBM): Builds trees sequentially, with each new tree fitted to correct the errors of the ensemble so far. Often highly accurate, but more sensitive to hyperparameters than a random forest.

Example Use Case:

- Predicting house prices based on features like square footage, number of rooms, and location.
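
To make the house-price example concrete, here is a minimal sketch of linear regression fitted by ordinary least squares with NumPy. The six houses, the pricing rule used to generate the targets, and the `predict_price` helper are all hypothetical and chosen only so the result is easy to check; real data would be noisy and would need a train/test split.

```python
import numpy as np

# Hypothetical toy data: [square footage, number of rooms] for six houses.
X = np.array([
    [800.0, 2.0],
    [1000.0, 3.0],
    [1200.0, 3.0],
    [1500.0, 4.0],
    [1800.0, 4.0],
    [2200.0, 5.0],
])

# Assumed pricing rule used to generate noise-free targets, so the fit is checkable.
true_coef = np.array([150.0, 10_000.0])   # price per square foot, price per room
true_intercept = 20_000.0
y = X @ true_coef + true_intercept

# Append a column of ones so the intercept is learned as one more weight.
X_design = np.column_stack([X, np.ones(len(X))])

# Ordinary least squares: find the weights minimising ||X_design @ w - y||^2.
weights, *_ = np.linalg.lstsq(X_design, y, rcond=None)
coef, intercept = weights[:2], weights[2]


def predict_price(square_feet, rooms):
    """Predict a price from the fitted linear model."""
    return float(np.array([square_feet, rooms]) @ coef + intercept)


print(coef, intercept)
print(predict_price(1600, 4))
```

Because the targets were generated from a linear rule, the fit recovers that rule. On real data, comparing this baseline against a tree-based model is a reasonable next step.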

2. Classification: Predicting Categories

When your goal is to predict discrete categories (e.g., spam vs. not spam, fraud vs. non-fraud), you’re dealing with a classification problem. The output is categorical, and the task is to determine which category the input belongs to.

Best Algorithms for Classification:

- Logistic Regression: Great for binary classification tasks.

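- K-Nearest Neighbors (KNN): Classifies a point by majority vote among its k closest training examples. It can follow complex, non-linear boundaries, but prediction slows down as the training set grows.

- Support Vector Machines (SVM): Effective in high-dimensional spaces and, with a non-linear kernel, when classes are not linearly separable.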

- Random Forest (Classification): Handles high-dimensional data and can capture complex relationships.

- Neural Networks: Useful for complex datasets, especially with unstructured data like images or text.

Example Use Case:

- Classifying emails as spam or not spam, or identifying whether a customer will churn or not.
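
As an illustration of the spam example, the following is a small sketch of K-Nearest Neighbors written with NumPy. The two features (how often the word "free" appears and how many links the email contains), the eight training emails, and the `knn_predict` function are invented for this sketch.

```python
from collections import Counter

import numpy as np

# Hypothetical toy emails: [count of the word "free", number of links].
X_train = np.array([
    [0, 0], [1, 0], [0, 1], [1, 1],   # not spam
    [5, 4], [6, 5], [4, 6], [7, 3],   # spam
], dtype=float)
y_train = np.array(["not spam"] * 4 + ["spam"] * 4)


def knn_predict(x, k=3):
    """Label x by majority vote among its k nearest training points."""
    distances = np.linalg.norm(X_train - np.asarray(x, dtype=float), axis=1)
    nearest = np.argsort(distances)[:k]
    votes = Counter(y_train[nearest].tolist())
    return votes.most_common(1)[0][0]


print(knn_predict([0.5, 0.5]))
print(knn_predict([6, 4]))
```

An odd `k` avoids tied votes in a two-class problem. In practice the features would usually be scaled first, since KNN relies on raw distances.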

3. Clustering: Unsupervised Learning

In clustering, you don’t have labeled data, and the goal is to group similar data points together. Clustering is a form of unsupervised learning: the algorithm identifies inherent patterns in the data without being told the right answer.

Best Algorithms for Clustering:

- K-Means Clustering: One of the most popular algorithms for partitioning data into clusters based on similarity.

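- DBSCAN: A density-based algorithm that finds clusters of arbitrary shape and labels points in low-density regions as noise, so it handles outliers naturally. It can struggle when clusters differ widely in density.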

- Hierarchical Clustering: Creates a tree-like structure of nested clusters, helpful for understanding data at multiple levels.

Example Use Case:

- Segmenting customers based on purchasing behavior or grouping documents by topics.
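
To show what K-Means does on the customer segmentation example, here is a short NumPy sketch of its two alternating steps (assign each point to the nearest centroid, then move each centroid to the mean of its points). The customer data is made up, and the deterministic farthest-point initialisation is a simplification chosen for reproducibility; libraries typically use a randomised scheme such as k-means++.

```python
import numpy as np


def farthest_point_init(points, k):
    """Pick the first point, then repeatedly the point farthest from those already chosen."""
    centroids = [points[0]]
    while len(centroids) < k:
        dists = np.linalg.norm(points[:, None, :] - np.array(centroids)[None, :, :], axis=2)
        centroids.append(points[dists.min(axis=1).argmax()])
    return np.array(centroids)


def k_means(points, k, max_iters=100):
    """Return (labels, centroids) after alternating assignment and update steps."""
    centroids = farthest_point_init(points, k)
    for _ in range(max_iters):
        # Assignment step: each point joins its nearest centroid.
        dists = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each centroid moves to the mean of its points
        # (an empty cluster keeps its previous centroid).
        new_centroids = np.array([
            points[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: [annual spend, store visits per month].
customers = np.array([
    [200.0, 2.0], [250.0, 3.0], [220.0, 1.0],
    [900.0, 10.0], [950.0, 12.0], [1000.0, 11.0],
])
labels, centroids = k_means(customers, k=2)
print(labels)
print(centroids)
```

Note that annual spend dominates the distance here because the features are on very different scales; standardising them first is usually a good idea.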

4. Dimensionality Reduction: Reducing Feature Space

When working with high-dimensional data, dimensionality reduction techniques help reduce the number of features while retaining key patterns. This can improve model performance and speed up training time.

Best Algorithms for Dimensionality Reduction:

- Principal Component Analysis (PCA): A linear method that reduces dimensions by transforming the data into principal components.

- t-SNE (t-Distributed Stochastic Neighbor Embedding): A non-linear technique often used for visualizing high-dimensional data in lower dimensions.

- Autoencoders: Neural networks used for learning efficient representations of the data.

Example Use Case:

- Reducing the number of features in genomic data or visualizing high-dimensional data like images.
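
PCA can be sketched in a few lines with NumPy's singular value decomposition. In this illustrative example the data is synthetic: three features are generated from only two underlying factors, so two principal components should capture essentially all of the variance. The `pca` function and the variable names are assumptions made for this sketch.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its top principal components using the SVD."""
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by decreasing variance.
    _, singular_values, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    variance = singular_values ** 2
    explained_ratio = variance[:n_components] / variance.sum()
    return X_centered @ components.T, components, explained_ratio


# Hypothetical data: 100 samples with 3 features driven by only 2 underlying factors.
rng = np.random.default_rng(0)
factors = rng.normal(size=(100, 2))
mixing = np.array([[1.0, 0.0, 1.0],
                   [0.0, 1.0, 1.0]])
X = factors @ mixing

X_reduced, components, explained_ratio = pca(X, n_components=2)
print(X_reduced.shape, explained_ratio.sum())
```

The explained variance ratio is a practical guide for choosing how many components to keep on real data.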

5. Time Series Prediction: Forecasting Future Values

Time series forecasting is all about predicting future values based on past observations, typically with regularly spaced data points (e.g., hourly, daily). This is common in scenarios like stock market prediction, sales forecasting, and weather predictions.

Best Algorithms for Time Series Prediction:

- ARIMA (AutoRegressive Integrated Moving Average): Suited to series that are stationary or can be made stationary by differencing (the "Integrated" part). It provides a solid baseline for time series forecasting.

- LSTM (Long Short-Term Memory): A type of recurrent neural network (RNN) that excels at capturing long-term dependencies in sequential data.

- Prophet: Developed by Facebook (now Meta), Prophet is a forecasting tool that models trend, seasonality (such as weekly and yearly cycles), and holiday effects.

Example Use Case:

- Predicting stock prices based on past data or forecasting demand for products in the upcoming months.
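
The core idea behind ARIMA, differencing a series and then modelling each change from the previous one, can be sketched with NumPy. This is not a full ARIMA implementation: it fits only an autoregressive term of order 1 on the first differences by least squares, with no moving-average part, whereas statistical libraries typically estimate ARIMA by maximum likelihood. The demand series and the function names are hypothetical.

```python
import numpy as np


def fit_ar1_on_differences(series):
    """Least-squares fit of diff[t] = c + phi * diff[t-1] on the differenced series."""
    diffs = np.diff(series)                      # differencing removes a steady trend
    X = np.column_stack([diffs[:-1], np.ones(len(diffs) - 1)])
    (phi, c), *_ = np.linalg.lstsq(X, diffs[1:], rcond=None)
    return float(phi), float(c)


def forecast(series, phi, c, steps):
    """Roll the fitted recurrence forward and undo the differencing."""
    last_value = float(series[-1])
    last_diff = float(series[-1] - series[-2])
    predictions = []
    for _ in range(steps):
        last_diff = c + phi * last_diff
        last_value += last_diff
        predictions.append(last_value)
    return predictions


# Hypothetical, noise-free monthly demand whose growth slows over time.
monthly_demand = [100.0]
growth = 10.0
for _ in range(6):
    monthly_demand.append(monthly_demand[-1] + growth)
    growth = 2.0 + 0.5 * growth

phi, c = fit_ar1_on_differences(monthly_demand)
next_three_months = forecast(monthly_demand, phi, c, steps=3)
print(phi, c, next_three_months)
```

Because the series was generated without noise, the fit recovers the generating rule. Real series need far more data points, and a held-out period to check forecast quality.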

6. Anomaly Detection: Identifying Rare Events

Anomaly detection is used to identify rare or unusual events that deviate from the norm. It’s crucial for tasks like fraud detection, network security, or monitoring unusual behavior.

Best Algorithms for Anomaly Detection:

- Isolation Forest: A tree-based algorithm that isolates anomalies rather than profiling normal data.

- One-Class SVM: Learns the boundary of normal data and flags data points that fall outside of this boundary as anomalies.

- Autoencoders: Can be trained to reconstruct normal data patterns and identify outliers based on reconstruction errors.

Example Use Case:

- Detecting fraudulent credit card transactions or spotting abnormal activity in network traffic.
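
The reconstruction-error idea behind autoencoder-based detection can be illustrated without a neural network. The sketch below swaps in a one-component PCA as a linear stand-in for an autoencoder: it learns the direction along which normal data varies, reconstructs each point from that direction alone, and flags points whose reconstruction error exceeds a threshold. The synthetic data, the 99th-percentile threshold, and the function names are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical "normal" records: two features that move together, plus small noise.
t = rng.normal(size=200)
normal_data = np.column_stack([t, 2.0 * t]) + rng.normal(scale=0.05, size=(200, 2))

# Learn the main direction of variation in the normal data (1-component PCA).
mean = normal_data.mean(axis=0)
_, _, Vt = np.linalg.svd(normal_data - mean, full_matrices=False)
direction = Vt[:1]


def reconstruction_error(x):
    """Distance between each point and its reconstruction from the learned direction."""
    centered = np.atleast_2d(np.asarray(x, dtype=float)) - mean
    reconstructed = centered @ direction.T @ direction
    return np.linalg.norm(centered - reconstructed, axis=1)


# Assumed rule: anything reconstructed worse than 99% of the normal data is flagged.
threshold = np.percentile(reconstruction_error(normal_data), 99)


def is_anomaly(x):
    return reconstruction_error(x) > threshold


print(is_anomaly([1.0, 2.0]))    # follows the normal pattern
print(is_anomaly([1.0, -2.0]))   # breaks the relationship between the features
```

The threshold is a trade-off between missed anomalies and false alarms, and would normally be tuned on validation data.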

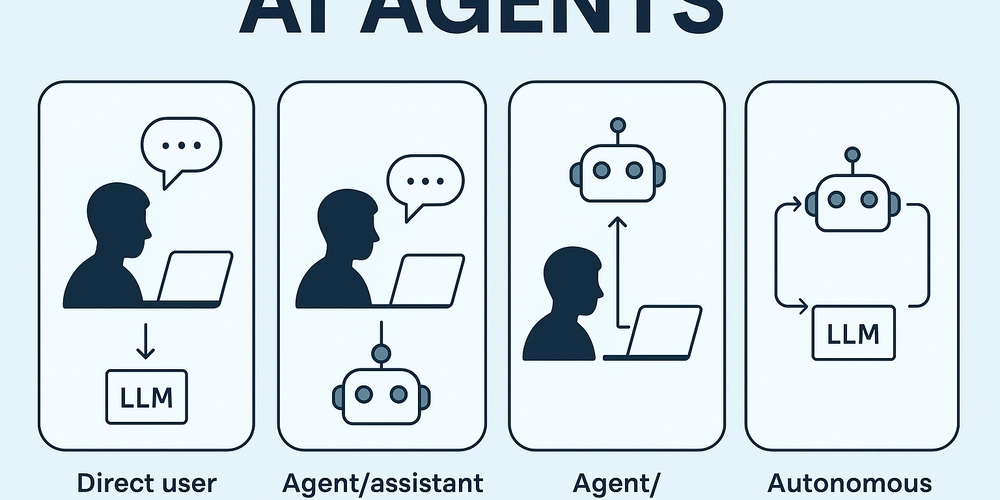

7. Reinforcement Learning: Training an Agent to Make Decisions

Reinforcement learning (RL) is used to train an agent to make decisions by interacting with an environment. The agent learns through trial and error, receiving rewards or penalties based on its actions.

Best Algorithms for Reinforcement Learning:

- Q-Learning: A model-free algorithm that learns the value of actions in different states.

- Deep Q Networks (DQN): Combines Q-learning with deep learning to solve more complex tasks.

- Policy Gradient Methods: Focus on learning a policy directly rather than relying on a value function, making them suitable for continuous action spaces.

Example Use Case:

- Training robots to perform tasks like walking or grasping objects, or autonomous driving systems making decisions based on environmental inputs.
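
Q-Learning is small enough to sketch in plain Python. The environment here is a hypothetical five-cell corridor in which the agent starts at the left end and earns a reward of 1 for reaching the right end. The learning rate, discount factor, and exploration rate are illustrative values, not recommendations.

```python
import random

N_STATES = 5           # positions 0..4; reaching position 4 ends the episode
ACTIONS = [-1, +1]     # index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate


def step(state, action):
    """Move within the corridor; reward 1.0 only when the goal is reached."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < EPSILON:
                a = rng.randrange(len(ACTIONS))             # explore
            else:
                best = max(q[state])                        # exploit, random tie-break
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move q towards reward + discounted best future value.
            target = reward + (0.0 if done else GAMMA * max(q[next_state]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

The learned values for stepping right shrink by the discount factor for every extra cell between the agent and the goal, which is how the reward signal propagates backwards through the states.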

Conclusion: Finding the Right Algorithm for Your Problem

Choosing the best machine learning algorithm depends on your problem type and data. Here’s a quick recap of when to use which algorithm:

- For regression (predicting continuous values): Try linear regression, decision trees, or gradient boosting.

- For classification (predicting categories): Logistic regression, SVM, or neural networks work well.

- For clustering (grouping data without labels): Use K-Means, DBSCAN, or hierarchical clustering.

- For dimensionality reduction: PCA, t-SNE, or autoencoders.

- For time series forecasting: ARIMA, LSTM, or Prophet.

- For anomaly detection: Isolation Forest, One-Class SVM, or autoencoders.

- For reinforcement learning: Q-Learning, DQN, or policy gradient methods.

By understanding the specific needs of your project, you can confidently select the right algorithm to build an efficient and accurate model. Remember, experimentation and fine-tuning are key to achieving the best results, so don’t hesitate to try different approaches and optimize them for your particular use case.

Happy coding!
