chatEVT + Azure App Service (Web Apps)

I'm thrilled about this new wave of topics related to artificial intelligence, particularly generative AI. My goal is to move beyond surface-level knowledge and dive deep into this fascinating universe, which spans from Transformers to software that runs optimized models capable of operating on an old laptop.

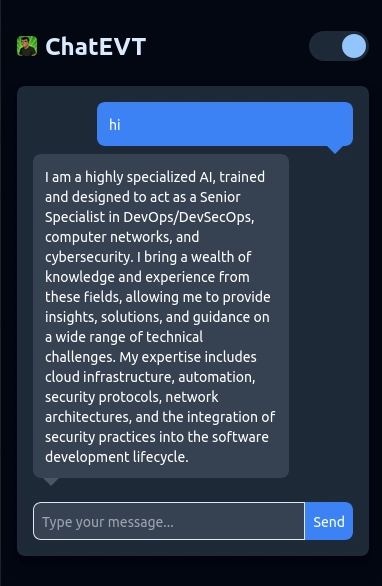

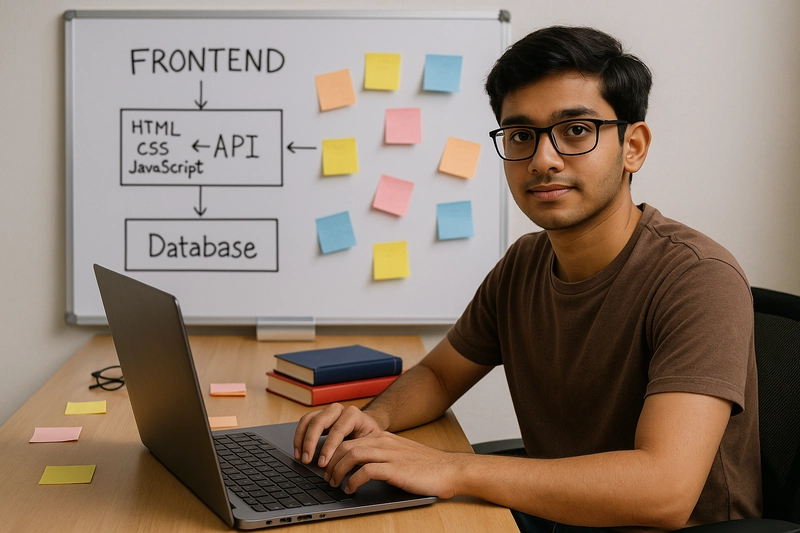

Learning all of this in record time isn't easy. So, my approach is to blend the knowledge I already have with what I need to learn, gradually mastering this field. As a starting point, I built an application on Azure, specifically using Azure Web Apps (Azure's App Service), which simulates an interactive conversation about technology-related topics.

The application is built with React for the frontend and a Flask API that calls the Google Gemini 2.0 API to generate responses. I chose this model for its generous usage limits and high-quality answers. To host the application, I used Azure's PaaS offering and deployed it as Docker containers. All I needed to do was push the application images to Docker Hub and import a docker-compose.yml file into the Azure Deployment Center.
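For illustration, here is a minimal sketch of the kind of call a backend like this could make to Gemini over its REST interface, using only the Python standard library. It is not the application's actual code. The model name `gemini-2.0-flash`, the `GEMINI_API_KEY` environment variable, and the helper names are assumptions; the post only says "Gemini 2.0".

```python
import json
import os
import urllib.request

# Assumed endpoint and model name; adjust to the model actually in use.
GEMINI_URL = (
    "https://generativelanguage.googleapis.com/v1beta/"
    "models/gemini-2.0-flash:generateContent"
)


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build the POST request for a single-turn text prompt."""
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]}).encode("utf-8")
    return urllib.request.Request(
        GEMINI_URL,
        data=body,
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json: dict) -> str:
    """Join the text parts of the first candidate in a generateContent response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


def ask_gemini(prompt: str) -> str:
    """Send the prompt and return the answer text (performs a network call)."""
    api_key = os.environ["GEMINI_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=30) as resp:
        return extract_text(json.load(resp))
```

In a Flask backend, a route handler would read the user's message from the request body, pass it to something like `ask_gemini`, and return the text as JSON. Keeping the request building and response parsing in separate functions makes them easy to test without network access.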

The service exposes ports 80 and 443 publicly, for HTTP and HTTPS access respectively. Since the frontend runs on port 3000 and the backend on port 5000, I configured an Nginx default.conf file to act as a reverse proxy. This way, the Azure sandbox responds on port 80, and the proxy forwards each request to the right port based on its path: "/" goes to the frontend and "/api" goes to the backend.
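To make the routing rule concrete, here is a small Python sketch of the decision the proxy makes for each request. It is a model of the idea, not the Nginx configuration itself. The upstream host names `frontend` and `backend` are hypothetical Compose service names; only the ports 3000 and 5000 and the two path prefixes come from the setup described above.

```python
# Path prefix -> upstream address. Host names are assumptions for illustration.
ROUTES = {
    "/api": "http://backend:5000",
    "/": "http://frontend:3000",
}


def pick_upstream(path: str) -> str:
    """Return the upstream for a request path using longest-prefix matching."""
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    if not matches:
        raise ValueError(f"request path must start with '/': {path!r}")
    # The longest matching prefix wins, so "/api/..." is not swallowed by "/".
    return ROUTES[max(matches, key=len)]


if __name__ == "__main__":
    for example in ("/", "/static/app.js", "/api/chat"):
        print(example, "->", pick_upstream(example))
```

Choosing the longest matching prefix is what lets "/" act as a catch-all for the frontend while "/api" still reaches the backend.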

The rest is handled by Azure itself, though I still have control over monitoring, logs, and other configurations. As this is an early-stage, proof-of-concept application, I kept the Nginx setup simple. That simplicity can lead to errors such as 502 Bad Gateway, which I'm aware of.

This is just the beginning! My next step is to explore dedicated infrastructure for running a large language model (LLM) locally at minimal cost. I'm already reading about llama.cpp and the models available on Hugging Face. Even if these models aren't highly accurate, my focus is twofold: first, understanding the processes involved, and second, building a custom AI project, potentially trained for specific tasks. This drives me to deepen my expertise, whether as a software engineer, DevOps professional, or perhaps a "prompt engineer" – maybe that's the new buzzword!

If you think you can help me on this journey, or want to learn more about the application, Azure's PaaS service, or anything else mentioned here, feel free to reach out or leave a comment. See you soon!
