Building Voice AI Agents with the OpenAI Agents SDK

Beyond Single Turns: OpenAI Enters the Voice Agent Arena In our previous post, Building Multi-Agent Conversations with WebRTC & LiveKit, we explored how to create complex, multi-stage voice interactions using the real-time power of WebRTC and the orchestration capabilities of the LiveKit Agents framework. We saw how crucial low latency and effective state management are for natural conversations, especially when handing off between different agent roles. Recently, OpenAI has significantly enhanced its offerings for building agentic systems, including dedicated tools and SDKs for creating voice agents. While the core concept of chaining Speech-to-Text (STT), Large Language Model (LLM), and Text-to-Speech (TTS) remains, OpenAI now provides more integrated primitives and an SDK designed to simplify this process, particularly within their ecosystem. This article dives into building voice agents using the OpenAI Agents SDK. We'll examine its architecture, walk through a Python example, and critically compare this approach with the LiveKit method discussed previously, highlighting the strengths, weaknesses, and ideal use cases for each. OpenAI's Vision for Agents: Primitives and Orchestration OpenAI positions its platform as a set of composable primitives for building agents, covering domains like: Models: Core intelligence (GPT-4o, the latest GPT-4.1 and GPT-4.1-mini, etc.) capable of reasoning and handling multimodality. Tools: Interfaces to the outside world, including developer-defined function calling, built-in web search, file search, etc. Knowledge & Memory: Using Vector Stores and Embeddings for context and persistence. Audio & Speech: Primitives for understanding and generating voice. Guardrails: Moderation and instruction hierarchy for safety and control. Orchestration: The Agents SDK, Tracing, Evaluations, and Fine-tuning to manage the agent lifecycle. For Voice Agents, OpenAI presents two main architectural paths: Speech-to-Speech (Multimodal - Realtime API): Uses models like gpt-4o-realtime-preview that process audio input directly and generate audio output, aiming for the lowest latency and understanding vocal nuances. This uses a specific Realtime API separate from the main Chat Completions API. Chained (Agents SDK + Voice): The more traditional STT → LLM → TTS flow, but orchestrated using the openai-agents SDK with its [voice] extension. This provides more transparency (text transcripts at each stage) and control, making it easier to integrate into existing text-based agent workflows. This post will focus on the Chained architecture using the OpenAI Agents SDK, as it aligns more closely with common agent development patterns and provides a clearer comparison point to the plugin-based approach of LiveKit. The OpenAI Agents SDK: Simplifying Agent Logic The openai-agents Python SDK aims to provide a lightweight way to build agents with a few core concepts: Agent: An LLM equipped with instructions, tools, and potentially knowledge about when to hand off tasks. Handoffs: A mechanism allowing one agent to delegate tasks to another, more specialized agent. Agents are configured with a list of potential agents they can hand off to. Tools (@function_tool): Decorator to easily expose Python functions to the agent, similar to standard OpenAI function calling. Guardrails: Functions to validate inputs or outputs and enforce constraints. Runner: Executes the agent logic, handling the loop of calling the LLM, executing tools, and managing handoffs. VoicePipeline (with [voice] extra): Wraps an agent workflow (like one using Runner) to handle the STT and TTS parts of a voice interaction. The philosophy is "Python-first," relying on Python's built-in features for orchestration rather than introducing many complex abstractions. Architecture with OpenAI Agents SDK (Chained Voice) When using the VoicePipeline from the SDK, the typical flow for a voice turn looks like this: Audio Input: Raw audio data (e.g., from a microphone) is captured. VoicePipeline (STT): The pipeline receives audio chunks. It uses an OpenAI STT model (like gpt-4o-transcribe via the API) to transcribe the user's speech into text once speech ends (or via push-to-talk). Agent Workflow Execution (MyWorkflow.run in the example): The transcribed text is passed to your defined workflow (e.g., a class inheriting from VoiceWorkflowBase). Inside the workflow, the Runner is invoked with the current Agent, conversation history, and the new user text. The Agent (LLM) decides whether to respond directly, call a Tool (function), or Handoff to another agent based on its instructions and the user input. If a tool is called, the Runner executes the Python function and sends the result back to the LLM. If a handoff occurs, the Runner switches context to the new agent. The LLM generates the text response. VoicePipeline (TTS): The final text response from the agent workflow is sent t

Beyond Single Turns: OpenAI Enters the Voice Agent Arena

In our previous post, Building Multi-Agent Conversations with WebRTC & LiveKit, we explored how to create complex, multi-stage voice interactions using the real-time power of WebRTC and the orchestration capabilities of the LiveKit Agents framework. We saw how crucial low latency and effective state management are for natural conversations, especially when handing off between different agent roles.

Recently, OpenAI has significantly enhanced its offerings for building agentic systems, including dedicated tools and SDKs for creating voice agents. While the core concept of chaining Speech-to-Text (STT), Large Language Model (LLM), and Text-to-Speech (TTS) remains, OpenAI now provides more integrated primitives and an SDK designed to simplify this process, particularly within their ecosystem.

This article dives into building voice agents using the OpenAI Agents SDK. We'll examine its architecture, walk through a Python example, and critically compare this approach with the LiveKit method discussed previously, highlighting the strengths, weaknesses, and ideal use cases for each.

OpenAI's Vision for Agents: Primitives and Orchestration

OpenAI positions its platform as a set of composable primitives for building agents, covering domains like:

- Models: Core intelligence (GPT-4o, the latest GPT-4.1 and GPT-4.1-mini, etc.) capable of reasoning and handling multimodality.

- Tools: Interfaces to the outside world, including developer-defined function calling, built-in web search, file search, etc.

- Knowledge & Memory: Using Vector Stores and Embeddings for context and persistence.

- Audio & Speech: Primitives for understanding and generating voice.

- Guardrails: Moderation and instruction hierarchy for safety and control.

- Orchestration: The Agents SDK, Tracing, Evaluations, and Fine-tuning to manage the agent lifecycle.

For Voice Agents, OpenAI presents two main architectural paths:

- Speech-to-Speech (Multimodal - Realtime API): Uses models like gpt-4o-realtime-preview that process audio input directly and generate audio output, aiming for the lowest latency and understanding vocal nuances. This uses a specific Realtime API separate from the main Chat Completions API.

- Chained (Agents SDK + Voice): The more traditional STT → LLM → TTS flow, but orchestrated using the openai-agents SDK with its [voice] extension. This provides more transparency (text transcripts at each stage) and control, making it easier to integrate into existing text-based agent workflows.

This post will focus on the Chained architecture using the OpenAI Agents SDK, as it aligns more closely with common agent development patterns and provides a clearer comparison point to the plugin-based approach of LiveKit.

The OpenAI Agents SDK: Simplifying Agent Logic

The openai-agents Python SDK aims to provide a lightweight way to build agents with a few core concepts:

- Agent: An LLM equipped with instructions, tools, and potentially knowledge about when to hand off tasks.

- Handoffs: A mechanism allowing one agent to delegate tasks to another, more specialized agent. Agents are configured with a list of potential agents they can hand off to.

-

Tools (

@function_tool): Decorator to easily expose Python functions to the agent, similar to standard OpenAI function calling. - Guardrails: Functions to validate inputs or outputs and enforce constraints.

- Runner: Executes the agent logic, handling the loop of calling the LLM, executing tools, and managing handoffs.

- VoicePipeline (with [voice] extra): Wraps an agent workflow (like one using Runner) to handle the STT and TTS parts of a voice interaction.

The philosophy is "Python-first," relying on Python's built-in features for orchestration rather than introducing many complex abstractions.

Architecture with OpenAI Agents SDK (Chained Voice)

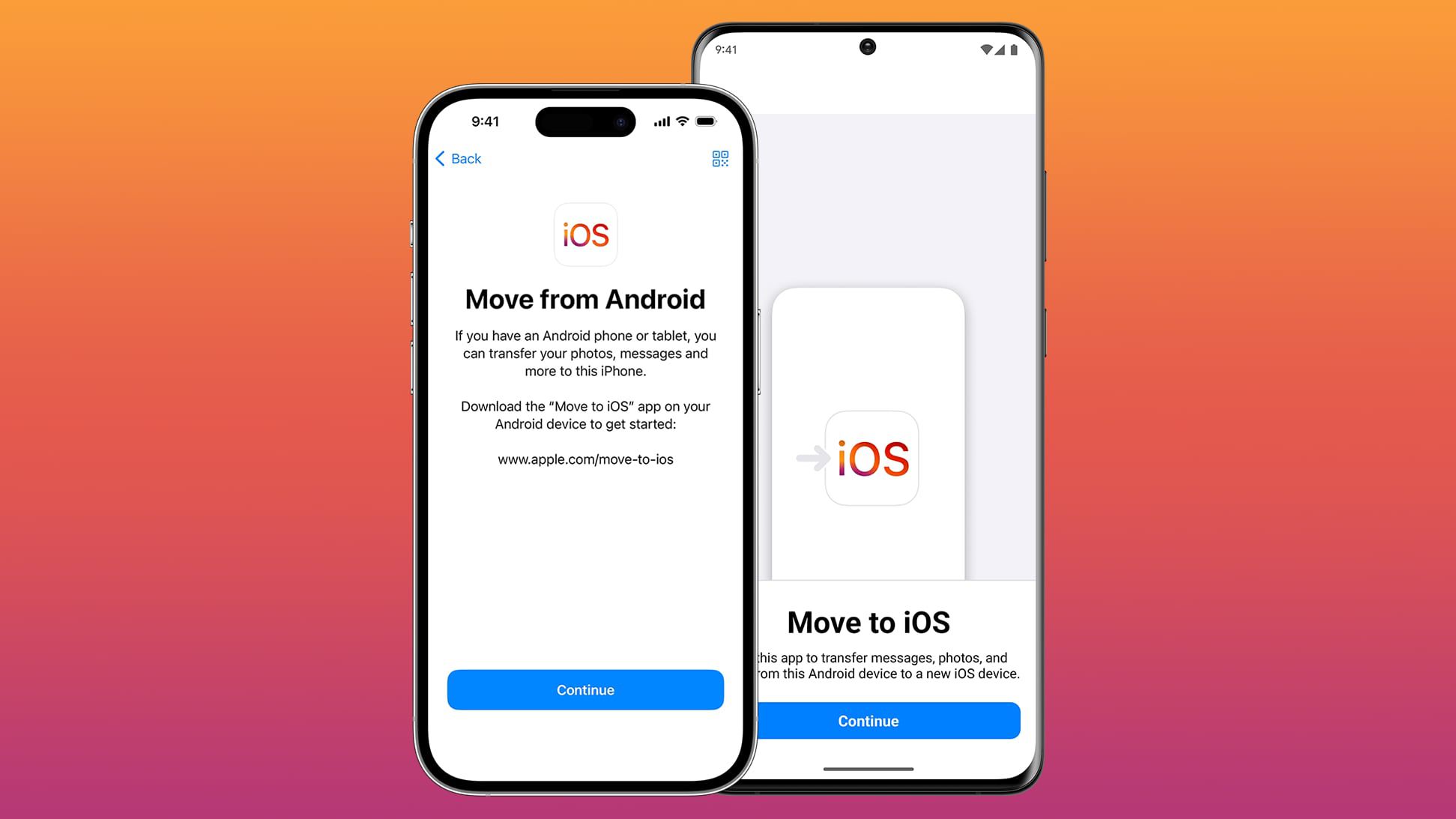

When using the VoicePipeline from the SDK, the typical flow for a voice turn looks like this:

- Audio Input: Raw audio data (e.g., from a microphone) is captured.

-

VoicePipeline (STT): The pipeline receives audio chunks. It uses an OpenAI STT model (like

gpt-4o-transcribevia the API) to transcribe the user's speech into text once speech ends (or via push-to-talk). -

Agent Workflow Execution (MyWorkflow.run in the example):

- The transcribed text is passed to your defined workflow (e.g., a class inheriting from

VoiceWorkflowBase). - Inside the workflow, the

Runneris invoked with the currentAgent, conversation history, and the new user text. - The

Agent(LLM) decides whether to respond directly, call aTool(function), orHandoffto another agent based on its instructions and the user input. - If a tool is called, the

Runnerexecutes the Python function and sends the result back to the LLM. - If a handoff occurs, the

Runnerswitches context to the new agent. - The LLM generates the text response.

- The transcribed text is passed to your defined workflow (e.g., a class inheriting from

-

VoicePipeline (TTS): The final text response from the agent workflow is sent to an OpenAI TTS model (e.g.,

gpt-4o-mini-tts) via the API to generate audio. - Audio Output: The generated audio data is streamed back to be played to the user.

(Diagram: Microphone feeds audio to VoicePipeline for STT. Text goes to Agent Workflow (using Runner, Agent, Tools, Handoffs). Text response goes back to VoicePipeline for TTS, then to Speaker.)

This contrasts with the LiveKit architecture where WebRTC handles the audio transport layer directly, and the livekit-agents framework integrates STT/LLM/TTS plugins into that real-time stream.

Let's Build: The Multi-Lingual Assistant (Python Example)

Let's break down the key parts of the official OpenAI Agents SDK voice example. (Link to the repository will be at the end).

1. Prerequisites

- Python 3.8+

- OpenAI API Key.

- Install the SDK with voice extras:

pip install "openai-agents[voice]" sounddevice numpy python-dotenv textual # For the demo UI

2. Setup (.env file)

# .env

OPENAI_API_KEY="sk-..."

3. Core Agent Logic (my_workflow.py)

This file defines the agents and the workflow logic that runs after speech is transcribed to text and before the response text is sent for synthesis.

-

Imports: Necessary components from

agentsSDK (Agent,Runner,function_tool,VoiceWorkflowBase, etc.). -

Tool Definition (

get_weather): A simple Python function decorated with@function_toolto make it callable by theagent. The SDK handles generating the schema for the LLM.

import random

from collections.abc import AsyncIterator

from typing import Callable

from agents import Agent, Runner, TResponseInputItem, function_tool

from agents.extensions.handoff_prompt import prompt_with_handoff_instructions

from agents.voice import VoiceWorkflowBase, VoiceWorkflowHelper

@function_tool

def get_weather(city: str) -> str:

"""Get the weather for a given city."""

print(f"[debug] get_weather called with city: {city}")

choices = ["sunny", "cloudy", "rainy", "snowy"]

return f"The weather in {city} is {random.choice(choices)}."

-

Agent Definitions (

spanish_agent,agent):- Each

Agentis created with aname,instructions(using a helperprompt_with_handoff_instructionsto guide its behavior regarding handoffs), amodel, and optionallytoolsit can use and otherhandoffsit can initiate. - The

handoff_descriptionhelps the calling agent decide which agent to hand off to.

- Each

spanish_agent = Agent(

name="Spanish",

handoff_description="A spanish speaking agent.",

instructions=prompt_with_handoff_instructions(

"You're speaking to a human, so be polite and concise. Speak in Spanish.",

),

model="gpt-4.1",

)

agent = Agent(

name="Assistant",

instructions=prompt_with_handoff_instructions(

"You're speaking to a human, so be polite and concise. If the user speaks in Spanish, handoff to the spanish agent.",

),

model="gpt-4.1",

handoffs=[spanish_agent], # List of agents it can hand off to

tools=[get_weather], # List of tools it can use

)

-

Workflow Class (

MyWorkflow):- Inherits from

VoiceWorkflowBase. -

__init__: Stores configuration (like thesecret_wordfor a simple game logic) and maintains state like conversation history (_input_history) and the currently active agent (_current_agent). -

run(transcription: str): This is the core method called by theVoicePipelineafter STT. - It receives the user's transcribed text.

- Updates the conversation history.

- Contains custom logic (like checking for the secret word).

- Invokes

Runner.run_streamedwith the current agent and history. This handles the interaction with the LLM, tool calls, and potential handoffs based on the agent's configuration. - Uses

VoiceWorkflowHelper.stream_text_fromto yield text chunks as they are generated by the LLM (enabling faster TTS start). - Updates the history and potentially the

_current_agentbased on theRunner's result (if a handoff occurred).

- Inherits from

class MyWorkflow(VoiceWorkflowBase):

def __init__(self, secret_word: str, on_start: Callable[[str], None]):

# ... (init stores history, current_agent, secret_word, callback) ...

self._input_history: list[TResponseInputItem] = []

self._current_agent = agent

self._secret_word = secret_word.lower()

self._on_start = on_start # Callback for UI updates

async def run(self, transcription: str) -> AsyncIterator[str]:

self._on_start(transcription) # Call the UI callback

self._input_history.append({"role": "user", "content": transcription})

if self._secret_word in transcription.lower(): # Custom logic example

yield "You guessed the secret word!"

# ... (update history) ...

return

# Run the agent logic using the Runner

result = Runner.run_streamed(self._current_agent, self._input_history)

# Stream text chunks for faster TTS

async for chunk in VoiceWorkflowHelper.stream_text_from(result):

yield chunk

# Update state for the next turn

self._input_history = result.to_input_list()

self._current_agent = result.last_agent # Agent might have changed via handoff

4. Client & Pipeline Setup (main.py)

This file sets up a simple Textual-based UI and manages the audio input/output and the VoicePipeline.

- It initializes

sounddevicefor microphone input and speaker output. - Creates the

VoicePipeline, passing in theMyWorkflowinstance. - Uses

StreamedAudioInputto feed microphone data into the pipeline. - Starts the pipeline using

pipeline.run(self._audio_input). - Asynchronously iterates through the

result.stream()to:- Play back audio chunks (

voice_stream_event_audio). - Display lifecycle events or transcriptions in the UI.

- Play back audio chunks (

- Handles starting/stopping recording based on key presses ('k'). (Note: We won't dive deep into the Textual UI code here, focusing instead on the agent interaction pattern.)

5. Running the Example

- Ensure .env is set up.

- Run the main script: python main.py

- Press 'k' to start recording, speak, press 'k' again to stop. The agent should respond.

Comparing Approaches: OpenAI Agents SDK vs. LiveKit Agents

Both frameworks allow building sophisticated voice agents with multiple roles, but they excel in different areas due to their underlying philosophies and technologies:

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

_Inge_Johnsson-Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Under-Display Face ID Coming to iPhone 18 Pro and Pro Max [Rumor]](https://www.iclarified.com/images/news/97215/97215/97215-640.jpg)

![New Powerbeats Pro 2 Wireless Earbuds On Sale for $199.95 [Lowest Price Ever]](https://www.iclarified.com/images/news/97217/97217/97217-640.jpg)

![Alleged iPhone 17-19 Roadmap Leaked: Foldables and Spring Launches Ahead [Kuo]](https://www.iclarified.com/images/news/97214/97214/97214-640.jpg)