Building Next-Gen AI Chatbots for Healthcare: Key Considerations

AI-powered virtual assistants no longer just answer basic questions; they help patients schedule appointments, track symptoms, access medical records, and more. The potential of healthcare AI solutions is huge, but when it comes to people’s health, there are many things you absolutely have to get right.

Let’s look at the key considerations when building next-gen AI chatbots for healthcare.

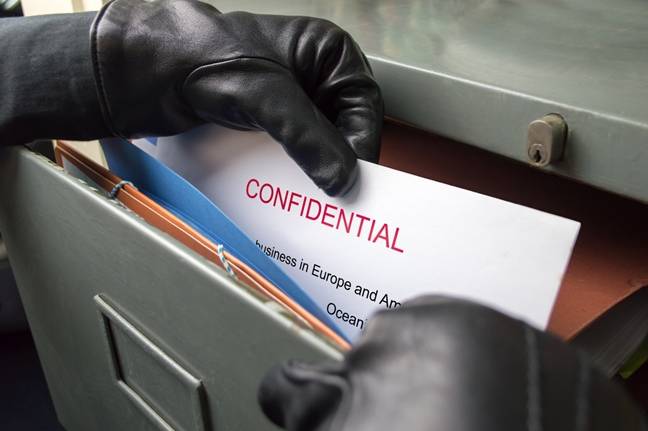

Data privacy and security concerns

First and foremost, healthcare data is incredibly sensitive. Whether it's test results, symptoms, or personal details, it has to be protected. Any chatbot in this space needs to comply with the privacy laws that apply to it, such as HIPAA in the US or GDPR in the EU. Its communication with the user must be encrypted, and it needs strict access controls and secure data storage. Developers must also be cautious about data collection and sharing: patient consent is crucial, and transparency must be a priority.
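As a rough illustration of the consent and access-control points above, here is a minimal Python sketch. Everything in it is hypothetical: the role names, the `store_symptoms` consent flag, and the in-memory list standing in for secure storage are assumptions made for the example, not a compliance recipe. Encryption in transit and at rest would be handled by the transport and storage layers and is not shown.

```python
from dataclasses import dataclass, field

# Hypothetical role-to-permission map; a real system would load this from policy.
ROLE_PERMISSIONS = {
    "patient": {"read_own_record"},
    "clinician": {"read_own_record", "read_patient_record"},
}


@dataclass
class Session:
    user_id: str
    role: str
    consents: set = field(default_factory=set)  # e.g. {"store_symptoms"}


def can_access(session: Session, permission: str) -> bool:
    """Return True only if the session's role grants the permission."""
    return permission in ROLE_PERMISSIONS.get(session.role, set())


def store_symptom(session: Session, text: str, store: list) -> bool:
    """Persist a symptom note only if the user has consented to storage."""
    if "store_symptoms" not in session.consents:
        return False  # no consent recorded, so nothing is written
    store.append({"user_id": session.user_id, "text": text})
    return True
```

The point of the sketch is that the default is to refuse: an unknown role gets no permissions, and nothing is stored until consent has been recorded.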

Medical accuracy and safety

Unlike general-purpose chatbots, healthcare bots can’t afford to provide “close enough” answers. Any misinformation can lead to real harm. That’s why next-gen healthcare chatbots must be trained on verified medical databases. Developers should work alongside doctors and other medical professionals to make sure the chatbot gives reliable, safe responses. Most importantly, always make it clear (via a disclaimer or a similar notice) that the chatbot is not a substitute for a real doctor, especially in emergencies.
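One simple way to picture the disclaimer and emergency handling is a thin wrapper around whatever answer the chatbot drafts. The Python sketch below is only an illustration: the phrase list, the wording of the messages, and the function name `wrap_response` are assumptions, and a keyword list like this is not clinically validated. A real system would have its triage rules reviewed by medical professionals.

```python
# Illustrative phrases only; not a clinically validated triage list.
EMERGENCY_PHRASES = ("chest pain", "can't breathe", "cannot breathe", "overdose")

EMERGENCY_MESSAGE = (
    "This may be an emergency. Please call your local emergency number "
    "or go to the nearest emergency room now."
)
DISCLAIMER = "This chatbot is not a substitute for a doctor."


def wrap_response(user_message: str, draft_answer: str) -> str:
    """Escalate on emergency phrases; otherwise append the disclaimer."""
    # Normalize case and curly apostrophes so "Can’t breathe" still matches.
    text = user_message.lower().replace("\u2019", "'")
    if any(phrase in text for phrase in EMERGENCY_PHRASES):
        return EMERGENCY_MESSAGE
    return f"{draft_answer}\n\n{DISCLAIMER}"
```

Running the check before the drafted answer is returned means an emergency message never gets buried under ordinary advice.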

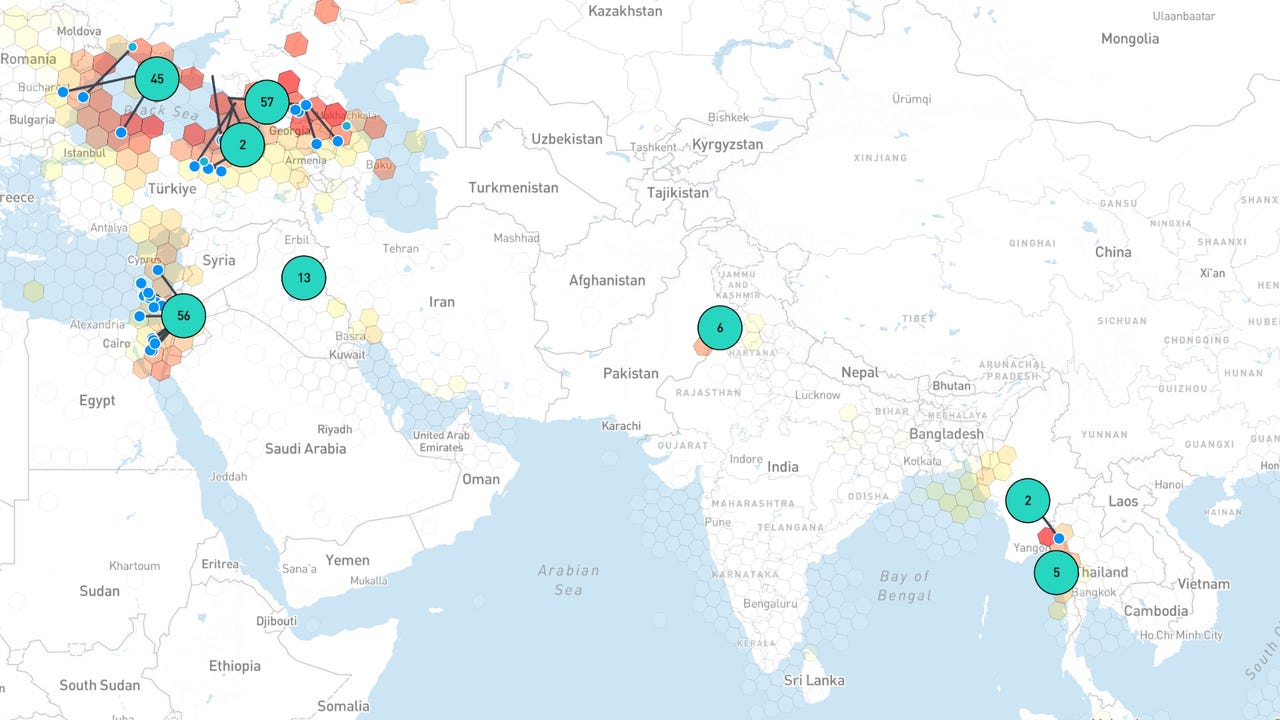

Multi-language support

Multi-language support is important but often overlooked, and it is what makes a healthcare AI solution truly accessible. In many countries, people speak dozens of languages and dialects. Imagine someone who doesn't speak English well trying to explain a health concern: it can be scary and frustrating. Such scenarios demand multilingual chatbots so people don’t miss critical health information just because of a language barrier.

In addition to direct translation, the chatbot should also understand regional slang, cultural expressions, and health-related terms that may vary across communities. This creates a more inclusive system where access to care isn’t limited by language, which is how it should be.
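On the output side, multi-language support often starts with a message catalog keyed by locale, with a fallback chain so a regional variant never leaves the user without a reply. The Python sketch below assumes a tiny hypothetical catalog (`MESSAGES`) and a hypothetical `localize` helper. Understanding slang and regional terms in what the user writes is a separate and much harder problem that this does not address.

```python
# Hypothetical catalog; real deployments would use reviewed translations.
MESSAGES = {
    "en": {"greeting": "How are you feeling today?"},
    "es": {"greeting": "¿Cómo se siente hoy?"},
}


def localize(key: str, locale: str, default_locale: str = "en") -> str:
    """Look up a message by full tag, then base language, then the default."""
    # "es-MX" falls back to "es"; an unknown language falls back to the default.
    for candidate in (locale, locale.split("-")[0], default_locale):
        catalog = MESSAGES.get(candidate)
        if catalog and key in catalog:
            return catalog[key]
    raise KeyError(key)
```

For example, `localize("greeting", "es-MX")` resolves through the base language `es`, while an unsupported locale such as `fr` falls back to the English text.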

Understanding real people or personalization

People don’t talk like textbooks, especially when they’re not feeling well. Someone might say, “I feel dizzy and weird” instead of using clinical terms. A good healthcare chatbot needs to understand the many ways people describe symptoms. It should ask gentle follow-up questions to get clarity without making the person feel confused or judged.
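To make the idea concrete, here is a deliberately small Python sketch that maps everyday phrases to clinical terms and picks a gentle follow-up question. The lexicon, the follow-up wording, and the `interpret` function are all hypothetical. A production chatbot would more likely rely on a trained language model and clinician-reviewed content than on substring matching.

```python
# Hypothetical lexicon mapping everyday phrases to clinical terms.
SYMPTOM_LEXICON = {
    "dizzy": "dizziness",
    "lightheaded": "dizziness",
    "throwing up": "vomiting",
    "tummy ache": "abdominal pain",
}

# Hypothetical follow-up questions, keyed by clinical term.
FOLLOW_UPS = {
    "dizziness": "When did the dizziness start, and is it worse when you stand up?",
}
GENERIC_FOLLOW_UP = "Can you tell me a bit more about how you're feeling?"


def interpret(message: str):
    """Return (clinical terms found, one follow-up question to ask next)."""
    text = message.lower()
    found = sorted({term for phrase, term in SYMPTOM_LEXICON.items() if phrase in text})
    if found:
        return found, FOLLOW_UPS.get(found[0], GENERIC_FOLLOW_UP)
    # Nothing recognized: ask an open question rather than guessing.
    return found, GENERIC_FOLLOW_UP
```

With the message “I feel dizzy and weird”, the sketch recognizes dizziness and asks about onset, while an unrecognized description gets the open-ended question instead of a guess.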

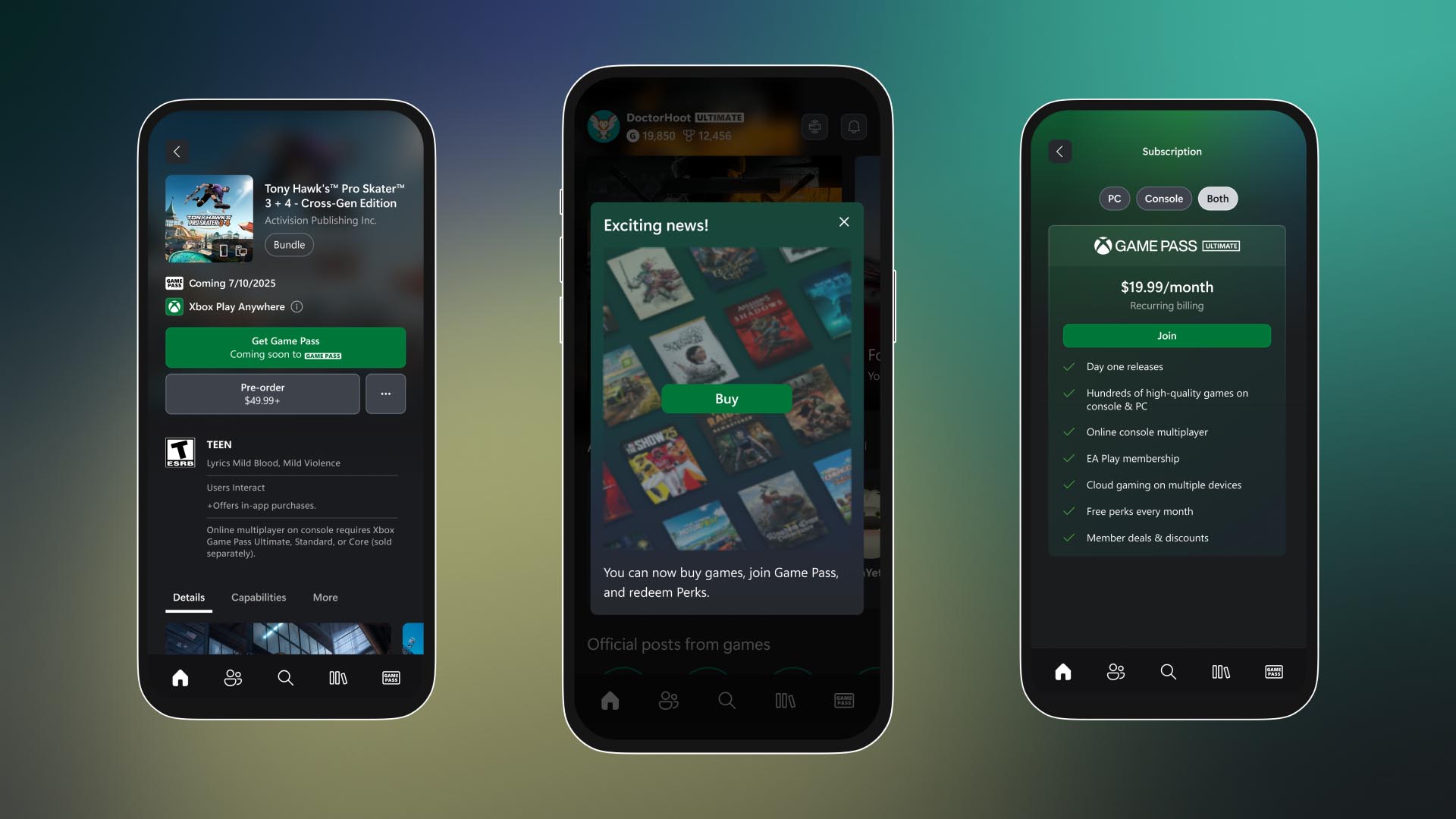

Seamless integration with health systems

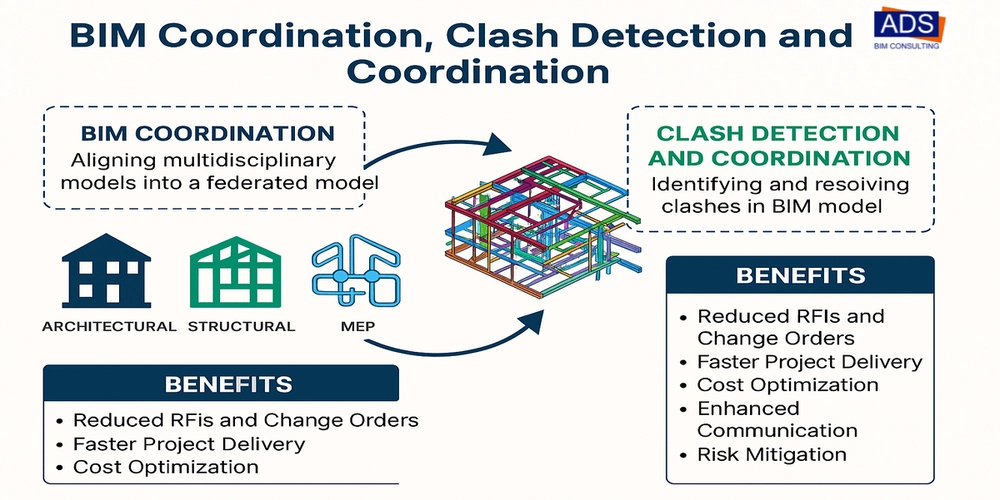

For a chatbot to really be helpful, it can’t just work in isolation. It has to be smoothly integrated with hospital systems, appointment schedulers, electronic health records (EHRs), and even with health tracking apps. This way, it can do things like remind patients to take their meds, alert doctors about worrying symptoms, or help users book follow-up visits — all in one place.
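One common way to keep such integrations manageable is to put each external system behind a small interface, so the chatbot logic does not depend on any one vendor. The Python sketch below assumes a hypothetical `Scheduler` interface and an in-memory stand-in. It does not reflect the API of any real hospital or EHR system, which would typically be reached through that system's own integration layer.

```python
from datetime import datetime, timedelta
from typing import Protocol


class Scheduler(Protocol):
    """Hypothetical interface the chatbot expects from a scheduling system."""

    def book(self, patient_id: str, when: datetime) -> str: ...


class InMemoryScheduler:
    """Stand-in for a real appointment system, useful for tests and demos."""

    def __init__(self) -> None:
        self.appointments = []

    def book(self, patient_id: str, when: datetime) -> str:
        self.appointments.append((patient_id, when))
        return f"appt-{len(self.appointments)}"


def book_follow_up(scheduler: Scheduler, patient_id: str,
                   last_visit: datetime, days: int = 14) -> str:
    """Book a follow-up a fixed number of days after the last visit."""
    return scheduler.book(patient_id, last_visit + timedelta(days=days))
```

Because `book_follow_up` only depends on the interface, the in-memory scheduler can later be swapped for an adapter to a real system without touching the chatbot logic.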

Final thoughts

By building a next-gen healthcare AI chatbot, you’re creating a tool that people will turn to when they’re confused, scared, or seeking answers about their health. It needs to be secure, accurate, empathetic, and inclusive. If we can get this right, these chatbots can truly lighten the load for healthcare workers, make quality care more accessible, and empower people to take control of their health in ways we’ve never seen before.
