Building a Simple Stock Insights Agent with Gemini 2.0 Flash on Vertex AI


Introduction to Gemini 2.0 Flash

Gemini 2.0 Flash is Google's efficient generative AI model, designed for tasks that need low latency and strong performance. Its agentic capabilities, large context window, and native tool use make it a good fit for finance applications. In this post, we'll build a stock insights agent with Gemini 2.0 Flash on Vertex AI, using Google Search grounding to bring in up-to-date information. We'll use the google-genai library and build a Gradio UI for interactive stock analysis.

Step-by-Step Guide: Developing the Stock Insights Agent

Let’s break down the process into environment setup, connecting to Gemini 2.0 Flash, integrating Google Search, and creating a Gradio web app.

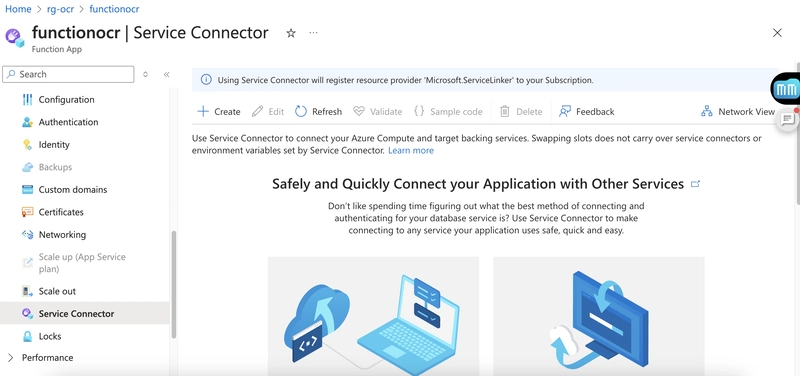

1. Environment Setup and Authentication

Before coding, prepare your Google Cloud environment:

- Enable the Vertex AI API and the Vertex AI Search and Conversation API in your Google Cloud project, and make sure your account has the permissions it needs.

Install the Google Gen AI SDK for Python, along with Gradio for the web UI in step 5:

pip install --upgrade google-genai gradio
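To confirm the install before moving on, a small standard-library check like the one below reports which version of each package is present, or None if it is missing. This is a minimal sketch; the helper name `installed_versions` is our own and not part of either package.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages=("google-genai", "gradio")):
    """Return {distribution name: installed version, or None if missing}."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution is not installed in this environment
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        print(f"{name}: {ver or 'not installed'}")
```

Checking versions up front helps because the SDK evolves quickly and examples written against one release may not match another.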

Authentication:

- If you are running this notebook on Google Colab, authenticate your environment by running the following cell:

import sys

if "google.colab" in sys.modules:
    # Authenticate the user to Google Cloud
    from google.colab import auth

    auth.authenticate_user()

- Otherwise, set up authentication with a service account: in the Google Cloud Console, create a service account with Vertex AI access and download its JSON key. Then set the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of that key so your API calls are authenticated. For example:

export GOOGLE_APPLICATION_CREDENTIALS="/path/to/your/service-account-key.json"

Initialize the Gen AI client for Vertex AI:

from google import genai

PROJECT_ID = "[your-gcp-project-id]"  # Replace with your GCP project ID
LOCATION = "us-central1"  # Or another region where Gemini 2.0 Flash is available

# Create a Gen AI client that sends requests through Vertex AI
client = genai.Client(vertexai=True, project=PROJECT_ID, location=LOCATION)
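Hardcoding the project ID works in a notebook, but in a script you may prefer to read it from the environment and fail early if it was never set. The sketch below assumes the environment variable names GOOGLE_CLOUD_PROJECT and GOOGLE_CLOUD_LOCATION; check them against your own setup. The helper `resolve_vertex_settings` and the constant `PLACEHOLDER_PROJECT` are hypothetical names used only for illustration.

```python
import os

# The placeholder from the snippet above; seeing it means the ID was never replaced.
PLACEHOLDER_PROJECT = "[your-gcp-project-id]"


def resolve_vertex_settings(default_location="us-central1"):
    """Return (project_id, location) for genai.Client(vertexai=True, ...).

    Reads GOOGLE_CLOUD_PROJECT and GOOGLE_CLOUD_LOCATION (assumed variable names)
    and raises ValueError early if no real project ID is configured.
    """
    project_id = os.environ.get("GOOGLE_CLOUD_PROJECT", "").strip()
    # Fall back to the default region when no location is set
    location = os.environ.get("GOOGLE_CLOUD_LOCATION", "").strip() or default_location
    if not project_id or project_id == PLACEHOLDER_PROJECT:
        raise ValueError("Set GOOGLE_CLOUD_PROJECT to your Google Cloud project ID.")
    return project_id, location
```

You would then call `PROJECT_ID, LOCATION = resolve_vertex_settings()` before the same `genai.Client(vertexai=True, project=PROJECT_ID, location=LOCATION)` call. Failing before the first request makes a missing project ID obvious, instead of surfacing it later as a rejected API call.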

2. Connecting to the Gemini 2.0 Flash Model

The client handles the connection to Vertex AI. To target Gemini 2.0 Flash, pass its model ID with each request:

MODEL_ID = "gemini-2.0-flash-001" # Or the latest available model ID

3. Enabling Google Search Grounding for Real-Time Stock Data

Use Google Search to ground responses with up-to-date information:

from google.genai.types import GoogleSearch, Tool, GenerateContentConfig
import json

# Create a Google Search retrieval tool
google_search_tool = Tool(google_search=GoogleSearch())

# Define our query or prompt for the model
company_name = "Alphabet Inc."  # example company
prompt = (
    f"Provide information about {company_name} in JSON format with the following keys:\n"
    f"- `company_info`: A brief overview of the company and what it does.\n"
    f"- `recent_news`: An array of 2-3 recent news items about the company, each with a `title` and a short `description`.\n"
    f"- `trend_prediction`: An analysis of the stock trend and a suggested target price for the near future."
)

# Configure the model to use the Google Search tool
config = GenerateContentConfig(tools=[google_search_tool])

# Generate a response using the model, grounded with Google Search data
response = client.models.generate_content(
    model=MODEL_ID,
    contents=prompt,
    config=config,
)

try:
    data = json.loads(response.text)
    print(json.dumps(data, indent=4))  # Print nicely formatted JSON
except json.JSONDecodeError:
    print("Error decoding JSON:", response.text)
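Grounded responses also carry metadata about the web pages the model drew on, which is worth showing next to any stock commentary. The sketch below assumes the google-genai response layout `response.candidates[0].grounding_metadata.grounding_chunks`, where each chunk's `web` field has `title` and `uri`; the helper name `grounding_sources` is our own. Attributes are read with `getattr`, so a response without grounding metadata simply yields an empty list.

```python
def grounding_sources(response):
    """Collect (title, uri) pairs for the web pages that grounded a response."""
    candidates = getattr(response, "candidates", None) or []
    if not candidates:
        return []
    # Grounding metadata is attached per candidate; use the first one
    metadata = getattr(candidates[0], "grounding_metadata", None)
    chunks = getattr(metadata, "grounding_chunks", None) or []
    sources = []
    for chunk in chunks:
        web = getattr(chunk, "web", None)
        # Keep only chunks that point at a web page with a URI
        if web is not None and getattr(web, "uri", None):
            sources.append((getattr(web, "title", None) or "", web.uri))
    return sources
```

For example, `for title, uri in grounding_sources(response): print(f"{title}: {uri}")` would list the pages behind the Alphabet answer above.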

4. Testing the Model Response (Example)

Test the model with a sample query:

user_question = (
    "Provide information about Tesla in JSON format with the following keys:\n"
    f"- `company_info`: A brief overview of the company and what it does.\n"
    f"- `recent_news`: An array of 2-3 recent news items about the company, each with a `title` and a short `description`.\n"
    f"- `trend_prediction`: An analysis of the stock trend and a suggested target price for the near future."
)

config = GenerateContentConfig(tools=[google_search_tool])

response = client.models.generate_content(
    model=MODEL_ID,
    contents=user_question,
    config=config,
)

try:
    data = json.loads(response.text)
    print(json.dumps(data, indent=4))  # Print nicely formatted JSON
except json.JSONDecodeError:
    print("Error decoding JSON:", response.text)
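While testing, you may land in the `Error decoding JSON` branch even when the answer looks right: in practice the model may wrap its JSON in a Markdown code fence tagged `json`, which `json.loads` rejects. Below is a minimal sketch of a more forgiving parser, assuming that fence format. `parse_insights` and `REQUIRED_KEYS` are hypothetical names; the keys are the ones the prompt above asks for.

```python
import json
import re

# Matches a Markdown code fence (optionally tagged "json") and captures its body.
_FENCE_RE = re.compile(r"```(?:json)?\s*(.*?)\s*```", re.DOTALL | re.IGNORECASE)

# Keys requested in the prompt
REQUIRED_KEYS = ("company_info", "recent_news", "trend_prediction")


def parse_insights(text):
    """Parse the model's reply into a dict, tolerating a Markdown code fence.

    Returns None if the text is empty, is not valid JSON, or lacks any of
    the keys the prompt asks for.
    """
    if not text:
        return None
    match = _FENCE_RE.search(text)
    candidate = match.group(1) if match else text.strip()
    try:
        data = json.loads(candidate)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or any(key not in data for key in REQUIRED_KEYS):
        return None
    return data
```

You could then replace `json.loads(response.text)` with `data = parse_insights(response.text)` and treat `None` as the error case. Because it also checks for `None`, this handles a reply with no text.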

5. Gradio UI Implementation

Create an interactive web interface with Gradio:

import gradio as gr
import json

# Function to get stock insights for a given company name
def get_stock_insights(company):
    if not company:
        return "", "", ""

    # Construct a prompt for the LLM to generate a structured JSON response
    prompt = (
        f"Provide information about {company} in JSON format with the following keys:\n"
        f"- `company_info`: A brief overview of the company and what it does.\n"
        f"- `recent_news`: An array of 2-3 recent news items about the company, each with a `title` and a short `description`.\n"
        f"- `trend_prediction`: An analysis of the stock trend and a suggested target price for the near future."
    )

    # Use the Vertex AI model with Google Search grounding
    config = GenerateContentConfig(tools=[google_search_tool])
    response = client.models.generate_content(
        model=MODEL_ID,
        contents=prompt,
        config=config,
    )

    try:
        data = json.loads(response.text)
        company_info = data.get("company_info", "")

        # Render the news items as a Markdown bullet list
        news_items = data.get("recent_news", [])
        news_string = ""
        for news in news_items:
            news_string += f"- **{news.get('title', 'No Title')}**: {news.get('description', 'No Description')}\n"

        trend_prediction = data.get("trend_prediction", "")
        return company_info, news_string, trend_prediction
    except json.JSONDecodeError:
        return "Error decoding JSON. Please try again.", "", ""

# Define Gradio interface components
company_input = gr.Textbox(label="Company or Stock Ticker", placeholder="e.g. Tesla or TSLA")
company_info_output = gr.Markdown(label="Company Information")
news_info_output = gr.Markdown(label="Recent News")
prediction_info_output = gr.Markdown(label="Trend & Prediction")

# Create the Gradio interface
demo = gr.Interface(
    fn=get_stock_insights,
    inputs=company_input,
    outputs=[company_info_output, news_info_output, prediction_info_output],
    title="
