Building a fully testable Lovable clone agent in Python with Scenario

AI agents are super powerful and impressive. You might have heard of Lovable, for example, the agent that builds websites for you from a single prompt.

Of course, it takes months or years of work to make it as good as they did, but the fundamentals are actually super easy to replicate: take a good LLM coding model, like Claude or Gemini 2.5, give it tools to read and write files, and ask it to carry out a task.

The devil, of course, is in the details. As hands-on experience will show you, you should probably ask the LLM to think through the whole stylesheet on the first interaction, otherwise the website will look "bland" and "basic"; this is what Lovable does, for example. You will also want it to be aware of its tools and to refuse to use them less often, which happens with Gemini sometimes, and you probably have an opinion on which libraries it should use, placeholder images, coding standards, etc.

This is where the challenge begins. Most of the time these learnings accumulate in the prompt, but the prompt is not the initial specification itself, it's just what made things work, nor is it testable. As your agent's workflow, tools and prompt grow in complexity, so do the chances of a small change breaking something that was already working. And retesting everything by hand is a pain in the ass.

This is why the goal of this post is not only to build a Lovable clone, but to make it fully testable!

Let's go! To build this, we are going to use Pydantic AI:

    uv init lovable-clone
    cd lovable-clone
    uv add pydantic-ai

Then we start creating our Lovable clone agent. Create a new file, lovable_agent.py:

    from pydantic_ai import Agent


    class LovableAgent:
        def __init__(self):
            agent = Agent(
                "anthropic:claude-3-5-sonnet-latest",
                system_prompt=f"""
    You are a coding assistant specialized in building whole new websites from scratch.

    You will be given a basic React, TypeScript, Vite, Tailwind and Radix UI template and will work on top of that. Use the components from the "@/components/ui" folder.

    On the first user request for building the application, start by the src/index.css and tailwind.config.ts files to define the colors and general application style.
    Then, start building the website, you can call tools in sequence as much as you want.

    You will be given tools to read file, create file and update file to carry on your work.

    You CAN access local files by using the tools provided, but you CANNOT run the application, do NOT suggest the user that.

    1. Call the read_file tool to understand the current files before updating them or creating new ones
    2. Start building the website, you can call tools in sequence as much as you want
    3. Ask the user for next steps

    After the user request, you will be given the second part of this system prompt, containing the file present on the project using the
    """,
                model_settings={
                    "parallel_tool_calls": False,
                    "temperature": 0.0,
                    "max_tokens": 8192,
                },
            )

The prompt I'm putting here has already been somewhat battle-tested by me, and can take you a long way. It also works better with Claude 3.5 than with 3.7 at the moment, but you can try changing it and see how it goes.

You will want to give it some tools too, just the three most basic ones: read_file, update_file and create_file. Those three are already enough to have a very powerful website builder agent at hand:

    import os
    import shutil
    import tempfile

    template_path = os.path.join(os.path.dirname(__file__), "template")


    class LovableAgent:
        def __init__(self):
            agent = ...

            @agent.tool_plain(
                docstring_format="google", require_parameter_descriptions=True
            )
            def read_file(path: str) -> str:
                """Reads the content of a file.

                Args:
                    path (str): The path to the file to read. Required.

                Returns:
                    str: The content of the file.
                """
                try:
                    with open(os.path.join(self.template_path, path), "r") as f:
                        return f.read()
                except FileNotFoundError:
                    return f"Error: File {path} not found (double check the file path)"

            @agent.tool_plain(
                docstring_format="google", require_parameter_descriptions=True
            )
            def update_file(path: str, content: str):
                """Updates the content of a file.

                Args:
                    path (str): The path to the file to update. Required.
                    content (str): The full file content to write. Required.
                """
                try:
                    with open(os.path.join(self.template_path, path), "w") as f:
                        f.write(content)
                except FileNotFoundError:
                    return f"Error: File {path} not found (double check the file path)"

                return "ok"

            @agent.tool_plain(
                docstring_format="google", require_parameter_descriptions=True
            )
            def create_file(path: str, content: str):
                """Creates a new file with the given content.

                Args:
                    path (str): The path to the file to create. Required.
                    content (str): The full file content to write. Required.
                """
                os.makedirs(
                    os.path.dirname(os.path.join(self.template_path, path)), exist_ok=True
                )
                with open(os.path.join(self.template_path, path), "w") as f:
                    f.write(content)

                return "ok"

            self.agent = agent
            self.history: list[ModelMessage] = []

Notice we also define a template_path at the top. Just like Lovable, we don't really build a whole website from scratch; we start from a React, shadcn and Vite template.
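To see how the three tools behave without involving an LLM, here is a minimal, stdlib-only sketch of the same read/update/create logic run against a temporary directory. The `FileTools` class, the `demo` function and the sample paths are hypothetical names used for illustration; they are not part of pydantic-ai or of the agent above.

```python
import os
import tempfile


class FileTools:
    """Hypothetical stand-in for the agent's tools, bound to one root folder."""

    def __init__(self, root: str):
        self.root = root

    def read_file(self, path: str) -> str:
        try:
            with open(os.path.join(self.root, path), "r") as f:
                return f.read()
        except FileNotFoundError:
            # Errors come back as text so the model can read them and retry.
            return f"Error: File {path} not found (double check the file path)"

    def update_file(self, path: str, content: str) -> str:
        try:
            # Opening with "w" only fails if the parent folder is missing.
            with open(os.path.join(self.root, path), "w") as f:
                f.write(content)
        except FileNotFoundError:
            return f"Error: File {path} not found (double check the file path)"
        return "ok"

    def create_file(self, path: str, content: str) -> str:
        full_path = os.path.join(self.root, path)
        # Create missing parent folders first, e.g. "src/components".
        os.makedirs(os.path.dirname(full_path), exist_ok=True)
        with open(full_path, "w") as f:
            f.write(content)
        return "ok"


def demo() -> list[str]:
    """Run a small read -> create -> update -> read sequence."""
    with tempfile.TemporaryDirectory() as root:
        tools = FileTools(root)
        return [
            tools.read_file("src/index.css"),
            tools.create_file("src/index.css", "body { margin: 0; }"),
            tools.update_file("src/index.css", "body { margin: 4px; }"),
            tools.read_file("src/index.css"),
        ]


if __name__ == "__main__":
    for line in demo():
        print(line)
```

Returning the error as a string instead of raising is the design choice worth noticing: the model sees the message as a normal tool result and can correct the path on its next call.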

Let's create this template so the agent can use it. Follow the shadcn tutorial to start one:

https://ui.shadcn.com/docs/installation/vite

When asked, name the folder template, right next to our Python agent file.

You can test your template individually by running:

    cd template
    npm install
    npm run dev

If it all works, it will work for our agent too, and we are good to continue!

Now, add these methods to the agent:

    from pydantic_ai.messages import ModelMessage, UserPromptPart
    from pydantic_graph import End
    from pydantic_ai.models.openai import OpenAIModel
    from openai.types.chat import ChatCompletionMessageParam


    class LovableAgent:
        # ...

        async def process_user_message(
            self, message: str, template_path: str, debug: bool = False
        ) -> tuple[str, list[ChatCompletionMessageParam]]:
            self.template_path = template_path

            tree = generate_directory_tree(template_path)

            user_prompt = f"""{message}

    {tree}
    """

            async with self.agent.iter(
                user_prompt, message_history=self.history
            ) as agent_run:
                next_node = agent_run.next_node  # start with the first node
                nodes = [next_node]
                while not isinstance(next_node, End):
                    next_node = await agent_run.next(next_node)
                    nodes.append(next_node)

                if not agent_run.result:
                    raise Exception("No result from agent")

                new_messages = agent_run.result.new_messages()
                for message_ in new_messages:
                    for part in message_.parts:
                        if isinstance(part, UserPromptPart) and part.content == user_prompt:
                            part.content = message

                self.history += new_messages

                new_messages_openai_format = await self.convert_to_openai_format(
                    new_messages
                )

                return agent_run.result.data, new_messages_openai_format

        async def convert_to_openai_format(
            self, messages: list[ModelMessage]
        ) -> list[ChatCompletionMessageParam]:
            openai_model = OpenAIModel("any")
            new_messages_openai_format: list[ChatCompletionMessageParam] = []
            for message in messages:
                async for openai_message in openai_model._map_message(message):
                    new_messages_openai_format.append(openai_message)

            return new_messages_openai_format

        @classmethod
        def clone_template(cls):
            temp_path = os.path.join(tempfile.mkdtemp(), "lovable_clone")
            shutil.copytree(template_path, temp_path)
            shutil.rmtree(os.path.join(temp_path, "node_modules"), ignore_errors=True)
            return temp_path

Okay, let's unpack what is going on here. process_user_message is where the execution really happens. It basically just runs the user message, together with the current file list, through our pydantic-ai agent. The rest of the code is all about post-processing the resulting messages.
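One detail that is easy to miss is what happens to the history: the file tree is appended to the prompt for the current turn only, and the stored copy is reverted to the user's original message, presumably so that stale trees do not pile up in later turns. A minimal sketch of that idea, using a hypothetical `Message` dataclass and helper names instead of pydantic-ai's real message types:

```python
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical, simplified message; pydantic-ai's real types are richer."""

    role: str
    content: str


def build_prompt(message: str, tree: str) -> str:
    # What the model sees this turn: the request plus the current file tree.
    return f"{message}\n\n{tree}\n"


def strip_tree(new_messages: list[Message], prompt: str, original: str) -> list[Message]:
    # Before storing the turn, swap the augmented prompt back to the original
    # request, so old trees are not resent on every later turn.
    return [
        Message(m.role, original) if m.role == "user" and m.content == prompt else m
        for m in new_messages
    ]


history: list[Message] = []
request = "make the hero section blue"
prompt = build_prompt(request, ".\n└── src\n    └── index.css")
turn = [Message("user", prompt), Message("assistant", "Done, what next?")]
history += strip_tree(turn, prompt, request)
```

After this runs, `history` holds the short original request, while the model still received the full prompt with the tree for that turn.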

We then convert the messages to the OpenAI format. This is very useful because it is the de facto standard format for basically all LLMs, so we can pass it back when running tests and the testing agent will understand it.
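For reference, this is roughly what one tool-calling round trip looks like in the OpenAI chat format: plain dictionaries with a `role`, where assistant tool calls carry a JSON-encoded `arguments` string and tool results point back to the call via `tool_call_id`. The sample messages and the `called_tools` helper below are illustrative assumptions, not output captured from the agent.

```python
import json

# Hand-written sample conversation in the OpenAI chat format.
messages = [
    {"role": "user", "content": "build a landing page"},
    {
        "role": "assistant",
        "content": None,
        "tool_calls": [
            {
                "id": "call_1",
                "type": "function",
                "function": {
                    "name": "read_file",
                    # Arguments are a JSON string, not a nested dict.
                    "arguments": json.dumps({"path": "src/index.css"}),
                },
            }
        ],
    },
    {"role": "tool", "tool_call_id": "call_1", "content": "body { margin: 0; }"},
]


def called_tools(messages: list[dict]) -> list[tuple[str, dict]]:
    """Return (tool name, parsed arguments) for every assistant tool call."""
    calls = []
    for message in messages:
        for call in message.get("tool_calls") or []:
            function = call["function"]
            calls.append((function["name"], json.loads(function["arguments"])))
    return calls
```

A helper like this makes it possible to assert on tool usage directly, for example that `read_file` was called before any `update_file`.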

Finally, the missing piece: add this file tree helper to the file:

    from typing import List, Set, Optional


    def generate_directory_tree(path: str, ignore_dirs: Optional[Set[str]] = None) -> str:
        """
        Generate a visual representation of the directory structure.

        Args:
            path: The path to the directory to visualize
            ignore_dirs: Set of directory names to ignore
                (defaults to {"node_modules", ".git", ".venv"})

        Returns:
            A formatted string representing the directory tree
        """
        if ignore_dirs is None:
            ignore_dirs = {"node_modules", ".git", ".venv"}

        # Normalize the path
        root_path = os.path.abspath(os.path.expanduser(path))

        # Start the tree with the root
        tree_str = ".\n"

        # Get all directories and files
        items = _get_directory_contents(root_path, ignore_dirs)

        # Generate the tree representation, passing ignore_dirs along so nested
        # directories are filtered with the same set as the top level
        tree_str += _format_tree(items, "", root_path, ignore_dirs)

        return tree_str

    def _get_directory_contents(path: str, ignore_dirs: Set[str]) -> List[str]:
        """
        Get all files and directories in a path, sorted with directories first

        Args:
            path: The directory path to scan
            ignore_dirs: Set of directory names to ignore

        Returns:
            List of paths relative to the provided path
        """
        items = []

        try:
            # List all files and directories
            all_items = os.listdir(path)

            # Sort items (directories first)
            dirs = []
            files = []

            for item in sorted(all_items):
                full_path = os.path.join(path, item)
                if os.path.isdir(full_path):
                    if item not in ignore_dirs:
                        dirs.append(item)
                else:
                    files.append(item)

            items = dirs + files
        except (PermissionError, FileNotFoundError):
            pass

        return items

    def _format_tree(items: List[str], prefix: str, path: str, ignore_dirs: Optional[Set[str]] = None) -> str:
        """
        Format the directory contents as a tree structure with proper indentation

        Args:
            items: List of filenames or directory names
            prefix: Current line prefix for indentation
            path: Current directory path
            ignore_dirs: Set of directory names to ignore

        Returns:
            Formatted tree string for the current level
        """
        if ignore_dirs is None:
            ignore_dirs = {"node_modules"}

        tree_str = ""
        count = len(items)

        for i, item in enumerate(items):
            # Determine if this is the last item at this level
            is_last = i == count - 1

            # Choose the appropriate connector symbols; both prefixes are four
            # characters wide so nested levels line up under their parent
            conn = "└── " if is_last else "├── "
            next_prefix = "    " if is_last else "│   "

            # Add the current item to the tree
            tree_str += f"{prefix}{conn}{item}\n"

            # Recursively process subdirectories
            item_path = os.path.join(path, item)
            if os.path.isdir(item_path) and item not in ignore_dirs:
                sub_items = _get_directory_contents(item_path, ignore_dirs)
                if sub_items:
                    tree_str += _format_tree(sub_items, prefix + next_prefix, item_path, ignore_dirs)

        return tree_str

That's it! The Lovable clone agent should now run end to end if you create an instance of it and send it a request.

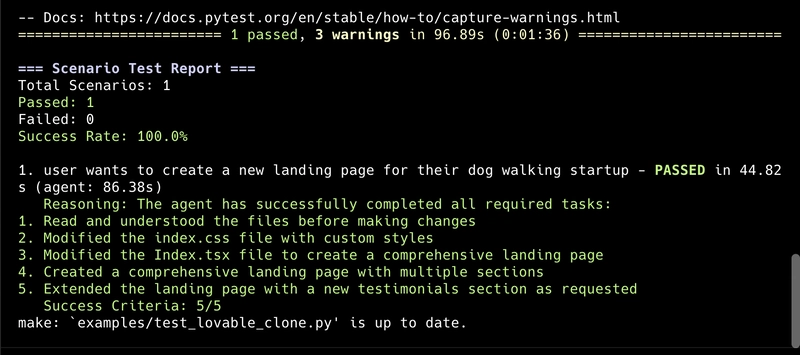

However, rather than trying it out by hand, we are going to exercise it through automated tests, using Scenario:

    uv add pytest langwatch-scenario

Writing a test for it is the easiest thing. Create a new file, tests/test_lovable_agent.py, and paste this:

    import pytest

    from lovable_agent import LovableAgent
    from scenario import Scenario, TestingAgent

    Scenario.configure(testing_agent=TestingAgent(model="anthropic/claude-3-5-sonnet-latest"))


    @pytest.mark.agent_test
    @pytest.mark.asyncio
    async def test_lovable_clone():
        template_path = LovableAgent.clone_template()
        print(f"\n-> Lovable clone template path: {template_path}\n")

        # Create the agent once so its message history carries over between turns
        agent = LovableAgent()

        async def lovable_agent(message: str, context):
            _, messages = await agent.process_user_message(message, template_path)

            return {"messages": messages}

        scenario = Scenario(
            "user wants to create a new landing page for their dog walking startup",
            agent=lovable_agent,
            strategy="send the first message to generate the landing page, then a single follow up request to extend it, then give your final verdict",
            success_criteria=[
                "agent reads the files before going ahead and making changes",
                "agent modified the index.css file",
                "agent modified the Index.tsx file",
                "agent created a comprehensive landing page",
                "agent extended the landing page with a new section",
            ],
            failure_criteria=[
                "agent says it can't read the file",
                "agent produces incomplete code or is too lazy to finish",
            ],
            max_turns=5,
        )

        result = await scenario.run()

        print(f"\n-> Done, check the results at: {template_path}\n")

        assert result.success

That's it. Now run it with:

    uv run pytest tests/test_lovable_agent.py

You will see the agents talking to each other: one asks for a dog walking website, then asks for updates to it. It's super fun to watch. The testing agent will keep chatting until all the goals in the success criteria are reached, or until any of the failure criteria is hit. With Claude 3.5 and this prompt, it should work every time.
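The stopping rule can be pictured with a small sketch. This is not Scenario's implementation, only an illustration of the logic as described in this post: fail as soon as any failure criterion is hit, succeed once every success criterion is met, and give up after `max_turns`. The `Verdict` type, the `judge` callback and `run_until_verdict` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Verdict:
    """Hypothetical per-turn judgement: criteria observed so far."""

    met: set[str]
    failed: set[str]


def run_until_verdict(
    judge: Callable[[int], Verdict],
    success_criteria: list[str],
    max_turns: int,
) -> bool:
    for turn in range(max_turns):
        verdict = judge(turn)
        if verdict.failed:
            return False  # any failure criterion ends the run immediately
        if set(success_criteria) <= verdict.met:
            return True  # all success criteria have been observed
    return False  # ran out of turns without meeting every criterion


criteria = ["modified index.css", "extended the landing page"]


def fake_judge(turn: int) -> Verdict:
    # Pretend one more criterion is satisfied on each turn.
    return Verdict(met=set(criteria[: turn + 1]), failed=set())


passed = run_until_verdict(fake_judge, criteria, max_turns=5)
```

With two criteria that are met one per turn, the run passes on the second turn; with `max_turns=1` it would fail, which is why the turn limit needs some headroom over the number of follow-ups in the strategy.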

Then you can go to the /tmp folder that was printed and see the final result:

    cd /tmp/path-given
    npm install
    npm run dev
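Besides looking at the result in the browser, some criteria, such as "agent modified the index.css file", can also be checked deterministically by comparing the cloned folder against the original template. A minimal sketch, assuming both are plain directories on disk; `changed_files` is a hypothetical helper, not part of Scenario:

```python
import filecmp
import os


def changed_files(original: str, modified: str) -> set[str]:
    """Relative paths that are new or differ in `modified` compared to `original`."""
    changed = set()
    for root, dirs, files in os.walk(modified):
        # Skip dependencies; pruning in place stops os.walk from descending.
        dirs[:] = [d for d in dirs if d != "node_modules"]
        for name in files:
            new_path = os.path.join(root, name)
            rel = os.path.relpath(new_path, modified)
            old_path = os.path.join(original, rel)
            # A file counts as changed if it is new or its bytes differ.
            if not os.path.isfile(old_path) or not filecmp.cmp(
                old_path, new_path, shallow=False
            ):
                changed.add(rel.replace(os.sep, "/"))
    return changed
```

Inside the test, an assertion such as `"src/index.css" in changed_files(original_template, template_path)` could then complement the LLM-judged criteria, where `original_template` stands for the path of the untouched template folder.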

I got something like this:
