Building a Dynamic Prompt Engine for an AI SaaS - TaxJoy (Next.js + Vercel AI SDK)

When we started building TaxJoy — an AI-powered tax assistant for CPAs — I thought prompt design would be simple.

It wasn’t.

As soon as we added multiple user types (CPAs and their clients), different workflows (intake forms, tax document summaries, audit checks), and various tones (professional, approachable, reassuring), one static system prompt quickly became a giant bottleneck.

That’s when we realized:

We needed a dynamic, scalable prompt engine.

Here’s how we designed and implemented it.

⸻

The Problem: Static Prompts Don’t Scale

In early versions of TaxJoy, our AI chat engine worked fine with a basic hardcoded system prompt.

But as features grew, real-world demands surfaced:

- CPAs needed precise, technical responses.

- Clients needed simpler, friendlier explanations.

- Intake forms needed directive prompts (“Request W-2s, dependents, 1099s”).

- Summarizations needed structured, analytical outputs.

One giant "catch-all" prompt?

Impossible to manage cleanly.

Every small change risked breaking something else.

⸻

The Solution: Dynamic Prompt Injection

We built a centralized Prompt Engine inside TaxJoy to dynamically generate prompts based on:

✅ User role (CPA or Client)

✅ Current intent (Chat, Fill Forms, Summarize, Validate)

✅ Optional tone (Approachable, Professional, Reassuring)

Every AI call dynamically injects a fresh, context-specific system prompt — based on clean, structured parameters.

Simple inputs → Controlled, scalable AI behavior.

⸻

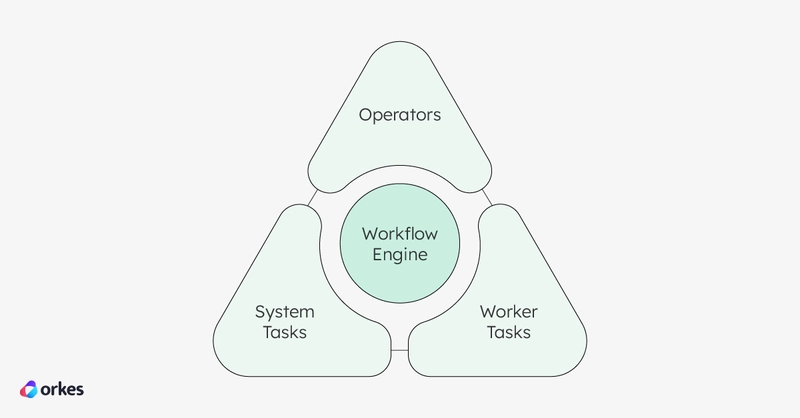

System Architecture Overview

Directory Structure:

    /app
      /api
        /chat
          route.ts
    /lib
      /prompt-engine
        index.ts
        prompts.ts
      /db
        schema.ts

High Level Flow:

- Client or CPA interacts with AI assistant.

- Frontend sends role, intent, and optional tone to backend /api/chat/route.ts.

- Backend dynamically generates the system prompt using /lib/prompt-engine/index.ts.

- Backend calls streamText() from Vercel AI SDK with the injected prompt.

Visual Diagram: (explained in text)

User (CPA or Client)

↓

Frontend (Next.js App Router)

↓

/api/chat/route.ts

↓

/lib/prompt-engine/index.ts → Generates prompt

↓

Vercel AI SDK → LLM Response

⸻

Implementation Details

1. Define Roles, Intents, and Tones

    // /lib/prompt-engine/prompts.ts
    export type Role = 'cpa' | 'client';
    export type Intent = 'chat' | 'fill' | 'summarize' | 'validate';
    export type Tone = 'approachable' | 'professional' | 'reassuring';

2. Generate Dynamic Prompts

    // /lib/prompt-engine/index.ts
    import type { Role, Intent, Tone } from './prompts';

    interface GeneratePromptParams {
      role: Role;
      intent: Intent;
      tone?: Tone; // accepted for forward compatibility; not applied in this version
    }

    // Returns the system prompt for a role/intent pair.
    // 'chat' and 'validate' have no dedicated case and fall through to each role's default.
    export function generatePrompt({ role, intent, tone }: GeneratePromptParams): string {
      if (role === 'cpa') {
        switch (intent) {
          case 'fill':
            return "Assist the CPA by filling tax forms based on client data. Be precise and technical.";
          case 'summarize':
            return "Summarize uploaded tax documents for CPA review. Be structured and professional.";
          default:
            return "Answer CPA tax preparation questions clearly and concisely.";
        }
      }

      if (role === 'client') {
        switch (intent) {
          case 'fill':
            return "Guide the client through uploading and filling tax documents. Be friendly and reassuring.";
          case 'summarize':
            return "Summarize the client's documents in simple terms they can understand.";
          default:
            return "Answer client tax questions in an approachable, simple manner.";
        }
      }

      // Reached only when an unexpected role arrives at runtime (for example from unvalidated JSON).
      return "You are a helpful AI assistant guiding users through tax preparation.";
    }
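The function above accepts tone but never reads it. Here is a minimal sketch of how an optional tone could be layered on top of a base prompt. The `toneInstructions` map, its wording, and the `withTone` helper are hypothetical names for illustration, not TaxJoy's actual code.

```typescript
type Tone = 'approachable' | 'professional' | 'reassuring';

// Hypothetical tone instructions; the wording is illustrative only.
const toneInstructions: Record<Tone, string> = {
  approachable: 'Use plain language and a warm, conversational style.',
  professional: 'Use a formal, precise style.',
  reassuring: 'Acknowledge concerns and keep a calm, supportive style.',
};

// Appends a tone instruction to a base prompt when a tone is supplied.
// Without a tone, the base prompt is returned unchanged.
function withTone(basePrompt: string, tone?: Tone): string {
  if (!tone) return basePrompt;
  return `${basePrompt} ${toneInstructions[tone]}`;
}
```

Keeping tone as a suffix means the role/intent prompts stay untouched, so tone can be added or dropped without rewriting them.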

3. Inject Prompt During AI SDK Call

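    // /app/api/chat/route.ts
    import { streamText } from 'ai';
    import { generatePrompt } from '@/lib/prompt-engine';

    export async function POST(req: Request) {
      // NOTE: in AI SDK 4.x, `model` must be a provider model instance.
      // Map the client-supplied value to one server-side; that mapping is left out here.
      const { role, intent, tone, messages, model } = await req.json();

      // Build the system prompt for this specific request.
      const systemPrompt = generatePrompt({ role, intent, tone });

      const result = await streamText({
        model,
        system: systemPrompt,
        messages,
      });

      // Stream the result to the client (AI SDK 4.x helper); `new Response(result)` would not stream it.
      return result.toDataStreamResponse();
    }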
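Since role, intent, and tone arrive in the request body, they are untrusted strings at runtime even though the TypeScript types look strict. Here is a minimal sketch of narrowing them before they reach generatePrompt. The `parsePromptParams` and `isOneOf` helpers are hypothetical, and rejecting unknown roles while silently dropping unknown tones is an assumption, not something the original route does.

```typescript
const ROLES = ['cpa', 'client'] as const;
const INTENTS = ['chat', 'fill', 'summarize', 'validate'] as const;
const TONES = ['approachable', 'professional', 'reassuring'] as const;

type Role = (typeof ROLES)[number];
type Intent = (typeof INTENTS)[number];
type Tone = (typeof TONES)[number];

interface PromptParams {
  role: Role;
  intent: Intent;
  tone?: Tone;
}

// Type guard: true when `value` is one of the allowed string literals.
function isOneOf<T extends string>(allowed: readonly T[], value: unknown): value is T {
  return typeof value === 'string' && (allowed as readonly string[]).includes(value);
}

// Returns validated params, or null when role or intent is missing or unknown.
// An unknown tone is dropped rather than rejected, since tone is optional.
function parsePromptParams(body: unknown): PromptParams | null {
  if (typeof body !== 'object' || body === null) return null;
  const { role, intent, tone } = body as Record<string, unknown>;
  if (!isOneOf(ROLES, role) || !isOneOf(INTENTS, intent)) return null;
  return isOneOf(TONES, tone) ? { role, intent, tone } : { role, intent };
}
```

In a route handler, a null result would typically map to a 400 response before any model call is made.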

⸻

Why We Chose This Architecture

✅ Single source of truth for prompts — Easy updates without touching multiple places.

✅ Role/intent-driven control — Maintain high-quality responses across different workflows.

✅ Extensible — Easy to add new roles (e.g., "admin"), intents (e.g., "audit check"), or tones later.

✅ Scales cleanly — No brittle if/else chains scattered in business logic.
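To make the extensibility point concrete, here is a sketch of the same prompts held in a typed lookup table instead of switch statements. This is an alternative layout, not the implementation shown earlier; `PROMPTS` and `lookupPrompt` are hypothetical names. The 'chat' and 'validate' entries reuse each role's default prompt, mirroring the default branches of the switch version.

```typescript
type Role = 'cpa' | 'client';
type Intent = 'chat' | 'fill' | 'summarize' | 'validate';

const cpaDefault = 'Answer CPA tax preparation questions clearly and concisely.';
const clientDefault = 'Answer client tax questions in an approachable, simple manner.';

// Record<Role, Record<Intent, string>> makes the compiler reject a table
// that is missing any role or intent, so new union members cannot be forgotten.
const PROMPTS: Record<Role, Record<Intent, string>> = {
  cpa: {
    chat: cpaDefault,
    fill: 'Assist the CPA by filling tax forms based on client data. Be precise and technical.',
    summarize: 'Summarize uploaded tax documents for CPA review. Be structured and professional.',
    validate: cpaDefault, // same fallback as the switch's default branch
  },
  client: {
    chat: clientDefault,
    fill: 'Guide the client through uploading and filling tax documents. Be friendly and reassuring.',
    summarize: "Summarize the client's documents in simple terms they can understand.",
    validate: clientDefault, // same fallback as the switch's default branch
  },
};

// Looks up the prompt for a role/intent pair.
function lookupPrompt(role: Role, intent: Intent): string {
  return PROMPTS[role][intent];
}
```

With this layout, adding a role such as "admin" to the Role union produces a compile error until its prompts are filled in.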

⸻

Challenges We Hit

- Deciding how "granular" prompts should be per task (we kept it lean: 4 core intents to start).

- Balancing human-readable prompt writing vs. overly abstract generation.

- Dealing with context switching: sometimes CPAs shift roles mid-conversation (solution: regenerate the prompt from the current intent on each major tool call).
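Refreshing the prompt around tool calls could look roughly like the sketch below: each tool maps to an intent, and the intent for the next model call is re-derived whenever a tool runs. The tool names (`fillForm`, `summarizeDocument`, `validateReturn`) and the `nextIntent` helper are hypothetical; the article does not list TaxJoy's actual tools.

```typescript
type Intent = 'chat' | 'fill' | 'summarize' | 'validate';

// Hypothetical tool-to-intent mapping. A Map is used instead of a plain object
// so that names like "toString" cannot match inherited properties.
const TOOL_INTENTS = new Map<string, Intent>([
  ['fillForm', 'fill'],
  ['summarizeDocument', 'summarize'],
  ['validateReturn', 'validate'],
]);

// Returns the intent to use for the next model call.
// Unknown or absent tools keep the current intent so the prompt stays stable.
function nextIntent(current: Intent, toolName?: string): Intent {
  if (toolName === undefined) return current;
  return TOOL_INTENTS.get(toolName) ?? current;
}
```

The returned intent would then be passed to the prompt generator before the next model call, so the system prompt follows what the user is doing rather than how the conversation started.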

⸻

Lessons Learned

- Treat prompts like software, not static content. Modular, version-controlled, testable.

- Start lean, expand later. Role + intent solved 90% of problems. Tone handling came only after user feedback.

- Prompt engineering is architecture, not just writing. Think scaling, not just "better words."
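One way to make "testable" concrete is a small check that every role/intent combination yields a non-empty prompt. The sketch below assumes a generator with a `{ role, intent }` parameter object like the one shown earlier; `findEmptyPrompts` and the `PromptFn` type are hypothetical helpers, and any test runner could wrap the call.

```typescript
type PromptFn = (params: { role: string; intent: string }) => string;

// Returns "role/intent" labels for every combination whose prompt is empty
// or whitespace-only. An empty array means full coverage.
function findEmptyPrompts(generate: PromptFn, roles: string[], intents: string[]): string[] {
  const empty: string[] = [];
  for (const role of roles) {
    for (const intent of intents) {
      if (generate({ role, intent }).trim() === '') {
        empty.push(`${role}/${intent}`);
      }
    }
  }
  return empty;
}

// Usage with a stand-in generator that forgets one combination.
const stubGenerator: PromptFn = ({ role, intent }) =>
  role === 'client' && intent === 'validate' ? '' : `Prompt for ${role} doing ${intent}.`;

const missing = findEmptyPrompts(stubGenerator, ['cpa', 'client'], ['chat', 'fill', 'summarize', 'validate']);
console.log(missing);
```

Run in CI, a check like this catches a forgotten prompt at review time instead of in a user's chat session.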

⸻

If You're Building an AI SaaS...

Whether it’s taxes, healthcare, legal, or customer support:

The smarter your prompt system architecture, the smarter your entire product feels.

Don’t treat your LLM like a magic black box.

Treat it like a smart intern who needs perfect instructions — customized for the user and the moment.

That’s what dynamic prompting unlocks.

⸻

Want to See This in Action?

We’re launching TaxJoy early access: an AI-powered tax assistant helping CPAs save 40+ hours per month during tax season.

Follow my journey: https://linkedin.com/in/bobbyhalljr

Or DM me if you’re curious how we’re scaling AI workflows in production.
