My Learning Notes: Choosing the Right AI Model and Hardware


The Core Challenge: Model vs. Hardware

At its heart, the relationship is simple:

- Bigger, more capable models generally require more powerful hardware.

- The most critical hardware resource for running Large Language Models (LLMs) is usually GPU Memory (VRAM).

If you try to run a huge model on a GPU with too little VRAM, it either won't work or will be incredibly slow (if it tries to use system RAM, which is much slower for these tasks).

Understanding the Model Side

When looking at models, especially on places like Hugging Face or when using tools like Ollama, a few key things determine their resource needs:

- Parameter Count (e.g., 7B, 13B, 70B):

  - This is roughly the "size" or "complexity" of the model's brain. 'B' stands for billions of parameters.

  - **More parameters generally mean the model _might_ be smarter**, better at understanding nuance, and more knowledgeable.

  - **BUT, more parameters directly translate to needing more VRAM and disk space.**

- Quantization (e.g., FP16, Q8_0, Q5_K_M, Q4_0):

  - Think of this like compressing a file. Quantization reduces the precision used to store the model's parameters (its numbers).

  - **Why do it?** To make the model smaller! A quantized model takes up less disk space and, crucially, less VRAM. This allows bigger models (more parameters) to run on less powerful hardware.

  - **The Trade-off:** Reducing precision _can_ reduce the quality and accuracy of the model's output. It might make slightly dumber mistakes or lose some nuance. The goal is to find a balance.

  - **Common Types:**

    - `FP16` (16-bit floating point): Half precision. Often the baseline before quantization. Good quality, but larger VRAM needs.

    - `Q8_0` (Quantized 8-bit): Uses about 8 bits per parameter. Generally very little quality loss compared to FP16, at roughly half the size.

    - `Q4_K_M`, `Q5_K_M`, etc. (Quantized 4-bit, 5-bit, etc.): Use even fewer bits. They offer significant size reduction, making larger models accessible on consumer GPUs. `_K_M` variants are often preferred as they use clever techniques to maintain quality better than simpler `_0` or `_1` types at the same bit level.

    - `Q2`, `Q3` (Quantized 2/3-bit): Extreme quantization. Very small, but often come with a significant and sometimes unpredictable drop in quality.

- Model Version/Architecture:

  - Newer models (like Qwen 2.5 vs Qwen 1.5, or Llama 3 vs Llama 2) often perform better than older models _of the same size_. This is usually thanks to better training techniques, more data, or improved architectures.

- Training vs. Inference:

  - Inference: Just running the model to get answers. This is what most people do locally. VRAM needs depend mainly on model weights and conversation context (KV Cache).

  - Training/Fine-tuning: Teaching the model new things or adapting it. This requires much more VRAM because you need to store not just the weights, but also gradients, optimizer states, and the intermediate activations kept for the backward pass. We won't focus much on training here, as inference is the more common starting point.
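To get a feel for how large the gap between inference and training can be, here is a rough sketch. It assumes full fine-tuning with the Adam optimizer in mixed precision, using a commonly quoted budget of about 16 bytes per parameter. That budget is an assumption for illustration, not something stated in these notes, and it leaves out activations entirely.

```python
def inference_weights_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Memory for the weights alone (FP16 by default), in GB."""
    return params_billion * bytes_per_param


def full_finetune_gb(params_billion: float) -> float:
    """Rough memory for weights + gradients + Adam states, in GB.

    Assumed per-parameter budget (mixed-precision training with Adam):
      2 bytes FP16 weights + 2 bytes FP16 gradients
      + 4 bytes FP32 master weights
      + 4 bytes Adam momentum + 4 bytes Adam variance = 16 bytes
    Activations are NOT included and can add a lot on top.
    """
    bytes_per_param = 2 + 2 + 4 + 4 + 4
    return params_billion * bytes_per_param


if __name__ == "__main__":
    for size in (7, 13, 70):
        print(
            f"{size}B: inference ~{inference_weights_gb(size):.0f} GB, "
            f"full fine-tune ~{full_finetune_gb(size):.0f} GB (before activations)"
        )
```

Under these assumptions a 7B model goes from about 14 GB of FP16 weights to over 100 GB for full fine-tuning, which is why parameter-efficient methods exist and why these notes stick to inference.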

Understanding the Hardware Side (Especially VRAM for Inference)

So, how much VRAM do you actually need to run a model (inference)? It mainly comes down to storing a few key things on the GPU:

- Model Weights: This is usually the biggest chunk. The VRAM needed depends directly on the parameter count and the quantization level.

  - **Rough Calculation:** `VRAM for Weights (GB) ≈ (Number of Parameters in Billions * Bits per Parameter) / 8`

  - _Example (7 Billion Parameter Model):_

    - `FP16 (16-bit)`: (7 \* 16) / 8 = 14 GB

    - `Q8_0 (8-bit)`: (7 \* 8) / 8 = 7 GB

    - `Q4_K_M (≈4-bit)`: (7 \* 4) / 8 = 3.5 GB

    - `Q2_K (≈2-bit)`: (7 \* 2) / 8 = 1.75 GB

  - Real GGUF files run somewhat larger than these nominal figures, because each block of quantized weights also stores its own scale factors. Treat the numbers above as a lower bound.

- KV Cache (Key-Value Cache): This is memory used to keep track of the conversation context. Without it, the model would have to reprocess the entire chat history for every single new token it generates!

  - **Its size depends heavily on:**

    - **Context Length:** How much conversation history the model can handle (e.g., 4096 tokens, 32k tokens). Longer context = bigger KV cache needed.

    - **Batch Size:** How many requests you're processing at the same time. Each simultaneous request needs its own KV cache.

  - **Impact:** With long contexts the KV cache can take up several GB of VRAM, sometimes even more than the model weights. This is often the bottleneck when trying to run models with long context windows or handle multiple users.

- Overhead: Some VRAM is always used by the GPU driver, the model loading libraries (like llama.cpp used by Ollama), and the inference engine itself. Budget maybe 1-2GB+ for this.
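The weight formula above, together with a common back-of-the-envelope formula for the KV cache, can be sketched in Python. The KV cache formula (2 tensors × layers × KV heads × head dimension × tokens × batch × bytes per value) is not from these notes. The shape numbers used in the example (32 layers, 32 or 8 KV heads, head dimension 128) are assumptions for typical 7B-class models, and a 16-bit cache is assumed. Check the model's config for the real values.

```python
def weights_gb(params_billion: float, bits_per_param: float) -> float:
    """Nominal weight memory in GB: (params in billions * bits) / 8."""
    return params_billion * bits_per_param / 8


def kv_cache_gb(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    context_tokens: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,  # assumes a 16-bit KV cache
) -> float:
    """Approximate KV cache size in GB (1 GB = 1e9 bytes)."""
    # The leading 2 counts one key tensor and one value tensor per layer.
    total_bytes = (
        2 * n_layers * n_kv_heads * head_dim
        * context_tokens * batch_size * bytes_per_value
    )
    return total_bytes / 1e9


if __name__ == "__main__":
    print(f"7B weights at 4 bits: {weights_gb(7, 4):.2f} GB")
    # Assumed shapes: full multi-head attention vs. grouped-query attention.
    print(f"KV, 32 KV heads, 4096 tokens:  {kv_cache_gb(32, 32, 128, 4096):.2f} GB")
    print(f"KV,  8 KV heads, 4096 tokens:  {kv_cache_gb(32, 8, 128, 4096):.2f} GB")
    print(f"KV, 32 KV heads, 32768 tokens: {kv_cache_gb(32, 32, 128, 32768):.2f} GB")
```

With these assumed shapes, a 4096-token context costs roughly 0.5 to 2 GB, while a 32k context on the same model can exceed the size of the 4-bit weights.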

Putting it Together (Example Estimate):

Let's try to estimate VRAM for running a 7B Q4_K_M model (≈4-bit) with a standard 4096 token context length for a single user:

- Weights: ≈ 3.5 GB

- KV Cache (Estimate for 4096 context): Roughly 0.5-2 GB with a 16-bit cache (this varies a lot with the architecture; models that share key/value heads across attention heads sit at the low end)

- Overhead: ≈ 1.5 GB

- Total Estimated VRAM: 3.5 + 2 (upper estimate) + 1.5 = ~7 GB

Key Takeaway: Even a relatively small 7B model, quantized to 4-bit, can come close to filling a lower-VRAM GPU (like an 8GB card) once you factor in the KV cache for a decent context length. A 16GB card leaves room for longer contexts or a larger model, while 24GB offers more breathing room. Running multiple requests concurrently would need even more VRAM, primarily due to multiple KV caches.
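Because the KV cache term is the least certain part of this kind of estimate, it can help to sweep it and see which card size each total lands on. The sketch below is only an illustration: the card sizes, the 1.5 GB overhead default, and the KV cache values in the loop are assumptions, and a model that "fits" exactly leaves no headroom.

```python
CARD_SIZES_GB = (8, 16, 24)  # assumed consumer card sizes


def total_vram_gb(weights_gb: float, kv_cache_gb: float, overhead_gb: float = 1.5) -> float:
    """Sum the three components discussed above."""
    return weights_gb + kv_cache_gb + overhead_gb


def smallest_card_gb(total_gb: float, cards=CARD_SIZES_GB):
    """Return the smallest card that holds the total, or None if none does."""
    for card in sorted(cards):
        if total_gb <= card:
            return card
    return None


if __name__ == "__main__":
    weights = 3.5  # 7B model at a nominal 4 bits per parameter
    for kv in (0.5, 2.0, 6.0):
        total = total_vram_gb(weights, kv)
        print(f"KV cache {kv:>4} GB -> total {total:>5.1f} GB -> "
              f"smallest card: {smallest_card_gb(total)} GB")
```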

Making the Choice: Common Trade-offs

Based on community discussions and benchmarks, here are some general pointers I've picked up when VRAM is limited:

- More Parameters vs. Less Quantization?

  - General wisdom suggests that a larger model with more quantization often performs better than a smaller model with less quantization, up to a point. For example, a 14B model at Q4 might be better than a 7B model at Q8 or FP16, assuming they fit in your VRAM.

  - Sweet Spot: Quantization levels like `Q4_K_M` and `Q5_K_M` seem to offer a great balance of size reduction and performance preservation for many models.

  - Avoid Extremes: `Q2` and `Q3` quantizations can lead to significant performance drops and unpredictable behavior. It might be better to run a smaller model at Q4 than a larger one at Q2. Diminishing returns often hit hard above Q5 – the quality gain from Q6 or Q8 might not be worth the extra VRAM unless you have specific needs and plenty of memory.

- Newer vs. Older Models: As mentioned, newer architectures or versions trained on more data (like Gemma 2 vs Gemma 1, or Llama 3 vs Llama 2) often provide better results even if the parameter count is similar or slightly smaller than an older model.

- Accuracy vs. Speed: Smaller models and more heavily quantized models generally run faster (more tokens generated per second). You trade off some potential quality for speed.
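The pointers above can be turned into a tiny selection sketch: among the options that fit, prefer more parameters first and more bits second, and never go below 4 bits. Everything here is a hypothetical illustration. The candidate sizes, the nominal bit counts, and the 3 GB reserved for KV cache and overhead are assumptions, and the preference rule is only one reading of the community advice.

```python
QUANT_BITS = {"Q4": 4, "Q5": 5, "Q8": 8, "FP16": 16}  # nominal bits per parameter


def pick_model(vram_gb: float, reserve_gb: float = 3.0, sizes_b=(7, 14), min_bits: int = 4):
    """Pick a (size_in_billions, quant) pair that fits the VRAM budget.

    reserve_gb is an assumed allowance for KV cache and overhead.
    Returns None if nothing fits.
    """
    budget = vram_gb - reserve_gb
    options = [
        (size, name)
        for size in sizes_b
        for name, bits in QUANT_BITS.items()
        if bits >= min_bits and size * bits / 8 <= budget
    ]
    if not options:
        return None
    # Prefer the larger model; break ties with the higher-precision quant.
    return max(options, key=lambda opt: (opt[0], QUANT_BITS[opt[1]]))


if __name__ == "__main__":
    for vram in (4, 8, 12, 24):
        print(f"{vram} GB card -> {pick_model(vram)}")
```

On an assumed 12 GB card this picks the 14B model at Q5 over the 7B model at Q8, which mirrors the "bigger model, more quantization" advice.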

Don't Forget System RAM and CPU!

While VRAM is critical, your regular computer memory (RAM) and processor (CPU) still play important roles:

- System RAM:

  - Needed to initially load the model file from disk before transferring it to VRAM.

  - Used by the operating system, background tasks, and the application serving the model (like Ollama or a FastAPI app).

  - Handles data processing (like reading input text).

  - Rule of Thumb: Having at least as much RAM as the unquantized size of the model is a safe starting point (e.g., ~14GB RAM for a 7B model, so 16GB minimum, 32GB better). You'll need more if processing large datasets or handling many requests.

- CPU:

  - Orchestrates the whole process.

  - Handles tasks like text tokenization (breaking words into pieces the model understands), running the web server, managing requests.

  - A reasonably modern multi-core CPU is needed, but for inference, the GPU is usually the bottleneck. (For training, a faster CPU becomes more important.)
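The RAM rule of thumb above is simple enough to write down. This sketch assumes the unquantized model is FP16 (2 bytes per parameter) and that RAM comes in the common totals listed. Both are assumptions for illustration.

```python
COMMON_RAM_SIZES_GB = (8, 16, 32, 64, 128, 256)  # assumed typical RAM totals


def suggested_ram_gb(params_billion: float, bytes_per_param: float = 2.0):
    """Return (minimum, comfortable) RAM sizes for a model of this size.

    minimum     = smallest common size >= the unquantized model size
    comfortable = the next size up, if there is one
    """
    needed = params_billion * bytes_per_param
    fitting = [size for size in COMMON_RAM_SIZES_GB if size >= needed]
    if not fitting:
        raise ValueError(f"model needs ~{needed:.0f} GB, beyond the listed sizes")
    minimum = fitting[0]
    comfortable = fitting[1] if len(fitting) > 1 else fitting[0]
    return minimum, comfortable


if __name__ == "__main__":
    for size in (7, 13, 70):
        print(f"{size}B -> {suggested_ram_gb(size)}")
```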

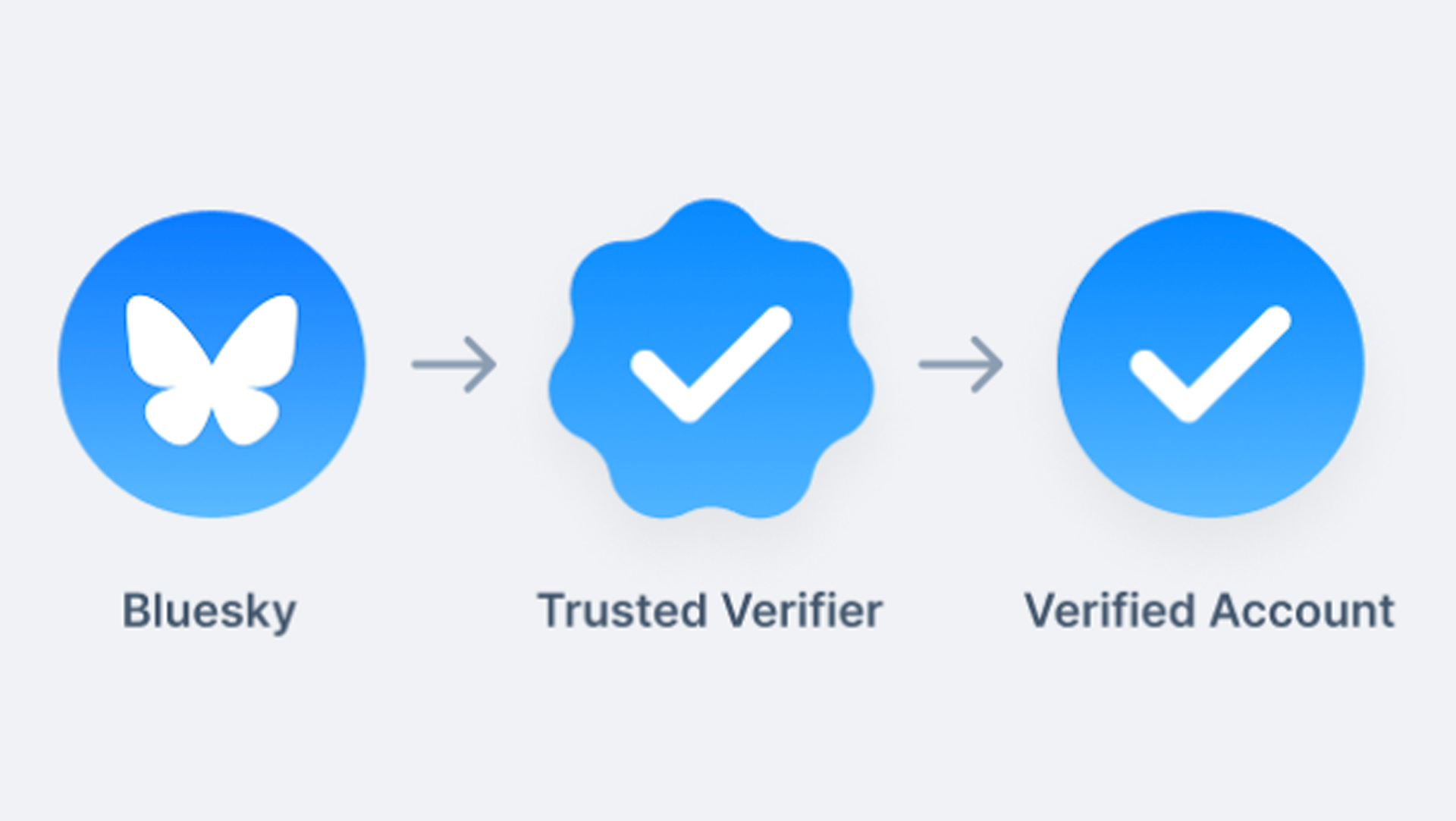

Tools Matter Too (Briefly)

Tools like Ollama make running models easy. More advanced inference engines like vLLM are specifically designed for high performance (more requests per second, better VRAM usage via techniques like PagedAttention), but might be more complex to set up. For starting out, Ollama is great. If you need maximum performance later, exploring alternatives like vLLM might be worthwhile.
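As a small illustration of how little code a first experiment with Ollama needs, here is a sketch that talks to its local HTTP API using only the standard library. It assumes Ollama is running on its default port (11434) and that the model has already been pulled. The model name `llama3` in the usage note is a placeholder, not a recommendation from these notes.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_request(model: str, prompt: str, num_ctx: int = 4096) -> urllib.request.Request:
    """Build a non-streaming generate request; num_ctx sets the context length."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,
        "options": {"num_ctx": num_ctx},
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, num_ctx: int = 4096) -> str:
    """Send the request to a running Ollama server and return the generated text."""
    with urllib.request.urlopen(build_request(model, prompt, num_ctx)) as resp:
        return json.loads(resp.read())["response"]
```

With a server running, `generate("llama3", "Explain the KV cache in one sentence.")` would return the model's reply as a string. A larger `num_ctx` allows more context but also grows the KV cache described earlier.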

A Quick Guide to GGUF Quantization Types (Common Ones)

When using Ollama or llama.cpp, you'll often see GGUF files with names indicating the quantization. Here's a rough quality/size hierarchy (from smallest/lowest quality to largest/highest quality):

- `Q2_K`: Extreme quantization (2-bit). Smallest size, highest potential quality loss. Use only if severely VRAM limited.

- `Q3_K_S`, `Q3_K_M`, `Q3_K_L`: Aggressive (3-bit). Better than Q2, but still significant potential quality loss. `_L` is best within 3-bit.

- `Q4_0`, `Q4_1`: Basic 4-bit. Decent size reduction. Newer `_K` methods are usually preferred.

- `Q4_K_S`, `Q4_K_M`: Popular Choice (4-bit). Excellent balance between size, VRAM usage, and quality. `_M` is slightly larger/better than `_S`. Great starting point.

- `Q5_0`, `Q5_1`: Basic 5-bit.

- `Q5_K_S`, `Q5_K_M`: Popular Choice (5-bit). Slightly better quality than Q4_K for slightly more VRAM/size. `_M` is preferred. Often worth it if `Q4_K_M` fits comfortably.

- `Q6_K`: High Quality (6-bit). Noticeably larger than Q5_K. Good option if you have plenty of VRAM and want quality closer to Q8.

- `Q8_0`: Near Lossless (8-bit). Very close to FP16 quality. Requires double the VRAM of Q4. Good for production quality if hardware allows.

- `FP16`: Half Precision (16-bit). No quantization loss (from original FP16 model). Baseline for quality comparison. Requires the most VRAM among common inference types.

- `FP32`: Full Precision (32-bit). Rarely used for inference due to huge size. Mostly relevant during training.

Recommendation: For a typical 7B or 13B model on consumer GPUs (like those with 16GB or 24GB VRAM), starting with Q4_K_M or Q5_K_M is usually the best bet.
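Since GGUF filenames usually carry the quantization tag, a short helper can pull it out and sort a list of downloads by the hierarchy above. This is a sketch under assumptions: the filenames are made up, the naming pattern is a convention rather than a guarantee, and the regular expression only covers the tags listed in this guide (plus the `F16`/`F32` spellings, which are treated as `FP16`/`FP32`).

```python
import re

# Order from the guide above: smallest / lowest quality first.
QUANT_ORDER = [
    "Q2_K", "Q3_K_S", "Q3_K_M", "Q3_K_L", "Q4_0", "Q4_1", "Q4_K_S", "Q4_K_M",
    "Q5_0", "Q5_1", "Q5_K_S", "Q5_K_M", "Q6_K", "Q8_0", "FP16", "FP32",
]

# Matches tags such as Q4_K_M, Q6_K, Q8_0, FP16, F16 when not glued to other letters/digits.
_QUANT_RE = re.compile(
    r"(?<![A-Za-z0-9])(Q\d_(?:K_[SML]|K|\d)|FP16|FP32|F16|F32)(?![A-Za-z0-9])",
    re.IGNORECASE,
)


def quant_from_filename(name: str):
    """Return the normalized quantization tag found in a filename, or None."""
    match = _QUANT_RE.search(name)
    if match is None:
        return None
    tag = match.group(1).upper()
    return {"F16": "FP16", "F32": "FP32"}.get(tag, tag)


def sort_smallest_first(filenames):
    """Sort filenames by the hierarchy above; unrecognized names go last."""
    def rank(name):
        tag = quant_from_filename(name)
        return QUANT_ORDER.index(tag) if tag in QUANT_ORDER else len(QUANT_ORDER)
    return sorted(filenames, key=rank)


if __name__ == "__main__":
    files = ["demo-7b.Q8_0.gguf", "demo-7b.Q2_K.gguf", "demo-7b.Q4_K_M.gguf"]
    for name in sort_smallest_first(files):
        print(quant_from_filename(name), name)
```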
