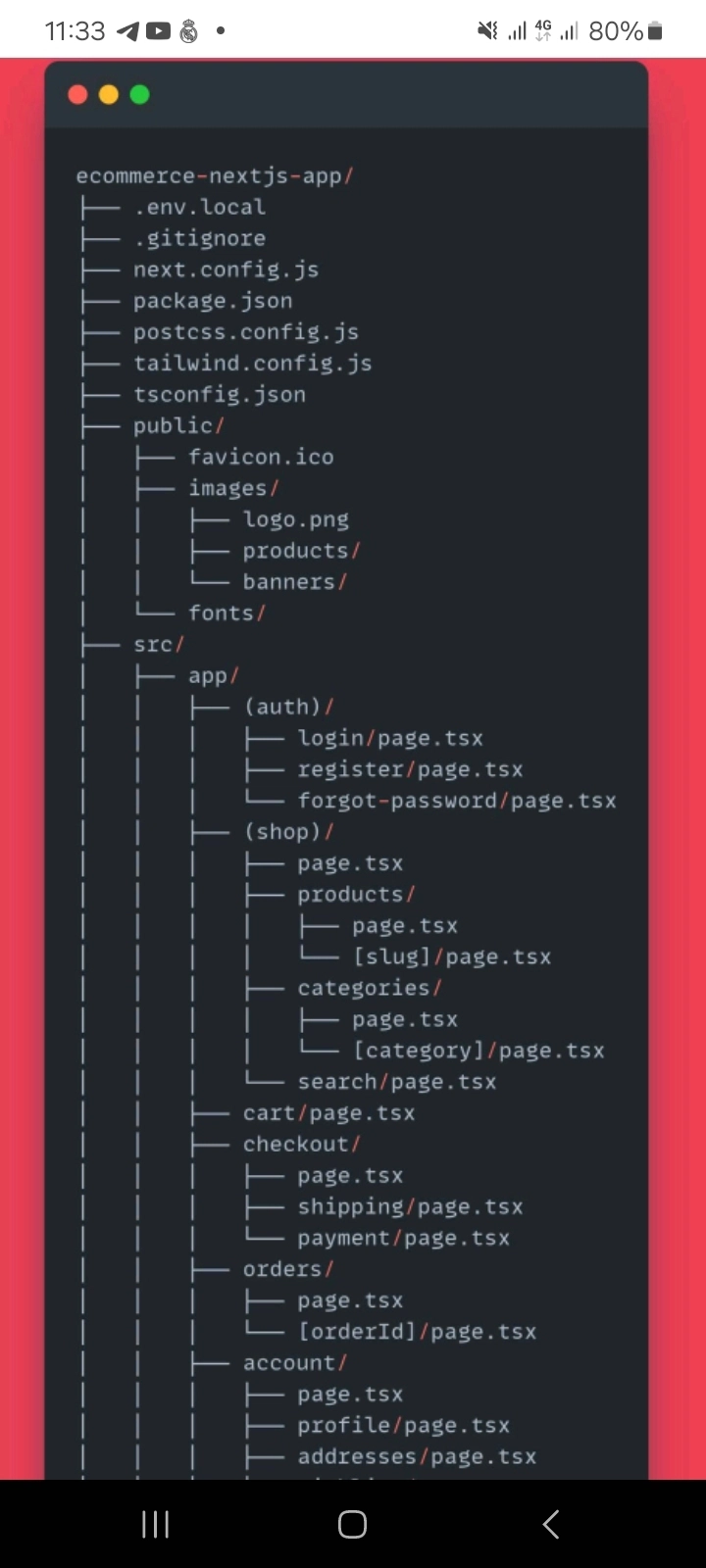

Bridging the ML Gap: How Java Powers Enterprise AI in Production

Machine learning breakthroughs are happening fast—but getting them into production is a whole different story.

While data scientists prototype cutting-edge models in Python or R, deploying those models in real-world enterprise systems is where things get messy. Enter: Java—a stable, scalable powerhouse that’s bridging the ML gap in production environments.

Let’s dive into how Java is helping enterprises turn AI experiments into production-ready solutions.

Why ML Projects Struggle to Reach Production

According to McKinsey, only 22% of companies have successfully integrated ML into their production systems.

So, what’s holding the rest back?

Dev vs. Prod Divide: Data scientists thrive in Python notebooks. But enterprise systems demand reliability, security, and compliance—areas where notebooks fall short.

Performance Bottlenecks: Models that shine in development often collapse under real-world traffic.

Integration Headaches: ML systems must plug into legacy infrastructure, databases, and real-time pipelines—often built on Java.

These gaps are where Java shines.

Java-Powered MLOps in Action

Java is proving its strength in end-to-end ML deployment. Companies like Netflix, LinkedIn, and Alibaba are already using Java-based MLOps setups to scale AI in production.

Key Patterns We're Seeing:

Model Serving with Deeplearning4j or H2O

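Java-native ML libraries run in the same JVM as the rest of the application, so inference is an in-process method call rather than a hop through a wrapper to a separate Python service.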

Spring Boot for Scalable Inference Services

Package models as microservices and deploy them in containers or serverless environments.

Real-Time Predictions with Kafka & Flink

Kafka and Flink both run on the JVM and ship Java APIs, so a model can be scored inside the stream-processing job itself for online inference.
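To make the patterns above a little more concrete, here is a minimal sketch of in-JVM scoring using only the standard library. It is not Deeplearning4j, H2O, Spring Boot, Kafka, or Flink code: `ChurnModel`, `LogisticChurnModel`, `InferenceDemo`, the feature names, and the coefficients are all hypothetical, chosen only to show how a model behind a small interface can be called the same way from a request handler or a stream consumer.

```java
import java.util.List;
import java.util.Map;

/** Hypothetical scoring contract; the name is illustrative, not from any library. */
interface ChurnModel {
    double score(Map<String, Double> features);
}

/** Logistic regression with fixed, made-up coefficients standing in for a trained model. */
final class LogisticChurnModel implements ChurnModel {
    private final Map<String, Double> weights;
    private final double bias;

    LogisticChurnModel(Map<String, Double> weights, double bias) {
        this.weights = Map.copyOf(weights);
        this.bias = bias;
    }

    @Override
    public double score(Map<String, Double> features) {
        double z = bias;
        for (Map.Entry<String, Double> w : weights.entrySet()) {
            // Missing features are treated as 0.0, an assumption made for this sketch.
            z += w.getValue() * features.getOrDefault(w.getKey(), 0.0);
        }
        return 1.0 / (1.0 + Math.exp(-z)); // sigmoid maps the linear score into (0, 1)
    }
}

final class InferenceDemo {
    /** Scores each event in order, as a stream consumer or request handler might. */
    static double[] scoreAll(ChurnModel model, List<Map<String, Double>> events) {
        return events.stream().mapToDouble(model::score).toArray();
    }

    public static void main(String[] args) {
        ChurnModel model = new LogisticChurnModel(
                Map.of("monthsInactive", 0.8, "supportTickets", 0.3), -2.0);
        double[] scores = scoreAll(model, List.of(
                Map.of("monthsInactive", 1.0, "supportTickets", 0.0),
                Map.of("monthsInactive", 4.0, "supportTickets", 2.0)));
        for (double s : scores) {
            System.out.printf("churn probability = %.3f%n", s);
        }
    }
}
```

Because callers only see the `ChurnModel` interface, the hand-written logistic model could in principle be swapped for one loaded by a real library without touching the serving or streaming code.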

Case Study: Retail Banking at Scale

A Fortune 100 bank built a customer churn prediction system that handles 25,000 TPS using this hybrid setup:

Python + scikit-learn for model development

PMML for model portability

Java + JPMML Evaluator for fast production inference

Spring Boot for integrating with legacy systems

Micrometer + Prometheus + Grafana for monitoring

Results:

42% reduction in false positives

Sub-10ms latency

99.99% uptime
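For a sense of what those numbers imply, here is a small back-of-the-envelope sketch. It assumes a 365-day year and treats 10 ms as the average latency, which is an upper bound given the "sub-10ms" figure; `SloMath` and its method names are made up for illustration. The second calculation uses Little's law (average requests in flight = arrival rate × average latency).

```java
final class SloMath {
    static final double MINUTES_PER_YEAR = 365 * 24 * 60; // 525,600, ignoring leap years

    /** Downtime budget in minutes per year for a given availability (e.g. 0.9999). */
    static double downtimeMinutesPerYear(double availability) {
        return (1.0 - availability) * MINUTES_PER_YEAR;
    }

    /** Little's law: average in-flight requests = arrival rate x average latency. */
    static double requestsInFlight(double requestsPerSecond, double latencySeconds) {
        return requestsPerSecond * latencySeconds;
    }

    public static void main(String[] args) {
        System.out.printf("Downtime budget: %.2f min/year%n", downtimeMinutesPerYear(0.9999));
        System.out.printf("In-flight requests: %.0f%n", requestsInFlight(25_000, 0.010));
    }
}
```

Under these assumptions, 99.99% uptime leaves roughly 52.6 minutes of downtime per year, and 25,000 TPS at 10 ms means at most about 250 requests in flight on average, a rough guide for sizing thread pools and connection limits.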

Java for Governance & Compliance

For industries like finance or healthcare, governance isn't optional—it's critical. Java brings:

Granular Audit Trails: The SLF4J logging facade, backed by an implementation such as Logback, lets every prediction step be logged in a consistent, traceable way.

RBAC: Plug directly into enterprise identity systems (LDAP, Keycloak, etc.).

CI/CD Pipelines: Leverage Maven/Gradle + Jenkins/GitHub Actions to validate, test, and promote models automatically.
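As a rough illustration of the first two points, the sketch below combines a role check with an audit entry for every scoring attempt. It is standard-library Java only: an in-memory list stands in for an SLF4J/Logback sink, a plain `Set<String>` of roles stands in for LDAP or Keycloak, and `AuditedScorer`, `AuditEntry`, and the `model-scorer` role are hypothetical names.

```java
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.DoubleUnaryOperator;

/** One audit line: who asked which model version for a score, and the outcome. */
final class AuditEntry {
    final Instant at;
    final String user;
    final String modelVersion;
    final String outcome; // "ALLOWED" or "DENIED"

    AuditEntry(Instant at, String user, String modelVersion, String outcome) {
        this.at = at;
        this.user = user;
        this.modelVersion = modelVersion;
        this.outcome = outcome;
    }

    @Override
    public String toString() {
        return at + " user=" + user + " model=" + modelVersion + " outcome=" + outcome;
    }
}

final class AuditedScorer {
    static final String REQUIRED_ROLE = "model-scorer"; // hypothetical role name

    private final String modelVersion;
    private final DoubleUnaryOperator model;
    // In-memory stand-in for a real log sink such as an SLF4J logger.
    private final List<AuditEntry> auditLog = new ArrayList<>();

    AuditedScorer(String modelVersion, DoubleUnaryOperator model) {
        this.modelVersion = modelVersion;
        this.model = model;
    }

    /** Scores the input only if the caller holds the required role; audits both outcomes. */
    double score(String user, Set<String> roles, double input) {
        boolean allowed = roles.contains(REQUIRED_ROLE);
        auditLog.add(new AuditEntry(Instant.now(), user, modelVersion,
                allowed ? "ALLOWED" : "DENIED"));
        if (!allowed) {
            throw new SecurityException(user + " lacks role " + REQUIRED_ROLE);
        }
        return model.applyAsDouble(input);
    }

    /** Returns an immutable snapshot of the audit trail. */
    List<AuditEntry> auditLog() {
        return List.copyOf(auditLog);
    }

    public static void main(String[] args) {
        AuditedScorer scorer = new AuditedScorer("churn-v1", x -> 1.0 / (1.0 + Math.exp(-x)));
        System.out.println(scorer.score("alice", Set.of(REQUIRED_ROLE), 0.4));
        try {
            scorer.score("bob", Set.of("viewer"), 0.4);
        } catch (SecurityException e) {
            System.out.println("denied: " + e.getMessage());
        }
        scorer.auditLog().forEach(System.out::println);
    }
}
```

Writing the audit entry before the permission check means denied attempts are recorded too. A production version would need a thread-safe, durable sink rather than an `ArrayList`.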

Wrapping Up

AI in production isn't just about brilliant models—it’s about robust systems.

By embracing Java’s stability and integration power, enterprises can close the ML gap and unlock real business value.

How is your team handling ML deployment?

Are you leveraging Java in your AI stack?

Still relying on Python wrappers in production?

Dealing with tricky integration points?

Let’s chat in the comments—I’d love to hear your experience!

Tags: #Java #MachineLearning #MLOps #AI #EnterpriseAI #SpringBoot #Kafka
