Background Process Communication in CLI Applications

Context Currently, I am working on implementing a custom CLI application in Go at work which is a first for me in this scope. One of the challenges which I want to blog about today is communication between the daemon and user-facing CLI part. For simple context - lets say that daemon is a process which transforms an object that user submits via interacting with a command and does something with it - writes some updates since it takes some time to actually finish it and we want to give some feedback to the user while it's doing the work. When I think about it, large amount of software which I usually use throughout the day requires users to create and edit configuration files to communicate with the software. Once you edit the configuration file, it is required to restart the service which parses the configuration file and validates it at the startup. Communication during the running process is usually not viable. These are services like Docker, Nginx, SQLC,... But some (actually quite a lot :P) software requires modification during the process. But how do we communicate between processes? One big question that I had during the development was how to implement communication between 2 different processes. After searching the web about how other people tackled this problem I found out several possibilities: file: write data to a file which multiple processes can access shared memory: one block of memory reserved by the processes used for exchanging information message queue: self evident - queue of messages And many more! Read a general overview on the wiki page for a generally okay general read. Embedded database This was my first thought due to reading about bbolt. I really wanted to give it a try and went on to prototype a quick working example. Everything went pretty butter smooth - I really liked that I could use the same codebase for interacting with myself. Then it hit me during the testing... bbolt actually creates a binary on the disk - how would 2 processes actually open the same file at the same time... I thought about creating 2 different files and one process would only open it for reading while the other would have write permissions... but I didnt like the idea anymore... It wasnt clean anymore... RPC Going back to things I know... I recently went over a bookbook where we replicated an orchestration software (think kubernetes) and I saw that for interacting with the software you actually implement a web server. But I implemented millions of web servers due to being a web developer... but I thought that the design would be ok. But I wanted to try out RPC and decided on that in the end. Basic REST api would work as well. Strategy First thing is that working on random environments we can't have a reserved port for the web server so we need to actually initiate the server with port 0 which signals OS to find any available port and bind it for us. We can detect which port OS binds for us via the interface. Now how do we communicate this random port to the other process? I decided to actually use the .pid file which would include both the process id and the allocated port : and when I wanted to communicate I would just first check if daemon is actually running and use the port. Conclusion I'm slightly disappointed that I didn't actually try and commit to something completely new due to having time constraints for the project... I ended up using the same solution I use for most daily work with server communications. Feels like I am using one tool for everything... unfortunately it actually felt good in the end after I implemented it so I am not really regretting anything. RPC feels okay as well.

Context

Currently, I am working on implementing a custom CLI application in Go at work which is a first for me in this scope.

One of the challenges which I want to blog about today is communication between the daemon and user-facing CLI part.

For simple context - lets say that daemon is a process which transforms an object that user submits via interacting with a command and does something with it - writes some updates since it takes some time to actually finish it and we want to give some feedback to the user while it's doing the work.

When I think about it, large amount of software which I usually use throughout the day requires users to create and edit configuration files to communicate with the software.

Once you edit the configuration file, it is required to restart the service which parses the configuration file and validates it at the startup. Communication during the running process is usually not viable. These are services like Docker, Nginx, SQLC,...

But some (actually quite a lot :P) software requires modification during the process.

But how do we communicate between processes?

One big question that I had during the development was how to implement communication between 2 different processes.

After searching the web about how other people tackled this problem I found out several possibilities:

- file: write data to a file which multiple processes can access

- shared memory: one block of memory reserved by the processes used for exchanging information

- message queue: self evident - queue of messages

And many more!

Read a general overview on the wiki page for a generally okay general read.

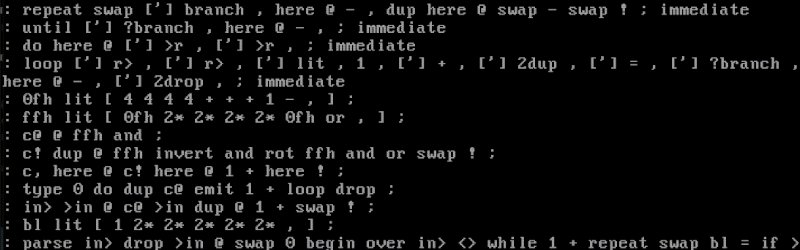

Embedded database

This was my first thought due to reading about bbolt. I really wanted to give it a try and went on to prototype a quick working example.

Everything went pretty butter smooth - I really liked that I could use the same codebase for interacting with myself. Then it hit me during the testing... bbolt actually creates a binary on the disk - how would 2 processes actually open the same file at the same time... I thought about creating 2 different files and one process would only open it for reading while the other would have write permissions... but I didnt like the idea anymore... It wasnt clean anymore...

RPC

Going back to things I know... I recently went over a bookbook where we replicated an orchestration software (think kubernetes) and I saw that for interacting with the software you actually implement a web server.

But I implemented millions of web servers due to being a web developer... but I thought that the design would be ok. But I wanted to try out RPC and decided on that in the end. Basic REST api would work as well.

Strategy

First thing is that working on random environments we can't have a reserved port for the web server so we need to actually initiate the server with port 0 which signals OS to find any available port and bind it for us.

We can detect which port OS binds for us via the interface.

Now how do we communicate this random port to the other process?

I decided to actually use the .pid file which would include both the process id and the allocated port : and when I wanted to communicate I would just first check if daemon is actually running and use the port.

Conclusion

I'm slightly disappointed that I didn't actually try and commit to something completely new due to having time constraints for the project... I ended up using the same solution I use for most daily work with server communications. Feels like I am using one tool for everything... unfortunately it actually felt good in the end after I implemented it so I am not really regretting anything. RPC feels okay as well.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)