AWS Redshift Cheat Sheet for AWS Certified Data Engineer - Associate (DEA-C01)

Core Concepts and Building Blocks

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud. It's designed for high performance analytics and business intelligence workloads.

Key Components:

-

Clusters: Collection of computing resources called nodes

-

Nodes: Individual compute units that process queries

-

Leader Node: Manages client connections and query planning

-

Compute Nodes: Execute queries and store data

-

Slices: Partitions of compute nodes where data is stored

-

Databases: Collections of tables

-

Workload Management (WLM): Controls query prioritization and resource allocation

-

Redshift Spectrum: Query data directly from S3 without loading

-

Concurrency Scaling: Automatically adds cluster capacity to handle increased demand

Redshift Architecture Mind Map

Amazon Redshift

├── Cluster Architecture

│ ├── Leader Node

│ │ ├── Query Planning

│ │ ├── Result Aggregation

│ │ └── Client Connection Management

│ └── Compute Nodes

│ ├── Data Storage

│ ├── Query Execution

│ └── Slices (Data Partitions)

├── Data Storage

│ ├── Columnar Storage

│ ├── Zone Maps

│ ├── Data Compression

│ └── Data Distribution

│ ├── Even Distribution

│ ├── Key Distribution

│ └── All Distribution

├── Query Processing

│ ├── MPP Architecture

│ ├── Query Optimization

│ └── Result Caching

└── Management Features

├── Workload Management (WLM)

├── Concurrency Scaling

├── AQUA (Advanced Query Accelerator)

├── Redshift Spectrum

└── Automatic Table Optimization

Node Types and Specifications

| Node Type |

vCPU |

Memory |

Storage |

I/O |

Use Case |

| RA3 Nodes |

|

|

|

|

|

| ra3.16xlarge |

48 |

384 GB |

Managed |

4x |

Large data warehouses |

| ra3.4xlarge |

12 |

96 GB |

Managed |

2x |

Medium data warehouses |

| ra3.xlplus |

4 |

32 GB |

Managed |

1.5x |

Small data warehouses |

| DC2 Nodes |

|

|

|

|

|

| dc2.8xlarge |

32 |

244 GB |

2.56 TB SSD |

High |

Compute-intensive workloads |

| dc2.large |

2 |

15 GB |

160 GB SSD |

Moderate |

Small data warehouses |

| Serverless |

|

|

|

|

|

| Serverless |

Auto-scaling |

Auto-scaling |

Managed |

Varies |

Unpredictable workloads |

Redshift Features and Details

| Feature |

Description |

Limits/Notes |

| Maximum Cluster Size |

Up to 128 nodes (RA3) |

Single-node clusters available for dev/test |

| Maximum Storage |

Up to 8 PB with RA3 nodes using Redshift Managed Storage |

Scales automatically |

| Maximum Table Size |

Unlimited with Spectrum |

Limited by cluster storage for local tables |

| Maximum Row Size |

4 MB |

- |

| Maximum Columns per Table |

1,600 |

- |

| Maximum Tables per Cluster |

Thousands |

Depends on complexity |

| Maximum Concurrent Queries |

50 by default |

Can be increased with concurrency scaling |

| Maximum Concurrent Connections |

500 |

- |

| Maximum Database Size |

Unlimited with Spectrum |

Limited by cluster storage for local data |

| Backup Retention |

1-35 days for automated snapshots |

Manual snapshots don't expire |

| Query Timeout |

Default 1 hour |

Configurable |

| Maintenance Window |

30-minute window once per week |

Configurable |

| Encryption |

At-rest and in-transit |

KMS or HSM |

| VPC Support |

Yes |

Enhanced VPC routing available |

| Cross-Region Snapshots |

Yes |

For disaster recovery |

| Elastic Resize |

Add/remove nodes in minutes |

Limited to same node type |

| Classic Resize |

Change node types |

Takes longer than elastic resize |

| Concurrency Scaling |

Auto-scales read capacity |

Charged by usage (1 free hour per day) |

| AQUA |

Hardware-accelerated cache |

Available for RA3 nodes |

| Federated Query |

Query across databases |

PostgreSQL and Aurora PostgreSQL |

Data Distribution Styles

| Distribution Style |

Description |

Best For |

Performance Impact |

| AUTO |

Redshift assigns optimal distribution |

General use |

Good for most cases |

| EVEN |

Rows distributed evenly across slices |

Tables without clear join key |

Balanced storage, potential data movement during joins |

| KEY |

Rows with same values in distribution column on same slice |

Join tables on distribution key |

Minimizes data movement during joins |

| ALL |

Full copy of table on every node |

Small dimension tables |

Fast joins but storage overhead |

Sort Keys

| Sort Key Type |

Description |

Best For |

Performance Impact |

| Compound |

Sort by column order (like a phone book) |

Range-restricted scans on sort columns |

Excellent for queries filtering on prefix of sort key |

| Interleaved |

Equal weight to each sort column |

Queries with predicates on different columns |

Better for varied query patterns |

| Automatic |

Redshift chooses optimal sort key |

General use |

Good for most cases |

Compression Encodings

| Encoding |

Best For |

Compression Ratio |

Performance Impact |

| RAW |

Binary data, already compressed |

None |

Baseline |

| AZ64 |

Numeric data |

Good |

Fast computation |

| BYTEDICT |

Limited distinct values |

Very high |

Fast for small domains |

| DELTA |

Incremental numeric data |

High |

Good for dates, timestamps |

| LZO |

Very large text columns |

Moderate |

Good general purpose |

| ZSTD |

Varied data types |

High |

Good general purpose, better than LZO |

| RUNLENGTH |

Repeated values |

Very high |

Excellent for low-cardinality columns |

| TEXT255/TEXT32K |

Variable-length strings |

High |

Good for text |

Important Redshift Limits and Performance Factors

- Maximum of 500 concurrent connections per cluster

- Default query timeout is 1 hour (configurable)

- Maximum of 50 concurrent queries by default

- Maximum of 100 databases per cluster

- Maximum of 9,900 schemas per database

- Maximum of 200,000 tables per cluster (including temporary tables)

- Maximum row size is 4 MB

- Maximum column name length is 127 bytes

- Maximum 1,600 columns per table

- Maximum identifier length is 127 bytes

- Maximum SQL statement size is 16 MB

Query Performance Optimization

- Use COPY command for bulk data loading (8-10x faster than INSERT)

- Choose appropriate distribution keys to minimize data movement

- Use sort keys for columns frequently used in WHERE clauses

- Vacuum regularly to reclaim space and resort data

- Analyze tables to update statistics for the query planner

- Use appropriate compression encodings for columns

- Avoid SELECT * and retrieve only needed columns

- Use UNLOAD to export large result sets to S3

- Implement proper partitioning when using Redshift Spectrum

- Use materialized views for common, complex queries

Data Loading Best Practices

- Use COPY command from S3, not INSERT statements

- Split large files into multiple files (1-128 MB each)

- Use gzip compression for load files

- Load data in parallel using multiple files

- Use a manifest file to ensure all files are loaded

- Use STATUPDATE ON to update statistics after loading

- Use COMPUPDATE ON for automatic compression analysis

- Temporarily disable automatic compression for very large loads

- Use a single COPY transaction for related tables

- Example COPY command:

COPY customer

FROM 's3://mybucket/customer/data/'

IAM_ROLE 'arn:aws:iam::0123456789012:role/MyRedshiftRole'

DELIMITER '|' REGION 'us-west-2'

GZIP COMPUPDATE ON;

Workload Management (WLM)

- Automatic WLM: Redshift manages query queues and memory allocation

- Manual WLM: Define up to 8 queues with custom settings

- Short Query Acceleration (SQA): Prioritizes short-running queries

- Concurrency scaling: Automatically adds transient clusters for read queries

- Query monitoring rules: Define metrics-based actions for long-running queries

- Query priority: Assign importance levels to different workloads

- User groups: Assign users to specific WLM queues

- Memory allocation: Control percentage of memory allocated to each queue

- Concurrency level: Set maximum concurrent queries per queue

- Timeout: Set maximum execution time per queue

Redshift Spectrum

- Query data directly in S3 without loading into Redshift

- Supports various file formats: Parquet, ORC, JSON, CSV, Avro

- Uses external tables defined in AWS Glue Data Catalog

- Scales automatically to thousands of instances

- Supports complex data types and nested data

- Partition pruning improves performance dramatically

- Charged separately from Redshift cluster usage

- Example external table creation:

CREATE EXTERNAL TABLE spectrum.sales(

salesid INTEGER,

listid INTEGER,

sellerid INTEGER,

buyerid INTEGER,

eventid INTEGER,

dateid INTEGER,

qtysold INTEGER,

pricepaid DECIMAL(8,2),

commission DECIMAL(8,2)

)

PARTITIONED BY (saledate DATE)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE

LOCATION 's3://mybucket/spectrum/sales/';

Security Features

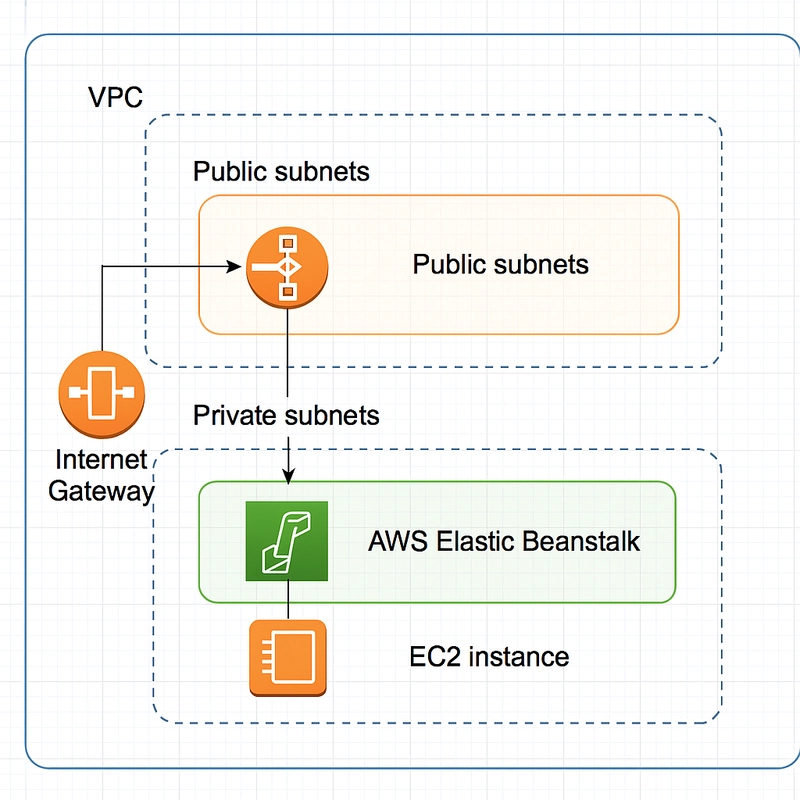

- VPC networking with security groups

- IAM integration for authentication and authorization

- Column-level access control

- Row-level security policies

- Dynamic data masking

- AWS KMS integration for encryption at rest

- SSL for encryption in transit

- CloudTrail integration for audit logging

- Multi-factor authentication support

- Integration with AWS Lake Formation for fine-grained access control

Backup and Disaster Recovery

- Automated snapshots (1-35 day retention)

- Manual snapshots (retained until deleted)

- Cross-region snapshot copy for disaster recovery

- Point-in-time recovery (up to 5-minute increments)

- Snapshot sharing across AWS accounts

- Automated snapshot schedule (every 8 hours by default)

- Snapshot restore to new cluster

- Incremental snapshots to minimize storage costs

- Snapshot storage in S3 (separate from cluster storage)

- Continuous backup to RA3 clusters

Redshift Serverless

- No cluster management required

- Automatic scaling of compute resources

- Pay only for what you use (RPU-seconds)

- Automatic pause and resume

- Seamless transition from provisioned clusters

- Same SQL interface as provisioned Redshift

- Integrated with Redshift Spectrum

- Base capacity specified in Redshift Processing Units (RPUs)

- Maximum capacity limits to control costs

- Ideal for unpredictable or intermittent workloads

Redshift Integration with AWS Services

- S3: Data loading, unloading, and Spectrum

- AWS Glue: Data catalog for Spectrum

- AWS DMS: Data migration to Redshift

- Kinesis Data Firehose: Streaming data ingestion

- AWS Lambda: Automated ETL and maintenance

- QuickSight: Business intelligence and visualization

- Lake Formation: Fine-grained access control

- CloudWatch: Monitoring and alerting

- CloudTrail: Audit logging

- AWS Secrets Manager: Credential management

Redshift vs. Open Source Alternatives

| Feature |

Redshift |

PostgreSQL |

Apache Hive |

Presto |

| Architecture |

MPP, columnar |

SMP, row-based |

MPP on Hadoop |

MPP query engine |

| Scale |

Petabytes |

Terabytes |

Petabytes |

Petabytes |

| Performance |

Very high |

Moderate |

Low to moderate |

High for queries |

| Management |

Fully managed |

Self-managed |

Self-managed |

Self-managed |

| Cost model |

Pay for capacity |

Infrastructure cost |

Infrastructure cost |

Infrastructure cost |

| SQL compliance |

PostgreSQL 8.0 compatible |

Full PostgreSQL |

HiveQL (limited) |

ANSI SQL |

| Concurrency |

Limited (50+) |

High |

Limited |

Moderate |

| Use case |

Data warehousing |

OLTP, small OLAP |

Batch analytics |

Interactive queries |

Important CloudWatch Metrics for Monitoring

| Metric |

Description |

Threshold |

Action |

| CPUUtilization |

Percentage of CPU used |

>80% sustained |

Consider scaling or query optimization |

| PercentageDiskSpaceUsed |

Storage utilization |

>80% |

Resize cluster or clean up data |

| DatabaseConnections |

Active connections |

>80% of max |

Increase connection limit or optimize connection pooling |

| QueriesCompletedPerSecond |

Query throughput |

Baseline dependent |

Monitor for unexpected changes |

| QueryDuration |

Time to execute queries |

Baseline dependent |

Optimize slow queries |

| WLMQueueLength |

Queries waiting in queue |

>5 consistently |

Adjust WLM or scale cluster |

| WLMQueueWaitTime |

Time queries wait in queue |

>5 seconds |

Adjust WLM or scale cluster |

| ReadIOPS |

Read operations per second |

Baseline dependent |

Monitor for spikes or drops |

| WriteIOPS |

Write operations per second |

Baseline dependent |

Monitor for spikes or drops |

| ReadLatency |

Time for disk read operations |

>20ms |

Investigate storage issues |

| WriteLatency |

Time for disk write operations |

>20ms |

Investigate storage issues |

| ConcurrencyScalingActiveClusters |

Number of scaling clusters |

Cost dependent |

Monitor for unexpected scaling |

Data Ingestion and Pipeline Replayability

- Use COPY command with manifest files to track loaded files

- Implement idempotent data loading with IDENTITY columns or natural keys

- Use staging tables and transactions for atomic loads

- Implement error handling with MAXERROR parameter in COPY

- Store raw data in S3 for reprocessing if needed

- Use Kinesis Data Firehose for streaming data ingestion

- Implement data validation before and after loading

- Use AWS Glue for ETL job orchestration

- Implement checkpointing in data pipelines for resumability

- Use AWS Step Functions for complex pipeline orchestration

Throughput and Latency Characteristics

- COPY command throughput: Up to several GB/s depending on cluster size

- Bulk loading is significantly faster than row-by-row inserts

- Query latency varies from milliseconds to hours depending on complexity

- Concurrency scaling adds read capacity within seconds

- Elastic resize completes within minutes

- Classic resize can take hours depending on data volume

- Vacuum operation speed depends on unsorted data percentage

- Redshift Spectrum queries have higher latency than local queries

- WLM queue wait time impacts overall query latency

- Result caching provides sub-second response for repeated queries

Implementing Throttling and Overcoming Rate Limits

- Use connection pooling to manage database connections

- Implement exponential backoff for API calls

- Use WLM to prioritize critical queries

- Implement client-side query queuing for high-concurrency applications

- Use short query acceleration for time-sensitive small queries

- Batch small inserts into larger COPY operations

- Use concurrency scaling for read-heavy workloads

- Implement retry logic for throttled operations

- Monitor and alert on queue wait times

- Use reserved capacity for predictable workloads

Advanced Features and Optimizations

- Materialized views for precomputed query results

- Automatic table optimization for sort and distribution keys

- Automatic vacuum delete for maintaining performance

- Automatic analyze for statistics maintenance

- Query monitoring rules for workload management

- Federated queries to access data in other databases

- Data sharing across Redshift clusters

- Machine learning integration with Amazon SageMaker

- Spatial data support for geospatial analytics

- HyperLogLog functions for cardinality estimation

- Time series functions for time-based analysis

- Window functions for advanced analytics

- AQUA (Advanced Query Accelerator) for RA3 nodes

- Cross-database queries within a cluster

- Semi-structured data support (SUPER data type)

- JSON and PartiQL support for flexible data models

- Stored procedures for complex logic

- User-defined functions (UDFs) for custom operations

Example Calculation: Cluster Sizing

-

Storage Calculation:

- Raw data size: 1 TB

- Compression ratio: 3:1 (typical for Redshift)

- Required storage: 1 TB ÷ 3 = 333 GB

- Add 20% for sorting and intermediate results: 333 GB × 1.2 = 400 GB

- Recommended cluster: ra3.4xlarge (managed storage)

-

Performance Calculation:

- Peak concurrent queries: 20

- Target query execution time: 10 seconds

- Required slices: 20 queries × 2 slices per query = 40 slices

- Each ra3.4xlarge has 12 slices (3 slices per vCPU, 4 vCPUs)

- Required nodes: 40 slices ÷ 12 slices per node = 3.33 nodes

- Recommended cluster: 4 × ra3.4xlarge nodes

-

Cost Calculation (example):

- 4 × ra3.4xlarge at $3.26/hour = $13.04/hour

- Monthly cost: $13.04 × 24 × 30 = $9,388.80

- Managed storage: 400 GB at $0.024/GB/month = $9.60/month

- Total monthly cost: $9,398.40

-

Concurrency Scaling Cost:

- 1 free concurrency scaling hour per day = 30 hours/month

- Additional usage: 2 hours/day × 30 days = 60 hours/month

- Billable hours: 60 - 30 = 30 hours/month

- Cost: 30 hours × $13.04/hour = $391.20/month

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[DEALS] The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

_Christophe_Coat_Alamy.jpg?#)

(1).webp?#)

![Apple Considers Delaying Smart Home Hub Until 2026 [Gurman]](https://www.iclarified.com/images/news/96946/96946/96946-640.jpg)

![iPhone 17 Pro Won't Feature Two-Toned Back [Gurman]](https://www.iclarified.com/images/news/96944/96944/96944-640.jpg)

![Tariffs Threaten Apple's $999 iPhone Price Point in the U.S. [Gurman]](https://www.iclarified.com/images/news/96943/96943/96943-640.jpg)