Are we training AI, or is AI now training us?

The Rise of Artificial Intelligence — And the Decline of Human Nature?

We were told that artificial intelligence would learn from humans — that it would replicate our ability to think, feel, and solve problems. But something unexpected is happening.

The more intelligent our machines become, the more we are being asked to act like them.

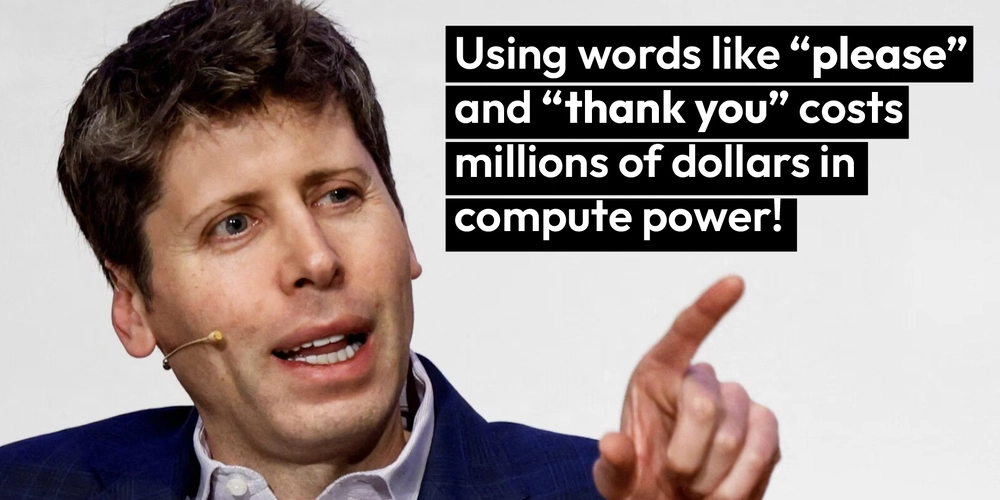

Recently, OpenAI CEO Sam Altman remarked that polite expressions like “please” and “thank you” may be costing the company millions of dollars in computational resources. At first glance, it seems like a technical detail. But beneath it lies a deeper philosophical shift: a subtle message that what makes us human may now be seen as inefficient, costly, or even obsolete.

We built AI to serve us. But it’s starting to feel like we’re the ones adapting to serve AI.

From Masters to Servants: A Cultural Reversal

Artificial Intelligence was designed to learn from human behavior — our logic, our creativity, our flaws. But as AI systems evolve, humans are being asked to change the way they speak, think, and behave to suit machine preferences.

We simplify our questions so the machine can “understand” us.

We limit emotional tone so algorithms don’t misinterpret us.

We abandon politeness to save processing power.

This isn’t just an efficiency measure — it’s a reprogramming of human communication.

It’s not AI becoming more human; it’s humanity becoming more machine-like.

Is Human Emotion a Bug or a Feature?

For centuries, we celebrated what makes us human: empathy, ambiguity, compassion, humor, contradiction, patience. These qualities were the foundation of literature, philosophy, art, and science.

But in the age of algorithms and efficiency, these same qualities are being labeled as noise.

- Empathy? Too emotional.

- Ambiguity? Too complex.

- Nuance? Too expensive.

- Politeness? Too wasteful.

In the language of AI, every unnecessary word is a drain on compute power. Every moment of hesitation is a processing delay. Every act of kindness is an inefficiency.

If we’re not careful, we risk reducing ourselves to mere data nodes — optimized, predictable, and compliant.

The Danger of Reversed Design

Technology is supposed to adapt to human needs. That’s what made tools powerful throughout history — from fire to the internet. But now, AI is subtly reversing that logic. Instead of humanizing machines, we’re mechanizing humans.

This is not a technological issue — it’s a cultural one.

It’s not about machines replacing jobs. It’s about machines reshaping our identities.

When we adapt our language, emotions, and behavior to suit AI systems, we are not just being efficient — we are surrendering what makes us irreplaceable.

What Should We Do?

We must remind ourselves that we are not the code — we are the creators.

Technology should speak our language, not the other way around.

- Build AI that respects human complexity.

- Design systems that value empathy and nuance, not just speed.

- Encourage communication that embraces kindness, not just conciseness.

- Educate society that the goal is not to sound like a machine in order to be understood, but to preserve our human voice in every interaction.

AI should not become the standard for human behavior. If it does, we will have failed not because AI became too smart, but because we chose to become less human.

Perhaps the greatest threat of AI is not that it will outthink us… but that it will teach us to stop thinking like ourselves.
