A Beginner's Journey: Deploying Applications on Amazon EKS

In this blog post, I want to walk you through the step-by-step process of deploying a straightforward web application utilising Amazon EKS (Elastic Kubernetes Service).

Kubernetes has emerged as one of the most widely adopted tools in the DevOps industry. However, many individuals find themselves wrestling with the complexities of EKS and its integration with AWS. My objective is to demonstrate these concepts, making them more accessible and practical for implementation.

What Is Kubernetes?

Kubernetes is an innovative open-source platform designed for container orchestration. It automates critical processes such as deploying, scaling, and managing containerised applications.

Originally developed by Google, Kubernetes is now maintained under the Cloud Native Computing Foundation (CNCF). In essence, Kubernetes serves as a powerful framework for managing your applications, enabling seamless deployment and scalability tailored to your needs.

Why EKS?

When it comes to deploying and managing containerised applications, Elastic Kubernetes Service stands out. Here's why EKS can transform your deployment strategy.

- Fully Managed: EKS runs and maintains the Kubernetes control plane for you, so your team can focus on building applications instead of operating the cluster itself.

- Seamless AWS Integration: EKS works effortlessly with AWS services, improving your application's functionality while maintaining strong security through IAM and other tools.

- Dynamic Scalability: With autoscaling configured, the number of nodes and pods can follow traffic demands, which helps with efficient resource utilisation and potential cost savings.

- High Availability: EKS runs the control plane across multiple Availability Zones (AZs), and you can spread worker nodes across AZs as well, so your applications can stay available even if one zone has problems.

- Enhanced Security: With built-in security features like IAM integration and support for encrypting Kubernetes secrets, EKS helps safeguard your sensitive information.

- Strong Community Support: EKS has a strong community behind it, which gives you access to best practices and assistance whenever needed.

How Do We Deploy Cloud-Native Apps on EKS?

This tutorial's primary goal is to demonstrate how to deploy a containerised application onto an EKS cluster, leveraging Helm for efficient package management.

Prerequisites

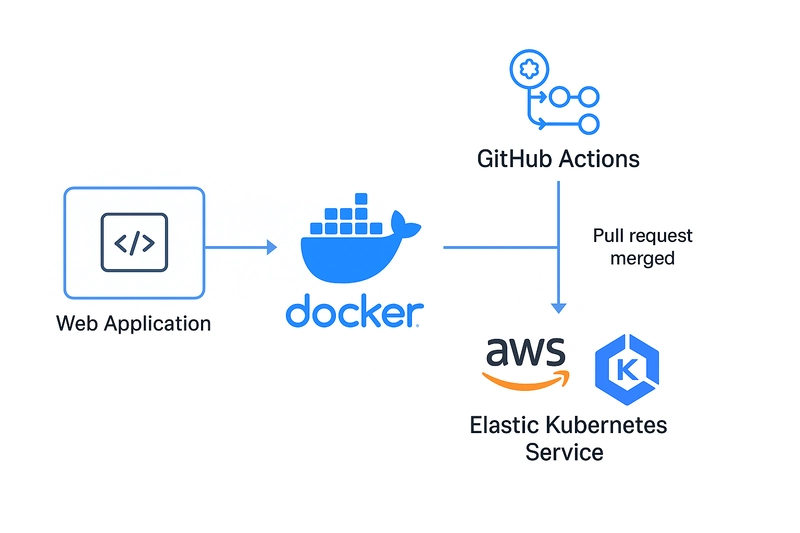

The diagram above shows the deployment process we are going to use. Before diving in, ensure you have the following tools installed and configured on your system:

- Python: A powerful programming language necessary for application development.

- Docker: A platform for developing, shipping, and running applications in containers.

- Kubernetes (kubectl): The command-line client for the container orchestration system we'll be working with.

- Helm: The package manager for Kubernetes that simplifies deployment.

- AWS CLI: Command Line Interface for interacting with AWS services.

- Amazon EKS: AWS's managed Kubernetes service, along with eksctl, the CLI used later to create the cluster.

To access the source code and additional resources, visit the GitHub repository: https://github.com/pratik-mahalle/Zero-to-Production

Step 1: Dockerizing the Application

Let's say you have a simple application written in Python. The first crucial step is creating a Docker image of this application. Below is the Dockerfile that I used:

    FROM python:3.9-slim
    WORKDIR /app
    COPY . .
    RUN pip install -r requirements.txt
    EXPOSE 5000
    CMD ["python", "app.py"]

In this Dockerfile:

- We start from the official Python 3.9 slim image to keep our container lightweight.

- The WORKDIR instruction sets the working directory in the container to /app.

- All application files are copied into this directory.

- The command pip install -r requirements.txt installs all necessary dependencies.

- The EXPOSE instruction documents that the container listens on port 5000. It does not publish the port by itself; the Kubernetes Service created later takes care of routing traffic to it.

- Finally, the CMD instruction specifies how to run the application.
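The Dockerfile above assumes an app.py that listens on port 5000. The original application is not reproduced in this post, so the following is only a stand-in sketch built on Python's standard library; the handler name and response text are hypothetical. The detail that matters inside a container is binding to 0.0.0.0 rather than 127.0.0.1, so the port is reachable from outside the container.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: answers every GET with a short plain-text body."""

    def do_GET(self):
        body = b"Hello from EKS!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        # Keep the sketch quiet; a real app would log requests.
        pass


def make_server(host="0.0.0.0", port=5000):
    # 0.0.0.0 listens on all interfaces, which is what a container needs;
    # 5000 matches the EXPOSE line in the Dockerfile.
    return ThreadingHTTPServer((host, port), HelloHandler)
```

To use it as app.py, call make_server().serve_forever() at the bottom of the file.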

Next, we will upload this Docker image to Amazon ECR (Elastic Container Registry). Make sure you have created an ECR repository through the AWS Console and authenticated your Docker client against it.

To obtain an authentication token and log in to your Docker registry, run the following command in your terminal:

    aws ecr get-login-password --region ap-south-1 | docker login --username AWS --password-stdin [AWS_ACCOUNT_ID].dkr.ecr.ap-south-1.amazonaws.com

Important Note: If you encounter any issues when using the AWS CLI, check that you have the latest versions of both the AWS CLI and Docker installed. Make sure to replace [AWS_ACCOUNT_ID] with your actual AWS account ID.

Now, to build your Docker image, use the following command. If further information on building a Docker image from scratch is needed, please refer to this resource. You can skip this if your image is already built:

    docker build -t demo .

Once the build is complete, it's crucial to tag the image to prepare it for pushing to your ECR repository:

    docker tag demo:latest [AWS_ACCOUNT_ID].dkr.ecr.ap-south-1.amazonaws.com/demo:latest

Finally, push your Docker image to the AWS repository with this command:

    docker push [AWS_ACCOUNT_ID].dkr.ecr.ap-south-1.amazonaws.com/demo:latest
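The tag and push commands both rely on the same naming pattern for an ECR image: account ID, region, repository, and tag. As a small illustration of that pattern (the function name is hypothetical and 123456789012 is a placeholder account ID), it could be expressed like this:

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Build the fully qualified image name that docker tag/push expect for ECR."""
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError("AWS account IDs are 12-digit numbers")
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    return f"{registry}/{repository}:{tag}"


# 123456789012 is a placeholder account ID, not a real one.
print(ecr_image_uri("123456789012", "ap-south-1", "demo"))
# 123456789012.dkr.ecr.ap-south-1.amazonaws.com/demo:latest
```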

Congratulations! Your application is now successfully dockerized and ready for deployment.

Step 2: Create an EKS Cluster

With your application dockerized, the next step is to create an EKS cluster. First, verify that you have the AWS CLI, kubectl, and eksctl installed and properly configured on your terminal.

To create an EKS cluster, execute the following command in the terminal:

    eksctl create cluster --name demo-cluster --region ap-south-1 --nodes 1 --spot

(Note: I opted for a Spot instance since I plan to keep this cluster running for an extended period and Spot pricing is more cost-effective. Keep in mind that AWS can reclaim Spot instances at short notice, so they suit demos and fault-tolerant workloads best.)

After the cluster is created, update your kubeconfig file so that kubectl points at the new cluster:

    aws eks update-kubeconfig --name demo-cluster --region ap-south-1

To enable your pods to securely access AWS services without the need to store long-term credentials, we will configure a service account with appropriate IAM roles.

1. Create an OIDC Provider for Your EKS Cluster (required for IAM Roles for Service Accounts)

Run the following command to set up an OpenID Connect (OIDC) provider:

    eksctl utils associate-iam-oidc-provider --region ap-south-1 --cluster demo-cluster --approve

2. Create an IAM Policy for ECR Access

Creating an IAM policy can be done via the AWS Console. Below is an example policy you can use, which grants the necessary permissions for ECR. Save it under the name ECRReadAccess, since the command in step 3 refers to that name:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "ecr:DescribeImageScanFindings",
            "ecr:StartImageScan",
            "ecr:GetLifecyclePolicyPreview",
            "ecr:CreateRepository",
            "ecr:PutImageScanningConfiguration",
            "ecr:GetAuthorizationToken",
            "ecr:UpdateRepositoryCreationTemplate",
            "ecr:PutRegistryScanningConfiguration",
            "ecr:ListImages",
            "ecr:GetRegistryScanningConfiguration",
            "ecr:BatchGetImage",
            "ecr:ReplicateImage",
            "ecr:GetLifecyclePolicy"
          ],
          "Resource": "*"
        }
      ]
    }

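As a best practice, grant only the permissions the workload actually needs (least privilege). The example above also includes write and scan actions such as ecr:CreateRepository, which a workload that only reads images does not need.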
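To make the least-privilege idea concrete, here is a sketch of a narrower, pull-only policy generated in Python. It is an assumption about what a read-only workload needs, not the policy used in this walkthrough: the Sid values and the function name are hypothetical, and the account ID is a placeholder. The pull actions are scoped to a single repository ARN, while ecr:GetAuthorizationToken has to stay on "*" because it is not a repository-level action.

```python
import json


def ecr_pull_policy(account_id: str, region: str, repository: str) -> dict:
    """Return an IAM policy document that only allows pulling from one repository."""
    repo_arn = f"arn:aws:ecr:{region}:{account_id}:repository/{repository}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # GetAuthorizationToken cannot be scoped to a single repository.
                "Sid": "AllowEcrLogin",
                "Effect": "Allow",
                "Action": "ecr:GetAuthorizationToken",
                "Resource": "*",
            },
            {
                # The three actions involved in pulling image layers and manifests.
                "Sid": "AllowPullFromOneRepo",
                "Effect": "Allow",
                "Action": [
                    "ecr:BatchCheckLayerAvailability",
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                ],
                "Resource": repo_arn,
            },
        ],
    }


# 123456789012 is a placeholder account ID.
print(json.dumps(ecr_pull_policy("123456789012", "ap-south-1", "demo"), indent=2))
```

The printed JSON can be pasted into the policy editor in the AWS Console in place of the broader example.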

3. Create the IAM Role with IRSA Binding

To create the IAM service account, execute the following command:

    eksctl create iamserviceaccount \
      --name ecr-access-sa \
      --namespace default \
      --cluster demo-cluster \
      --attach-policy-arn arn:aws:iam::[AWS_ACCOUNT_ID]:policy/ECRReadAccess \
      --approve \
      --override-existing-serviceaccounts
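Behind the scenes, this binding works through a trust policy on the IAM role: the role may only be assumed via the cluster's OIDC provider, and only by the named service account. eksctl generates that policy for you, so the sketch below merely illustrates its general shape. The function name is hypothetical, and the account ID and OIDC issuer ID are placeholders.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer_url: str,
                      namespace: str, service_account: str) -> dict:
    """Build a trust policy that lets one Kubernetes service account assume a role."""
    issuer = oidc_issuer_url
    if issuer.startswith("https://"):
        issuer = issuer[len("https://"):]  # IAM refers to the issuer without the scheme
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Restrict the role to exactly one service account.
                        f"{issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{issuer}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


# Placeholder account ID and issuer ID, for illustration only.
policy = irsa_trust_policy(
    "123456789012",
    "https://oidc.eks.ap-south-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",
    "default",
    "ecr-access-sa",
)
print(json.dumps(policy, indent=2))
```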

Your EKS cluster is now adequately configured and ready for application deployment.

Step 3: Deploy Your Application

In this step, we register our application with the EKS cluster and use Kubernetes resources to manage and expose it.

Now, you're ready to deploy your app!

Here is the basic Helm structure of the app:

    flask-app/
    ├── Chart.yaml
    ├── templates/
    │   ├── deployment.yaml
    │   └── service.yaml
    └── values.yaml

Let's write the values.yaml file. This file will store all the configuration values.

    replicaCount: 2

    image:
      repository: <AWS_ACCOUNT_ID>.dkr.ecr.ap-south-1.amazonaws.com/demo
      tag: latest
      pullPolicy: IfNotPresent

    service:
      type: LoadBalancer
      port: 80
      targetPort: 5000

We are mapping the external port 80 to the app's internal port 5000 and exposing it through a LoadBalancer. Make sure to replace the repository value with your own ECR repository URI.

Chart.yaml

    apiVersion: v2
    name: flask-app
    version: 0.1.0

deployment.yaml

Here's the main deployment file. It manages the ReplicaSet and keeps the desired number of pods running:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: {{ .Chart.Name }}
    spec:
      replicas: {{ .Values.replicaCount }}
      selector:
        matchLabels:
          app: {{ .Chart.Name }}
      template:
        metadata:
          labels:
            app: {{ .Chart.Name }}
        spec:
          # serviceAccountName belongs to the pod spec, not the Deployment spec
          serviceAccountName: ecr-access-sa
          containers:
            - name: {{ .Chart.Name }}
              image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
              ports:
                - containerPort: {{ .Values.service.targetPort }}

It uses the values from the values.yaml.
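For readers new to Helm, the idea behind placeholders such as {{ .Values.image.tag }} is a lookup into the nested structure of values.yaml. The toy Python sketch below mimics only that lookup. It is not how Helm is implemented (Helm uses Go templates with many more features), and the function name and sample repository are hypothetical.

```python
import re

# Matches placeholders of the form {{ .Values.some.nested.key }}
VALUE_REF = re.compile(r"\{\{\s*\.Values\.([A-Za-z0-9_.]+)\s*\}\}")


def render_values(template: str, values: dict) -> str:
    """Replace {{ .Values.a.b }} placeholders with entries from a nested dict."""

    def lookup(match):
        node = values
        for key in match.group(1).split("."):
            node = node[key]  # a KeyError here means the key is missing from values
        return str(node)

    return VALUE_REF.sub(lookup, template)


values = {
    "replicaCount": 2,
    "image": {"repository": "example.invalid/demo", "tag": "latest"},
    "service": {"targetPort": 5000},
}
line = 'image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"'
print(render_values(line, values))
# image: "example.invalid/demo:latest"
```

To see what Helm itself produces, helm template renders the chart locally without installing it.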

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: {{ .Chart.Name }}
    spec:
      type: {{ .Values.service.type }}
      selector:
        app: {{ .Chart.Name }}
      ports:
        - name: http
          protocol: TCP
          port: {{ .Values.service.port }}
          targetPort: {{ .Values.service.targetPort }}

We are exposing the application on port 80 using a LoadBalancer.

Now we are done with the Helm configuration. To deploy our app using Helm, use the following command:

    helm upgrade --install flask-app ./Zero-to-Production --namespace default

To access your application, run:

    kubectl get svc flask-app

Then copy the value shown in the EXTERNAL-IP column (on AWS this is usually the load balancer's DNS name) and paste it into your browser. And that's it, you did it!
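A freshly created load balancer can take a few minutes before its address resolves and starts serving traffic, so the first browser attempt may fail. As a convenience, a small polling helper like the following sketch can tell you when the endpoint is ready. The function name and the default timings are my own assumptions, and the URL is whatever kubectl reported for your service.

```python
import time
import urllib.error
import urllib.request


def wait_for_http_ok(url: str, timeout_s: float = 300.0, interval_s: float = 10.0) -> bool:
    """Poll url until it answers with HTTP 200 or timeout_s elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=5) as response:
                if response.status == 200:
                    return True
        except (urllib.error.URLError, OSError):
            # DNS not resolvable yet, connection refused, or a non-2xx reply: retry.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For example, wait_for_http_ok("http://" + hostname + "/") returns True once the application responds, where hostname is the address copied from the kubectl output.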

Additional Information

You can use the Horizontal Pod Autoscaler (HPA) to scale the app when traffic increases, or simply use the kubectl scale command to scale the application manually. You can check the GitHub repo for an example of scaling the pods.

That's it for now! I'm happy you made it to the end. Thank you so much for your support. If you have any questions or opinions, you can connect with me on X.
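To give a feel for how the HPA decides on a replica count, the Kubernetes documentation describes its core rule as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The sketch below applies that rule and clamps the result to minimum and maximum bounds. It deliberately leaves out details of the real controller such as its tolerance band and stabilisation window, and the function name and default bounds are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Apply the core HPA scaling rule, then clamp to the configured bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# 2 pods averaging 90% CPU against a 50% target: ceil(2 * 90 / 50) = ceil(3.6) = 4
print(desired_replicas(2, 90, 50))  # 4
```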
