When Code Meets Reality: Lessons from Building in the Physical World

As web developers, we get a lot of things for free: controlled environments, predictable inputs, and tight feedback loops. Testing, monitoring, and CI/CD pipelines help us catch and recover from failure quickly. But once code steps off the screen and into the physical world, you give up some control.

Check out my lightning talk at DotJS about how Code in the Physical World can get a little messy.

The good news? You don’t need to throw out your existing skillset to ride the wave of bringing AI into the physical world. The foundations you already have in web development translate more than you might think.

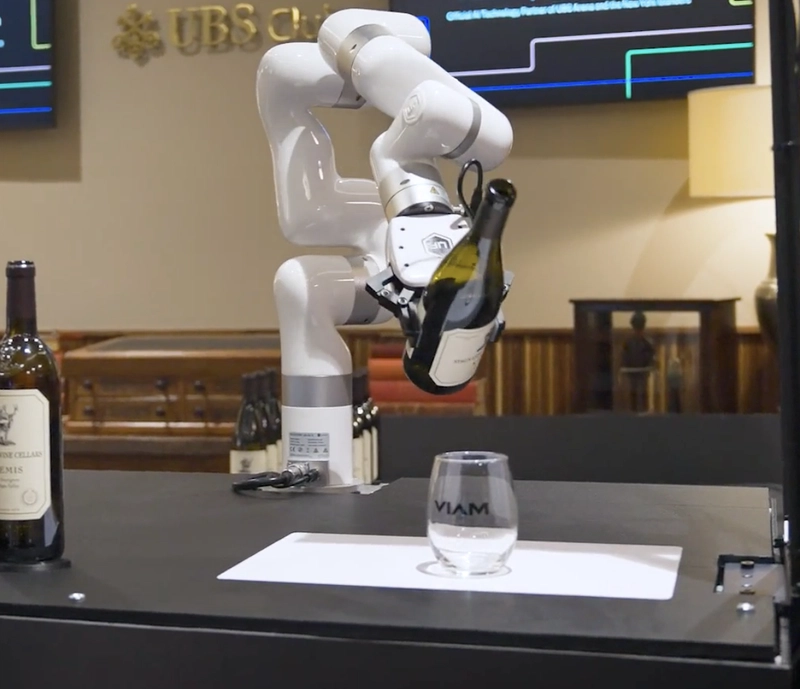

At Viam, we build open-source tools that connect software to hardware. That means robotics, IoT, and edge computing. It’s fun, powerful…and deeply humbling. Because no matter how clean your code is, reality doesn’t always play along.

Failure Looks Different in the Physical World

Physical environments pose different challenges than software development environments, and failures carry different consequences.

- Rivian pushed an OTA update that bricked 3% of their fleet, all because someone selected the wrong security certificate.

- A Nest thermostat update failed during a winter rollout because no one accounted for unstable networks.

- Facebook suffered a 6-hour global outage after a configuration change took down their internal tools and locked engineers out of data centers.

Unlike web apps, a failed update in physical systems can’t be fixed with a quick deploy. In the physical world, failure is a car that won’t start, a house without heat, or engineers with crowbars trying to get back into their own data centers.

And it’s not just human error:

- Cables melted under AI inference loads when NVIDIA sourced power cables that were not adequately rated for the intensive operations that AI pipelines demand.

- Sensors drift over time due to dust, aging, and other environmental factors, one reason why AirGradient recalibrates and smooths the detected values.

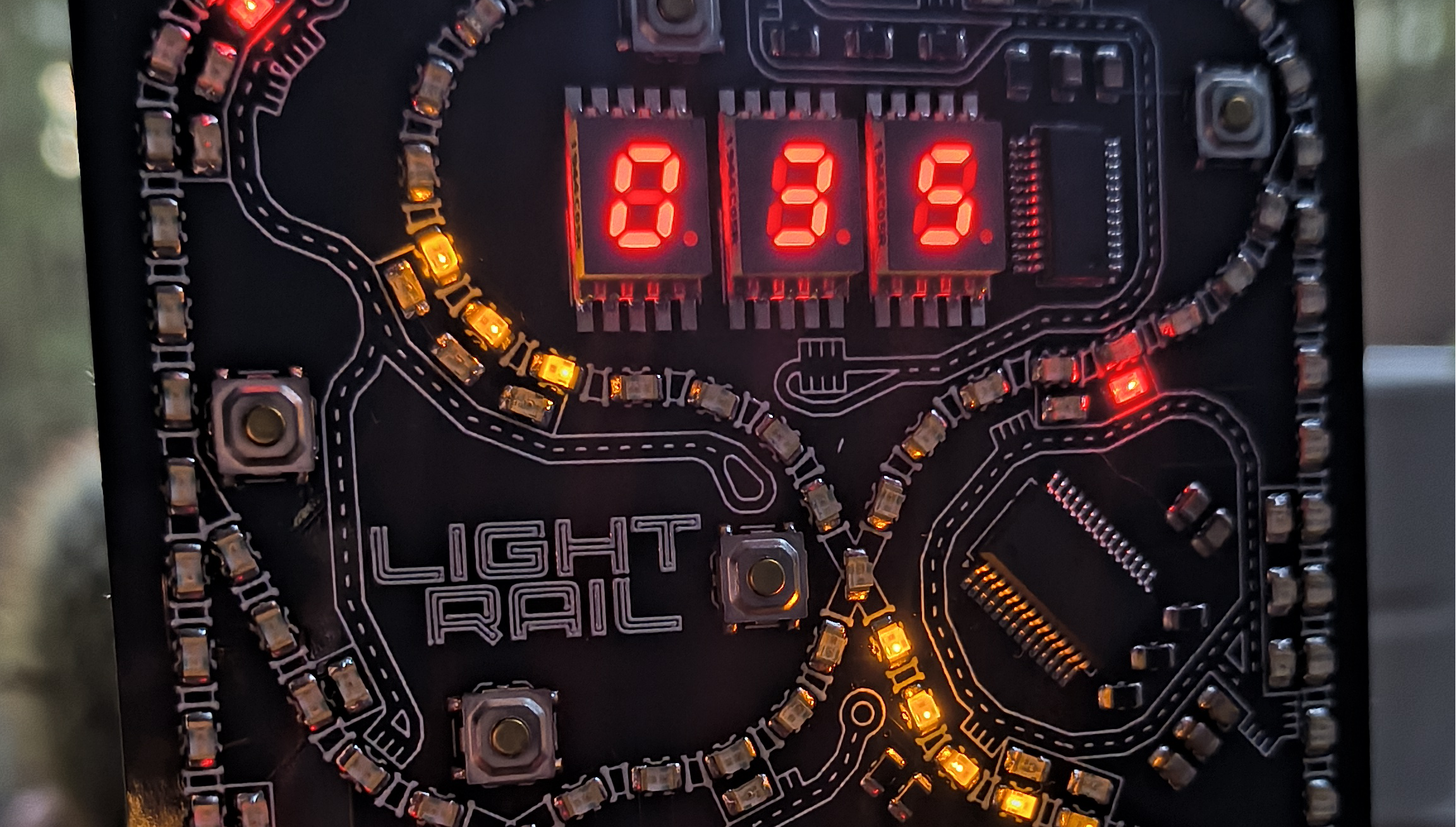

- Connectivity is often intermittent by design. MQTT is used on oil pipelines with unreliable links, and satellite tags on sea turtles store data and forward it once the turtle surfaces and the connection is re-established.

Buckley the sea turtle being tracked with a satellite tag
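To make the drift and connectivity points concrete, here is a minimal sketch of smoothing readings and buffering them while offline. It is an illustration under stated assumptions, not how AirGradient or any satellite tag actually works: `createSmoother`, `StoreAndForwardQueue`, and the `send` callback are hypothetical names, and an exponential moving average is just one simple smoothing technique.

```javascript
// Exponential moving average: one simple way to smooth noisy readings.
// alpha near 1 trusts new readings more; alpha near 0 smooths more heavily.
function createSmoother(alpha) {
  let smoothed = null;
  return function smooth(reading) {
    smoothed = smoothed === null ? reading : alpha * reading + (1 - alpha) * smoothed;
    return smoothed;
  };
}

// Store-and-forward: buffer readings while offline, deliver them in order later.
class StoreAndForwardQueue {
  constructor(send, maxSize = 1000) {
    this.send = send;       // hypothetical transport: returns true on success
    this.maxSize = maxSize; // cap memory use on a constrained device
    this.buffer = [];
  }

  record(reading) {
    if (this.buffer.length >= this.maxSize) {
      this.buffer.shift(); // buffer is full: drop the oldest reading
    }
    this.buffer.push(reading);
  }

  // Deliver buffered readings; stop at the first failure so order is preserved.
  flush() {
    let delivered = 0;
    while (this.buffer.length > 0) {
      if (!this.send(this.buffer[0])) break;
      this.buffer.shift();
      delivered += 1;
    }
    return delivered;
  }
}

// Usage with a fake link that starts offline.
let online = false;
const received = [];
const queue = new StoreAndForwardQueue((reading) => {
  if (!online) return false;
  received.push(reading);
  return true;
});

const smooth = createSmoother(0.5);
for (const raw of [10, 20, 10]) {
  queue.record(smooth(raw));
}

queue.flush();  // offline: nothing is delivered, readings stay buffered
online = true;
queue.flush();  // back online: buffered readings go out in order
```

Dropping the oldest reading when the buffer fills is a design choice. A device that cares more about history than freshness might drop the newest reading instead.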

So What Can Developers Do?

We can’t count on eliminating failure altogether, but we can plan for it. Just like we design UIs for low-bandwidth conditions or fallback states, physical systems need graceful failure modes too.

- Your phone doesn’t catch fire when it overheats. It throttles the CPU, dims the screen, and pauses charging. That’s a designed failure state.

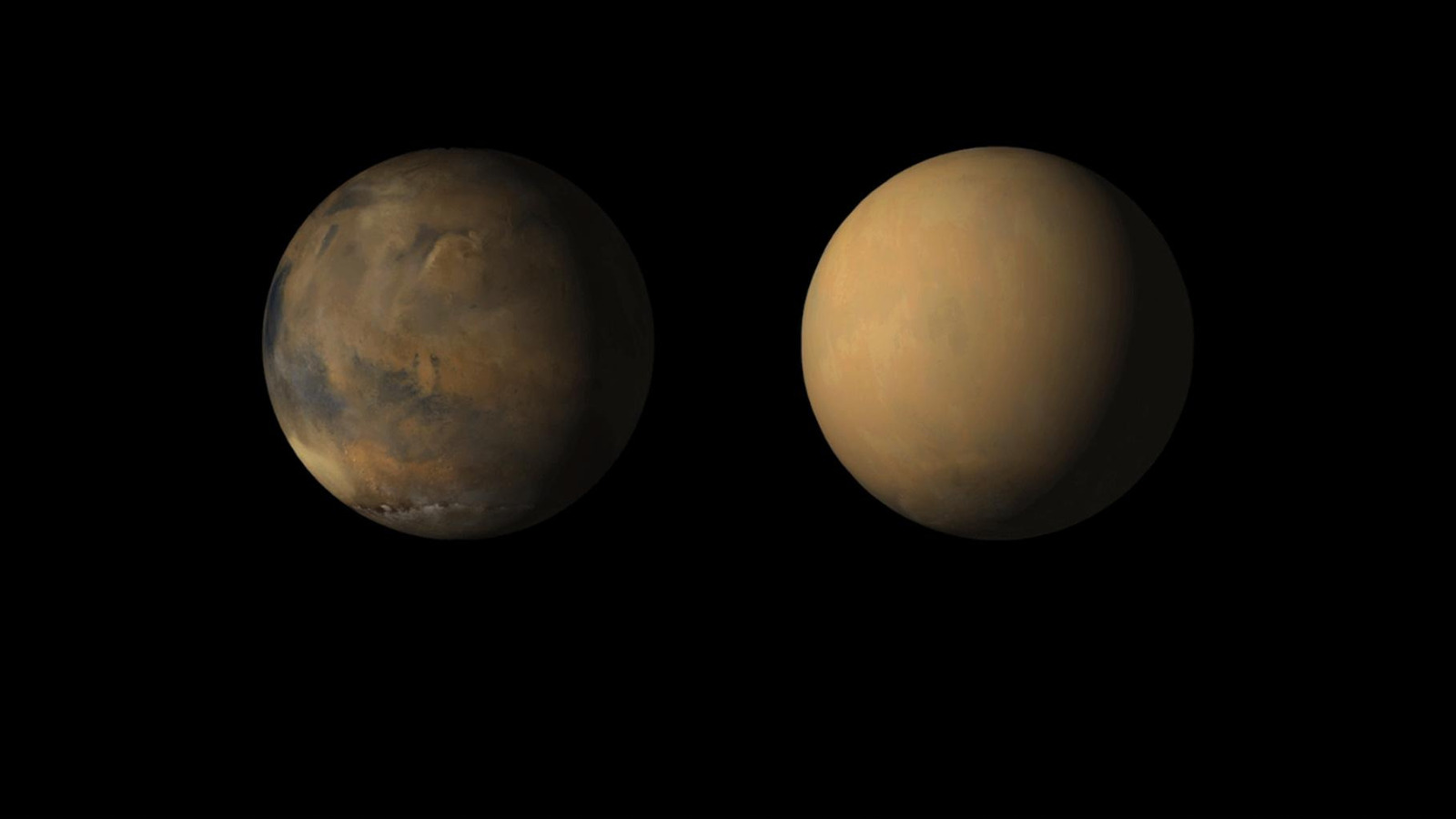

- NASA’s Voyager 1 is still running almost 50 years later without the ability to physically replace components. This is largely due to careful planning for failovers, redundancy, and managing tradeoffs.

- Starlink satellites are designed to be safely decommissioned and burn up cleanly at end-of-life leaving no debris in space.

When you build for the physical world, failure is expected. The goal isn’t to prevent it; it’s to control how it happens.
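A designed failure state can be as simple as a function that maps a reading to a safe mode. The sketch below is loosely modeled on the phone example. The thresholds, state names, and `thermalPolicy` function are all assumptions for illustration, not any vendor's real values.

```javascript
// Hypothetical thresholds in degrees Celsius; real limits depend on the hardware.
const LIMITS = { warm: 40, hot: 45, critical: 50 };

// Map a temperature reading to a designed failure state instead of failing outright.
function thermalPolicy(tempC) {
  if (!Number.isFinite(tempC)) {
    // A missing or garbage reading is treated as the worst case.
    return { state: "shutdown", cpuScale: 0, charging: false };
  }
  if (tempC >= LIMITS.critical) {
    return { state: "shutdown", cpuScale: 0, charging: false };
  }
  if (tempC >= LIMITS.hot) {
    return { state: "throttled", cpuScale: 0.5, charging: false };
  }
  if (tempC >= LIMITS.warm) {
    return { state: "warm", cpuScale: 0.8, charging: true };
  }
  return { state: "normal", cpuScale: 1, charging: true };
}

// Usage: each reading yields an explicit, testable mode.
const readings = [30, 42, 46, 51, NaN];
const states = readings.map((t) => thermalPolicy(t).state);
```

Treating an unreadable sensor as the worst case is the conservative choice here. A system where shutting down is itself dangerous would need a different default.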

Writing Code That Controls Hardware

You shouldn’t need to write firmware or low-level code to build real-world systems. That’s where projects like TC53 come in, standardizing JavaScript APIs for physical devices.

Even more so, you don’t need to write code that runs on the device. You can write code that controls the device remotely. If you’ve worked with REST APIs, event handlers, or browser-based tools, moving from purely digital interfaces to code that interacts with hardware is more familiar than you might expect.

-

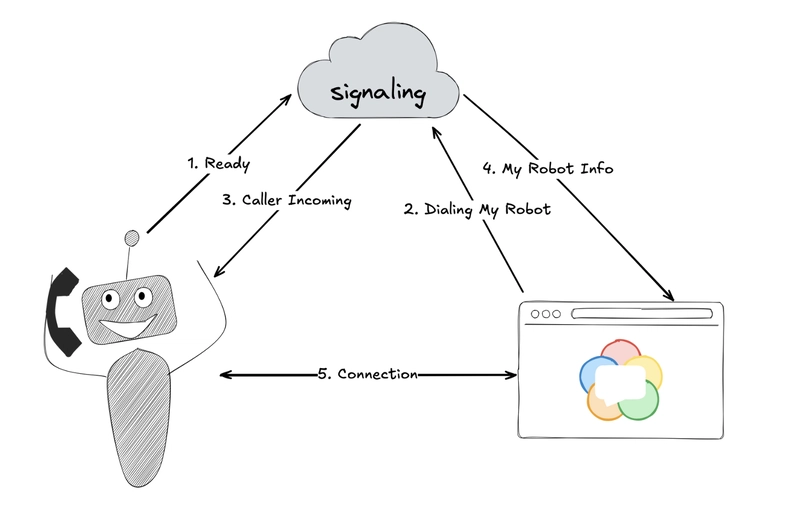

WebRTC: With browser-native APIs like WebRTC, you can control a physical device from your browser. No code needs to be deployed to the device itself.

- Johnny-Five: This is a framework that lets you run Node.js on your laptop to talk to an Arduino board over serial like a remote control.

- Viam: This is an open-source platform that abstracts away the finer details of integrating with drivers, networking, and security, so you can plug and play with modules to create your dream machines and then use the SDKs to build applications that control them.

The real lesson? Keep the logic where it's easy to test, debug, and update. In this way, we can leverage our existing skillset to control devices without needing to dive deep into low-level code.
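Here is a rough sketch of what keeping the logic easy to test can look like. The decision logic is a pure function, and the device sits behind a small interface that a fake can stand in for. Everything here is hypothetical (`nextCommand`, `FakeRover`, `controlStep`, and the distance thresholds), and none of it is the Viam, Johnny-Five, or WebRTC API.

```javascript
// Pure decision logic: no hardware access, so it runs on a laptop, in CI, or in a browser.
function nextCommand(distanceCm, stopDistanceCm = 30) {
  if (!Number.isFinite(distanceCm)) {
    return { action: "stop", reason: "bad sensor reading" };
  }
  if (distanceCm <= stopDistanceCm) {
    return { action: "stop", reason: "obstacle" };
  }
  // Slow down as the obstacle gets closer.
  return { action: "forward", speed: distanceCm > 100 ? 1.0 : 0.5 };
}

// Hypothetical device interface: anything with readDistance() and apply(command).
// A real version would wrap an SDK or a serial connection; this fake just records calls.
class FakeRover {
  constructor(distances) {
    this.distances = [...distances];
    this.log = [];
  }
  readDistance() {
    return this.distances.shift(); // undefined once the scripted readings run out
  }
  apply(command) {
    this.log.push(command.action);
  }
}

// One iteration of the control loop: read, decide, act.
function controlStep(device) {
  const command = nextCommand(device.readDistance());
  device.apply(command);
  return command;
}

// Usage: script three sensor readings, then one step with no reading at all.
const rover = new FakeRover([150, 60, 20]);
for (let i = 0; i < 4; i += 1) {
  controlStep(rover);
}
```

Because `nextCommand` never touches the device, the same function can be unit tested with plain numbers and then reused unchanged against real hardware.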

The Shift Toward Embodied AI

We’ve had the fundamental technologies for quite a while: computer vision, robot vacuums, Mars rovers that execute pre-planned logic. So what’s different now that lets AI grow beyond the world of software and into the physical world?

Three key things have made embodied AI possible:

- Low-cost, high-performance compute

- Tooling and ecosystem to support deploying models and managing fleets

- Enough training data to go from rule-based execution to on-the-fly learning

That unlocks a huge shift to enable systems that sense, act, adapt, and recover, just like people.

And we’re not just shipping to the cloud anymore. We’re shipping to physical devices:

- Phones

- Wearables

- Cars

- Drones

- Robots

The boundary between code and the real world is disappearing, and that’s why this moment is so exciting for web developers. You have a unique advantage here: the shift to client-side AI and edge compute means your skills are in demand in this new landscape.

Want to Try It Yourself?

Modern platforms like Viam make it possible to control real-world hardware using familiar tools like JavaScript, Python, and APIs.

If you're curious, check out the Viam docs. You don’t need to be an embedded systems engineer, just someone who understands how systems interact and wants to see their code move something in the real world.
