Visual Grounding from Docling!

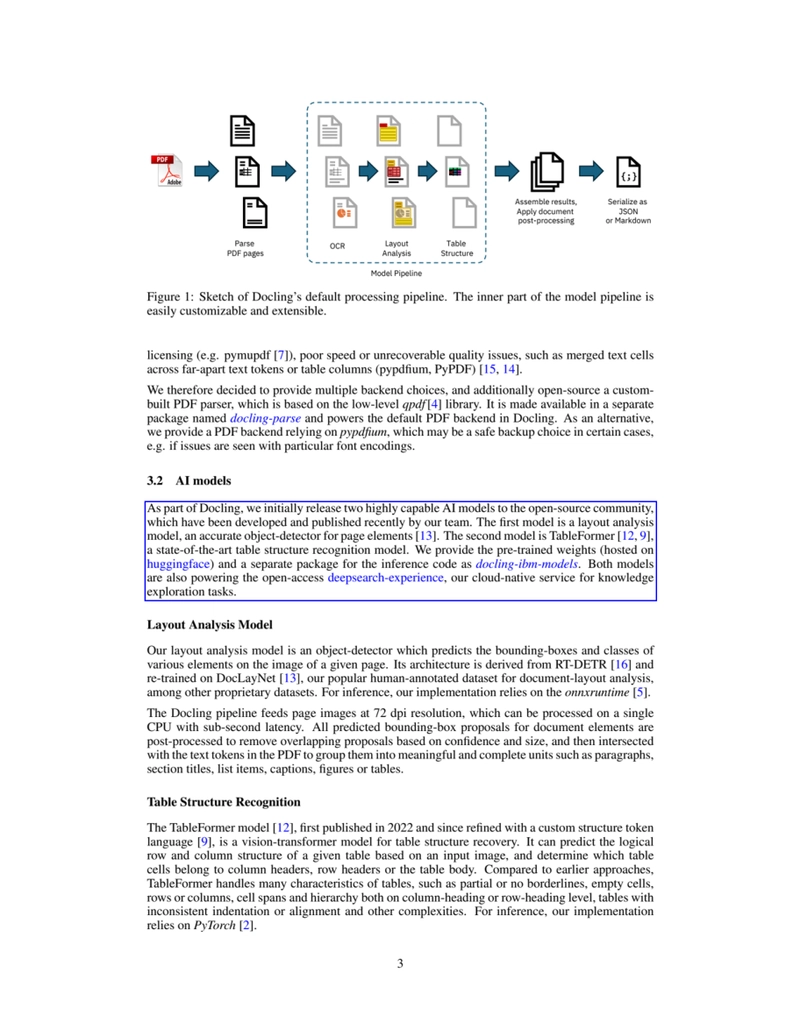

My experience testing for a demonstration “Visual Grounding” feature of Docling on Intel CPU. Introduction In discussions with our business partners, we always try to propose tools and solution to answer to specific business requirements. In a recent talk for a specific use-case I discovered “visual grounding” among other capacities of Docling set of toolings. “Visual Grounding”, in general terms is a task that aims at locating objects in an image based on a natural language query. This task, along with image captioning, visual question answering or content based image retrieval links image data with the text modality. This feature exists in Docling and there is a sample notebook provided as example. Implementation In order to test the visual grounding capacity use-case I tried to run the sample notebook, but as a Python app. Excerpt from the official documentation; This example showcases Docling’s visual grounding capabilities, which can be combined with any agentic AI / RAG framework. In this instance, we illustrate these capabilities leveraging the LangChain Docling integration, along with a Milvus vector store, as well as sentence-transformers embeddings. Also it is mentioned that…

My experience testing for a demonstration “Visual Grounding” feature of Docling on Intel CPU.

Introduction

In discussions with our business partners, we always try to propose tools and solution to answer to specific business requirements. In a recent talk for a specific use-case I discovered “visual grounding” among other capacities of Docling set of toolings.

“Visual Grounding”, in general terms is a task that aims at locating objects in an image based on a natural language query. This task, along with image captioning, visual question answering or content based image retrieval links image data with the text modality. This feature exists in Docling and there is a sample notebook provided as example.

Implementation

In order to test the visual grounding capacity use-case I tried to run the sample notebook, but as a Python app.

Excerpt from the official documentation;

This example showcases Docling’s visual grounding capabilities, which can be combined with any agentic AI / RAG framework.

In this instance, we illustrate these capabilities leveraging the LangChain Docling integration, along with a Milvus vector store, as well as sentence-transformers embeddings. Also it is mentioned that…

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![[DEALS] The All-in-One Microsoft Office Pro 2019 for Windows: Lifetime License + Windows 11 Pro Bundle (89% off) & Other Deals Up To 98% Off](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![Is this too much for a modular monolith system? [closed]](https://i.sstatic.net/pYL1nsfg.png)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What features do you get with Gemini Advanced? [April 2025]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2024/02/gemini-advanced-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Shares Official Trailer for 'Long Way Home' Starring Ewan McGregor and Charley Boorman [Video]](https://www.iclarified.com/images/news/97069/97069/97069-640.jpg)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)

![Apple Slips to Fifth in China's Smartphone Market with 9% Decline [Report]](https://www.iclarified.com/images/news/97065/97065/97065-640.jpg)