Understanding MCP and How AI Engineers Can Leverage It

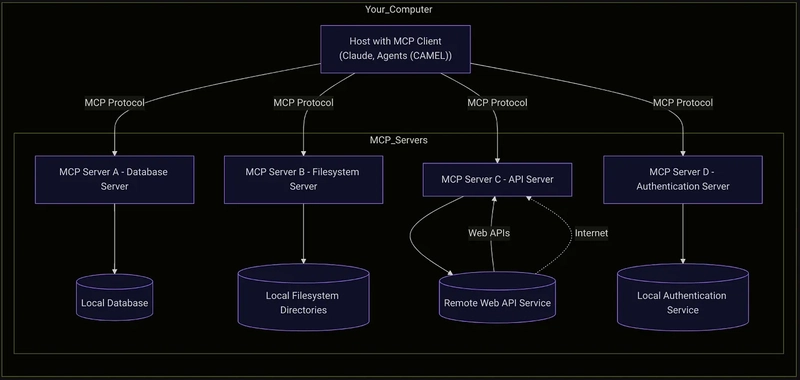

In the rapidly evolving landscape of AI development, Model Context Protocol (MCP) servers have become an important building block. They sit between AI models and the applications that use them, preserving contextual data so it can be reused across requests. For AI engineers working with large models such as Mixtral, MCP servers provide the backbone for deploying scalable, context-aware agents.

What Is MCP (Model Context Protocol)?

MCP (Model Context Protocol) is designed to support context-aware AI interactions by maintaining and managing session data. LLM APIs are typically stateless: each request is handled independently, so a model has no record of earlier turns unless they are sent again. MCP servers address this context loss by keeping conversational state on the server and supplying it with each request, which leads to more coherent responses.
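The statelessness described above can be illustrated with a minimal sketch: the server keeps each session's turns and resends them with every request, so a model that remembers nothing appears to remember the conversation. It assumes an in-memory dict and the common `role`/`content` chat-message format; `SessionStore`, `build_messages`, and `record_turn` are hypothetical names, not part of any MCP library.

```python
class SessionStore:
    """Hypothetical in-memory session store (illustration only, not an MCP API)."""

    def __init__(self) -> None:
        # session_id -> list of {"role": ..., "content": ...} messages
        self._history: dict[str, list[dict[str, str]]] = {}

    def build_messages(self, session_id: str, user_input: str) -> list[dict[str, str]]:
        # Earlier turns plus the new user message: a stateless model must receive
        # this full list on every call to "remember" the session.
        past = self._history.get(session_id, [])
        return [*past, {"role": "user", "content": user_input}]

    def record_turn(self, session_id: str, user_input: str, reply: str) -> None:
        # Persist both sides of the exchange so the next request can include them
        turns = self._history.setdefault(session_id, [])
        turns.append({"role": "user", "content": user_input})
        turns.append({"role": "assistant", "content": reply})


store = SessionStore()
store.record_turn("alice", "My name is Alice.", "Nice to meet you, Alice.")
print(store.build_messages("alice", "What is my name?"))  # 3 messages: 2 earlier + new
print(store.build_messages("bob", "What is my name?"))    # new session: only the question
```

Sessions are isolated by key, so one user's history never leaks into another's prompt.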

Key Features of MCP Servers:

- Session Persistence: Stores past interactions for improved model continuity.

- Scalability: Handles multiple AI model instances efficiently.

- Dynamic Context Management: Adapts to evolving conversations.

- Multi-Agent Coordination: Supports interactions between multiple AI agents.

- Security & Access Control: Ensures safe AI model communication.
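Dynamic context management usually means deciding which parts of a growing conversation still fit in the model's context window. A minimal sliding-window sketch, assuming that character counts stand in for token counts (a real deployment would use the model's tokenizer); `trim_history` is a hypothetical helper:

```python
def trim_history(messages: list[dict[str, str]], max_chars: int) -> list[dict[str, str]]:
    """Hypothetical helper: keep a leading system message plus the newest turns
    whose combined content fits in max_chars (characters approximate tokens)."""
    # Always keep the system prompt if the conversation starts with one
    system = [m for m in messages[:1] if m["role"] == "system"]
    rest = messages[len(system):]
    budget = max_chars - sum(len(m["content"]) for m in system)

    kept: list[dict[str, str]] = []
    # Walk backwards so the most recent messages are kept first
    for msg in reversed(rest):
        size = len(msg["content"])
        if size > budget:
            break
        kept.append(msg)
        budget -= size
    # Restore chronological order after the backwards walk
    return system + kept[::-1]
```

The window drops whole messages rather than cutting one in half, so the model never sees a truncated turn.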

Best MCP Servers for AI Engineers

AI engineers can leverage various MCP servers to enhance their AI models' performance. Below are some of the top MCP implementations:

1. LangChain Server

- Open-source framework for contextual AI interactions.

- Provides tools for managing long conversations and memory.

- Works well with OpenAI models, Mixtral, and other LLMs.

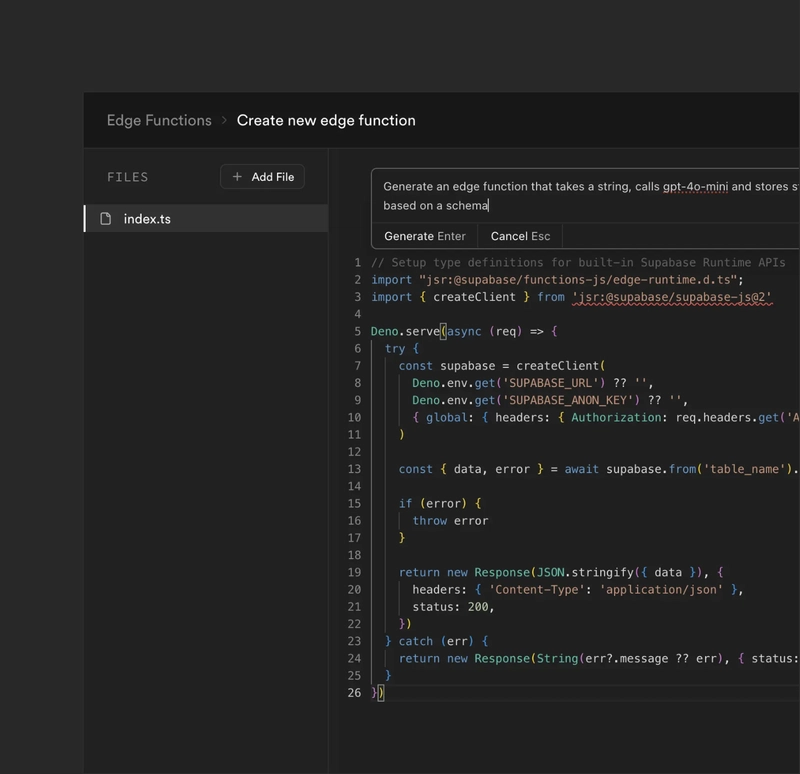

2. FastAPI with Redis (Custom MCP Implementation)

- Uses FastAPI to expose the model through a fast HTTP API.

- Uses Redis for session persistence.

- Ideal for real-time applications needing scalable AI context management.

3. Haystack MCP

- Built for RAG (Retrieval-Augmented Generation).

- Optimized for knowledge-driven AI models.

- Works well for applications like chatbots and enterprise search.
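In its simplest form, retrieval-augmented generation means fetching the documents most relevant to a query and placing them in the prompt. The sketch below uses naive word-overlap scoring as a stand-in for a real retriever such as BM25 or an embedding index; it does not use Haystack's API, and `retrieve` and `build_rag_prompt` are hypothetical names.

```python
import re


def _words(text: str) -> set[str]:
    # Lowercased word set: a crude stand-in for real tokenization
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Hypothetical retriever: return the k documents sharing the most words with the query."""
    q = _words(query)
    ranked = sorted(documents, key=lambda d: len(q & _words(d)), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Retrieved passages go ahead of the question so the model can ground its answer
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


docs = ["Refunds are issued within 14 days.", "Our office is in Berlin."]
print(build_rag_prompt("Where is the office?", docs, k=1))
```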

4. Ollama MCP

- Lightweight MCP server for local AI models.

- Focused on privacy-preserving AI deployments.

- Best suited for on-premises AI applications.
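For local deployments, Ollama serves an HTTP API on `localhost:11434` by default, and its `/api/chat` endpoint accepts the same `role`/`content` message list used above. A standard-library sketch of sending a session's history to it; it assumes the `mixtral` model has already been pulled locally, and `build_request` and `ollama_chat` are hypothetical helper names.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address


def build_request(messages: list[dict[str, str]], model: str = "mixtral") -> urllib.request.Request:
    # Hypothetical helper; stream=False asks Ollama for one JSON reply instead of chunks
    body = json.dumps({"model": model, "messages": messages, "stream": False}).encode()
    return urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def ollama_chat(messages: list[dict[str, str]], model: str = "mixtral") -> str:
    # Hypothetical helper; the reply text is in the response's "message" object
    with urllib.request.urlopen(build_request(messages, model)) as resp:
        return json.loads(resp.read())["message"]["content"]
```

Because the model runs on the same machine, conversation history never leaves the host, which is the privacy property the list above refers to.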

Use Cases of MCP in AI Applications

MCP servers enable a range of powerful AI-driven solutions. Here are some critical use cases:

1. AI-Powered Virtual Assistants

- Ensures conversation continuity.

- Reduces redundant queries and improves personalization.

2. Customer Support Chatbots

- Maintains context across sessions.

- Enhances chatbot accuracy and user satisfaction.

3. AI-Powered Recommendation Systems

- Uses historical interactions for better recommendations.

- Deployed in e-commerce, entertainment, and healthcare.

4. Financial AI Agents

- Enhances fraud detection using contextual transaction analysis.

- Improves financial forecasting through data-driven insights.

5. AI in Healthcare

- Provides better patient interactions by remembering medical history.

- Supports AI-driven diagnostics and decision-making.

Roadmap for Building an AI Agent with Python and Mixtral Large

Building an AI agent using Python and Mixtral Large requires a structured approach. Below is a roadmap:

Phase 1: Environment Setup

- Install Python and necessary dependencies:

```bash
pip install fastapi uvicorn redis openai
```

- Set up Redis for session persistence.
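Before starting the API, it helps to confirm that Redis is reachable. With redis-py, `r.ping()` does this; the dependency-free sketch below sends Redis's inline `PING` command over a socket and checks for the `+PONG` reply. `redis_is_up` is a hypothetical helper, and a server that requires authentication will answer with an error, so it returns `False` in that case.

```python
import socket


def redis_is_up(host: str = "localhost", port: int = 6379, timeout: float = 1.0) -> bool:
    """Hypothetical check: True if a Redis server at host:port answers PING with +PONG."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            # Redis accepts "inline" commands: a plain text line ending in CRLF
            sock.sendall(b"PING\r\n")
            return sock.recv(64).startswith(b"+PONG")
    except OSError:
        # Connection refused, timeout, or name-resolution failure: treat as "not up"
        return False
```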

Phase 2: Build the AI Agent

- Create an API backend using FastAPI:

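```python
from fastapi import FastAPI
import redis

app = FastAPI()

# Session store; decode_responses=True makes Redis return str instead of bytes
r = redis.Redis(host="localhost", port=6379, decode_responses=True)


@app.get("/chat")
def chat(session_id: str, user_input: str):
    # Transcript of earlier turns for this session ("" for a new session)
    context = r.get(session_id) or ""
    # generate_response is defined in the Mixtral step below (same app.py module)
    response = generate_response(context, user_input)
    # Append this turn instead of overwriting, so the full history is kept
    r.append(session_id, f"User: {user_input}\nAI: {response}\n")
    return {"response": response}
```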

- Integrate Mixtral Large for LLM responses:

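```python
from openai import OpenAI

# Assumption: Mixtral is served through an OpenAI-compatible endpoint.
# Both values are placeholders; without base_url the client calls OpenAI's own API.
client = OpenAI(api_key="YOUR_API_KEY", base_url="https://YOUR_PROVIDER_ENDPOINT/v1")


def generate_response(context: str, user_input: str) -> str:
    response = client.chat.completions.create(
        model="mixtral-large",  # placeholder: use the model ID your provider lists
        messages=[
            # Earlier turns go in the system message; the new input is the user turn
            {"role": "system", "content": f"Conversation so far:\n{context}"},
            {"role": "user", "content": user_input},
        ],
    )
    # openai>=1.0 returns objects, so use .content rather than ['content']
    return response.choices[0].message.content
```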

Phase 3: Deploy the MCP Server

- Run the FastAPI MCP server:

```bash
uvicorn app:app --host 0.0.0.0 --port 8000
```

- Set up a Redis-backed session manager to maintain context.
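One way to structure such a session manager is a minimal sketch that stores each session as a Redis list of JSON-encoded messages and refreshes an expiry on every write, so idle sessions are cleaned up automatically. `RedisSessionManager`, the `session:` key prefix, and the one-hour default TTL are assumptions for illustration; `client` is expected to be a redis-py client, which provides the `rpush`, `lrange`, `expire`, and `delete` methods used here.

```python
import json


class RedisSessionManager:
    """Hypothetical session manager: one Redis list per session, one JSON message per entry."""

    def __init__(self, client, ttl_seconds: int = 3600, prefix: str = "session:") -> None:
        self.client = client  # e.g. redis.Redis(host="localhost", port=6379)
        self.ttl = ttl_seconds
        self.prefix = prefix  # namespaces keys so sessions don't collide with other data

    def _key(self, session_id: str) -> str:
        return f"{self.prefix}{session_id}"

    def append(self, session_id: str, role: str, content: str) -> None:
        key = self._key(session_id)
        self.client.rpush(key, json.dumps({"role": role, "content": content}))
        # Refresh the expiry on every write; Redis deletes the key once it lapses
        self.client.expire(key, self.ttl)

    def history(self, session_id: str) -> list[dict[str, str]]:
        # LRANGE 0 -1 returns the whole list, oldest entry first
        return [json.loads(item) for item in self.client.lrange(self._key(session_id), 0, -1)]

    def clear(self, session_id: str) -> None:
        self.client.delete(self._key(session_id))
```

Storing structured messages rather than one concatenated string makes it easy to pass the history straight into a chat-completions `messages` list.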

Phase 4: Integrate and Scale

- Deploy the solution using Docker and Kubernetes.

- Implement WebSockets for real-time interaction.

- Optimize Mixtral model calls for cost efficiency.
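One option for reducing model spend is to reuse replies for byte-identical requests, for example repeated FAQ-style questions in a support bot. A minimal sketch, assuming `call_model` is any function you supply that maps `(model, messages)` to reply text (such as a thin wrapper around `client.chat.completions.create`); `CachedLLM` is a hypothetical name.

```python
import hashlib
import json
from typing import Callable

Messages = list[dict[str, str]]


class CachedLLM:
    """Hypothetical wrapper that returns a cached reply for identical requests."""

    def __init__(self, call_model: Callable[[str, Messages], str]) -> None:
        self.call_model = call_model
        self.cache: dict[str, str] = {}
        self.misses = 0  # number of calls that actually reached the model

    def complete(self, model: str, messages: Messages) -> str:
        # Canonical JSON gives a stable key for the same model + messages
        key = hashlib.sha256(json.dumps([model, messages], sort_keys=True).encode()).hexdigest()
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = self.call_model(model, messages)
        return self.cache[key]
```

This only pays off when identical requests recur, and with a sampling temperature above zero it changes behavior by always returning the first answer. The in-memory dict is also unbounded, so a production version would need a size limit or expiry.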

Final Thoughts

Model Context Protocol (MCP) servers are a practical foundation for modern context-aware AI applications. Whether you're building virtual assistants, chatbots, or recommendation systems, an MCP server retains context between requests, which makes responses more coherent and personalized. By combining Python and Mixtral Large, AI engineers can build scalable, stateful AI agents that deliver real-world impact.
