Transform Settlement Process using AWS Data pipeline

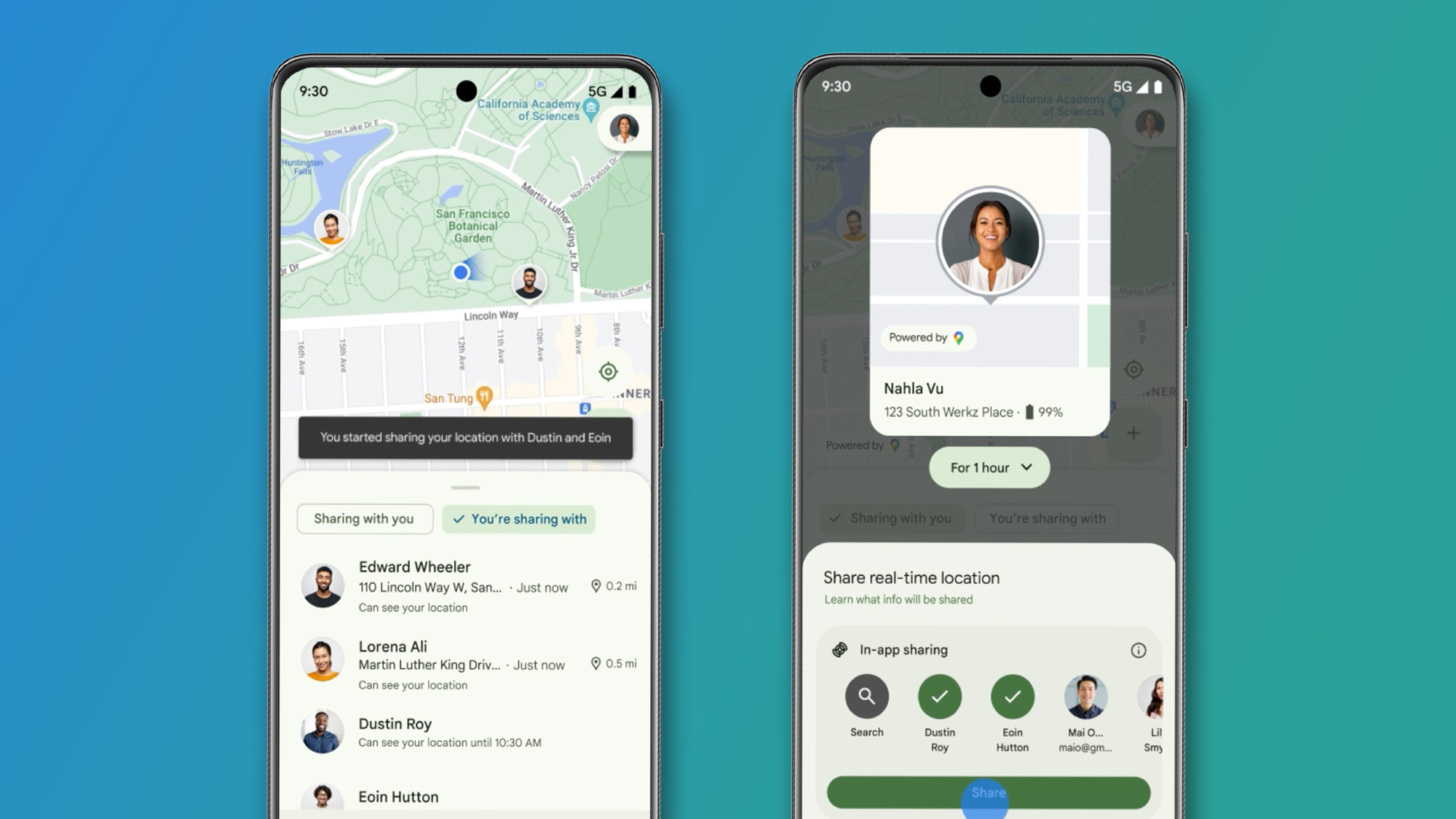

The task involves processing settlement files from various sources using AWS data pipelines. These files may come as zip files, Excel sheets, or database tables. The pipeline executes business logic and transforms inputs (often Excel) into outputs (also in Excel).

The pipeline receives multiple settlement files from different locations. We optimized this by storing the input files in AWS S3 and triggering an ETL job pipeline to process them.

Our inputs come from two kinds of sources: existing AWS tables and external files in Excel format. All of these inputs are ultimately converted to Parquet. This post outlines the process and shares the architecture of the AWS ETL job pipeline so that it can be replicated.
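The Excel-to-Parquet step could look roughly like the sketch below. It is not the pipeline's actual job code: it assumes pandas (with `openpyxl` and `pyarrow` installed), and the `curated/` prefix and both function names are hypothetical.

```python
from pathlib import PurePosixPath


def parquet_key_for(excel_key: str, target_prefix: str = "curated/") -> str:
    """Map an input object key to the key of its Parquet counterpart.

    The "curated/" prefix is a hypothetical layer name, not one from the post.
    """
    stem = PurePosixPath(excel_key).stem  # file name without its extension
    return f"{target_prefix}{stem}.parquet"


def excel_to_parquet(excel_path: str, parquet_path: str, sheet_name=0) -> int:
    """Read one Excel sheet and write it as Parquet; returns the row count."""
    # Imported lazily so the key helper above works without pandas installed.
    import pandas as pd  # needs openpyxl for .xlsx and pyarrow for Parquet

    frame = pd.read_excel(excel_path, sheet_name=sheet_name)
    # Excel headers can be numbers or dates; use plain strings as column names.
    frame.columns = [str(col) for col in frame.columns]
    frame.to_parquet(parquet_path, index=False)
    return len(frame)
```

For example, `parquet_key_for("raw/settlements/bank_a_2024.xlsx")` gives `curated/bank_a_2024.parquet`. In a Glue job the same conversion would more likely be done with Spark, but the idea is the same.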

*AWS Architecture:* An overview of the AWS architecture, including components such as S3, DynamoDB, EventBridge, Lambda functions, ETL jobs, and AWS Glue. It covers how files are processed through different layers of S3 and the role of Lambda functions in unzipping and converting files.
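As an illustration of the unzip step, here is a minimal sketch of a Lambda function that extracts an uploaded archive and writes its members back to S3. It assumes the function is invoked by an S3 notification event; the `unzipped/` prefix and the function names are hypothetical, not taken from the actual setup.

```python
import io
import zipfile
from urllib.parse import unquote_plus


def unzip_members(zip_bytes: bytes) -> dict[str, bytes]:
    """Return {member name: content} for every file inside a zip archive."""
    members = {}
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # skip directory entries, keep only files
            members[info.filename] = archive.read(info)
    return members


def handler(event, context):
    """Lambda entry point: unzip an uploaded archive into the next S3 layer."""
    import boto3  # available in the Lambda Python runtime

    s3 = boto3.client("s3")
    # Assumes an S3 notification event; object keys arrive URL-encoded.
    record = event["Records"][0]["s3"]
    bucket = record["bucket"]["name"]
    key = unquote_plus(record["object"]["key"])

    body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
    for name, content in unzip_members(body).items():
        # "unzipped/" is a hypothetical prefix for the next layer.
        s3.put_object(Bucket=bucket, Key=f"unzipped/{name}", Body=content)
```

This version reads the whole archive into memory, which is fine for small settlement files but would need streaming or a larger Lambda memory setting for big archives.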

*Event Rules and Domains:* The setup of event rules in AWS, which trigger ETL processes when files are dropped in specific S3 folders. This part also covers the concepts of domains and tenants in AWS and how they are used to create architectural diagrams.
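One way such an event rule might be wired up is sketched below, assuming the bucket has EventBridge notifications enabled and that the rule's target is a Lambda function that starts a Glue job. The rule name, target id, job arguments, and function names are all hypothetical.

```python
import json


def build_event_pattern(bucket: str, prefix: str) -> dict:
    """EventBridge pattern matching S3 "Object Created" events under a prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": prefix}]},
        },
    }


def create_rule(rule_name: str, bucket: str, prefix: str, target_arn: str) -> None:
    """Register the rule and point it at a target (for example a Lambda ARN)."""
    import boto3  # imported lazily; only needed when talking to AWS

    events = boto3.client("events")
    events.put_rule(
        Name=rule_name,
        EventPattern=json.dumps(build_event_pattern(bucket, prefix)),
        State="ENABLED",
    )
    # "start-etl" is a hypothetical target id.
    events.put_targets(Rule=rule_name, Targets=[{"Id": "start-etl", "Arn": target_arn}])


def start_etl(event: dict, glue_client, job_name: str) -> str:
    """Start a Glue job for the object named in the event; returns the run id."""
    detail = event["detail"]
    response = glue_client.start_job_run(
        JobName=job_name,
        # Hypothetical job arguments; the Glue script decides what it reads.
        Arguments={
            "--input_bucket": detail["bucket"]["name"],
            "--input_key": detail["object"]["key"],
        },
    )
    return response["JobRunId"]
```

Inside the target Lambda, `start_etl(event, boto3.client("glue"), "settlement-etl")` would start the job for the file that was just dropped. Passing the Glue client in as a parameter keeps the function easy to test with a stub.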
