ToImage in PyTorch

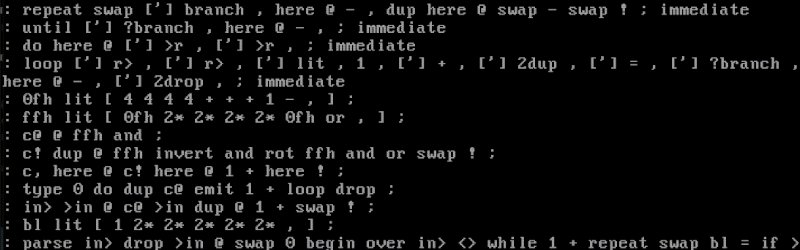


*My post explains OxfordIIITPet().

ToImage() can convert a PIL (Pillow library) Image, a tensor or an ndarray to an `Image` (torchvision's `tv_tensors.Image`) as shown below:

*Memos:

- The 1st argument is `img` (Required-Type: `PIL Image` or `tensor`/`ndarray` (`int`/`float`/`complex`/`bool`)):
  *Memos:
  - A tensor must be 2D or more.
  - An ndarray must be 0D to 3D.
  - Don't use `img=`.
- `v2` is recommended according to "V1 or V2? Which one should I use?".

```python
import numpy as np
import torch
from torchvision.datasets import OxfordIIITPet
from torchvision.transforms.v2 import ToImage

ToImage()
# ToImage()

origin_data = OxfordIIITPet( # It's PIL Image.
    root="data",
    transform=None
)

Image_data = OxfordIIITPet(
    root="data",
    transform=ToImage()
)

Image_data
# Dataset OxfordIIITPet
#     Number of datapoints: 3680
#     Root location: data
#     StandardTransform
# Transform: ToImage()

Image_data[0]
# (Image([[[37, 35, 36, ..., 247, 249, 249],
#          [35, 35, 37, ..., 246, 248, 249],
#          ...,
#          [28, 28, 27, ..., 59, 65, 76]],
#         [[20, 18, 19, ..., 248, 248, 248],
#          [18, 18, 20, ..., 247, 247, 248],
#          ...,
#          [27, 27, 27, ..., 94, 106, 117]],
#         [[12, 10, 11, ..., 253, 253, 253],
#          [10, 10, 12, ..., 251, 252, 253],
#          ...,
#          [35, 35, 35, ..., 214, 232, 223]]], dtype=torch.uint8,), 0)

Image_data[0][0].size()
# torch.Size([3, 500, 394])

Image_data[0][0]
# Image([[[37, 35, 36, ..., 247, 249, 249],
#         [35, 35, 37, ..., 246, 248, 249],
#         ...,
#         [28, 28, 27, ..., 59, 65, 76]],
#        [[20, 18, 19, ..., 248, 248, 248],
#         [18, 18, 20, ..., 247, 247, 248],
#         ...,
#         [27, 27, 27, ..., 94, 106, 117]],
#        [[12, 10, 11, ..., 253, 253, 253],
#         [10, 10, 12, ..., 251, 252, 253],
#         ...,
#         [35, 35, 35, ..., 214, 232, 223]]], dtype=torch.uint8,)

Image_data[0][1]
# 0

import matplotlib.pyplot as plt

# An Image is (C, H, W), so passing it to imshow() as-is raises an error.
plt.imshow(X=Image_data[0][0])
# TypeError: Invalid shape (3, 500, 394) for image data

ti = ToImage()

ti(origin_data[0][0])
# Image([[[37, 35, 36, ..., 247, 249, 249],
#         [35, 35, 37, ..., 246, 248, 249],
#         ...,
#         [28, 28, 27, ..., 59, 65, 76]],
#        [[20, 18, 19, ..., 248, 248, 248],
#         [18, 18, 20, ..., 247, 247, 248],
#         ...,
#         [27, 27, 27, ..., 94, 106, 117]],
#        [[12, 10, 11, ..., 253, 253, 253],
#         [10, 10, 12, ..., 251, 252, 253],
#         ...,
#         [35, 35, 35, ..., 214, 232, 223]]], dtype=torch.uint8,)

plt.imshow(ti(origin_data[0][0]))
# TypeError: Invalid shape (3, 500, 394) for image data

ti(torch.tensor([[0, 1, 2]]))
# Image([[[0, 1, 2]]],)

ti(np.array([[0, 1, 2]]))
# Image([[[0, 1, 2]]], dtype=torch.int32,)
```
